Finetuning of GPT 3 Model For Text Classification | Basic to Advance | Generative AI Series

Summary

TLDR: In this tutorial, the speaker demonstrates how to fine-tune OpenAI's GPT-3 model for a sports classification task, distinguishing between baseball and hockey. The process includes setting up the environment with OpenAI’s API, preparing the dataset in the required format, starting the fine-tuning job from Google Colab, and running inference to classify new data. The tutorial covers dataset preparation in the `.jsonl` (JSON Lines) format and the training steps with OpenAI’s fine-tuning tools. It takes a hands-on approach to customizing GPT-3 for a specific task, which makes it accessible to users who want to apply the model to text classification.

Takeaways

- 😀 **Model Fine-Tuning Overview**: The script demonstrates how to fine-tune OpenAI's GPT-3 model using a custom dataset to classify sports like baseball and hockey.

- 😀 **Environment Setup**: The process requires installing OpenAI’s Python library and setting up Google Colab for training, removing the need for local system resources.

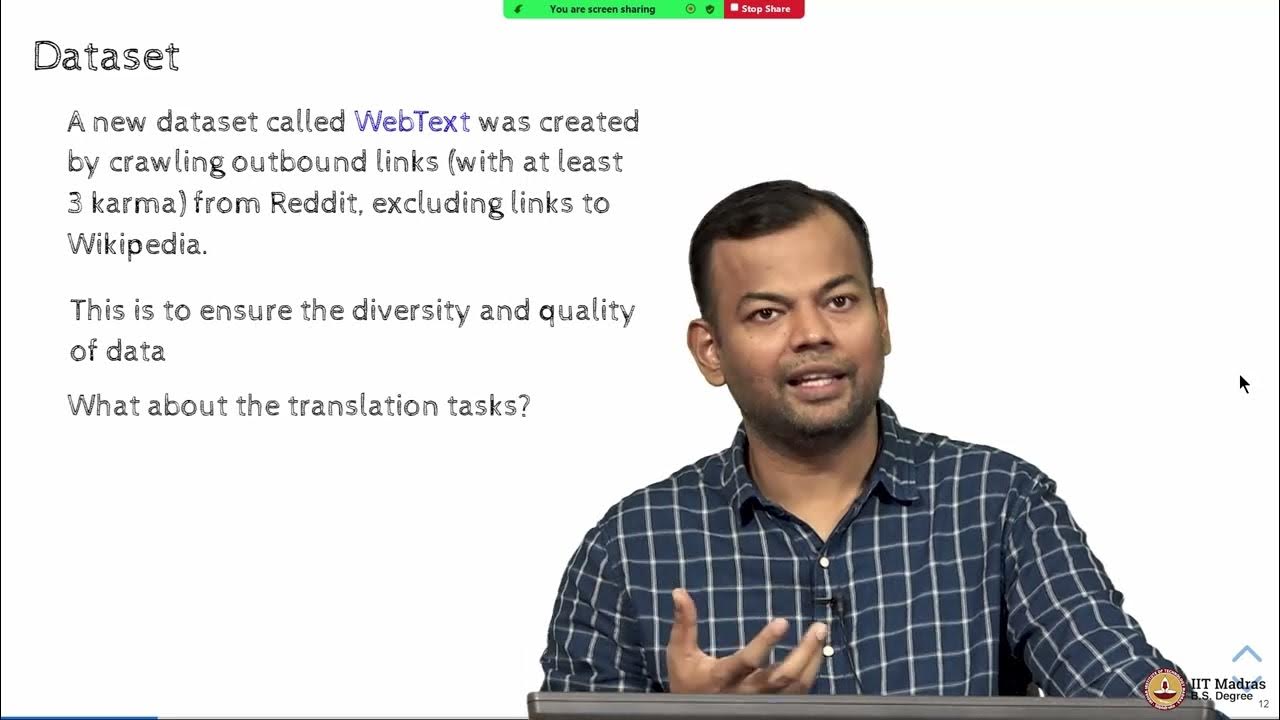

- 😀 **Dataset Preparation**: A sample dataset (20 Newsgroups) is used, containing text data for baseball and hockey. The dataset is formatted into 'prompt' and 'completion' columns for model fine-tuning.

- 😀 **Data Formatting for Fine-Tuning**: The dataset must be converted into the `.jsonl` format, where each record contains a prompt (input) and completion (label).

- 😀 **OpenAI Fine-Tuning Process**: After preparing the data, the fine-tuning process is initiated using OpenAI’s API, specifying the training and validation datasets.

- 😀 **Handling Model Naming Conflicts**: The script highlights the need to use unique model names when retraining to avoid conflicts, ensuring the fine-tuned model is stored with a new name.

- 😀 **API Key Management**: To use the OpenAI API for training, you need an API key, which you supply during the setup process.

- 😀 **Model Evaluation**: Once the fine-tuning is complete, you can evaluate the model's performance by running inference tasks to classify new examples (e.g., baseball or hockey).

- 😀 **Training Time Considerations**: Training GPT-3 models takes significant time, so using pre-existing models or tools in Google Colab is advised to save time and resources.

- 😀 **Error Handling**: Common errors such as missing files or incorrect data formats are addressed, with troubleshooting advice like ensuring correct file paths and data preparation.

- 😀 **Exploration of OpenAI's Documentation**: The script encourages users to explore OpenAI's documentation to understand various use cases for fine-tuning beyond classification, like summarization and other NLP tasks.
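The error-handling takeaway above (incorrect data formats) can be illustrated with a small pre-flight check. This is a minimal sketch, not the tutorial's own code: the function name `find_problems` is hypothetical, and the only detail taken from the summary is that each record needs a `prompt` and a `completion`.

```python
import json

# Field names taken from the summary; everything else here is illustrative.
REQUIRED_KEYS = ("prompt", "completion")


def find_problems(lines):
    """Return a list of human-readable problems found in JSONL lines."""
    problems = []
    for number, line in enumerate(lines, start=1):
        if not line.strip():
            problems.append(f"line {number}: empty line")
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            problems.append(f"line {number}: not valid JSON")
            continue
        if not isinstance(record, dict):
            problems.append(f"line {number}: expected a JSON object")
            continue
        for key in REQUIRED_KEYS:
            # A key counts as a problem if it is absent, not a string, or empty.
            if not isinstance(record.get(key), str) or not record[key]:
                problems.append(f"line {number}: missing or empty '{key}'")
    return problems
```

Running a check like this before uploading catches malformed records locally, which is cheaper than discovering them after a fine-tuning job has been submitted.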

Q & A

What is the goal of the tutorial in the video?

-The goal is to demonstrate how to fine-tune a GPT-3 model using custom data for a classification task, specifically distinguishing between two sports: baseball and hockey.

Why is GPT-3 training done via an API instead of on a local machine?

-GPT-3 has billions of parameters (175 billion in its largest version), making it impractical to train on a local machine. The OpenAI API handles all training and computation on OpenAI's servers, eliminating the need for local configuration.

What dataset is used for this fine-tuning example?

-The dataset used is the 20 Newsgroups dataset, from which the sports-related posts in the 'baseball' and 'hockey' topics are taken.

What are the two main columns created during the data preparation process?

-The two main columns are 'prompt' and 'completion.' The 'prompt' is the input data, and the 'completion' is the label that corresponds to the correct classification (e.g., baseball or hockey).
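As a rough illustration of the two columns, the sketch below turns (text, label) pairs into prompt/completion records. The example sentences are made up for illustration, and the prompt separator and the leading space on the completion are assumptions borrowed from common fine-tuning conventions, not details stated in the video summary.

```python
# Assumed end-of-prompt marker; the summary does not say which one is used.
PROMPT_SEPARATOR = "\n\n###\n\n"


def to_record(text, label):
    """Build one training record from a raw text and its class label."""
    return {
        "prompt": text.strip() + PROMPT_SEPARATOR,
        # Leading space is an assumed convention for completion-style models.
        "completion": " " + label.strip(),
    }


# Illustrative examples only, not taken from the actual dataset.
examples = [
    ("The pitcher threw a no-hitter last night.", "baseball"),
    ("The goalie stopped thirty shots on the ice.", "hockey"),
]
records = [to_record(text, label) for text, label in examples]
```

Whatever separator is chosen for training has to be appended to prompts at inference time as well, so keeping it in a single constant avoids mismatches.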

What is the file format used for training the model?

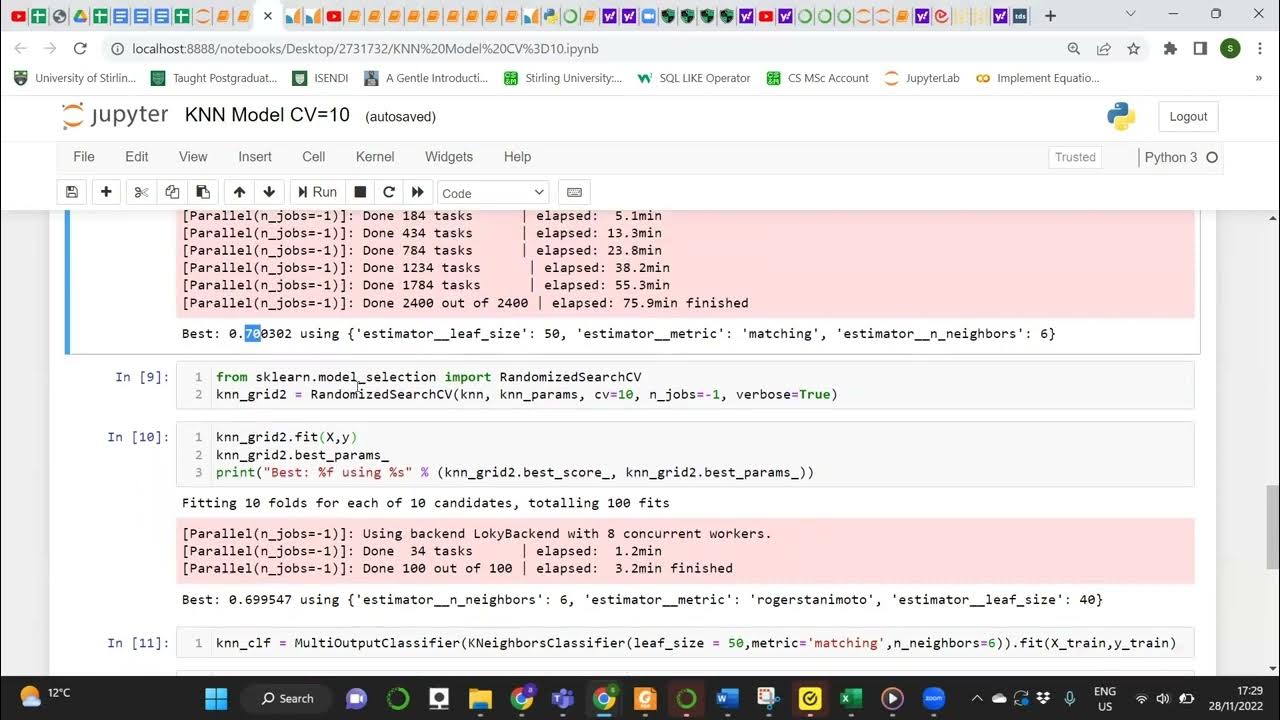

-The data must be converted into the JSON Lines format (`.jsonl`), in which each line is one JSON object holding a prompt and completion pair for GPT-3 fine-tuning.
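To make the file format concrete, here is a minimal sketch that writes records as JSON Lines and reads them back using only the standard library. The helper names, the sample records, and the file name `sport.jsonl` are hypothetical.

```python
import json
import os
import tempfile


def write_jsonl(records, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as handle:
        for record in records:
            handle.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Read a JSON Lines file back into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]


# Placeholder records for illustration.
sample = [
    {"prompt": "Example baseball text", "completion": "baseball"},
    {"prompt": "Example hockey text", "completion": "hockey"},
]
path = os.path.join(tempfile.mkdtemp(), "sport.jsonl")  # hypothetical file name
write_jsonl(sample, path)
round_trip = read_jsonl(path)
```

Unlike a regular JSON file, the result has no enclosing array and no commas between records, so it can be processed one line at a time.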

What is the role of the OpenAI data preparation tool?

-The OpenAI data preparation tool processes the `.jsonl` file and splits it into training and validation sets. It also checks the dataset for issues and corrects them.
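The split into training and validation sets can be approximated by hand. The sketch below is a stand-in for illustration, not the OpenAI tool itself; the function name, the 20% validation fraction, and the fixed seed are all assumptions.

```python
import random


def split_records(records, valid_fraction=0.2, seed=0):
    """Shuffle deterministically and return (train, valid) lists."""
    shuffled = list(records)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    # Keep at least one validation record, even for very small datasets.
    n_valid = max(1, int(len(shuffled) * valid_fraction))
    return shuffled[n_valid:], shuffled[:n_valid]
```

A fixed seed makes the split reproducible, so a retrained model is evaluated against the same validation records as the previous run.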

How can you start the training process for fine-tuning?

-You can start the training process by running a command with the OpenAI API to create a fine-tuned model. This command requires the data file paths and a unique model ID, and it will initiate the training based on the provided data.

What do you do if an error occurs when using the same model name during fine-tuning?

-If an error occurs because the model name already exists, you should rename the model ID to a unique name before proceeding with the training.
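One way to avoid the name clash is to generate the name rather than type it. This is a minimal sketch with a hypothetical helper; how the name is then passed to the fine-tuning command is not shown, because the summary does not specify it.

```python
from datetime import datetime, timezone


def unique_model_name(base, existing_names, now=None):
    """Append a UTC timestamp, plus a counter if needed, to avoid collisions."""
    now = now or datetime.now(timezone.utc)
    candidate = f"{base}-{now.strftime('%Y%m%d-%H%M%S')}"
    name = candidate
    counter = 1
    while name in existing_names:
        counter += 1
        name = f"{candidate}-{counter}"
    return name
```

Here `existing_names` is assumed to be a collection of names already in use, for example one copied from the model list on the OpenAI platform.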

How can you check the progress of the fine-tuning process?

-You can monitor the progress through the OpenAI platform by refreshing the page. Once the training is complete, the new model will appear under the 'Fine Tunes' section of the model list.

How is the model used for inference after fine-tuning?

-After fine-tuning, the model can be used for inference by loading it through the OpenAI API with the model's unique ID. You can then input data and get classification results (e.g., baseball or hockey) from the model.
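The API call itself depends on the client library version and is left out here. The sketch below covers only the step after it, turning the raw completion text into a class label. The function name is hypothetical, and the assumption is that the model answers with the label, possibly preceded by whitespace.

```python
# The two classes used in the tutorial.
LABELS = ("baseball", "hockey")


def parse_label(completion_text):
    """Map raw completion text to a known label, or None if unrecognized."""
    cleaned = completion_text.strip().lower()
    for label in LABELS:
        if cleaned.startswith(label):
            return label
    return None
```

Returning `None` for anything unexpected makes off-label outputs visible instead of silently counting them as one of the two classes.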
