CS 285: Lecture 18, Variational Inference, Part 3

Summary

TLDRThis video discusses amortized variational inference (AVI), a method used to efficiently approximate posterior distributions in large datasets. It introduces the reparameterization trick, a technique for reducing gradient variance in the optimization of variational lower bounds. By using a neural network to model the posterior and reparameterizing latent variables, the method improves gradient estimation, making the process more scalable and stable. AVI is particularly useful for continuous latent variables, providing a low-variance, efficient way to train models with complex inference networks.

Takeaways

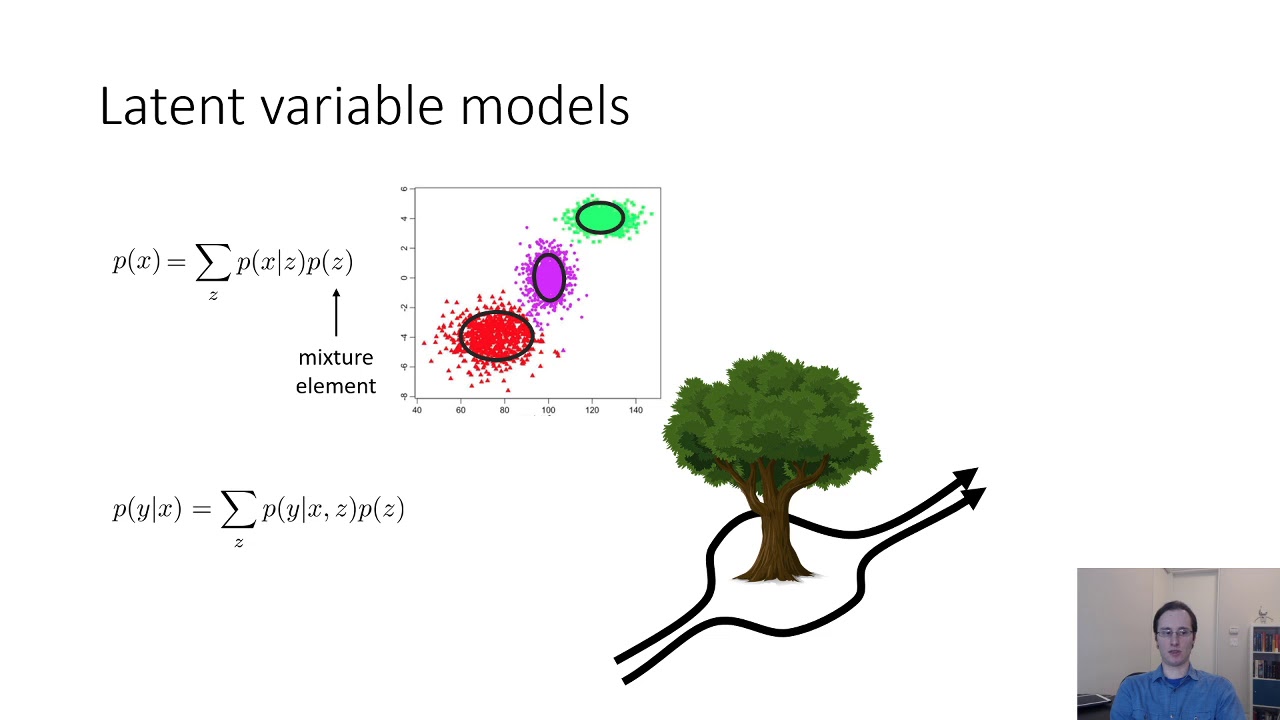

- 😀 Amortized variational inference (AVI) allows learning the posterior distribution q(z|x) even with large datasets by using a neural network to parameterize q.

- 😀 In traditional variational inference, for each data point, the posterior is approximated and the model parameters are updated based on the estimated gradient.

- 😀 In AVI, instead of storing individual Gaussians for each data point, a neural network model is used to predict the mean and variance of the posterior distribution for any given data point.

- 😀 Two networks are involved in AVI: a generative model (p_theta) and an inference model (q_phi), where the inference model approximates the posterior for a given data point.

- 😀 The variational lower bound in AVI is modified to involve two distributions: p_theta(x|z) and q_phi(z|x), which allows for learning both the generative model and the inference network.

- 😀 To calculate the gradient with respect to the inference network parameters (phi), policy gradient methods can be used, similar to reinforcement learning, but the estimator tends to have high variance.

- 😀 The reparameterization trick helps reduce the variance of the gradient estimator by transforming the random variable z into a deterministic function of a new random variable epsilon, independent of phi.

- 😀 The reparameterization trick allows gradients to be computed efficiently, as it avoids high-variance gradient estimators typical of policy gradients and can be implemented using automatic differentiation frameworks.

- 😀 By expressing z as a deterministic function of epsilon (a standard Gaussian), gradients with respect to the inference network parameters (phi) can be computed with lower variance and higher efficiency.

- 😀 In reinforcement learning, the reparameterization trick isn't available, but with amortized variational inference, it enables more accurate and stable gradient estimation with a single sample per data point.

- 😀 The reparameterization trick is highly applicable when working with continuous latent variables, while for discrete variables, policy gradient methods are still required.

Q & A

What is the primary goal of Amortized Variational Inference (AVI)?

-The primary goal of Amortized Variational Inference (AVI) is to make variational inference more efficient, particularly when dealing with large datasets, by using a single neural network to infer the posterior distribution across all data points, rather than having a separate posterior for each data point.

How does Amortized Variational Inference (AVI) differ from standard Variational Inference (VI)?

-In standard Variational Inference, a separate posterior distribution is estimated for each data point, leading to an increase in the number of parameters as the dataset grows. In contrast, AVI uses a single neural network to infer the posterior for all data points, reducing computational overhead and making it scalable for large datasets.

What are the two networks involved in Amortized Variational Inference (AVI)?

-AVI involves two networks: the generative network (parameterized by θ), which models the data, and the inference network (parameterized by φ), which approximates the posterior distribution for each data point.

Why is the gradient estimation in AVI difficult, and how is it addressed?

-The gradient estimation in AVI is difficult because it requires computing gradients with respect to the inference network parameters (φ), which involves an expectation over the latent variable z. This is addressed by using the policy gradient method and, more effectively, the reparameterization trick to reduce gradient variance.

What is the reparameterization trick, and how does it help in AVI?

-The reparameterization trick involves expressing the latent variable z as a deterministic function of a random variable ε (with a known distribution), allowing gradients to be propagated through z. This reduces the variance in the gradient estimator and makes the optimization process more stable and efficient.

What is the main advantage of using the reparameterization trick over policy gradients?

-The main advantage of the reparameterization trick is that it reduces gradient variance, enabling more accurate and stable gradient estimates with fewer samples. In contrast, policy gradients tend to have high variance and require more samples for reliable gradient estimation.

Why is the policy gradient method not the best approach for AVI?

-The policy gradient method, although a reasonable approach, suffers from high variance, meaning that it requires many samples to produce an accurate gradient. This can slow down convergence and require smaller learning rates, making it less efficient compared to the reparameterization trick in AVI.

Can the reparameterization trick be applied to discrete latent variables?

-No, the reparameterization trick only applies to continuous latent variables because it requires taking derivatives with respect to the latent variable z. For discrete latent variables, the derivative is not well-defined, and thus, a policy gradient method must be used.

How does the reparameterization trick improve the gradient estimator in AVI?

-The reparameterization trick improves the gradient estimator by transforming the latent variable z into a deterministic function of a random variable ε, reducing the variance in the gradient estimation. This allows for more efficient and stable optimization using just a single sample per data point.

How does the variational lower bound (L) play a role in training the model in AVI?

-The variational lower bound (L) is used to optimize the parameters of both the generative model (θ) and the inference network (φ). During training, gradients of L with respect to θ and φ are computed, and the parameters are updated iteratively to maximize the lower bound and improve the approximation of the posterior distribution.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

CS 285: Lecture 18, Variational Inference, Part 1

MCMC Gibbs Sampler part 2 aproksimasi diskrit

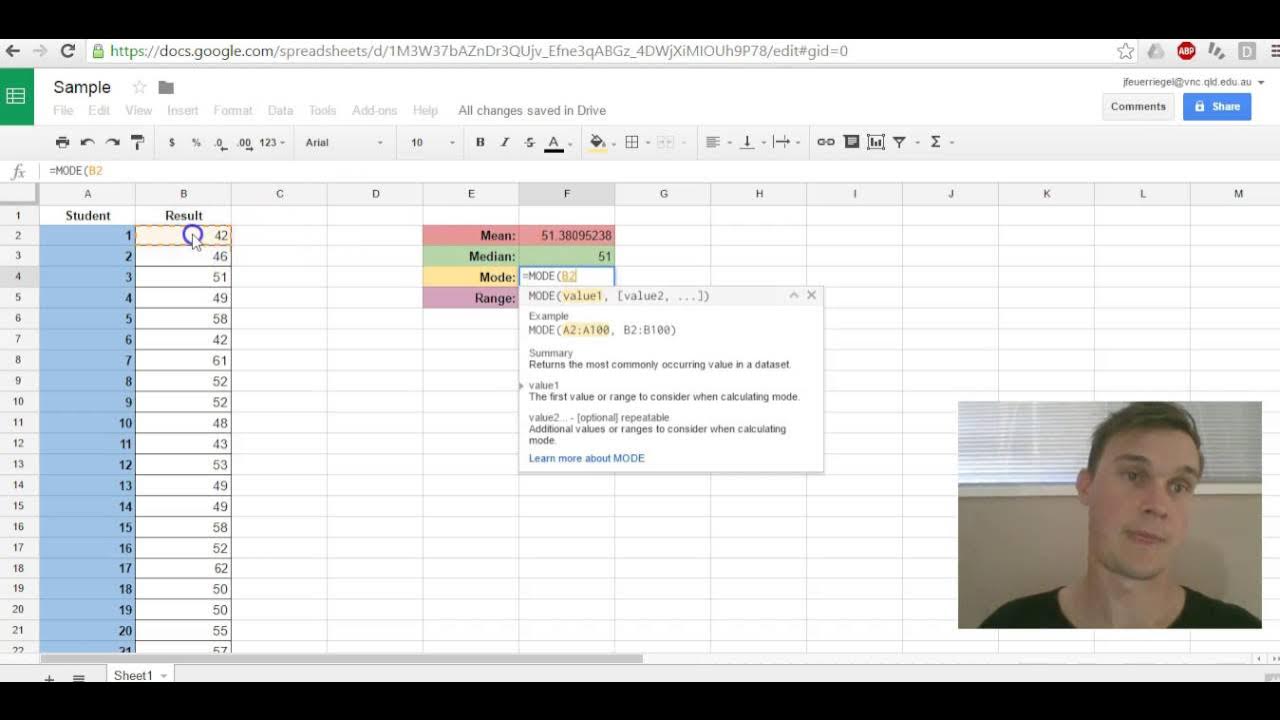

Google Sheets Mean,Median,Mode,Range

How to Filter Array of Objects in Javascript - ReactJS Edition

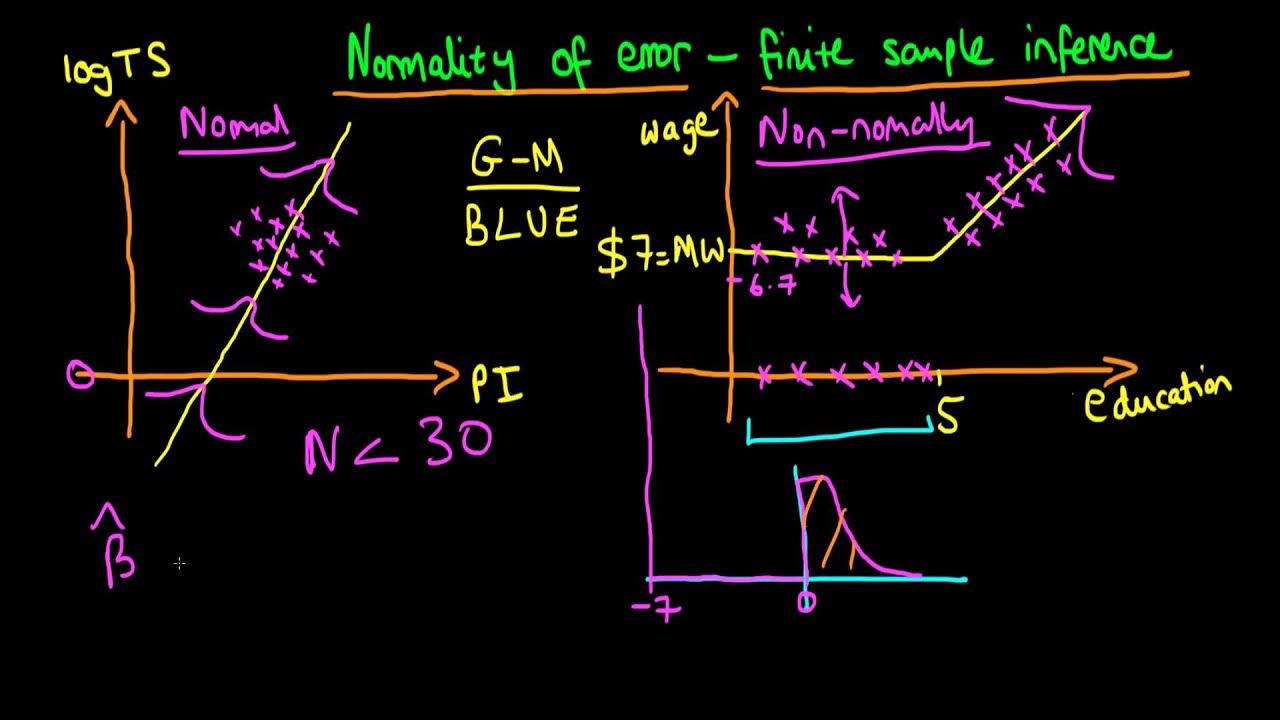

Normally distributed errors - finite sample inference

Chapter 8: Piecewise Interpolation (Part 1 - Introduction)

5.0 / 5 (0 votes)