AdaBoost Ensemble Learning Solved Example Ensemble Learning Solved Numerical Example Mahesh Huddar

Summary

TLDR: This video explains the AdaBoost ensemble learning algorithm through a hands-on example, demonstrating how to classify a dataset with attributes like CGPA, interactiveness, practical knowledge, and communication skills. The video walks through the steps of applying AdaBoost, including initializing weights, iterating over decision stumps, calculating weighted errors, and updating classifier weights. By the end, viewers will understand how AdaBoost combines weak classifiers into a strong, accurate model, making it a valuable tool for machine learning tasks.

Takeaways

- 😀 AdaBoost is an ensemble learning algorithm that combines multiple weak classifiers to create a strong classifier.

- 😀 The algorithm starts by assigning equal weights to each training instance.

- 😀 A decision stump is used as a weak classifier, where each attribute (CGPA, interactiveness, etc.) is evaluated individually.

- 😀 The first step involves using CGPA to predict job offers. If CGPA ≥ 9, predict 'Yes', otherwise predict 'No'.

- 😀 The weighted error of each weak classifier is the sum of the weights of the instances it misclassifies; those instances are given higher weight in subsequent iterations.

- 😀 For each weak classifier, the algorithm computes a weight (Alpha) that indicates its reliability in predicting correctly.

- 😀 The weights of correctly classified instances are decreased, while those of misclassified instances are increased to emphasize their importance.

- 😀 The process is repeated for each attribute (interactiveness, practical knowledge, communication skills), updating the weights each time.

- 😀 In the final step, the weighted sum of the classifiers' predictions determines the final classification: a positive sum predicts 'Yes', and a negative sum predicts 'No'.

- 😀 The AdaBoost algorithm helps improve accuracy by focusing on difficult-to-classify instances, progressively correcting mistakes.

- 😀 AdaBoost's power lies in its ability to combine many simple classifiers (weak learners) into a robust predictive model.

Q & A

What is the purpose of the AdaBoost algorithm?

-AdaBoost is an ensemble learning algorithm used to improve the performance of weak classifiers by combining them into a strong classifier. It focuses on misclassified instances and adjusts weights accordingly to improve the overall accuracy of the model.

What is a decision stump in the context of AdaBoost?

-A decision stump is a simple weak classifier used in AdaBoost. It is a one-level decision tree that makes decisions based on a single feature (attribute). In the provided example, the decision stumps are built using attributes like CGPA, Interactiveness, Practical Knowledge, and Communication Skill.

How are the weights of training instances initialized in AdaBoost?

-At the beginning, the weight for each training instance is initialized equally, with each instance receiving a weight of 1 divided by the total number of instances. In this case, with 6 training instances, each instance's weight is 1/6.
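
The initialization step can be sketched in a few lines of Python. This is a minimal illustration, not code from the video; the helper name `init_weights` is made up for this example, and `Fraction` is used only so that 1/6 is displayed exactly.

```python
from fractions import Fraction

def init_weights(n_instances):
    """Give every training instance the same starting weight, 1/n."""
    return [Fraction(1, n_instances)] * n_instances

# Six training instances, as in the example described above.
weights = init_weights(6)
print(weights[0])    # 1/6
print(sum(weights))  # 1
```

Because the weights start out equal, the first weak classifier treats every training instance as equally important.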

What happens after the first weak classifier (decision stump) is trained?

-After training the first decision stump, the weighted error is calculated by comparing the predicted outcomes with the actual outcomes. Instances that are misclassified have their weights increased, while those correctly classified have their weights decreased.

What is the formula used to calculate the weight (alpha) for each weak classifier?

-The weight (alpha) for each weak classifier is calculated using the formula: Alpha = 0.5 * ln((1 - weighted error) / weighted error), where the weighted error is the error rate for the classifier based on the weighted training instances.
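
As a rough sketch, the formula translates directly into Python. The function name `classifier_alpha` and the sample error of 1/6 are assumptions chosen for illustration; they are not taken from the video.

```python
import math

def classifier_alpha(weighted_error):
    """Alpha = 0.5 * ln((1 - weighted error) / weighted error)."""
    # The formula is undefined at 0 (division by zero) and at 1 (log of zero).
    if not 0.0 < weighted_error < 1.0:
        raise ValueError("weighted_error must be strictly between 0 and 1")
    return 0.5 * math.log((1.0 - weighted_error) / weighted_error)

# Hypothetical case: the misclassified instances carry a total weight of 1/6.
print(round(classifier_alpha(1 / 6), 4))  # 0.8047
# A classifier that is no better than chance gets no say in the vote.
print(classifier_alpha(0.5))              # 0.0
```

A smaller weighted error gives a larger alpha, and an error above 0.5 gives a negative alpha, which effectively flips that classifier's vote.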

How does AdaBoost update the weights of misclassified instances?

-AdaBoost updates the weights by increasing the weight of misclassified instances to make them more important in the next iteration. Correctly classified instances have their weights decreased. This helps the algorithm focus on harder-to-classify instances in subsequent rounds.

What is the significance of normalizing the weights in AdaBoost?

-Normalizing the weights ensures that the sum of all instance weights is equal to 1. This step helps in maintaining the balance of influence that each training instance has during the learning process and ensures the algorithm works correctly.
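
The update and normalization steps might look like the sketch below. It assumes the common AdaBoost rule in which a misclassified instance's weight is multiplied by e^alpha and a correctly classified one by e^(-alpha); the video may present the arithmetic differently. The name `update_weights` and the sample data are hypothetical.

```python
import math

def update_weights(weights, correct, alpha):
    """Rescale instance weights after one round, then normalize to sum to 1.

    correct[i] is True when the weak classifier got instance i right.
    """
    scaled = [
        w * math.exp(-alpha if ok else alpha)  # shrink if right, grow if wrong
        for w, ok in zip(weights, correct)
    ]
    total = sum(scaled)
    return [w / total for w in scaled]

# Hypothetical round: six equally weighted instances, only the last one
# misclassified, so the weighted error is 1/6 and alpha is 0.5 * ln(5).
alpha = 0.5 * math.log(5)
new_weights = update_weights([1 / 6] * 6, [True] * 5 + [False], alpha)
print([round(w, 3) for w in new_weights])  # [0.1, 0.1, 0.1, 0.1, 0.1, 0.5]
```

In this illustration the single misclassified instance ends up carrying half of the total weight, which is why the next weak classifier is pushed to get it right.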

How does AdaBoost combine the predictions of multiple weak classifiers?

-AdaBoost combines the predictions of multiple weak classifiers by calculating a weighted vote, where each classifier's vote is weighted by its alpha value (calculated from its error rate). The final prediction is made based on the majority weighted vote from all classifiers.

What role does the decision stump based on CGPA play in the algorithm?

-The decision stump based on CGPA predicts the job offer status as 'yes' or 'no' depending on whether the CGPA is above or below a certain threshold (in this case, 9). This classifier is used in the first iteration of AdaBoost, and its performance is evaluated based on its error rate.
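
A decision stump of this kind, together with its weighted error, can be sketched as follows. The threshold of 9 comes from the summary above, but the six CGPA values and labels below are invented purely for illustration and are not the dataset used in the video.

```python
def cgpa_stump(cgpa, threshold=9.0):
    """One-level decision tree: predict 'Yes' when CGPA >= threshold."""
    return "Yes" if cgpa >= threshold else "No"

def weighted_error(weights, predictions, labels):
    """Sum the weights of the instances the classifier got wrong."""
    return sum(w for w, p, y in zip(weights, predictions, labels) if p != y)

# Made-up training data (NOT the video's dataset).
cgpas = [9.2, 8.1, 9.5, 7.4, 8.8, 9.0]
labels = ["Yes", "No", "Yes", "No", "Yes", "Yes"]
weights = [1 / 6] * 6  # equal initial weights

predictions = [cgpa_stump(c) for c in cgpas]
error = weighted_error(weights, predictions, labels)
print(predictions)      # ['Yes', 'No', 'Yes', 'No', 'No', 'Yes']
print(round(error, 4))  # 0.1667
```

Here only the fifth instance (CGPA 8.8, actual label 'Yes') is misclassified, so the stump's weighted error equals that one instance's weight.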

What happens when all instances are correctly classified by a decision stump?

-When a decision stump classifies every instance correctly, no instance is misclassified, so no instance needs its weight increased. The training process then continues with the next weak classifier. Note that the weighted error is 0 in this case, so the formula Alpha = 0.5 * ln((1 - weighted error) / weighted error) divides by zero and cannot be applied as written.

How are the final predictions made using AdaBoost?

-The final predictions in AdaBoost are made by calculating a weighted sum of the predictions from all the weak classifiers. Each classifier’s vote is multiplied by its alpha value. If the sum is positive, the final prediction is 'yes'; if negative, it is 'no'.
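
The weighted vote can be sketched as below, encoding each classifier's 'yes' as +1 and 'no' as -1. The alpha values and votes are hypothetical, and treating a sum of exactly zero as 'No' is an assumption of this sketch, since the summary does not cover ties.

```python
def final_prediction(alphas, votes):
    """Combine weak-classifier votes (+1 = 'Yes', -1 = 'No') weighted by alpha."""
    score = sum(a * v for a, v in zip(alphas, votes))
    # Assumption: a score of exactly zero is reported as 'No'.
    return "Yes" if score > 0 else "No"

# Hypothetical alphas for three weak classifiers.
alphas = [0.8, 0.5, 0.4]
print(final_prediction(alphas, [+1, -1, -1]))  # No  (0.8 - 0.5 - 0.4 < 0)
print(final_prediction(alphas, [+1, +1, -1]))  # Yes (0.8 + 0.5 - 0.4 > 0)
```

The first call shows that two less reliable classifiers can still outvote a single stronger one when their alphas add up to more than its alpha.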

Upgrade NowBrowse More Related Video

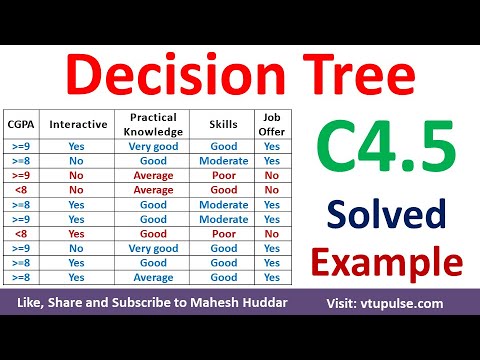

Decision Tree using C4.5 Algorithm Solved Numerical Example | C4.5 Solved Example by Mahesh Huddar

Naive Bayes dengan Python & Google Colabs | Machine Learning untuk Pemula

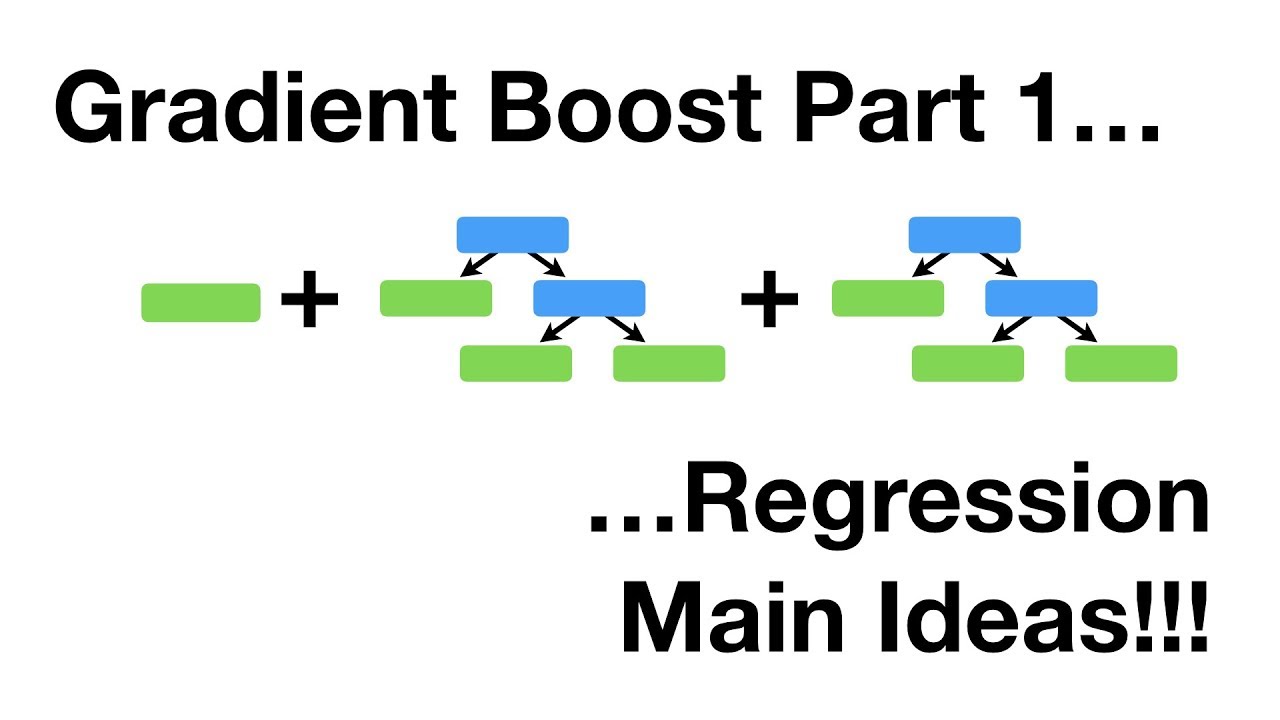

Gradient Boost Part 1 (of 4): Regression Main Ideas

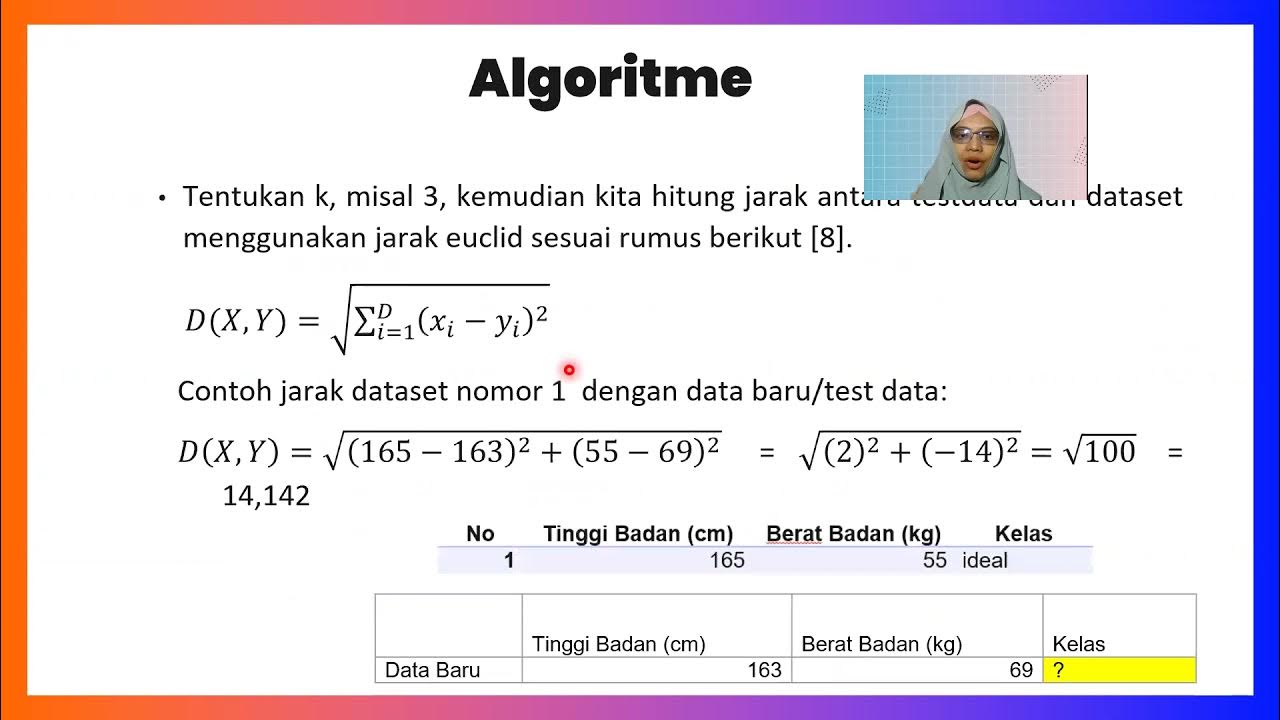

K-Nearest Neighbors Classifier_Medhanita Dewi Renanti

Decision Tree Solved | Id3 Algorithm (concept and numerical) | Machine Learning (2019)

Eps-03 Learning Methods

5.0 / 5 (0 votes)