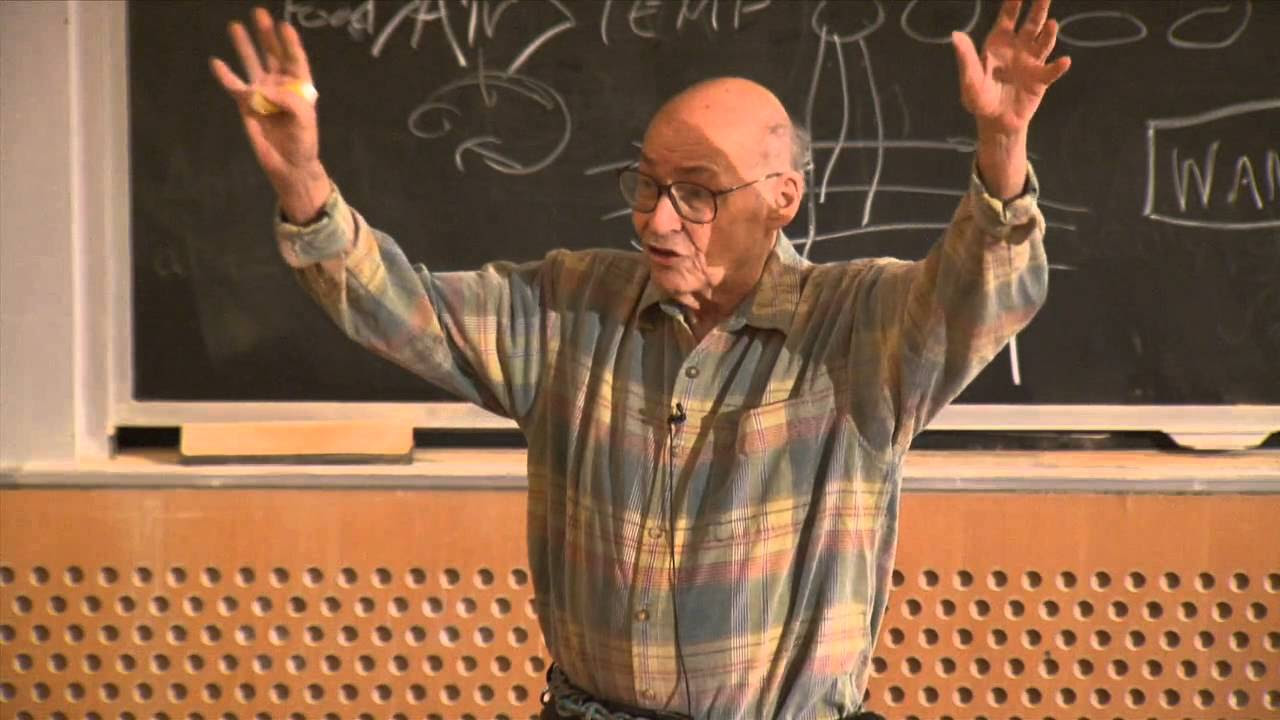

4. Question and Answer Session 1

Summary

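TLDR: In this session, Professor Marvin Minsky discusses a range of topics with the audience, spanning neuroscience, artificial intelligence, cognitive psychology, and language learning. He shares his views on brain function, on the connections between neurons, and on the possible role of glial cells. He also discusses how the brain handles different mental states, such as denial, bargaining, frustration, and depression, and explores the differences in cognitive ability between animals (such as dogs) and humans. In addition, he offers his views on how to evaluate machine intelligence, including whether a machine needs to understand or only needs to simulate human behavior.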

Takeaways

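- 📚 Supporting MIT OpenCourseWare helps it continue to offer high-quality educational resources for free.

- 🧠 Marvin Minsky discusses how the brain works, in particular the possible role of glial cells.

- 🤔 Minsky voices concern about brain research: he thinks the neuroscience community may focus too much on the chemicals that pass between neurons and overlook the importance of network structure.

- 🐙 He hypothesizes that animals (such as octopuses) may have complex planning abilities, possibly related to their ability to remember long sequences and semantic content.

- 🐕 Minsky is curious about the cognitive abilities of dogs, especially whether they can plan ahead and compare different situations.

- 🤖 He discusses the development of artificial intelligence and stresses the role of computer science in understanding human cognitive abilities.

- 🧐 Minsky argues that even a small difference in the number of registers a computer has can make an exponential difference in how quickly it solves a problem.

- 🧬 He compares human intelligence with that of other animals, including his views on dogs' cognitive abilities and on whether they have reasoning abilities similar to those of humans.

- 📈 Minsky discusses how to evaluate intelligent machines, especially once they reach or exceed human-level intelligence.

- 🌐 He also touches on how different models can be used to solve a problem, and on how people choose a way of thinking when they face one.

- ❓ Finally, Minsky raises the question of how to identify and select the best data and methods for solving a problem, which remains an unsolved core problem in artificial intelligence and cognitive psychology.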

Q & A

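How can I donate to MIT OpenCourseWare or find additional materials?

- To make a donation to MIT OpenCourseWare or to view additional materials, visit ocw.mit.edu.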

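What is Marvin Minsky's view on how the brain works?

- Minsky prefers not to speculate about how the brain works. The neuroscience literature contains a large number of papers proposing different hypotheses, including the idea that glial cells rather than neurons may be doing the work.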

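How does the number of connections a neuron has affect its ability to process information?

- According to Minsky, a typical neuron has about 100,000 connections. This suggests that very complex processing must take place inside the neuron, possibly with the help of other cells that support it.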

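How did neuroscience develop historically?

- Early neuroscience assumed that all neurons were joined together. Only around 1890 did a clear picture emerge: nerve cells are not arranged as a continuous network but are separated by small gaps called synapses.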

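What is Marvin Minsky's view on chemicals in the brain?

- Minsky thinks there is a lot of folklore about the role chemicals play when the brain is active. What is now known about the nervous system suggests that the connections along neural pathways tend to alternate, often though not always, between inhibitory and excitatory.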

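What happens in the brain during an epileptic seizure?

- During a seizure, enough electrical and chemical activity (mainly electrical) builds up that some parts of the brain begin to fire in synchrony, and this spreads like a forest fire.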

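What is Marvin Minsky's view on consciousness?

- Minsky considers the word "consciousness" too vague because it bundles together many different meanings. He thinks consciousness may involve several different systems, and that different animals may have different levels of it.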

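How should the brain's software and hardware be understood?

- Minsky regards the brain's hardware as what evolution produced and its software as what we learn, including how one part of the brain regulates another. He suggests the brain may have multiple levels of representation, analogous to registers in a computer.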

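What is Marvin Minsky's view on artificial intelligence?

- Minsky thinks the development of artificial intelligence should take into account the different ways humans solve problems, rather than only imitating how the human brain works. He also notes the importance of computer science for understanding intelligence.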

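What is Marvin Minsky's view on animal intelligence?

- Minsky thinks animal intelligence may differ fundamentally from human intelligence, and he raises the possibility that animals may outperform humans in some respects, such as remembering long sequences.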

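What does Marvin Minsky predict for the future of neuroscience?

- Minsky predicts that neuroscience will be very exciting over the next generation, thanks to cheaper and higher-resolution brain scanning and to new chemical tracing methods.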

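What does Marvin Minsky think about future trends in psychology and cognitive science?

- Minsky expects more mathematical psychology to appear, and thinks cognitive psychology may draw more on computational complexity theory to understand the processes that problem solving requires.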
