Backpropagation and the brain

Summary

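TLDR: This paper, co-authored by Timothy Lillicrap, Adam Santoro, Luke Marris, Colin Akerman, and Geoffrey Hinton, proposes a hypothesis about how a backpropagation-like algorithm could work in the brain. Although a good deal of earlier evidence argued against backprop-like processes in the brain, the paper examines how neural networks can learn without external feedback through Hebbian learning, and how they can learn from feedback. The authors describe "backprop-like" learning, in which a feedback network gives each neuron detailed instructions on how to update itself. The paper also reviews why backpropagation was previously considered impossible in the brain, including the need for synaptic symmetry and the type of error signal involved. Finally, the authors propose the NGRAD hypothesis (neural gradient representation by activity differences), under which the brain could train neural networks with an autoencoder-based approximation to backpropagation. This algorithm avoids some problems of standard backpropagation, such as synaptic symmetry and the transport of error signals, and is built by stacking and training autoencoders.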

Takeaways

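- 🧠 The paper proposes a hypothesis about how a backpropagation-like algorithm could operate in the brain, despite a substantial body of earlier evidence against backprop-like mechanisms in the brain.

- 📈 It covers several ways neural networks can learn, including Hebbian learning and learning from feedback. Hebbian learning needs no external feedback; it is a self-reinforcing mechanism that strengthens existing activation patterns.

- 🔄 Backpropagation is standard in the machine learning community, but its implementation in biological brains was long considered unlikely because it requires symmetric synapses on the forward and backward pathways.

- 🤔 The paper discusses why backpropagation was thought impossible in the brain, including the synaptic symmetry requirement and the type of error signal it needs.

- 🧬 The paper proposes an autoencoder-based approximation to backpropagation, framed under the NGRAD hypothesis, that uses local update rules rather than backpropagated errors.

- 🤓 Comparisons of hidden representations in artificial and biological neural networks indicate that networks trained with backpropagation form hidden representations closer to those of biological networks.

- 🔄 The proposed algorithm uses approximate inverse functions to achieve an effect similar to backpropagation while avoiding the parts of backpropagation that are biologically implausible.

- ⚙️ The algorithm updates both forward and backward weights so that hidden-layer representations move closer to their desired states, and it computes the required error signals from local information.

- 📊 The paper also explores how such an algorithm could be implemented biologically, drawing on the current, more complex and differentiated view of neurons.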

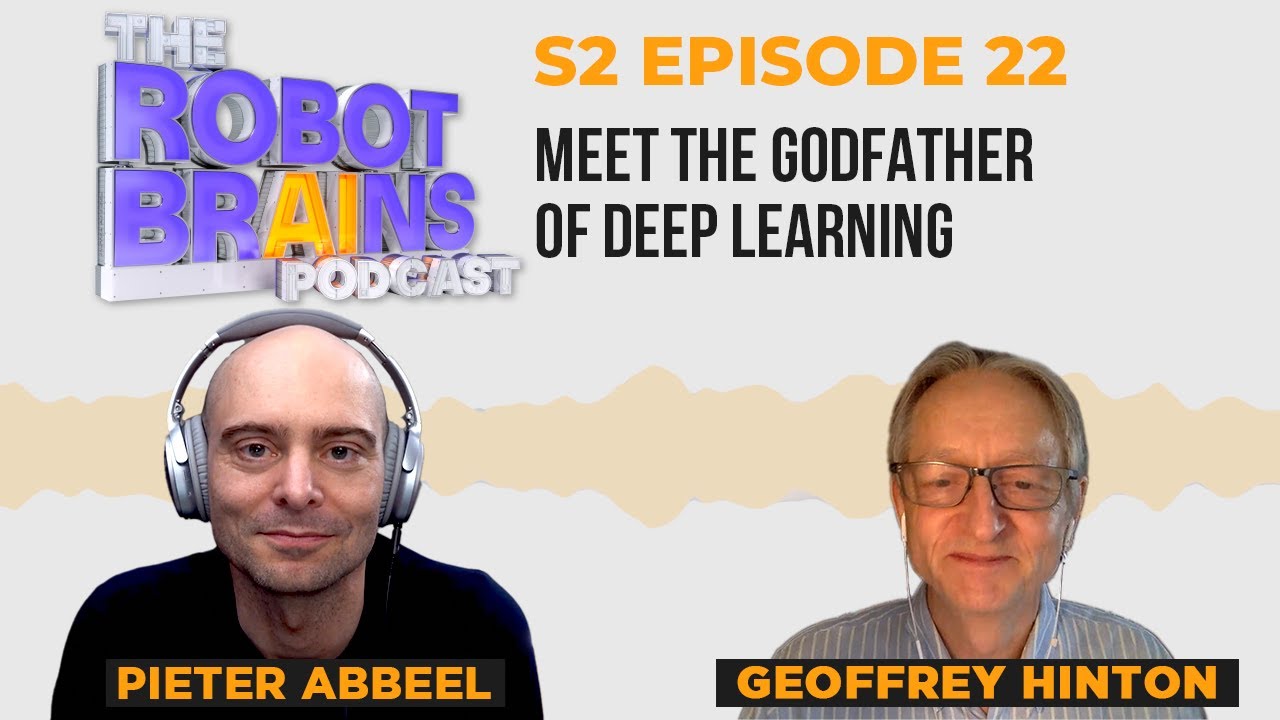

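- 📚 The authors include Geoffrey Hinton, a prominent figure in machine learning, which is one reason the paper has drawn attention.

- 🎓 For readers interested in the intersection of machine learning and neuroscience, the paper offers a new perspective for understanding and exploring how the brain learns.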

Q & A

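What is the main hypothesis of the paper discussed here?

- The paper proposes a hypothesis about how a backpropagation-like algorithm could work in the brain. Despite considerable earlier evidence against such mechanisms, the authors argue that the brain may in fact use a backprop-like form of learning.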

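What is the difference between Hebbian learning and backpropagation?

- Hebbian learning is a self-reinforcing scheme that needs no external feedback: it raises or lowers connection weights so as to match or amplify the network's previous output. Backpropagation requires external feedback: it propagates an error signal (the gradient) backward to adjust each neuron or connection weight precisely, reducing the overall error.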
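The contrast can be made concrete with a minimal sketch on a single linear layer. The function names, the learning rate, and the squared-error objective are illustrative assumptions, not taken from the paper; the error-driven rule shown is the one-layer special case of gradient descent rather than full backpropagation.

```python
def forward(w, x):
    """Linear layer: y[j] = sum_i w[j][i] * x[i]."""
    return [sum(wji * xi for wji, xi in zip(row, x)) for row in w]


def hebbian_update(w, x, lr=0.1):
    """Hebbian step: change w[j][i] in proportion to y[j] * x[i].

    No target or error is used, so the layer reinforces whatever it already outputs.
    """
    y = forward(w, x)
    return [[wji + lr * yj * xi for wji, xi in zip(row, x)]
            for row, yj in zip(w, y)]


def error_driven_update(w, x, target, lr=0.1):
    """Gradient step on 0.5 * sum((y - target)**2), driven by a signed error."""
    y = forward(w, x)
    error = [yj - tj for yj, tj in zip(y, target)]
    return [[wji - lr * ej * xi for wji, xi in zip(row, x)]
            for row, ej in zip(w, error)]


def squared_error(w, x, target):
    """Loss used only to compare the two rules."""
    return 0.5 * sum((yj - tj) ** 2 for yj, tj in zip(forward(w, x), target))


if __name__ == "__main__":
    w = [[0.5, -0.2], [0.1, 0.3]]
    x = [1.0, 2.0]
    target = [1.0, 0.0]
    print("output before:        ", forward(w, x))
    print("output after Hebbian: ", forward(hebbian_update(w, x), x))
    print("loss before:          ", squared_error(w, x, target))
    print("loss after error step:", squared_error(error_driven_update(w, x, target), x, target))
```

In this sketch the Hebbian step scales the existing output up without reference to any goal, while the error-driven step needs the target and moves the output toward it.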

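Why did earlier work consider backpropagation unlikely in the brain?

- Mainly for two reasons. First, backpropagation requires symmetric synapses on the forward and backward pathways, which the structure of biological neurons does not support. Second, the error signal in backpropagation is signed and can take extreme values, whereas biological neurons typically signal through spike rates, which makes a signed error hard to represent.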
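Both objections are visible in the backward pass itself. The sketch below assumes a two-layer network with a tanh hidden layer and a squared-error loss; the architecture and all names are illustrative choices, not the paper's. The hidden-layer error is obtained by sending the signed output error back through the transpose of the forward weights.

```python
import math


def matvec(m, v):
    """Matrix-vector product for nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def forward(w1, w2, x):
    """Two-layer network: h = tanh(W1 x), y = W2 h."""
    h = [math.tanh(a) for a in matvec(w1, x)]
    return h, matvec(w2, h)


def loss(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return 0.5 * sum((a - t) ** 2 for a, t in zip(y, target))


def backprop_grad_w1(w1, w2, x, target):
    """Exact gradient of the loss with respect to W1."""
    h, y = forward(w1, w2, x)
    error = [a - t for a, t in zip(y, target)]   # signed: entries can be negative
    fed_back = matvec(transpose(w2), error)      # backward path reuses W2, transposed
    delta = [f * (1.0 - hi * hi) for f, hi in zip(fed_back, h)]  # times tanh'(.)
    return [[d * xi for xi in x] for d in delta]
```

The call to `transpose(w2)` is the symmetry requirement: the feedback pathway must carry exactly the forward weights. The `error` vector is the signed quantity that a non-negative spike rate cannot encode directly.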

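What is the "backprop-like learning" proposed in the paper?

- "Backprop-like learning" is the paper's term for a learning algorithm that resembles backpropagation and is implemented with approximate inverses in the form of autoencoders. It computes the required error signals from local information and activation-type data, without truly propagating errors backward, which addresses the difficulty biological neurons have in implementing backpropagation.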

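What role do autoencoders play in backprop-like learning?

- Autoencoders are central to it. With several autoencoder layers stacked, each layer can use its reconstruction error to work out the desired hidden representation, and an approximate inverse function then adjusts the hidden representation toward that desired value. Each autoencoder layer can therefore adjust its weights independently, without global backpropagation of errors.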

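What does "approximate inverse" mean in backprop-like learning?

- A perfect inverse function does not exist, so the algorithm uses an approximate one. The approximate inverse estimates which hidden-layer representation should correspond to a given forward-pass result. In this way an error signal can be computed at every layer, and the weights updated accordingly, even without a perfect inverse.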
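A minimal sketch of the idea, using one unit per layer so the arithmetic stays visible. Everything here is an assumption made for illustration: the forward weight `W`, the linear form of the inverse `g(y) = v * y`, and the difference-style correction in `hidden_target`, which is borrowed from difference target propagation rather than quoted from the summary.

```python
import math

W = 1.5  # forward weight of a one-unit layer (illustrative value)


def f(h):
    """Forward mapping of the layer."""
    return math.tanh(W * h)


def fit_inverse(samples, steps=500, lr=0.5):
    """Fit g(y) = v * y so that g(f(h)) reconstructs h, autoencoder-style.

    Plain gradient descent on the mean squared reconstruction error.
    """
    v = 0.0
    for _ in range(steps):
        grad = sum((v * f(h) - h) * f(h) for h in samples) / len(samples)
        v -= lr * grad
    return v


def hidden_target(h, y_target, v):
    """Propose a hidden activity whose forward image lies nearer y_target.

    Using the difference g(y_target) - g(f(h)) cancels part of the
    inverse's own reconstruction error.
    """
    return h + v * y_target - v * f(h)


if __name__ == "__main__":
    v = fit_inverse([-1.0, -0.5, 0.0, 0.5, 1.0])
    h, y_target = 0.2, 0.5
    h_t = hidden_target(h, y_target, v)
    print("output gap before:", abs(f(h) - y_target))
    print("output gap after: ", abs(f(h_t) - y_target))
```

The hidden target is an activity value, not a gradient, so the layer below can be trained by comparing its actual activity with this target.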

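How do the experiments mentioned in the paper support a similarity between backpropagation and biological neural networks?

- The experiments train artificial neural networks on the same tasks performed by human or animal brains, then compare the hidden-layer representations of the artificial networks with those of the biological networks. Networks trained with backpropagation turn out closer to the biological networks in their hidden representations than networks trained with scalar update algorithms, which offers evidence that the brain may use a backprop-like learning mechanism.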

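Why is the data-type check in backprop-like learning necessary?

- Biological neurons transmit information through spike rates, which are generally non-negative. The algorithm has to ensure that the forward pass and the approximate inverse pass carry the same type of data, namely activation signals, for it to remain biologically plausible.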

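What possible evidence does the paper cite for a biological implementation in neurons?

- The paper points to several possible lines of biological evidence, including the modern, more complex view of neurons, for example that different regions of a neuron can operate independently and that neurons can interfere with one another. These views provide a biological basis on which neurons could implement something like backprop-like learning.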

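What does "local update" mean in backprop-like learning?

- A "local update" means that each neuron or layer can update its weights independently from information available at that layer, without relying on a global error signal passed down from other layers. The method uses locally available information, such as activation signals and the approximate inverse function, to compute the necessary error signal and adjust the weights.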
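The following toy loop sketches what layer-local updates could look like end to end. It assumes a chain with one unit per layer, tanh activations, a linear feedback weight `v`, and a difference-style target rule; all of these, along with the step sizes, are illustrative assumptions rather than the paper's algorithm.

```python
import math


def train(x=1.0, label=0.8, steps=200, lr=0.1):
    """Train a chain x -> h -> y (one unit per layer) with layer-local updates only."""
    w1, w2, v = 0.5, 0.5, 1.0  # forward weights and feedback (approximate inverse) weight
    losses = []
    for _ in range(steps):
        h = math.tanh(w1 * x)
        y = math.tanh(w2 * h)
        losses.append(0.5 * (y - label) ** 2)

        # Targets are activity values, not gradients.
        y_target = y + 0.5 * (label - y)      # nudge the output toward the label
        h_target = h + v * y_target - v * y   # feedback path proposes a hidden target

        # Each forward weight uses only its own layer's input, output, and target.
        w2 -= lr * (y - y_target) * (1.0 - y * y) * h
        w1 -= lr * (h - h_target) * (1.0 - h * h) * x
        # The feedback weight is trained to reconstruct h from y.
        v -= lr * (v * y - h) * y
    return losses


if __name__ == "__main__":
    history = train()
    print("first loss:", history[0])
    print("last loss: ", history[-1])
```

No term in the `w1` update refers to `w2` or to the output error directly: the only quantity arriving from above is the hidden target, which is expressed in the same units as the hidden activity.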

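What advantages does backprop-like learning have over standard backpropagation?

- Its advantage is that it does not depend on backpropagating a global error signal; it updates weights using local information and approximate inverse functions. This fits better with how biological nervous systems work and avoids the global error backpropagation that biological neurons would struggle to implement, while retaining the optimization efficiency of backpropagation.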
