9. CAMBRIDGE IGCSE (0478-0984) 1.2 Representing characters and character sets

Summary

TLDR: This video explores how character sets such as ASCII and Unicode let computers represent text and symbols in binary. It traces the evolution from ASCII's 7-bit code to Unicode's far larger repertoire, which covers the world's writing systems and emojis. It stresses that character representation must be standardized so that text is interpreted consistently across devices. Unicode offers a vast range of characters, but ASCII remains useful for simple text because it can produce smaller files. Understanding character sets is therefore important for efficient data storage and communication.

Takeaways

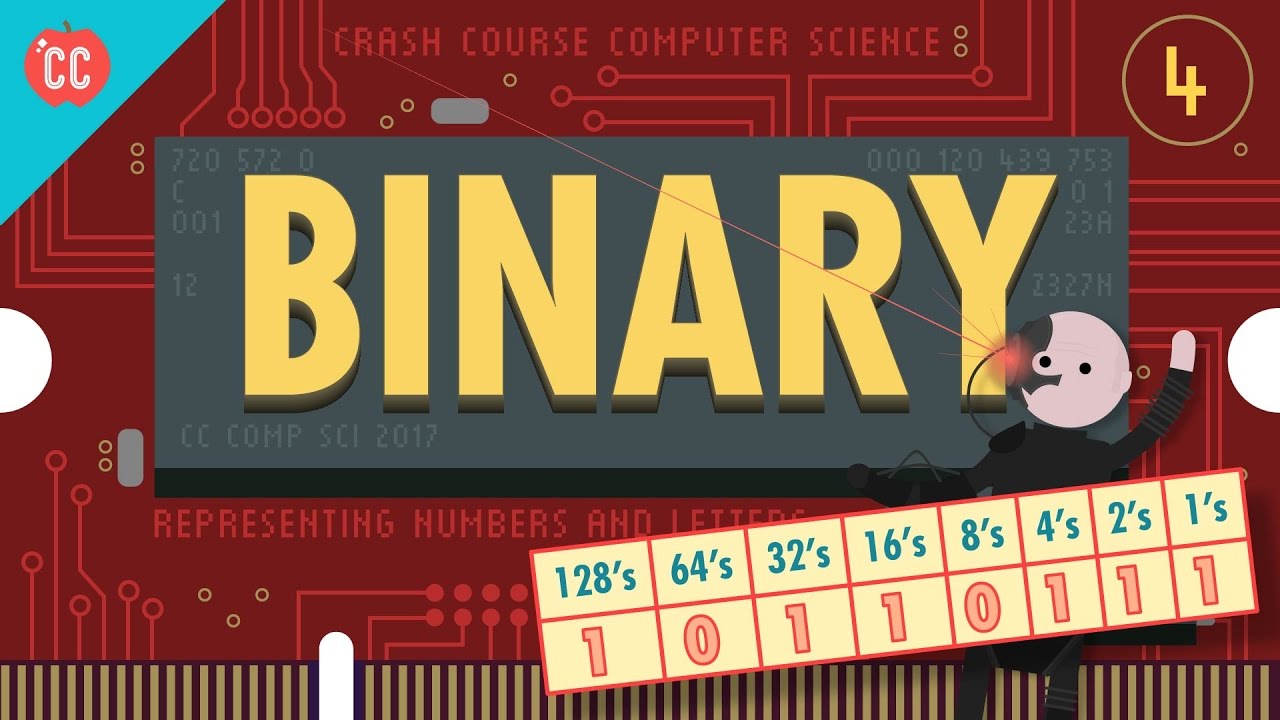

- 🔢 All data in computers is stored in binary (0s and 1s), so each character needs its own unique binary code.

- 📏 One binary digit can represent only two characters; each additional bit doubles the number of possible codes, so n bits give 2^n.

- 📊 Five bits give 2^5 = 32 combinations, enough for a unique code for each of the 26 letters of the English alphabet.

- 🔠 Seven bits are the minimum needed for uppercase and lowercase letters, digits, symbols, and punctuation: six bits give only 64 combinations, whereas seven give 128.

- 📜 A character set is a defined list of characters recognized by computer hardware and software, each assigned a unique binary number.

- ✅ ASCII (American Standard Code for Information Interchange) is a widely accepted seven-bit character set allowing for 128 combinations.

- 🔄 Extended ASCII uses eight bits, doubling the set to 256 characters; the extra 128 codes hold symbols and accented letters for some non-English languages.

- 🌐 Unicode is a universal character set that aims to include characters from all of the world's writing systems, plus many symbols and emojis.

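- ⚖️ Unicode needs more bits per character than ASCII: its largest code point requires 21 bits, and encodings such as UTF-8, UTF-16 and UTF-32 store each character in 8 to 32 bits, which allows a far larger range of characters.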

- 💾 File sizes can differ significantly: when only basic characters are needed, ASCII (one byte per character) is more compact than Unicode encodings that use two or four bytes per character.
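
To make the idea of a character set concrete, here is a minimal Python sketch (an illustration, not taken from the video) that looks up each character's code and prints it as a 7-bit binary number. It relies on the built-in `ord()`, which returns a Unicode code point; for the first 128 characters that number is the same as the ASCII code. The helper name `to_ascii_bits` is hypothetical.

```python
def to_ascii_bits(text: str) -> list[str]:
    """Return the 7-bit ASCII code of each character as a binary string."""
    codes = []
    for ch in text:
        code = ord(ch)  # the character's number in the character set
        if code > 127:
            raise ValueError(f"{ch!r} is not in the 7-bit ASCII set")
        codes.append(format(code, "07b"))  # pad to exactly 7 binary digits
    return codes


for ch, bits in zip("Hi!", to_ascii_bits("Hi!")):
    print(ch, bits)
# H 1001000
# i 1101001
# ! 0100001
```

Raising an error for codes above 127 mirrors the fact that a 7-bit set simply has no code for characters outside it.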

Q & A

What is the fundamental principle behind how data is stored in computer systems?

-Data in computer systems is stored in binary format, consisting of zeros and ones, which represent two distinct states.

How does the number of bits affect the number of unique characters that can be represented?

-The number of bits determines how many unique binary patterns exist: n bits give 2^n combinations, so each extra bit doubles the total. For example, 1 bit can represent 2 characters, while 5 bits can represent 2^5 = 32 characters.
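
The doubling rule can be checked by brute force. This Python sketch (illustrative only; `all_patterns` is a hypothetical helper) lists every n-bit pattern with `itertools.product` and counts them.

```python
from itertools import product


def all_patterns(n: int) -> list[str]:
    """List every n-bit binary pattern, e.g. n=2 gives 00, 01, 10, 11."""
    return ["".join(bits) for bits in product("01", repeat=n)]


for n in (1, 2, 3, 5, 7, 8):
    # The count always equals 2 ** n: each extra bit doubles it.
    print(f"{n} bit(s): {len(all_patterns(n))} combinations")
# 1 bit(s): 2 combinations
# 2 bit(s): 4 combinations
# 3 bit(s): 8 combinations
# 5 bit(s): 32 combinations
# 7 bit(s): 128 combinations
# 8 bit(s): 256 combinations
```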

Why is a minimum of 7 bits required for character representation in common character sets?

-Uppercase and lowercase letters plus digits already need 62 codes, and adding punctuation, symbols and control characters takes the total beyond the 64 combinations that 6 bits provide. Seven bits give 128 combinations, which is enough for all of them.
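
Working backwards, the minimum number of bits for a given number of characters is the smallest n with 2^n at least that number. A minimal Python sketch, assuming a hypothetical helper `min_bits`; the counts of 95 printable and 33 control characters are the standard ASCII figures.

```python
def min_bits(num_characters: int) -> int:
    """Smallest number of bits n such that 2**n >= num_characters."""
    n = 0
    while 2 ** n < num_characters:
        n += 1  # each extra bit doubles the available codes
    return n


print(min_bits(26))            # 5: one case of the English alphabet
print(min_bits(26 + 26 + 10))  # 6: upper- and lowercase letters plus digits
print(min_bits(95))            # 7: the 95 printable ASCII characters
print(min_bits(128))           # 7: all of ASCII, including 33 control codes
```

An integer loop is used instead of `math.log2` so the result never depends on floating-point rounding.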

What is ASCII, and how does it relate to character encoding?

-ASCII, or American Standard Code for Information Interchange, is a 7-bit character encoding standard that identifies 128 characters and their binary codes.
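
The ASCII table is laid out deliberately: 'A' to 'Z' are codes 65 to 90 and 'a' to 'z' are 97 to 122, so each lowercase letter is exactly 32 higher than its capital and differs from it in a single bit. The Python sketch below shows this with the built-in `ord()` and `chr()`; `swap_case_ascii` is a hypothetical helper for illustration only (in real code, `str.swapcase()` does the job).

```python
def swap_case_ascii(ch: str) -> str:
    """Swap the case of an ASCII letter by flipping the bit worth 32."""
    code = ord(ch)
    if 65 <= code <= 90 or 97 <= code <= 122:  # 'A'-'Z' or 'a'-'z'
        return chr(code ^ 0b0100000)  # XOR with 32 toggles that one bit
    return ch  # digits, punctuation and anything else stay unchanged


for ch in "Ab7":
    print(ch, ord(ch), format(ord(ch), "07b"), "->", swap_case_ascii(ch))
# A 65 1000001 -> a
# b 98 1100010 -> B
# 7 55 0110111 -> 7
```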

What is the significance of the extended ASCII character set?

-The extended ASCII character set uses 8 bits to provide an additional 128 characters, accommodating some foreign languages and graphical symbols.
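
"Extended ASCII" is a family of 8-bit character sets rather than one standard. ISO 8859-1 (Latin-1) is a common example, and Python ships a codec for it, so this sketch uses it as a stand-in; `latin1_code` is a hypothetical helper. Codes 0 to 127 match ASCII (leading bit 0), while the extra characters use codes 128 to 255 (leading bit 1).

```python
def latin1_code(ch: str) -> int:
    """Return the single 8-bit Latin-1 code for ch (raises if ch is not in Latin-1)."""
    return ch.encode("latin-1")[0]


for ch in "Aé£":
    code = latin1_code(ch)
    print(ch, code, format(code, "08b"))
# A 65 01000001
# é 233 11101001
# £ 163 10100011
```

A character outside this particular set, such as '€', raises `UnicodeEncodeError`, which shows the limit of any 256-character set.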

How does Unicode differ from ASCII in terms of character representation?

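-Unicode is a universal character encoding standard that can represent a much larger variety of characters (up to 1,114,112 code points) from many languages and scripts. Rather than a fixed 24 bits, each character is stored in one to four bytes depending on the encoding form (UTF-8, UTF-16 or UTF-32).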
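
In Python, `ord()` returns a character's Unicode code point and `sys.maxunicode` holds the largest one (0x10FFFF), so the size of the code space can be checked directly. A small sketch; `code_point_label` is a hypothetical helper that formats code points in the usual U+XXXX notation.

```python
import sys


def code_point_label(ch: str) -> str:
    """Format a character's Unicode code point in U+XXXX notation."""
    return f"U+{ord(ch):04X}"


for ch in "Aé€😀":
    print(ch, code_point_label(ch), ord(ch))
# A U+0041 65
# é U+00E9 233
# € U+20AC 8364
# 😀 U+1F600 128512

print(sys.maxunicode + 1)           # 1114112 code points (U+0000 to U+10FFFF)
print(sys.maxunicode.bit_length())  # 21 bits needed for the largest one
```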

What are the benefits of using UTF-8 encoding?

-UTF-8 is a variable-width encoding for Unicode that uses 1 to 4 bytes per character. It is backward compatible with ASCII (plain ASCII text is also valid UTF-8, byte for byte), so it can store a wide range of characters while keeping files small when most of the text is basic Latin.
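
The variable width is easy to observe with Python's `str.encode("utf-8")`. This sketch (illustrative; `utf8_bytes` is a hypothetical helper) prints each character's UTF-8 bytes in binary and confirms that ASCII text encodes identically under both schemes.

```python
def utf8_bytes(ch: str) -> str:
    """Show the UTF-8 encoding of ch as space-separated 8-bit groups."""
    return " ".join(format(b, "08b") for b in ch.encode("utf-8"))


for ch in "Aé€😀":
    print(ch, len(ch.encode("utf-8")), "byte(s):", utf8_bytes(ch))
# The lengths printed are 1, 2, 3 and 4 bytes respectively.

# Backward compatibility: plain ASCII text produces identical bytes.
print("Hello".encode("ascii") == "Hello".encode("utf-8"))  # True
```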

Why might a programmer choose ASCII over Unicode?

-A programmer might choose ASCII when only basic Latin characters are needed, because each character takes a single byte (7 bits, usually stored in 8), whereas Unicode encodings such as UTF-16 and UTF-32 use at least 2 or 4 bytes per character.
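
The size difference can be measured by encoding the same string several ways. A minimal Python sketch, assuming a hypothetical helper `encoded_sizes`; the `-le` codecs are used because Python's plain "utf-16" and "utf-32" codecs prepend a byte-order mark that would inflate the counts. Note that 7-bit ASCII is still stored as one 8-bit byte per character.

```python
def encoded_sizes(text: str) -> dict[str, int]:
    """Byte count of text under several encodings (the -le forms omit a byte-order mark)."""
    encodings = ("ascii", "utf-8", "utf-16-le", "utf-32-le")
    return {enc: len(text.encode(enc)) for enc in encodings}


for enc, size in encoded_sizes("Hello, world!").items():
    print(f"{enc:>9}: {size} bytes")
#     ascii: 13 bytes
#     utf-8: 13 bytes
# utf-16-le: 26 bytes
# utf-32-le: 52 bytes
```

For basic Latin text, UTF-8 is exactly as compact as ASCII, so the saving from choosing ASCII is really a saving over the fixed-width forms.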

What role do character sets play in ensuring consistency across different computer systems?

-Character sets establish agreed standards for numbering characters, ensuring that different computers interpret the same binary sequences consistently and accurately.

What are some challenges associated with character encoding in international communication?

-Challenges include potential misinterpretation of characters if different systems use different encodings, leading to data corruption or display issues, particularly with special characters or languages not included in a given character set.
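
A short Python sketch of that failure mode: text saved as UTF-8 but read back by a program that assumes Latin-1 comes out garbled, while the agreed encoding restores it exactly. The choice of Latin-1 as the "wrong" reader is only an example.

```python
original = "café"
data = original.encode("utf-8")  # 'é' becomes the two bytes 0xC3 0xA9

wrong = data.decode("latin-1")  # reader assumes a different character set
right = data.decode("utf-8")    # reader uses the agreed character set

print(wrong)  # cafÃ©
print(right)  # café
```

Decoding the same bytes as ASCII would fail outright with `UnicodeDecodeError`, since 0xC3 is outside the 7-bit range.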
