MultiModal Search (Text+Image) using TF MobileNet, HF SBERT in Python on Kaggle Shopee Dataset

Summary

TLDR: This video discusses how to perform multimodal similarity search, which involves finding similar images and text descriptions in a database. It highlights the challenge of identifying duplicate or near-duplicate products on e-commerce platforms such as Shopee. The video explains how neural networks, particularly transformers, are used to analyze images and match them with differing descriptions. It also covers ways to improve search accuracy, such as using joint embeddings for both text and images, and mentions applying this technology to online product search. The speaker encourages viewers to subscribe for more insights.

Takeaways

- 😀 Multimodal similarity search is a process that identifies related images and text descriptions in a database to find the most similar matches.

- 🔍 The video discusses the use of neural networks and transformers to improve image- and text-based product search, especially on e-commerce platforms.

- 🖼️ The aim is to detect duplicate listings of the same product that users on platforms like Shopee upload with different images and descriptions.

- 💻 The training set contains roughly 30,000 images, and models such as convolutional neural networks (CNNs) and transformers are applied to identify similar images.

- 📱 The discussion also touches on detecting duplicate images in the mobile app that carry different labels and descriptions, which improves product categorization.

- 📊 An example of this process is identifying duplicates between similar product postings and ensuring more accurate image-text matching for online businesses.

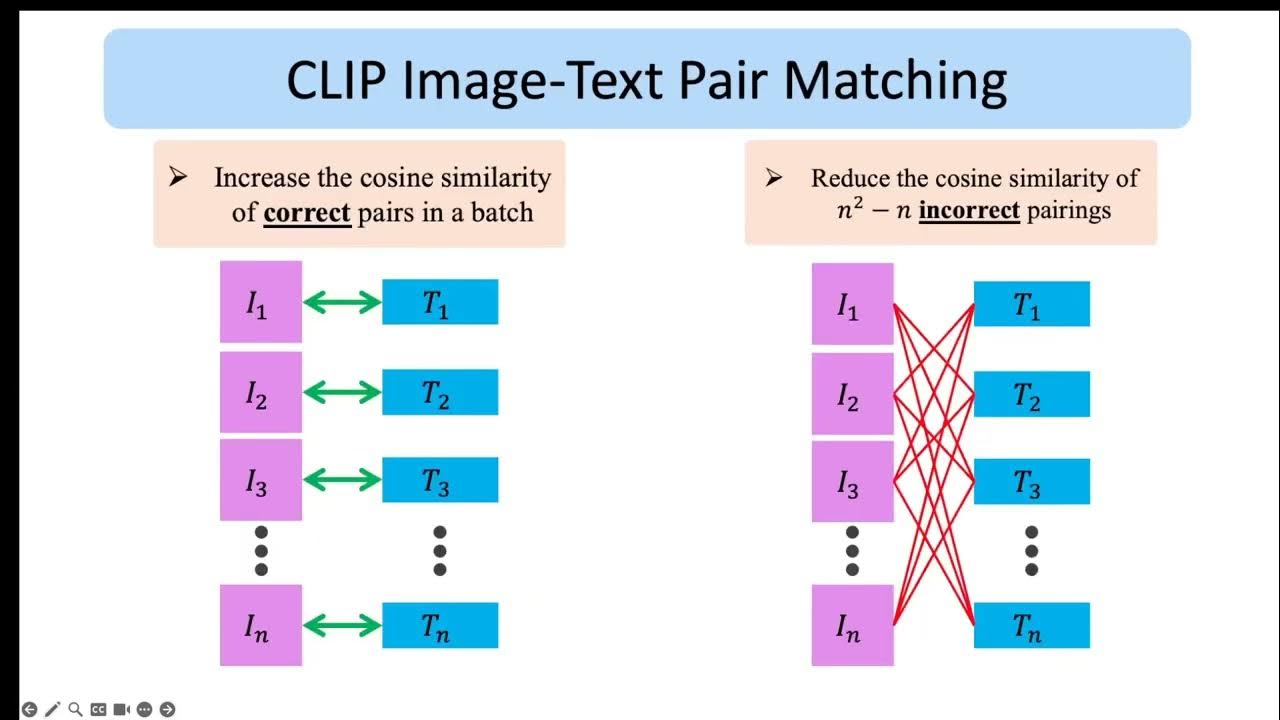

- 🤖 The use of transformers helps to generate joint embeddings, which allow for more accurate similarity detection between images and corresponding text descriptions.

- 🛍️ The script highlights that product descriptions can differ significantly, even if the images are of the same product, making this process vital for reducing inconsistencies.

- 💼 It also mentions integrating AI-driven systems into e-commerce platforms to process product data faster and more efficiently.

- 🔔 The video encourages viewers to subscribe for more content on AI, neural networks, and multimodal search systems for enhanced business efficiency.

Q & A

What is the main focus of the video?

- The video focuses on performing multimodal similarity search, particularly over databases of images and text descriptions.

What is multimodal similarity search?

- Multimodal similarity search is the process of finding similar images and text descriptions within a database, using models that compare both image and text data.
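
A minimal sketch of the "find the most similar items" step, assuming each item has already been turned into an embedding vector. The vectors below are hypothetical toy values, not real MobileNet or SBERT outputs. The sketch uses brute-force cosine similarity; the names `cosine_similarity` and `top_k` are illustrative, not from the video.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], database: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Brute-force search: score every stored item against the query, keep the k best."""
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in database.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-d vectors standing in for real image or text embeddings (hypothetical values).
database = {
    "red_shirt_a": [0.9, 0.1, 0.0],
    "red_shirt_b": [0.8, 0.2, 0.1],
    "blue_mug": [0.0, 0.1, 0.9],
}
results = top_k([1.0, 0.1, 0.0], database, k=2)
print(results)
```

Scoring every item is fine for a sketch. For a catalogue of tens of thousands of items, a vectorized or approximate nearest-neighbour search would usually replace the Python loop.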

How is multimodal similarity useful in e-commerce?

- In e-commerce, multimodal similarity helps identify duplicate or similar products even when their images and descriptions differ, which keeps product listings organized and prevents redundant postings.
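
One simple way to turn similarity scores into duplicate groups is to threshold them. The sketch below assumes that approach; the summary does not say which rule the video uses. Each posting's matches are all postings (itself included) whose cosine similarity meets a cutoff. The threshold of 0.9 and the posting vectors are hypothetical.

```python
import math


def normalize(vec: list[float]) -> list[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def find_matches(embeddings: dict[str, list[float]], threshold: float = 0.9) -> dict[str, list[str]]:
    """For each posting, list every posting (itself included) whose cosine similarity >= threshold."""
    unit = {pid: normalize(vec) for pid, vec in embeddings.items()}
    matches = {}
    for pid, u in unit.items():
        matches[pid] = sorted(
            other for other, w in unit.items()
            if sum(a * b for a, b in zip(u, w)) >= threshold
        )
    return matches


# Hypothetical embeddings: posting_2 is a near-duplicate of posting_1.
postings = {
    "posting_1": [1.0, 0.0],
    "posting_2": [0.95, 0.05],
    "posting_3": [0.0, 1.0],
}
print(find_matches(postings))
```

The threshold trades precision against recall. A higher value misses re-photographed duplicates, and a lower one merges different products that merely look alike.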

Which platform is mentioned as using multimodal similarity search?

- Shopee, an e-commerce platform in Southeast Asia, is mentioned as using multimodal similarity search to handle product duplication; the video works with Shopee's product dataset from Kaggle.

How does the model handle different images of the same product?

- The model can recognize different images of the same product by comparing both their visual features and their text descriptions, even when the images or descriptions vary.
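
Using both modalities lets each cover the other's blind spots. The same photo can carry a different title, and the same title can carry a different photo. A minimal late-fusion sketch, assuming image-based and text-based matches have already been computed separately, takes their union: a posting counts as a match if either modality flags it. Union is a common choice but an assumption here. The match sets are hypothetical.

```python
def combine_matches(
    image_matches: dict[str, set[str]],
    text_matches: dict[str, set[str]],
) -> dict[str, set[str]]:
    """Late fusion by union: a posting matches if EITHER modality flags it."""
    all_ids = image_matches.keys() | text_matches.keys()
    return {
        pid: image_matches.get(pid, set()) | text_matches.get(pid, set())
        for pid in all_ids
    }


# Hypothetical per-modality results, e.g. from thresholding image and text similarities.
image_matches = {"p1": {"p1", "p2"}, "p2": {"p1", "p2"}, "p3": {"p3"}}  # p1 and p2 share a photo
text_matches = {"p1": {"p1", "p3"}, "p2": {"p2"}, "p3": {"p1", "p3"}}   # p1 and p3 share a title
combined = combine_matches(image_matches, text_matches)
print(combined)
```

Union favours recall. An intersection, or a weighted average of the two similarity scores before thresholding, would be stricter alternatives.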

What type of neural network is suggested for image and text similarity search?

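- A transformer model is suggested for extracting embeddings, with product similarity measured in a joint embedding space that covers both images and text. Per the video's title, the transformer (SBERT) handles the text, while MobileNet, a CNN, handles the images.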
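
The summary does not say how the joint space is built. One simple option, assumed here, combines embeddings from separate image and text models by L2-normalizing each vector and concatenating them. Rescaling the result by 1/√2 keeps the joint vector at unit length. The dot product of two joint vectors then equals the average of the image cosine and the text cosine. This compares image with image and text with text; it is not a cross-modal space trained to align pictures with captions. The toy 2-d vectors are hypothetical.

```python
import math


def l2_normalize(vec: list[float]) -> list[float]:
    """Return vec scaled to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def joint_embedding(image_vec: list[float], text_vec: list[float]) -> list[float]:
    """Concatenate unit-length image and text vectors, then rescale by 1/sqrt(2)
    so the joint vector is also unit length."""
    combined = l2_normalize(image_vec) + l2_normalize(text_vec)
    return [x / math.sqrt(2) for x in combined]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


# Same photo, unrelated titles (hypothetical values).
a = joint_embedding([1.0, 0.0], [0.0, 1.0])
b = joint_embedding([1.0, 0.0], [1.0, 0.0])
print(dot(a, b))  # average of image cosine (1.0) and text cosine (0.0), i.e. about 0.5
```

Equal weighting is itself a choice. Scaling one half more heavily before concatenating would let image or text similarity dominate.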

How many images are mentioned in the training dataset?

- The training dataset contains approximately 30,000 images, which are used to train the model.

What is the importance of embeddings in multimodal search?

- Embeddings represent both text and images as vectors, which lets the model measure how similar they are; this is crucial for multimodal search tasks.

What other platforms or datasets are mentioned for multimodal similarity?

- Amazon and other similar datasets are mentioned as examples where multimodal similarity search models can be applied to find related images and text.

What tools or libraries are referenced for model implementation?

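- The video mentions transformer libraries and convolutional neural networks for implementing and training the models. Per its title, the implementation is in Python and uses TensorFlow's MobileNet (a CNN) for images and an SBERT sentence-transformer model from Hugging Face for text.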
