How do Multimodal AI models work? Simple explanation

Summary

TL;DR: This video explains how multimodal AI works, focusing on text-to-image models like DALL-E that combine text and image processing. It highlights the use of diffusion models and embedding techniques to create images guided by text input. The discussion extends to interfaces like ChatGPT, which can handle multiple modalities and let users request images or audio based on text descriptions. The video also clarifies the distinction between the underlying AI models and the user interface, showing how these technologies work together to produce outputs across different forms of data.

Takeaways

- 😀 Multimodal AI can process and generate various data types, including text, images, and audio, enhancing user interactions.

- 🎨 Text-to-image models such as DALL-E 2 and DALL-E 3 use diffusion models, which start from random noise and turn it into an image step by step, guided by the text description.

- 🔗 Embedding models convert text and images into vectors that capture their meaning, placing matching text and images close together in a shared vector space.

- 📊 Embedding models such as CLIP are trained by maximizing the cosine similarity between matching text and image pairs and minimizing it for non-matching pairs.

- 🖼️ ChatGPT can accept multiple input modalities (text, images, audio) and generate outputs in these forms, requiring a complex internal pipeline.

- 🤔 Distinguishing user intent is crucial, as requests can be interpreted literally or metaphorically, complicating the model's response.

- 🔄 Multimodal interfaces require an underlying framework that integrates several models, such as Whisper for speech-to-text and DALL-E for image generation.

- 📚 The learning process for these models may include feedback mechanisms that help the model understand user preferences for output modalities.

- 🌐 Natural language plays a central role in linking the different modalities, serving as the common factor in multimodal AI.

- 📈 Ongoing advancements in AI technologies continue to refine the capabilities and interactions of multimodal systems.

Q & A

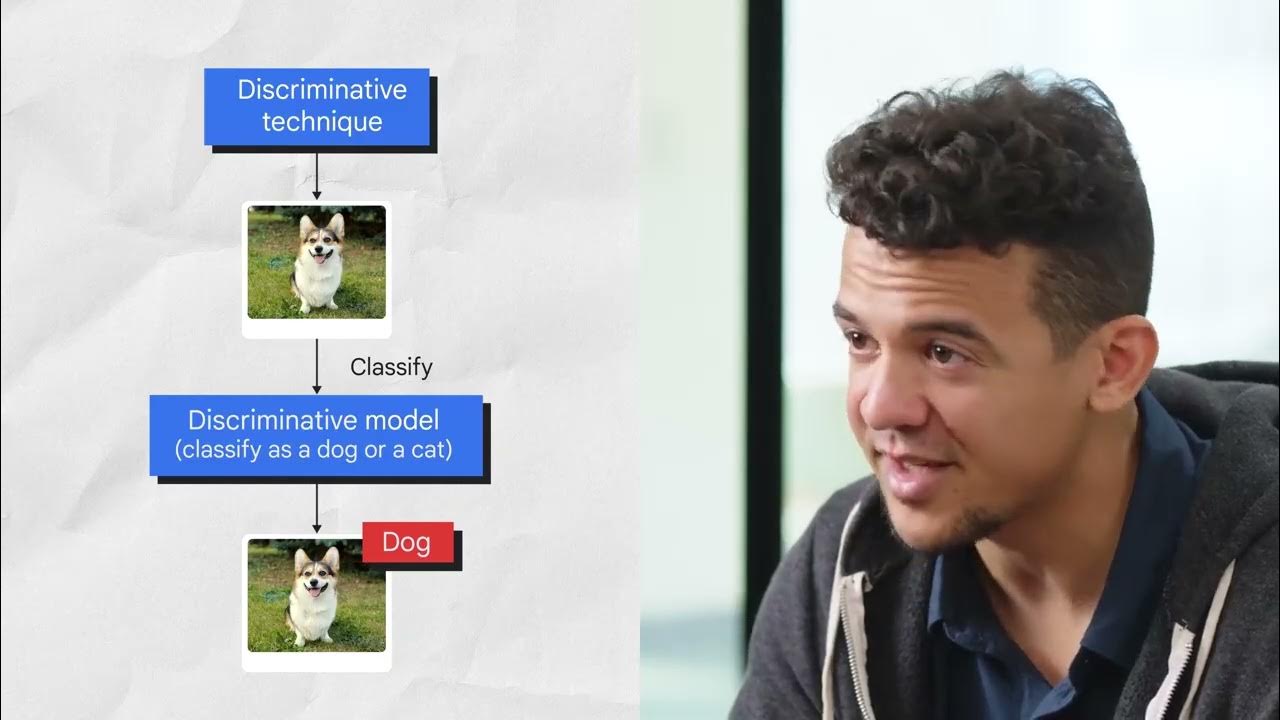

What is multimodality in AI?

- Multimodality refers to the ability of AI models to process and generate different types of data, such as text, audio, and images.

How do text-to-image models like DALL-E generate images?

- Diffusion-based text-to-image models (DALL-E 2 and DALL-E 3 among them) start from pure Gaussian noise and remove it step by step. At each step the text input guides the denoising, steering the result toward an image that matches the description.
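The noise-to-image process can be sketched with the standard DDPM equations plus classifier-free guidance, one common way of steering a diffusion model with a text condition. This is a minimal NumPy sketch under stated assumptions, not DALL-E's code: the linear noise schedule and the step count are illustrative choices, and `toy_noise_predictor` is a hypothetical stand-in for a trained neural network. It is handed the target image directly through `cond`, which plays the role of the text prompt.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (an assumption; real models use various schedules)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)  # share of the original signal variance left after t steps
    return betas, alphas, alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Forward process: noise a clean image x0 directly to step t.
    Returns the noisy image and the noise used (the network's training target)."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return x_t, eps

def toy_noise_predictor(x_t, t, alpha_bars, cond):
    """HYPOTHETICAL stand-in for a trained, text-conditioned network.
    `cond` plays the role of the prompt: here it is simply the image the prompt
    describes. With cond=None (no prompt) it aims at an all-zero image."""
    target = np.zeros_like(x_t) if cond is None else cond
    return (x_t - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])

def sample(cond, shape, guidance_scale=1.0, num_steps=1000, seed=0):
    """Reverse process: start from pure Gaussian noise and denoise step by step."""
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    x = rng.standard_normal(shape)  # pure Gaussian noise
    for t in reversed(range(num_steps)):
        eps_uncond = toy_noise_predictor(x, t, alpha_bars, None)
        eps_cond = toy_noise_predictor(x, t, alpha_bars, cond)
        # Classifier-free guidance: move the noise estimate toward the prompt's.
        eps = eps_uncond + guidance_scale * (eps_cond - eps_uncond)
        # DDPM mean of x_{t-1} given x_t and the predicted noise
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Add fresh noise on every step except the last one
            x = x + np.sqrt(betas[t]) * rng.standard_normal(shape)
    return x

target = np.linspace(-1.0, 1.0, 64).reshape(8, 8)  # stand-in for "the image the prompt describes"
image = sample(target, target.shape)
print(np.allclose(image, target))  # True
```

With `guidance_scale=1.0` the toy sampler ends on the conditioning target. With a larger scale it overshoots to `guidance_scale * target`, because the unconditional branch of this toy aims at an all-zero image.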

What role do embedding models play in multimodal AI?

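- Embedding models convert text and images into vectors that capture their meanings. Because both modalities land in the same vector space, a caption and an image that mean the same thing end up close together, which lets the AI relate one modality to the other.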

How does CLIP contribute to training embedding models?

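- CLIP (Contrastive Language-Image Pre-training) trains a text encoder and an image encoder together. It maximizes the cosine similarity between text and image vectors that represent the same concept and minimizes it for pairs that do not match, so both modalities map into the same meaning space.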
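The CLIP objective described above is a symmetric contrastive (cross-entropy) loss over a batch of matching text-image pairs. The NumPy sketch below computes it for embeddings that are assumed to come from some text and image encoders, which are not shown. The random vectors in the usage lines and the fixed temperature of 0.07 are illustrative assumptions; CLIP itself learns its temperature during training.

```python
import numpy as np

def l2_normalize(v, axis=-1):
    """Scale each row to unit length so dot products become cosine similarities."""
    return v / np.linalg.norm(v, axis=axis, keepdims=True)

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def clip_contrastive_loss(text_emb, image_emb, temperature=0.07):
    """Symmetric cross-entropy over a batch of N (text, image) pairs.
    Row i of text_emb and row i of image_emb are assumed to describe the same thing."""
    t = l2_normalize(text_emb)
    i = l2_normalize(image_emb)
    logits = (t @ i.T) / temperature  # N x N matrix of scaled cosine similarities
    idx = np.arange(len(logits))      # the correct match for row k is column k
    loss_text_to_image = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_image_to_text = -log_softmax(logits, axis=0)[idx, idx].mean()
    return (loss_text_to_image + loss_image_to_text) / 2

rng = np.random.default_rng(0)
image_emb = rng.standard_normal((4, 16))                    # pretend image-encoder outputs
text_emb = image_emb + 0.01 * rng.standard_normal((4, 16))  # captions that match row by row
print(clip_contrastive_loss(text_emb, image_emb))        # smaller: each text sits next to its own image
print(clip_contrastive_loss(text_emb[::-1], image_emb))  # larger: every text is paired with the wrong image
```

Minimizing this loss pulls each diagonal (matching) similarity up relative to the rest of its row and column, which is the "maximize for matches, minimize for non-matches" behaviour described above.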

What is the significance of cosine similarity in training?

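- Cosine similarity is the cosine of the angle between two vectors: 1 when they point in the same direction, 0 when they are orthogonal, and -1 when they point in opposite directions. Training uses it to pull together the text and image vectors for the same concept and push apart those for different concepts.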
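The definition is short enough to write out directly. This minimal NumPy sketch computes cos θ = (a · b) / (|a| |b|) for two vectors; the example vectors are arbitrary and only illustrate the three landmark values. Scaling a vector does not change the result, which is why the first pair scores as a perfect match even though the vectors have different lengths.

```python
import numpy as np

def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (|a| |b|); ranges from -1 to 1 and ignores vector length."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

print(cosine_similarity([1, 0], [2, 0]))    # same direction
print(cosine_similarity([1, 0], [0, 3]))    # orthogonal
print(cosine_similarity([1, 1], [-1, -1]))  # opposite directions
```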

How do multimodal interfaces like ChatGPT differ from traditional models?

- Multimodal interfaces can accept and generate multiple types of data, while traditional models typically handle only one modality at a time.

What happens when a user requests a specific output in ChatGPT?

- The request may involve several models. For instance, audio input is transcribed to text by Whisper, the transcript is interpreted by the LLM, and the resulting text prompt is then used to generate an image with DALL-E 3.
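The chain of separate models described above can be sketched as a small orchestration class. This shows only the chaining structure: the class name, the field names and the stub lambdas are hypothetical, no real model is called, and OpenAI's actual internal pipeline is not public. In a real system each stage would wrap a speech-to-text model (such as Whisper), an LLM, and an image model (such as DALL-E 3).

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class VoiceToImagePipeline:
    """Hypothetical orchestration layer; each stage is an injected callable."""
    transcribe: Callable[[bytes], str]      # audio -> text (speech-to-text model)
    write_prompt: Callable[[str], str]      # user request -> image prompt (LLM)
    generate_image: Callable[[str], bytes]  # prompt -> image data (image model)

    def run(self, audio: bytes) -> dict:
        text = self.transcribe(audio)
        prompt = self.write_prompt(text)
        image = self.generate_image(prompt)
        # Keep the intermediate text so the UI can show what each stage produced
        return {"transcript": text, "prompt": prompt, "image": image}

# Usage with stand-in functions (no real models are called here).
pipeline = VoiceToImagePipeline(
    transcribe=lambda audio: "draw a red fox in the snow",
    write_prompt=lambda text: f"A detailed illustration: {text}",
    generate_image=lambda prompt: prompt.encode("utf-8"),
)
result = pipeline.run(b"\x00\x01")
print(result["prompt"])  # A detailed illustration: draw a red fox in the snow
```

Passing the stages in as callables keeps the orchestration separate from any particular model, which mirrors the video's point that the interface and the underlying models are different things.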

Why is text considered the common factor in multimodal models?

- Text acts as a bridge between modalities because it is expressive enough to convey complex meanings, and those meanings can then guide the output in another modality.

What challenge arises from the multiple modalities in ChatGPT?

- The model must decide which output modality the user wants. Requests are often ambiguous ("paint a picture of your weekend" might call for an image or for a vivid text description), so the model has to infer the user's intent.
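Intent inference is hard to show faithfully in a few lines, so the sketch below is a deliberately naive, hypothetical stand-in: a keyword router that picks an output modality from cue words and falls back to text. The `Modality` enum, the `CUES` table and `route` are illustrative names, not part of ChatGPT; in practice the language model itself presumably makes this decision from the full conversation.

```python
from enum import Enum

class Modality(Enum):
    TEXT = "text"
    IMAGE = "image"
    AUDIO = "audio"

# Hypothetical cue words; checked in this order (image first, then audio).
CUES = {
    Modality.IMAGE: ("draw", "picture", "image", "illustrate", "sketch"),
    Modality.AUDIO: ("read aloud", "say it out loud", "speak", "audio"),
}

def route(request: str) -> Modality:
    """Pick an output modality from literal cue words; fall back to text."""
    lowered = request.lower()
    for modality, cues in CUES.items():
        if any(cue in lowered for cue in cues):
            return modality
    return Modality.TEXT

for request in ["Draw me a cat", "What is a cat?",
                "Please read aloud this poem",
                "Paint a picture of your weekend plans in words"]:
    print(f"{request!r} -> {route(request).value}")
```

The last request shows the weakness: "paint a picture ... in words" is meant metaphorically, yet a literal keyword match sends it to image generation. That literal-versus-metaphorical ambiguity is why the video stresses that the model has to infer what the user actually wants.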

What is the relationship between the LLM and the UI in ChatGPT?

- The LLM is the underlying language model, while the UI is the application through which users interact with the model, allowing for input and output across various modalities.
