4 2 Filtering

Summary

TLDR: The video explains image filtering through convolution, a key mathematical operation in image processing. It describes how convolution applies a mask (filter) to each pixel and its neighbors to compute the values of a processed image. The video covers two families of filters: low-pass filters for smoothing and high-pass filters for edge detection. It distinguishes between gradient images, which show how pixel intensity changes, and edge images, which highlight contours. It emphasizes using gradient images to detect interest points in image analysis.

Takeaways

- 📷 Image edge detection typically begins with filtering through a segmented image.

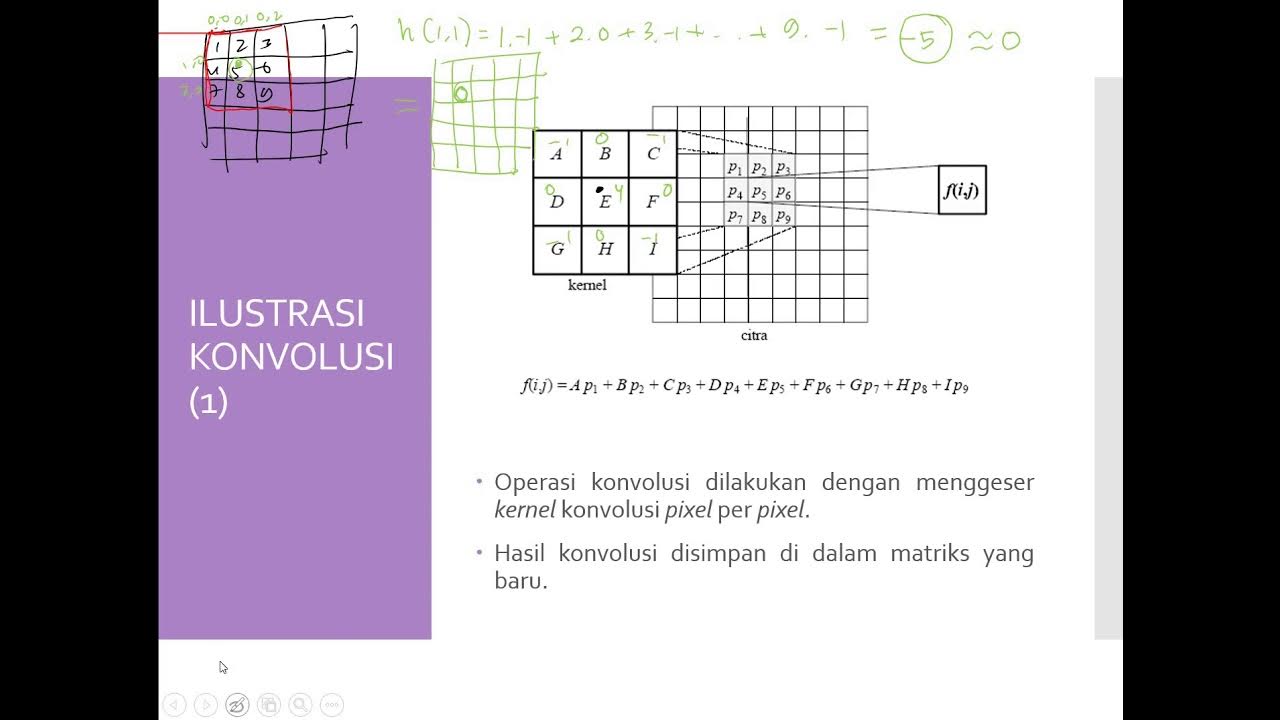

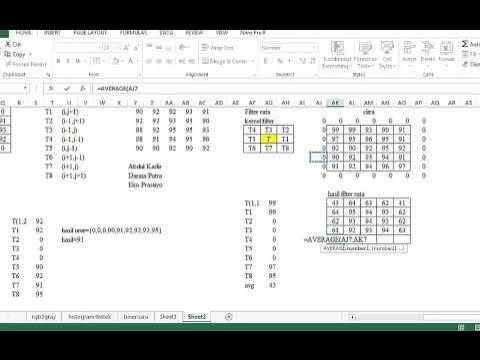

- 🧮 Convolution is a key mathematical operation used for image processing by considering a pixel and its neighbors.

- 🎛️ A mask or filter is applied over the image to modify pixel values, leading to a processed image.

- 📉 Low-pass filters smooth images, often using a Gaussian mask that helps reduce noise and retain key image information.

- 🔍 High-pass filters emphasize high frequencies, helping detect salient edges in an image, using masks like Sobel or Prewitt.

- 🖥️ Efficient convolution can be performed by using optimized masks and avoiding redundant multiplications.

- 🧊 The dynamic range of the image is preserved by normalizing the sum of the filter weights, like dividing by 16 for certain masks.

- 🎚️ Vertical and horizontal edges are detected by comparing pixel neighbors across axes, often using specific masks like Sobel or Prewitt.

- 🧭 A gradient image shows how an image changes across its pixels, using partial derivatives in both x and y directions.

- 🏞️ Gradient images represent how pixels differ from their neighbors, while edge images apply thresholds to identify strong edges.

Q & A

What is the purpose of applying a convolution to an image?

- The purpose of applying a convolution is to process each pixel of the image by taking into account its neighboring pixels and applying a filter (mask). This operation can be used for tasks such as edge detection or blurring.

What is the difference between the original image and the processed image during convolution?

- The original image remains untouched during convolution, while the processed image is where the result of applying the convolution mask is written. The mask is applied to the original image and used to compute values for the processed image.

How does the convolution process work on a pixel level?

- In convolution, the mask is centered on each pixel in turn. At each position, every mask weight is multiplied by the pixel underneath it, and the products are summed to give the new value of that pixel in the processed image.
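The multiply-and-sum step above can be sketched in plain Python. This is a minimal illustration rather than the video's implementation: the function name `apply_mask` is hypothetical, border pixels are simply copied (one of several possible border policies), and the mask is not flipped before sliding, which strict convolution would do and which makes no difference for symmetric masks.

```python
def apply_mask(image, mask):
    """Slide a square, odd-sized mask over `image` and return a new image.

    Assumption: border pixels, where the mask would hang off the image,
    are copied unchanged.
    """
    rows, cols = len(image), len(image[0])
    r = len(mask) // 2
    # Write into a separate output so the original image stays untouched.
    out = [row[:] for row in image]
    for y in range(r, rows - r):
        for x in range(r, cols - r):
            total = 0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    total += mask[dy + r][dx + r] * image[y + dy][x + dx]
            out[y][x] = total
    return out


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12]]

# A mask with a single 1 in the center leaves every pixel as it was.
identity = [[0, 0, 0],
            [0, 1, 0],
            [0, 0, 0]]
unchanged = apply_mask(image, identity)
```

Reading from `image` and writing to `out` mirrors the distinction between the original and the processed image: every output value is computed from unmodified input pixels.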

What is a low-pass filter, and how is it used in image processing?

- A low-pass filter, often implemented with a Gaussian mask, smooths an image by averaging each pixel with its neighbors, which suppresses high-frequency noise. The cost is a slight blur that also softens fine details.
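As a small illustration of smoothing, the sketch below uses the common 3x3 approximation of a Gaussian whose weights sum to 16. That this is the exact mask used in the video is an assumption, and `smooth_pixel` is a hypothetical helper name.

```python
# 3x3 Gaussian-like mask (assumed); the weights sum to 16.
GAUSS_3X3 = [[1, 2, 1],
             [2, 4, 2],
             [1, 2, 1]]


def smooth_pixel(patch):
    """Weighted average of a 3x3 patch: multiply, sum, then divide by 16."""
    total = sum(GAUSS_3X3[i][j] * patch[i][j]
                for i in range(3) for j in range(3))
    return total / 16


flat = [[100, 100, 100],
        [100, 100, 100],
        [100, 100, 100]]
spike = [[100, 100, 100],
         [100, 180, 100],
         [100, 100, 100]]

flat_result = smooth_pixel(flat)    # 100.0: a uniform region is unchanged
spike_result = smooth_pixel(spike)  # 120.0: the outlier is pulled toward its neighbors
```

The isolated bright pixel drops from 180 to 120, which is the noise reduction, and the same averaging is what blurs genuine fine detail.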

How does a high-pass filter detect edges in an image?

- A high-pass filter detects edges by using a mask whose weights sum to zero. It focuses on the differences between neighboring pixels, highlighting areas where pixel values change abruptly, which are likely to be edges.
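The effect of zero-sum weights can be checked on two small patches. The mask below is a Laplacian-style example chosen purely for illustration; it is not taken from the video, and `response` is a hypothetical helper.

```python
# Illustrative zero-sum mask (assumed, Laplacian-style).
HIGH_PASS = [[0, -1, 0],
             [-1, 4, -1],
             [0, -1, 0]]


def response(patch, mask):
    """Multiply a 3x3 patch by a 3x3 mask element-wise and sum the products."""
    return sum(mask[i][j] * patch[i][j] for i in range(3) for j in range(3))


flat = [[10, 10, 10],
        [10, 10, 10],
        [10, 10, 10]]
step = [[10, 10, 50],
        [10, 10, 50],
        [10, 10, 50]]

flat_response = response(flat, HIGH_PASS)  # 0: no change, so no output
step_response = response(step, HIGH_PASS)  # -40: an abrupt change is picked up
```

Because the weights cancel, any constant region maps to zero regardless of its brightness, so only intensity changes survive.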

What is the difference between a vertical and a horizontal edge detection mask?

- A vertical edge detection mask identifies differences between left and right neighboring pixels, while a horizontal edge detection mask finds differences between top and bottom neighboring pixels. Each highlights edges in its respective direction.
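A short sketch with the standard Prewitt masks shows how each direction responds. The patch values and the helper name `response` are made up for illustration.

```python
# Prewitt masks: right-minus-left and bottom-minus-top differences.
VERTICAL_EDGES = [[-1, 0, 1],
                  [-1, 0, 1],
                  [-1, 0, 1]]
HORIZONTAL_EDGES = [[-1, -1, -1],
                    [0, 0, 0],
                    [1, 1, 1]]


def response(patch, mask):
    """Multiply a 3x3 patch by a 3x3 mask element-wise and sum the products."""
    return sum(mask[i][j] * patch[i][j] for i in range(3) for j in range(3))


# Dark on the left, bright on the right: a vertical edge.
vertical_edge = [[10, 10, 50],
                 [10, 10, 50],
                 [10, 10, 50]]
# Dark on top, bright at the bottom: a horizontal edge.
horizontal_edge = [[10, 10, 10],
                   [10, 10, 10],
                   [50, 50, 50]]

v_on_v = response(vertical_edge, VERTICAL_EDGES)      # 120
h_on_v = response(vertical_edge, HORIZONTAL_EDGES)    # 0
v_on_h = response(horizontal_edge, VERTICAL_EDGES)    # 0
h_on_h = response(horizontal_edge, HORIZONTAL_EDGES)  # 120
```

Each mask responds strongly to its own edge orientation and gives zero for the other one.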

What is the Sobel filter used for in edge detection?

- The Sobel filter is used to detect both vertical and horizontal edges, with one mask for each direction. It weights pixels according to their distance from the center of the mask, placing more emphasis on closer pixels, which gives smoother results.
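One way to see the Sobel weighting is that the mask is the product of a 1-2-1 smoothing vector and a difference vector. The sketch below builds the left-right Sobel mask that way and checks that a two-pass evaluation gives the same result as applying the full mask. Presenting this as the efficiency trick the video has in mind is an assumption, and the names are hypothetical.

```python
SMOOTH = [1, 2, 1]  # weights the center row more than the rows above and below
DIFF = [-1, 0, 1]   # right neighbor minus left neighbor

# Each row of the mask is DIFF scaled by one SMOOTH weight.
SOBEL_X = [[s * d for d in DIFF] for s in SMOOTH]


def direct(patch):
    """Apply the full 3x3 mask in one pass."""
    return sum(SOBEL_X[i][j] * patch[i][j] for i in range(3) for j in range(3))


def two_pass(patch):
    """Difference along each row first, then smooth the three row results."""
    row_diffs = [sum(d * p for d, p in zip(DIFF, row)) for row in patch]
    return sum(s * r for s, r in zip(SMOOTH, row_diffs))


patch = [[10, 20, 50],
         [10, 30, 60],
         [10, 40, 90]]

direct_result = direct(patch)      # 220
two_pass_result = two_pass(patch)  # 220
```

On a full image, the row differences can be computed once and reused by vertically adjacent output pixels, which is where the savings would come from.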

How does the gradient image differ from the edge image?

- The gradient image represents how much each pixel differs from its neighbors, showing changes in intensity across the image. The edge image, on the other hand, is a binary image that highlights only the most significant changes (edges) by applying a threshold to the gradient image.
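Going from a gradient image to an edge image is a single thresholding step, sketched below. The gradient values and the threshold of 50 are invented for illustration, and `edge_image` is a hypothetical name.

```python
def edge_image(gradient, threshold):
    """Return a binary image: 1 where the gradient reaches the threshold, else 0."""
    return [[1 if g >= threshold else 0 for g in row] for row in gradient]


# Made-up gradient magnitudes for a 2x4 image.
gradient = [[0, 5, 120, 4],
            [2, 90, 130, 3]]

edges = edge_image(gradient, threshold=50)
# edges == [[0, 0, 1, 0],
#           [0, 1, 1, 0]]
```

The gradient image keeps the graded strength of every change, while the edge image discards everything below the threshold, so the choice of threshold decides which changes count as edges.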

Why is normalization important when applying a convolution mask?

- Normalization ensures that the result of the convolution stays within the dynamic range of the image (e.g., 0 to 255 for an 8-bit image). Without normalization, the resulting values could go beyond the allowed range, distorting the image.
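The following sketch shows the range problem on a bright, uniform patch. It assumes a 1-2-1 smoothing mask whose weights sum to 16; `normalize` and `response` are hypothetical helpers.

```python
def normalize(mask):
    """Divide every weight by the sum of the weights.

    Masks that sum to zero (high-pass masks) are returned unchanged,
    since they cannot be normalized this way.
    """
    total = sum(sum(row) for row in mask)
    if total == 0:
        return mask
    return [[w / total for w in row] for row in mask]


def response(patch, mask):
    """Multiply a 3x3 patch by a 3x3 mask element-wise and sum the products."""
    return sum(mask[i][j] * patch[i][j] for i in range(3) for j in range(3))


mask = [[1, 2, 1],
        [2, 4, 2],
        [1, 2, 1]]
bright = [[200, 200, 200],
          [200, 200, 200],
          [200, 200, 200]]

raw = response(bright, mask)                # 3200, far above the 8-bit maximum of 255
scaled = response(bright, normalize(mask))  # 200.0, back inside the 0 to 255 range
```

Dividing by the sum of the weights turns the mask into a weighted average, so the output can never exceed the brightest input pixel.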

What is the gradient of an image, and how is it calculated?

- The gradient of an image shows how pixel values change across the image. It is calculated by combining the partial derivatives of the image in both the x and y directions. The gradient magnitude is obtained by squaring these derivatives, summing them, and taking the square root.
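The magnitude computation described above can be sketched as follows, assuming the x and y derivative images have already been produced (for example with a pair of edge masks). The input values are made up, and `gradient_magnitude` is a hypothetical name.

```python
import math


def gradient_magnitude(gx, gy):
    """Combine x and y derivative images pixel by pixel: sqrt(gx^2 + gy^2)."""
    return [[math.hypot(a, b) for a, b in zip(row_x, row_y)]
            for row_x, row_y in zip(gx, gy)]


# Made-up derivative images for a 2x2 image.
gx = [[3, 0],
      [0, 6]]
gy = [[4, 0],
      [5, 8]]

magnitude = gradient_magnitude(gx, gy)
# magnitude == [[5.0, 0.0],
#               [5.0, 10.0]]
```

A pixel gets a large magnitude if the image changes strongly in either direction, which is why the combined gradient image responds to edges of any orientation.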
