What are Diffusion Models?

Summary

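TLDR: Diffusion models are an emerging class of generative models. They model a forward process that gradually adds Gaussian noise to a clean image until it becomes pure noise, then learn to reverse that process, removing noise step by step to recover an image. They perform strongly in image generation and in conditional settings, and on some tasks they outperform generative adversarial networks (GANs). The video explains the basic mechanics of diffusion models: the forward diffusion process, the reverse denoising process, and how the model is trained by optimizing a variational lower bound. It also discusses how to apply diffusion models to tasks such as conditional generation and image inpainting.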

Takeaways

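- 🌟 Diffusion models are an emerging approach to generative modeling and have made notable progress in image generation in particular.

- 🚀 A diffusion model turns an image into a pure noise sample by adding Gaussian noise step by step, then learns the reverse process to recover an image.

- 🔄 The forward diffusion process models data gradually turning into noise, while the reverse process tries to recover data from noise.

- 📈 The forward noising process is described by a Markov chain: the distribution at each step depends only on the sample from the previous step.

- 🎯 In the reverse process, the model learns denoising steps that gradually guide a sample back toward the data manifold, producing plausible samples.

- 🔧 During training, the goal is to maximize a lower bound (the variational lower bound) rather than to directly maximize the density the model assigns to the data.

- 🔄 Diffusion models can generate samples conditionally, for example from a class label or a text description.

- 🖼️ Diffusion models also do well at image inpainting, where a model trained specifically for the task fills in the missing parts of an image.

- 📊 Compared with other generative models such as GANs, diffusion models perform better on some tasks.

- 🛠️ Training depends on the model accurately learning the noise introduced at each step of the forward process.

- 🔗 Diffusion models are closely connected to the probability flow ODE, which allows the log-likelihood to be approximated by numerical integration.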
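The takeaway about learning the noise at each step can be made concrete with a small sketch. The code below assumes the simplified noise-prediction loss popularized by DDPM, which this summary does not name explicitly. The linear `betas` schedule, the function names, and the `predict_noise` placeholder (which stands in for a trainable network) are all hypothetical.

```python
import numpy as np

def predict_noise(x_t, t):
    """Hypothetical placeholder for a trainable noise-prediction network."""
    return np.zeros_like(x_t)

def simple_loss(x0, betas, rng):
    """One Monte Carlo estimate of an assumed DDPM-style noise-prediction loss."""
    alpha_bars = np.cumprod(1.0 - betas)     # cumulative signal retention
    t = rng.integers(0, len(betas))          # pick a random timestep
    eps = rng.standard_normal(x0.shape)      # the noise the model should predict
    # Closed-form sample of the noised input at step t
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return np.mean((eps - predict_noise(x_t, t)) ** 2)

rng = np.random.default_rng(0)
betas = np.linspace(1e-4, 0.02, 100)         # assumed linear noise schedule
loss = simple_loss(np.ones((4, 4)), betas, rng)
```

In a real setup the placeholder would be a neural network and the loss would be minimized with gradient descent over many images and timesteps.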

Q & A

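What is a diffusion model?

- A diffusion model is a generative model that learns a data distribution by modeling how a clean sample is gradually corrupted with noise until it becomes pure noise, and then reversing that process to remove the noise step by step and recover a sample.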

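What have diffusion models achieved in image generation?

- Diffusion models have been notably successful in image generation, outperforming other kinds of generative models such as GANs on some tasks. For example, recent diffusion models surpass GANs on perceptual quality metrics and perform well in conditional settings such as text-to-image generation, inpainting, and image manipulation.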

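How is the forward process of a diffusion model defined?

- The forward process is defined by a Markov chain in which the distribution at each step depends only on the sample from the previous step. The chain gradually adds noise to the image and ends in a pure noise distribution.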
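As a minimal sketch of such a chain, the code below assumes the common Gaussian transition with mean `sqrt(1 - beta_t) * x_{t-1}` and variance `beta_t`. The linear schedule, its endpoints, the 1000 steps, and the toy 8x8 "image" are illustrative assumptions, not values given in the video summary.

```python
import numpy as np

def forward_diffusion(x0, betas, rng):
    """Run the forward Markov chain and return every intermediate sample."""
    xs = [x0]
    x = x0
    for beta in betas:
        # Each step depends only on the previous sample (Markov property)
        noise = rng.standard_normal(x.shape)
        x = np.sqrt(1.0 - beta) * x + np.sqrt(beta) * noise
        xs.append(x)
    return xs

rng = np.random.default_rng(0)
x0 = np.ones((8, 8))                     # toy stand-in for a clean image
betas = np.linspace(1e-4, 0.02, 1000)    # assumed linear noise schedule
trajectory = forward_diffusion(x0, betas, rng)

# Fraction of the original signal left in the mean after the last step
signal_left = np.sqrt(np.prod(1.0 - betas))
```

With this schedule `signal_left` is close to zero, which is the sense in which the final sample is approximately pure noise.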

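Why do diffusion models use small steps in the forward process?

- Small forward steps keep the reverse process from being too hard to learn. A small step leaves little uncertainty about where a sample came from, so the model can infer the previous state more accurately.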

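How does the reverse process of a diffusion model work?

- The reverse process is learned. A parameterized reverse Markov chain removes noise step by step, with the goal of returning to the data distribution. It is trained by maximizing a variational lower bound rather than by maximizing the likelihood directly.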
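The reverse chain can be sketched as a sampling loop. This is an illustration under stated assumptions, not the method from the video: it uses the DDPM-style noise-prediction parameterization with step variance `beta_t`, and `predict_noise` is a hypothetical stand-in for a trained network (here it returns zeros, so the output is not a meaningful image).

```python
import numpy as np

def predict_noise(x_t, t):
    """Hypothetical stand-in for a trained noise-prediction network."""
    return np.zeros_like(x_t)

def reverse_sample(shape, betas, rng):
    """Walk the reverse Markov chain from pure noise down to step 0."""
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)       # start from pure Gaussian noise
    for t in reversed(range(len(betas))):
        eps_hat = predict_noise(x, t)
        # Mean of the learned reverse transition (noise-prediction form)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        # No fresh noise is added on the final step
        z = rng.standard_normal(shape) if t > 0 else np.zeros(shape)
        x = mean + np.sqrt(betas[t]) * z  # assumed variance: sigma_t^2 = beta_t
    return x

rng = np.random.default_rng(0)
betas = np.linspace(1e-4, 0.02, 50)      # assumed schedule, shortened for speed
sample = reverse_sample((4, 4), betas, rng)
```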

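What is the training objective of a diffusion model?

- The training objective is to maximize the variational lower bound (also called the evidence lower bound), which is a lower bound on the marginal log-likelihood. It consists of a reconstruction term, which encourages the model to maximize the expected density of the data, and a KL divergence term, which encourages the approximate posterior to stay close to the prior over the latent variables.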
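Written out, the two terms described above take the familiar form below. The symbols ($x$ for data, $z$ for latent variables, $q$ for the approximate posterior, $p_\theta$ for the model) are notation assumed here, since the summary gives no formulas.

$$
\log p_\theta(x) \;\ge\; \mathbb{E}_{q(z \mid x)}\big[\log p_\theta(x \mid z)\big] \;-\; D_{\mathrm{KL}}\big(q(z \mid x)\,\|\,p(z)\big)
$$

For a diffusion model the latents are the noised samples $x_1, \dots, x_T$. In the standard DDPM formulation, which is assumed here rather than taken from the summary, the bound splits into per-step terms:

$$
\log p_\theta(x_0) \;\ge\; \mathbb{E}_q\Big[\log p_\theta(x_0 \mid x_1) \;-\; \sum_{t=2}^{T} D_{\mathrm{KL}}\big(q(x_{t-1} \mid x_t, x_0)\,\|\,p_\theta(x_{t-1} \mid x_t)\big) \;-\; D_{\mathrm{KL}}\big(q(x_T \mid x_0)\,\|\,p(x_T)\big)\Big]
$$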

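How do diffusion models support conditional generation?

- A diffusion model can be made conditional by feeding the conditioning variable (such as a class label or a sentence description) to the model as an extra input during training. At inference time, the model uses that conditioning information to generate samples specific to the condition.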

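How do diffusion models perform on image inpainting?

- Diffusion models have been successful at inpainting. A model fine-tuned specifically for this task can use the full surrounding context to fill in the missing parts of an image more convincingly, which avoids edge artifacts.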

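How are diffusion models similar to variational autoencoders (VAEs)?

- Both can be viewed as latent-variable generative models, and both use a variational lower bound as the training objective. In a diffusion model, however, the forward process is usually fixed, and learning focuses on the reverse process.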

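What does the continuous-time formulation of diffusion models provide?

- The continuous-time formulation gives rise to the so-called probability flow ODE. It allows the log-likelihood to be approximated by numerical integration, which offers a way to estimate density that differs from the variational lower bound.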
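For reference, the probability flow ODE is usually written as follows in the score-based SDE literature. The notation is assumed here, not taken from the summary: the forward process is $dx = f(x, t)\,dt + g(t)\,dw$, and $p_t$ is the marginal density at time $t$.

$$
\frac{dx}{dt} \;=\; \tilde f(x, t) \;=\; f(x, t) \;-\; \tfrac{1}{2}\, g(t)^2\, \nabla_x \log p_t(x)
$$

The log-likelihood then follows from the instantaneous change-of-variables formula, with the integral evaluated numerically along the ODE trajectory:

$$
\log p_0\big(x(0)\big) \;=\; \log p_T\big(x(T)\big) \;+\; \int_0^T \nabla \cdot \tilde f\big(x(t), t\big)\, dt
$$

In practice the unknown score $\nabla_x \log p_t(x)$ is replaced by the trained model's estimate, so the result is an approximation.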

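How are diffusion models related to score-matching models?

- The two are closely connected. The score in a score-matching model is equal, up to a scaling factor, to the noise predicted by a diffusion model. The denoising process in a diffusion model can therefore be viewed as approximately following the gradient of the log-density of the data.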
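The scaling factor can be checked numerically for the conditional Gaussian case. The sketch below assumes the usual closed form of a noised sample, `x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps`. The value of `alpha_bar_t` and the variable names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
alpha_bar_t = 0.3                        # assumed cumulative signal level at step t
x0 = rng.standard_normal(5)              # toy clean sample
eps = rng.standard_normal(5)             # the noise that was added
x_t = np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps

# Score (gradient of the log-density w.r.t. x_t) of the Gaussian
# with mean sqrt(alpha_bar_t) * x0 and variance (1 - alpha_bar_t)
score = -(x_t - np.sqrt(alpha_bar_t) * x0) / (1.0 - alpha_bar_t)

# The same quantity expressed through the noise, with its scaling factor
score_from_noise = -eps / np.sqrt(1.0 - alpha_bar_t)

matches = np.allclose(score, score_from_noise)
```

Under these assumptions the factor is `-1 / sqrt(1 - alpha_bar_t)`, so a network that predicts the noise also yields a score estimate after rescaling.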

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Text-to-GRAPH w/ LGGM: Generative Graph Models

【生成式AI導論 2024】第18講:有關影像的生成式AI (下) — 快速導讀經典影像生成方法 (VAE, Flow, Diffusion, GAN) 以及與生成的影片互動

ISMRM MR Academy - Compressed Sensing in MRI

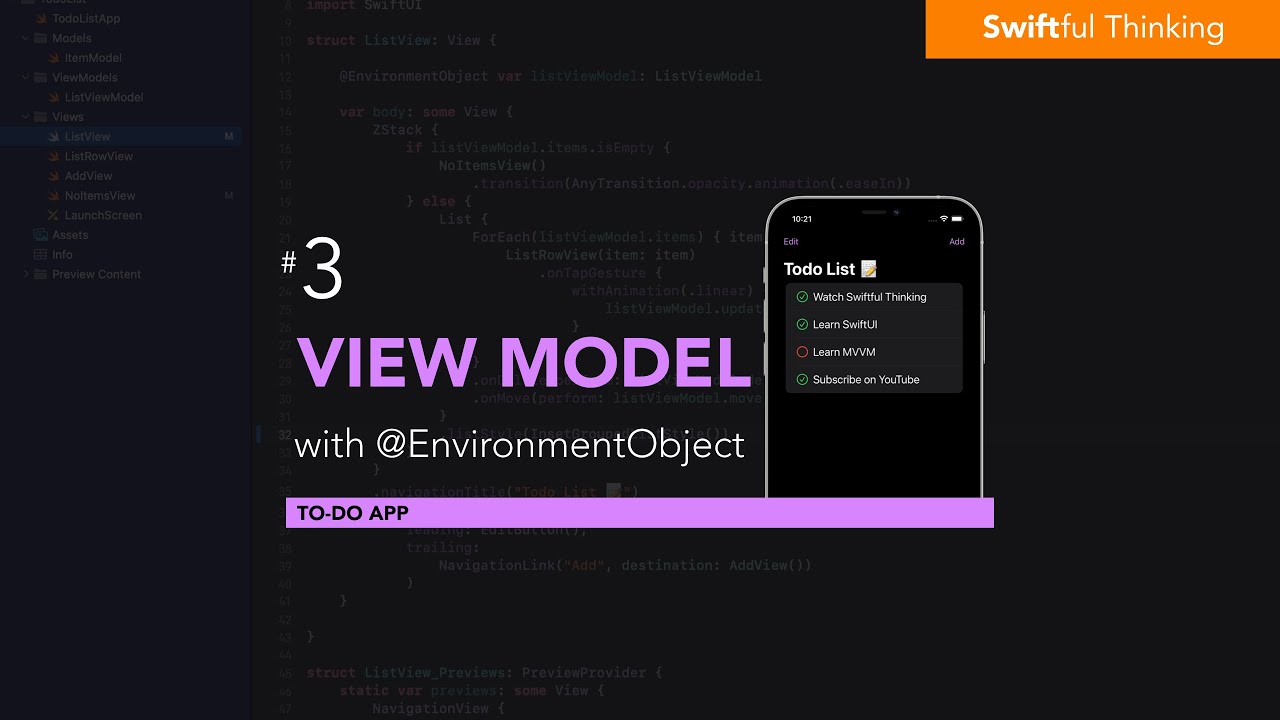

Add a ViewModel with @EnvironmentObject in SwiftUI | Todo List #3

How a Lens creates an Image.

Understand DSPy: Programming AI Pipelines

5.0 / 5 (0 votes)