Let's build GPT: from scratch, in code, spelled out.

Summary

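TLDR: This video shows how to train a GPT-like text-generation model using the Transformer architecture. The model learns to predict the next character from the text that precedes it. Sampling those predictions one step at a time produces coherent text. The video explains the model's internals, including self-attention, multi-head attention, positional encoding, and feed-forward networks, and implements each of them in Python with PyTorch. Finally, after training on a small dataset, the model generates Shakespeare-like text.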

Takeaways

- 🤖 Introduces ChatGPT, an AI system that users interact with through text. It carries out text-based tasks and generates content.

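- 📈 Runs a prompt more than once and compares the results, showing that ChatGPT is a probabilistic system: the same prompt can produce several different answers.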

- 🌐 Discusses the importance of the Transformer architecture, the neural network at the core of ChatGPT and similar systems.

- 📚 Uses the 'tiny Shakespeare' dataset to train a character-level Transformer language model.

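- 🛠️ Walks through the training process, including the pretraining and fine-tuning stages, and shows how the model is trained iteratively on randomly sampled chunks of the data.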

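- 🔍 Explains how encode and decode functions (a character-level tokenizer, not the Transformer's encoder and decoder blocks) map text to integer sequences and back, and how those sequences are used to train the model.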

- 📊 Shows how to load and process the data with PyTorch tensors. It also shows how forward and backward passes are used to train the neural network.

- 🎯 Covers the loss function, how to evaluate model performance, and how adjusting hyperparameters improves training.

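- 🔄 Describes how self-attention strengthens the model's understanding of text sequences by letting each token gather information from the tokens before it.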

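- 📈 Uses experiments and their results to show the model progressing from random predictions to learning patterns in the text and generating increasingly plausible output.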

- 🚀 Finally, points to the nanoGPT project on GitHub for further exploring and training Transformer models.
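The tokenizer and batching steps summarized above can be sketched in plain Python. This is a minimal, dependency-free sketch under assumptions: the short sample string stands in for the roughly 1 MB tiny Shakespeare file, the names `stoi`, `itos`, `encode`, `decode`, and `get_batch` are chosen for illustration, and Python lists take the place of the PyTorch tensors used in the video. Each training example pairs a chunk of `block_size` token ids with the same chunk shifted one position to the right, so every position has a next-token target.

```python
import random

# Hypothetical stand-in for the tiny Shakespeare text.
text = "First Citizen:\nBefore we proceed any further, hear me speak.\n"

# Vocabulary: every distinct character in the text, in sorted order.
chars = sorted(set(text))
vocab_size = len(chars)

# Lookup tables between characters and integer ids.
stoi = {ch: i for i, ch in enumerate(chars)}
itos = {i: ch for i, ch in enumerate(chars)}

def encode(s):
    """Map a string to a list of integer token ids."""
    return [stoi[c] for c in s]

def decode(ids):
    """Map a list of integer token ids back to a string."""
    return "".join(itos[i] for i in ids)

data = encode(text)

def get_batch(data, batch_size, block_size, rng=random):
    """Sample random chunks; each target chunk is its input shifted right by one."""
    starts = [rng.randrange(len(data) - block_size) for _ in range(batch_size)]
    xs = [data[s:s + block_size] for s in starts]
    ys = [data[s + 1:s + block_size + 1] for s in starts]
    return xs, ys

xb, yb = get_batch(data, batch_size=4, block_size=8, rng=random.Random(1337))
print(repr(decode(xb[0])), "->", repr(decode(yb[0])))
```

In the video's setup these integer chunks become tensors of shape (batch, block_size); the list version keeps the indexing logic visible.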

Q & A

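What is ChatGPT, and how has it affected the AI community?

- ChatGPT is a system that lets users interact with an AI and give it text-based tasks. It responds to a prompt by generating a sequence of text, and it caused a sensation in the AI community.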

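What does it mean that ChatGPT's output is probabilistic?

- ChatGPT is a probabilistic system: given the same prompt, it can generate several different answers. At each step the model takes the text so far, predicts a probability distribution over the next character or word, and samples from that distribution. Repeated runs can therefore take different paths.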
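To make the sampling step concrete, here is a small sketch of how a probability distribution over the next token turns into different outputs on different runs. The four-token vocabulary and the logit values are hypothetical; a real model would compute the logits from the preceding text. `random.choices` draws a token in proportion to its probability, so lower-probability tokens are still picked occasionally.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(logits, rng):
    """Draw one token id with probability proportional to softmax(logits)."""
    probs = softmax(logits)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

# Hypothetical scores a model might assign to four candidate next tokens.
vocab = ["a", "b", "c", "d"]
logits = [2.0, 1.0, 0.5, -1.0]

rng = random.Random(0)
samples = [vocab[sample_next(logits, rng)] for _ in range(10)]
print(softmax(logits))
print(samples)
```

Always taking the highest-probability token (greedy decoding) would make the output deterministic; sampling is what lets one prompt yield several answers.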

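What role does the Transformer architecture play in ChatGPT?

- The Transformer is the core of ChatGPT: it processes the input sequence and generates the response. Through self-attention and positional encoding, it captures the context and structure of the input text, which lets it produce coherent, relevant output.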

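What is self-attention?

- Self-attention is a key component of the Transformer. When processing a position in a sequence, it lets the model draw on the other positions in that sequence, so the model can capture long-range dependencies. In a decoder-only model such as GPT, each position may attend only to itself and earlier positions, so the model never sees the future tokens it is trained to predict. This is how the model uses the surrounding context in a text.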
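The masked self-attention used in a GPT-style decoder can be sketched for a single head with plain Python lists. This is an illustrative sketch, not the video's code: the video implements the same computation with batched PyTorch tensor operations and a lower-triangular mask, whereas this version loops over positions and simply skips future ones. The weight matrices `wq`, `wk`, `wv` are assumed inputs; in a real model they are learned.

```python
import math

def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def causal_self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product attention with a causal mask.

    x: T x C inputs; wq, wk, wv: C x H projection matrices.
    Returns T x H outputs; position t attends only to positions 0..t.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    head_size = len(wq[0])
    out = []
    for t in range(len(x)):
        # Scaled scores against the current and earlier positions only (the causal mask).
        scores = [sum(qi * ki for qi, ki in zip(q[t], k[s])) / math.sqrt(head_size)
                  for s in range(t + 1)]
        weights = softmax(scores)
        # Weighted average of the value vectors of positions 0..t.
        out.append([sum(w * v[s][j] for s, w in enumerate(weights))
                    for j in range(head_size)])
    return out

# Toy example: identity projections, so queries, keys, and values equal the inputs.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
y = causal_self_attention(x, eye, eye, eye)
print(y)
```

Because the inner loop only ranges over positions `0..t`, changing a later input never affects an earlier output, which is the property the causal mask enforces. Multi-head attention runs several such heads with separate weights and concatenates their outputs.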

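Why is the Transformer architecture so important in AI?

- The Transformer became central to AI because it processes sequences efficiently in parallel and captures long-range dependencies. Since it was introduced in 2017, it has been widely applied to tasks such as machine translation, text generation, and question answering.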

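How is a Transformer-based language model trained?

- At scale, training a Transformer language model requires large amounts of text and compute (the video's small model trains on about 1 MB of text). First, the text is preprocessed: it is tokenized and encoded as integers. Position information is added later, inside the model, through positional encodings. Then backpropagation and an optimizer such as Adam adjust the model's parameters so that it accurately predicts the next token of a given input sequence.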
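The training loop described above (forward pass, loss, backward pass, parameter update) can be sketched without any framework by using the simplest model from the start of the video, a bigram table that predicts the next character from the current one. Two simplifications are assumptions of this sketch. First, the gradient of the cross-entropy loss with respect to the logits (`softmax - one_hot`) is written out by hand instead of being computed by PyTorch's autograd. Second, plain gradient descent stands in for the Adam-family optimizer. The toy training string is made up.

```python
import math
import random

text = "hello hello help held"  # hypothetical toy training text
chars = sorted(set(text))
stoi = {c: i for i, c in enumerate(chars)}
V = len(chars)
data = [stoi[c] for c in text]
pairs = list(zip(data[:-1], data[1:]))  # (current token, next token)

# Bigram model: row W[i] holds the logits for the token that follows token i.
rng = random.Random(0)
W = [[rng.gauss(0.0, 0.01) for _ in range(V)] for _ in range(V)]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def loss_fn(W):
    """Mean cross-entropy of predicting each next token (the forward pass)."""
    return sum(-math.log(softmax(W[x])[y]) for x, y in pairs) / len(pairs)

lr = 1.0
initial_loss = loss_fn(W)
for step in range(200):
    # Backward pass: d(loss)/d(logits) = softmax(logits) - one_hot(target).
    grad = [[0.0] * V for _ in range(V)]
    for x, y in pairs:
        probs = softmax(W[x])
        for j in range(V):
            grad[x][j] += (probs[j] - (1.0 if j == y else 0.0)) / len(pairs)
    # Plain gradient-descent update (stands in for the AdamW optimizer used in the video).
    for i in range(V):
        for j in range(V):
            W[i][j] -= lr * grad[i][j]
final_loss = loss_fn(W)
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

A Transformer replaces the lookup table with stacked attention and feed-forward layers, but the loop keeps the same shape.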

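Why does a Transformer model need positional encoding?

- Positional encoding tells the model where each element sits in the sequence. Self-attention on its own treats its inputs as an unordered set, so the Transformer has no built-in notion of order. Positional encoding fixes this by adding a position-dependent vector to each token's embedding.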
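A common concrete form of positional encoding is the fixed sinusoidal scheme from the original Transformer paper, sketched below. Note that this is a swapped-in variant: the video's model instead learns a position embedding table, one trainable vector per position, and adds it to the token embedding. Either way, the position vector is added to each token's embedding before the attention layers.

```python
import math

def sinusoidal_positional_encoding(num_positions, d_model):
    """Return a num_positions x d_model table of sin/cos position vectors.

    Even dimensions use sin and odd dimensions use cos; each (sin, cos)
    pair uses its own frequency, 1 / 10000**(i / d_model).
    """
    pe = [[0.0] * d_model for _ in range(num_positions)]
    for pos in range(num_positions):
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

def add_positions(token_embeddings, pe):
    """Add the position vector for each index to the token embedding at that index."""
    return [[t + p for t, p in zip(tok, pe[pos])]
            for pos, tok in enumerate(token_embeddings)]

pe = sinusoidal_positional_encoding(num_positions=8, d_model=4)
print(pe[1])
```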

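Why are models pretrained and then fine-tuned?

- Pretraining runs on a large dataset so the model learns general-purpose representations of language. Fine-tuning then continues training on a task-specific dataset so the model adapts to that task. Together, the two stages let the model apply its general knowledge to a specific task.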

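How can the performance of ChatGPT or similar models be evaluated?

- Performance is usually measured with a loss function such as cross-entropy, computed on held-out validation data as well as on the training data. Human reviewers can also judge generated text for coherence, relevance, and accuracy. In deployed applications, response time and computational efficiency matter as well.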
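A useful sanity check on the loss is the value a model gets by guessing uniformly over the vocabulary, which is ln(vocab_size). The sketch below computes mean cross-entropy from per-position probability rows. The vocabulary size of 65 matches the character set of tiny Shakespeare; the target ids are arbitrary. An untrained model should start near this baseline. In practice, it helps to average the loss over several batches of both training and validation data.

```python
import math

def cross_entropy(prob_rows, targets):
    """Average negative log-probability assigned to the correct next token."""
    return sum(-math.log(row[t]) for row, t in zip(prob_rows, targets)) / len(targets)

vocab_size = 65  # distinct characters in tiny Shakespeare
uniform = [[1.0 / vocab_size] * vocab_size for _ in range(3)]
targets = [0, 10, 42]  # arbitrary target ids

baseline = cross_entropy(uniform, targets)
print(f"uniform-guess loss: {baseline:.4f}  (ln 65 = {math.log(65):.4f})")
print(f"perplexity: {math.exp(baseline):.1f}")
```

Perplexity, `exp(loss)`, reads as the effective number of equally likely choices; a uniform guess over 65 characters has a perplexity of 65.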

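How can overfitting be avoided when training a Transformer model?

- Common techniques include data augmentation, regularization, dropout, and early stopping. They help the model perform well without becoming overly sensitive to the training data. Comparing training and validation loss reveals overfitting: if validation loss stops falling while training loss keeps dropping, the model is memorizing the training set.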
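Two of the techniques listed above can be sketched briefly. Inverted dropout, which the video adds to its larger model, randomly zeros activations during training only. Early stopping watches the validation loss and halts once it stops improving. The `patience` parameter and the loss history below are hypothetical, and this early-stopping rule is a generic one rather than something taken from the video.

```python
import random

def dropout(values, p, rng, training=True):
    """Inverted dropout: during training, zero each value with probability p
    and scale the survivors by 1/(1-p) so the expected value is unchanged."""
    if not training or p == 0.0:
        return list(values)  # at evaluation time dropout is a no-op
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]

def early_stopping_step(val_losses, patience):
    """Given the validation-loss history, return (best_step, should_stop).

    should_stop is True once `patience` evaluations in a row have passed
    without improving on the best loss seen so far."""
    best_step = min(range(len(val_losses)), key=val_losses.__getitem__)
    should_stop = len(val_losses) - 1 - best_step >= patience
    return best_step, should_stop

# Hypothetical validation losses recorded at successive evaluations.
history = []
for loss in [2.5, 2.1, 1.9, 1.95, 1.97, 2.0, 2.1]:
    history.append(loss)
    best, stop = early_stopping_step(history, patience=3)
    if stop:
        print(f"stopping after {len(history)} evaluations; best was #{best}")
        break
```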

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

【生成式AI導論 2024】第18講:有關影像的生成式AI (下) — 快速導讀經典影像生成方法 (VAE, Flow, Diffusion, GAN) 以及與生成的影片互動

【生成式AI導論 2024】第17講:有關影像的生成式AI (上) — AI 如何產生圖片和影片 (Sora 背後可能用的原理)

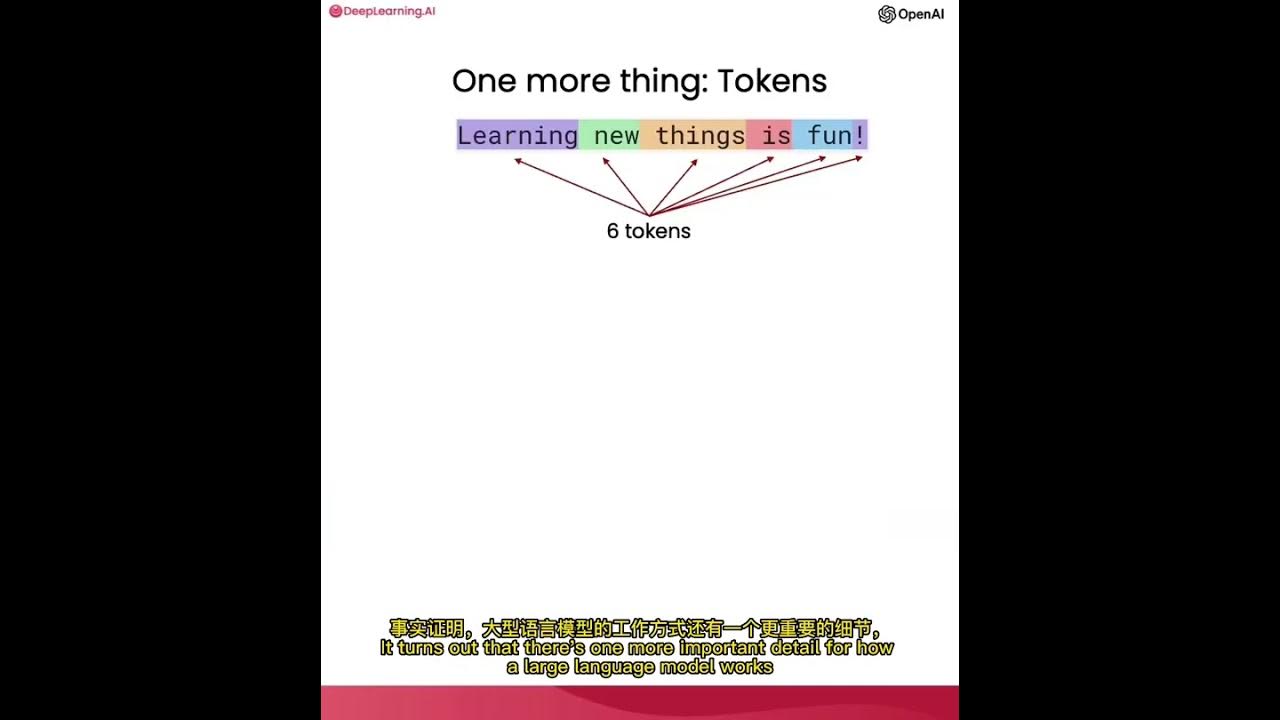

使用ChatGPT API构建系统1——大语言模型、API格式和Token

Create a custom data model for Todo items in SwiftUI | Todo List #2

Text-to-GRAPH w/ LGGM: Generative Graph Models

What are Transformer Models and how do they work?

5.0 / 5 (0 votes)