AWS Architecture for hosting Web Applications

Summary

TLDR: This lesson focuses on constructing an AWS-based architecture for hosting a highly available and scalable web application. It outlines the use of Route 53 for DNS, Amazon CloudFront for content delivery, S3 buckets for storage, and EC2 instances with Elastic Load Balancing for handling traffic. Auto Scaling Groups ensure fault tolerance, and RDS in a multi-AZ configuration provides database reliability. The architecture aims to optimize performance and cost-efficiency by scaling resources in response to fluctuating traffic.

Takeaways

- 🌐 **Global Reach**: AWS provides a global infrastructure that can host web applications with high availability and scalability.

- 🔍 **DNS Service**: Amazon Route 53 provides the DNS service, giving user requests a highly available domain name system.

- 📡 **Content Delivery**: Amazon CloudFront is utilized for efficient content delivery, routing requests to the nearest edge location for optimal performance.

- 💾 **Data Storage**: S3 buckets are recommended for storing web application resources due to their durability and scalability.

- 🔄 **Load Balancing**: Elastic Load Balancing (ELB) is used to distribute incoming traffic among EC2 instances, enhancing fault tolerance.

- 🖥️ **EC2 Instances**: EC2 instances are deployed across multiple availability zones for redundancy, ensuring service continuity.

- 🔁 **Auto Scaling**: Auto Scaling groups are essential for automatically handling EC2 instance scaling based on traffic demands.

- 🛠️ **AMIs for EC2**: Amazon Machine Images (AMIs) are recommended for EC2 instances to streamline the deployment of web servers with pre-loaded applications and configurations.

- 🔒 **Database Service**: Amazon Relational Database Service (RDS) in a multi-AZ deployment ensures high availability for the database layer.

- 🔌 **Elastic Infrastructure**: The architecture scales elastically, so IT costs track fluctuating customer traffic in real time.

Q & A

What is the primary purpose of the architecture discussed in the script?

- The primary purpose of the architecture is to host a reliable and scalable web application on AWS, ensuring high availability and the ability to scale up or down based on traffic fluctuations.

Why is Route 53 used in the architecture?

- Route 53 is used as the DNS service to serve user DNS requests and route network traffic to the infrastructure running in Amazon Web Services.

What role does Amazon CloudFront play in the architecture?

- Amazon CloudFront delivers static, streaming, and dynamic content from a global network of edge locations, ensuring content is delivered with the best possible performance to users regardless of their location.

How does storing resources in an S3 bucket benefit the web application?

- Storing resources in an S3 bucket provides highly durable storage for mission-critical data. This suits web applications served through CloudFront, because the bucket can be designated as the primary source (origin) for content delivery.
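
The relationship between an edge location and its S3 source can be illustrated with a minimal sketch. It is not CloudFront's actual implementation: the `EdgeCache` class and the dictionary standing in for an S3 bucket are hypothetical, and real edge caches also handle expiry, invalidation, and much more.

```python
class EdgeCache:
    """Toy model of one edge location in front of an origin store."""

    def __init__(self, origin):
        # `origin` is a plain dict standing in for an S3 bucket (assumption).
        self.origin = origin
        self.cache = {}
        self.origin_fetches = 0

    def get(self, key):
        # Cache hit: serve from the edge without contacting the origin.
        if key in self.cache:
            return self.cache[key]
        # Cache miss: fetch from the origin once, then keep a local copy.
        body = self.origin[key]  # raises KeyError if the object is missing
        self.origin_fetches += 1
        self.cache[key] = body
        return body


# Hypothetical bucket contents, for illustration only.
bucket = {"index.html": b"<h1>Hello</h1>"}
edge = EdgeCache(bucket)
edge.get("index.html")  # first request goes to the origin
edge.get("index.html")  # second request is served from the edge
```

Only the first request for an object reaches the origin; repeat requests are answered at the edge, which is where the performance benefit comes from.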

What is the function of Elastic Load Balancing in the architecture?

- Elastic Load Balancing automatically distributes incoming application traffic across the EC2 instances, providing seamless load balancing and fault tolerance as application traffic varies.
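
As a rough illustration of the idea, the sketch below rotates requests across instances and skips any that are marked unhealthy. It is a simplified model, not the ELB algorithm itself: the `RoundRobinBalancer` class and the instance IDs are hypothetical, and health status is set by hand rather than by real health checks.

```python
class RoundRobinBalancer:
    """Toy round-robin balancer that skips unhealthy instances."""

    def __init__(self, instances):
        self.instances = list(instances)
        self.healthy = set(self.instances)
        self._next = 0  # index of the next instance to try

    def mark_unhealthy(self, instance):
        self.healthy.discard(instance)

    def mark_healthy(self, instance):
        if instance in self.instances:
            self.healthy.add(instance)

    def route(self):
        # Try each instance at most once per request, starting at _next.
        for _ in range(len(self.instances)):
            candidate = self.instances[self._next]
            self._next = (self._next + 1) % len(self.instances)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy instances available")


# Hypothetical instance IDs, for illustration only.
balancer = RoundRobinBalancer(["i-a", "i-b", "i-c"])
balancer.mark_unhealthy("i-b")
targets = [balancer.route() for _ in range(4)]  # "i-b" never appears
```

Traffic keeps flowing to the remaining instances when one fails, which is the fault-tolerance property the answer above describes.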

Why are EC2 instances deployed across multiple availability zones?

- EC2 instances are deployed across multiple availability zones to provide greater fault tolerance, allowing the infrastructure to handle failures in one zone without affecting the entire application.

What is the significance of using Amazon Machine Images (AMIs) for web servers?

- Using AMIs for web servers allows for a standardized setup with required applications, patches, and software pre-loaded. This enables Auto Scaling groups to quickly provision new instances with the necessary configurations when needed.

How does the Auto Scaling group contribute to the scalability of the web application?

- The Auto Scaling group automatically provisions replacement EC2 instances when web servers fail and adds instances when traffic rises, so the application can handle increased load and maintain performance during peak traffic. When traffic falls, it removes instances again.
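
The scaling decision can be sketched as a small calculation. This is an assumption-laden illustration rather than the Auto Scaling service's real policy engine: the function names, the CPU metric, and the target value are all hypothetical, and real policies also involve cooldowns and warm-up periods.

```python
import math


def desired_capacity(current, avg_cpu, target_cpu, min_size, max_size):
    """Scale the fleet so average CPU moves toward the target.

    All parameters are illustrative; the source does not name a metric.
    """
    if current <= 0:
        raw = min_size
    else:
        # Proportional rule: more load per instance means more instances.
        raw = math.ceil(current * avg_cpu / target_cpu)
    # Never go below the group's minimum or above its maximum size.
    return max(min_size, min(max_size, raw))


def instances_to_launch(desired, healthy):
    """How many instances to launch to replace failures or add capacity."""
    return max(0, desired - healthy)


# Four instances at 90% CPU with a 60% target: grow the group.
wanted = desired_capacity(current=4, avg_cpu=90, target_cpu=60,
                          min_size=2, max_size=10)
# One of the four running instances has failed, so only three are healthy.
to_launch = instances_to_launch(wanted, healthy=3)
```

The same two functions cover both cases in the answer above: replacing failed instances (healthy count below desired) and scaling out under load (desired count rising).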

What is the role of RDS in providing high availability for the database service?

- RDS, or Relational Database Service, is used in a multi-AZ deployment with a primary database instance in one availability zone and a standby instance in a different one, ensuring high availability and data redundancy.
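
The primary/standby arrangement can be modeled in a few lines. This is only a conceptual sketch: in RDS the failover is handled by the service itself, whereas here the `DbInstance` class, the `fail_over` function, and the example zone names are hypothetical and health is toggled by hand.

```python
from dataclasses import dataclass


@dataclass
class DbInstance:
    az: str            # availability zone label (example value only)
    role: str          # "primary" or "standby"
    healthy: bool = True


def fail_over(primary, standby):
    """Return the instance that should serve traffic.

    If the primary is unhealthy, promote the standby in the other zone.
    """
    if primary.healthy:
        return primary
    if not standby.healthy:
        raise RuntimeError("both database instances are unavailable")
    standby.role = "primary"
    primary.role = "standby"
    return standby


# Example zone names, for illustration only.
db_a = DbInstance(az="us-east-1a", role="primary")
db_b = DbInstance(az="us-east-1b", role="standby")

db_a.healthy = False            # simulate a failure in the first zone
active = fail_over(db_a, db_b)  # the standby in the second zone takes over
```

Because the two instances sit in different availability zones, losing one zone still leaves a database the application can reach.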

Why is it important to deploy the architecture in a multi-AZ environment?

- Deploying the architecture in a multi-AZ environment ensures fault tolerance. If one availability zone fails, the other can pick up the load, allowing the application to continue operating without significant downtime.

What are the core AWS services required for the architecture mentioned in the script?

- The core AWS services required for the architecture are Amazon Route 53, Amazon CloudFront, S3 buckets, Elastic Load Balancing, EC2 instances, Auto Scaling groups, and RDS for the database.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

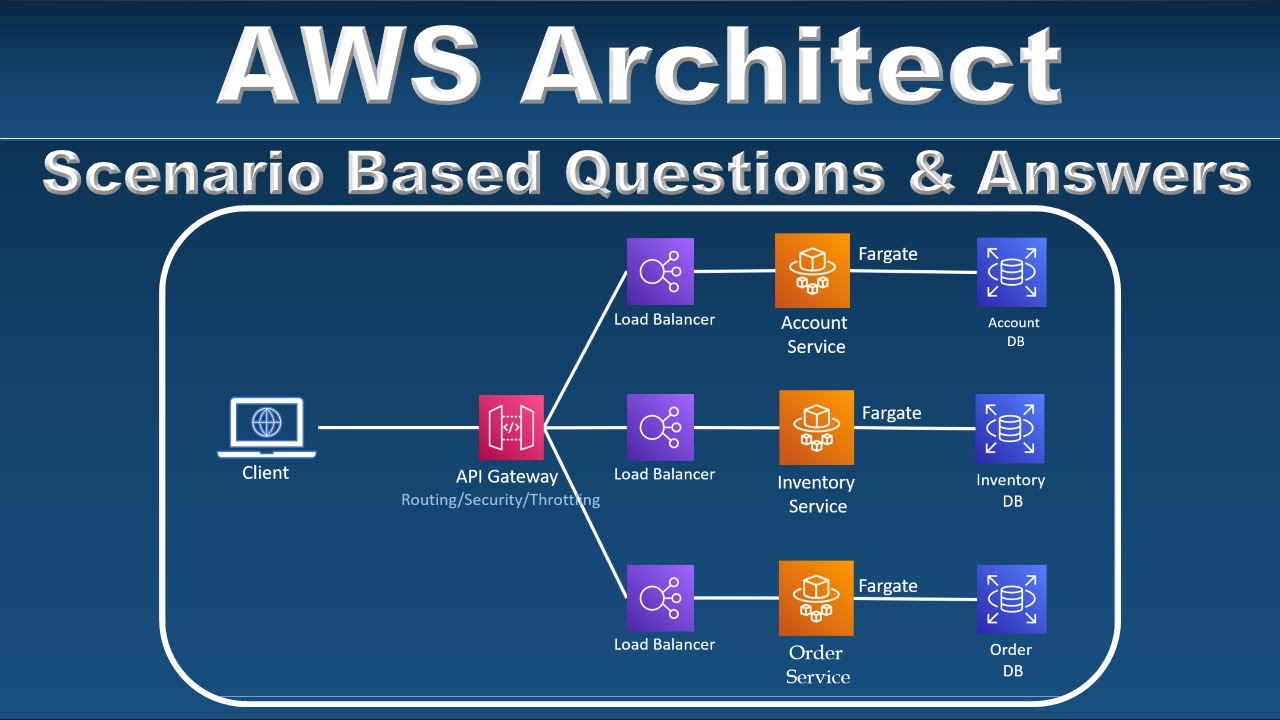

AWS Solution Architect Interview Questions and Answers - Part 2

AWS Quick Start for SQL Server 2017 on Amazon EC2

Cloud Computing For Beginners | What is Cloud Computing | Cloud Computing Explained | Simplilearn

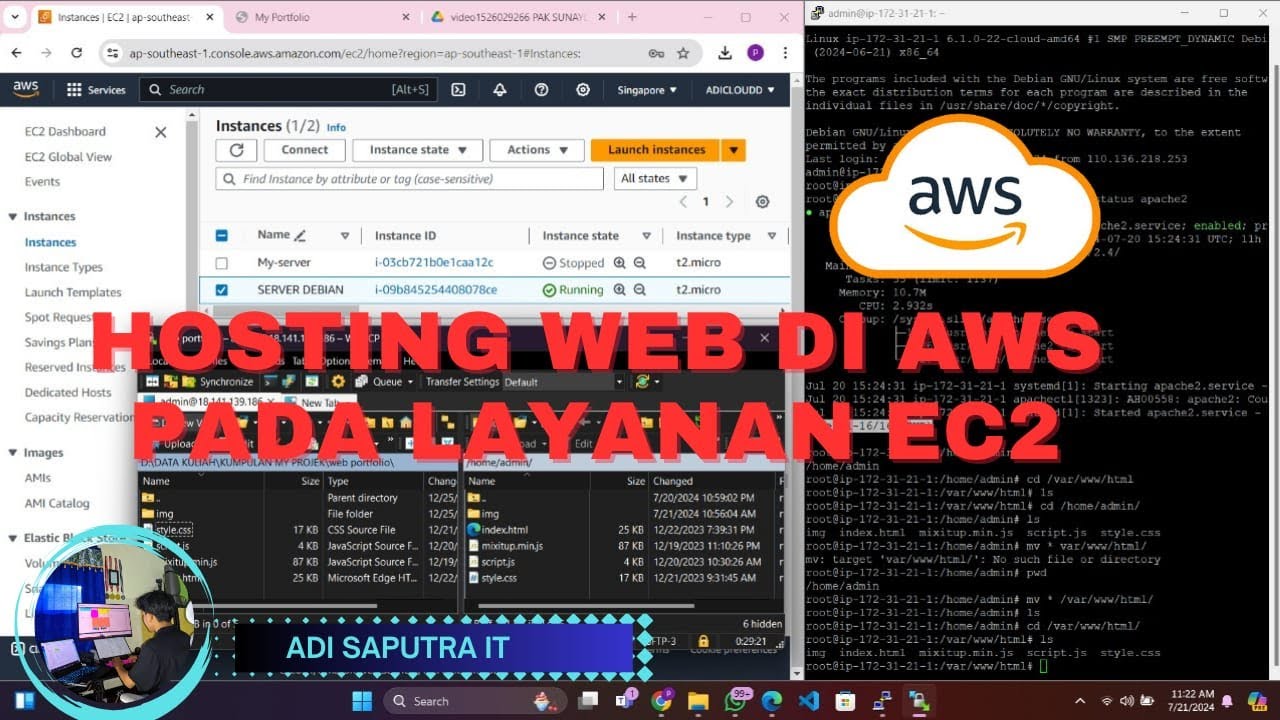

Hosting Dynamic Website Using AWS EC2 Instance

CARA HOSTING/UPLOAD WEBSITE DI AWS MENGGUNAKAN LAYANAN EC2

AWS Acad Lab Cloud Web Application Builder | Building a Highly Available, Scalable Web Application

5.0 / 5 (0 votes)