The Way of Fine-Tuning Large Language Models, Part 2: Why Fine-Tune

Summary

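TLDR: This lesson covers three things: why you should fine-tune large language models (LLMs), what fine-tuning is, and how to compare a fine-tuned model with a non-fine-tuned one experimentally. Fine-tuning specializes a general-purpose model so that it fits a particular use, such as chat or code autocompletion. Compared with prompt engineering, fine-tuning can take in far more data, correct incorrect information the model learned earlier, and reduce hallucinations. It also improves the model's performance and consistency in a specific domain, strengthens privacy, lowers cost, and gives you more control. Finally, the lesson introduces the tools used for fine-tuning: PyTorch, Hugging Face, and the Lamini library.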

Takeaways

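- 📚 Fine-tuning turns a general-purpose model (such as GPT-3) into a model for a specific use, such as a chatbot or a code-autocompletion tool.

- 👨‍⚕️ Fine-tuning a model is like a general practitioner (the general-purpose model) becoming a cardiologist (the specialized model): it gains deeper expertise.

- 🧠 Fine-tuning lets the model learn from large amounts of data rather than merely have access to it, which improves its performance and expertise.

- 💡 Fine-tuning makes the model's outputs and behavior more consistent and reduces hallucinations.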

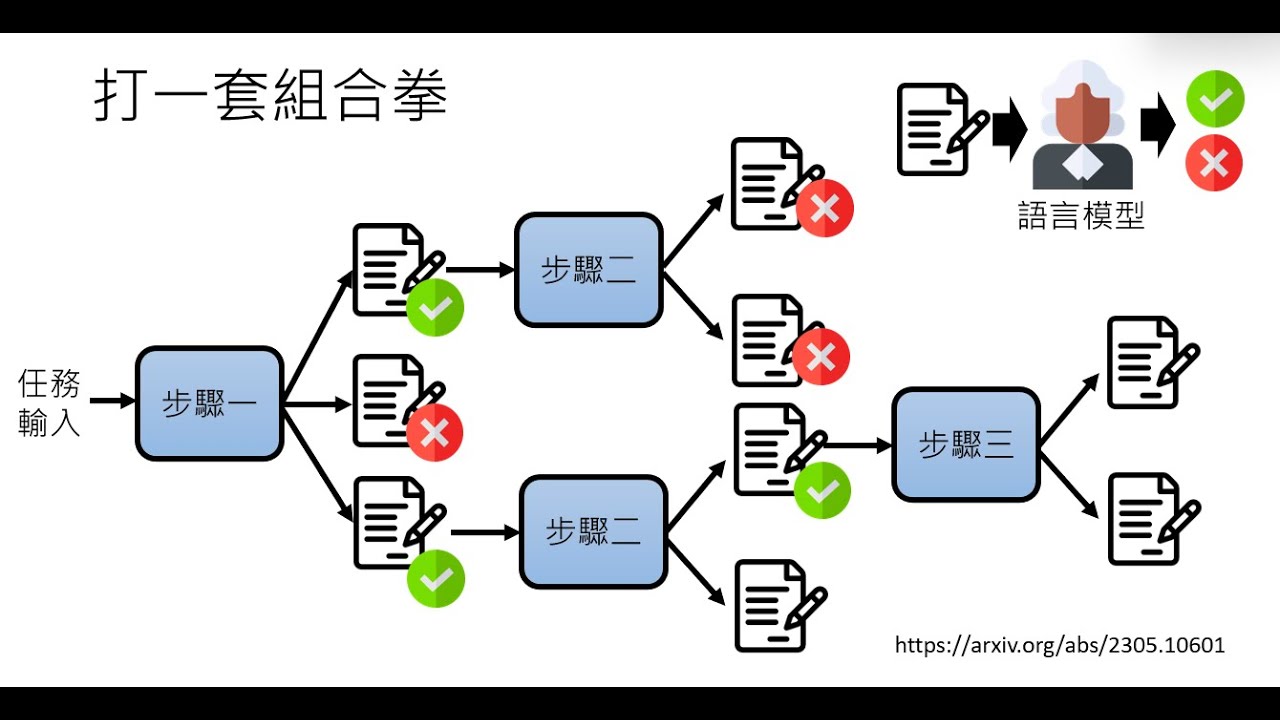

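- 🚀 Compared with prompt engineering, fine-tuning can fit almost unlimited data and lets you correct incorrect information the model learned earlier.

- 💼 Fine-tuning suits enterprise or domain-specific use cases and production deployments.

- 🔒 Fine-tuning can be done in a private environment, which helps prevent data leakage and protects privacy.

- 💰 Fine-tuning increases cost transparency and, for heavily used models, can lower the cost per request.

- ⏱️ Fine-tuning can reduce response latency (for example, by letting you use a smaller fine-tuned model), which matters most for applications that need fast responses, such as autocompletion.

- 🛡️ Fine-tuning lets you add more guardrails to the model, such as custom responses and content filtering.

- 📚 Several tools can be used for fine-tuning, including PyTorch, Hugging Face, and the Lamini library.

- 📈 In the lesson's comparisons, the fine-tuned model clearly outperforms the non-fine-tuned one and gives more accurate and useful information.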
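The core idea in the takeaways above is simple. Fine-tuning continues training an existing model on narrower data, and that shifts the model's behavior toward the new domain. A deliberately tiny toy can illustrate this. It is not how LLMs are fine-tuned, since they update neural-network weights with gradient descent. Instead it uses a hypothetical word-bigram counting model and made-up corpora, echoing the general-practitioner-to-cardiologist analogy.

```python
from collections import Counter, defaultdict


def train_bigram(model, corpus):
    """Add word-bigram counts from `corpus` to `model` in place.

    Calling this again on new data continues training the same model,
    which is the toy analogue of fine-tuning.
    """
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            model[prev][nxt] += 1
    return model


def predict_next(model, word):
    """Return the most frequent word seen after `word`, or None if unseen."""
    counts = model.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical "general" pretraining data.
general_corpus = [
    "the cat sat on the mat",
    "the dog ran to the park",
    "the cat ate the fish",
]
# Hypothetical domain-specific (cardiology-flavored) data.
cardio_corpus = [
    "the heart pumps blood",
    "the heart has four chambers",
    "a doctor listens to the heart",
]

model = defaultdict(Counter)
train_bigram(model, general_corpus)
print(predict_next(model, "the"))  # cat

train_bigram(model, cardio_corpus)  # continue training on domain data
print(predict_next(model, "the"))  # heart
```

In this toy, the general-domain counts are still present: `predict_next(model, "dog")` still returns `"ran"`. The domain data, however, now dominates the prediction after "the".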

Q & A

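Why fine-tune a model?

- Fine-tuning specializes a general-purpose model (such as GPT-3) for a particular use case. One example is turning GPT-4 into GitHub Copilot, which specializes in code autocompletion. Fine-tuning lets the model learn from more data, improves its expertise and consistency in a specific domain, reduces incorrect output, and adapts it better to users' needs.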

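How does fine-tuning differ from prompt engineering?

- Prompt engineering steers the model toward the desired output through carefully designed prompts. It needs no extra data, so it is good for getting started quickly and for prototyping. Fine-tuning instead trains the model on a large amount of task-specific data so that it performs better on that task, but it requires more data and compute.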
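The data limit of prompt engineering becomes concrete once you notice that every example must fit into the prompt alongside the question. The sketch below packs hypothetical question-answer examples into a prompt until a token budget runs out. It makes two simplifying assumptions. First, it counts whitespace-separated words as a crude stand-in for tokens, and real tokenizers count differently. Second, the budget of 50 is an arbitrary illustrative number, far smaller than real context windows.

```python
def count_tokens(text):
    """Crude token proxy: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


def build_few_shot_prompt(examples, question, max_tokens):
    """Pack (question, answer) examples into a prompt until the budget is hit.

    Returns the prompt and how many examples made it in.
    """
    tail = f"Q: {question}\nA:"
    used = count_tokens(tail)
    blocks = []
    for q, a in examples:
        block = f"Q: {q}\nA: {a}\n"
        cost = count_tokens(block)
        if used + cost > max_tokens:
            break  # context budget exhausted; remaining examples are dropped
        blocks.append(block)
        used += cost
    return "".join(blocks) + tail, len(blocks)


# 100 hypothetical training examples.
examples = [(f"What is {i} plus {i}?", str(2 * i)) for i in range(100)]
prompt, n_used = build_few_shot_prompt(examples, "What is 100 plus 100?", max_tokens=50)
print(n_used, "of", len(examples))  # 5 of 100
```

Fine-tuning avoids this ceiling because the examples go into training rather than into every prompt, so all of them can be used.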

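What are the advantages of a fine-tuned model?

- A fine-tuned model can perform better, generate less incorrect information, have deeper expertise in a specific domain, produce more consistent output, and support better content moderation. Fine-tuning can also be done in a private environment, which helps protect data privacy and prevent data leakage.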

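What are the potential drawbacks of fine-tuning?

- Fine-tuning requires a substantial amount of high-quality data and has an upfront compute cost. It may also require technical expertise to prepare and use the data correctly. Its barrier to entry is higher than that of simple prompt engineering.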
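Part of the data-preparation work mentioned above is turning raw question-answer pairs into a clean training file. The sketch below writes JSON Lines records and applies two simple quality filters: it drops pairs with an empty side, and it drops exact duplicates after trimming whitespace. The field names `question` and `answer` and the filtering rules are assumptions for illustration. The format a particular fine-tuning tool expects may differ.

```python
import json


def prepare_finetuning_data(pairs):
    """Turn (question, answer) pairs into JSON Lines, skipping low-quality rows."""
    seen = set()
    lines = []
    for question, answer in pairs:
        q, a = question.strip(), answer.strip()
        if not q or not a:
            continue  # drop incomplete examples
        if (q, a) in seen:
            continue  # drop exact duplicates (after trimming whitespace)
        seen.add((q, a))
        lines.append(json.dumps({"question": q, "answer": a}, ensure_ascii=False))
    return "\n".join(lines)


# Hypothetical raw data: one duplicate (differs only by a trailing space) and one empty answer.
raw = [
    ("How do I train my dog to sit? ", "Hold a treat above its nose and say 'sit'."),
    ("How do I train my dog to sit?", "Hold a treat above its nose and say 'sit'."),
    ("What is fine-tuning?", ""),
]
print(prepare_finetuning_data(raw))  # only one record survives the filters
```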

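Which use cases suit a fine-tuned model?

- Fine-tuned models suit enterprise or domain-specific use cases, especially when the model is used frequently or must handle a large volume of requests. Fine-tuning makes the model more specialized and stable, which suits production environments.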

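How is privacy protected during fine-tuning?

- Fine-tuning can run in a virtual private cloud (VPC) or on premises. This prevents data leakage and avoids the data-security risks that third-party solutions can introduce.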

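How does fine-tuning help lower costs?

- Fine-tuning a smaller model can lower the cost per request, which saves money when the model is used heavily. A fine-tuned model also gives you more control, both over cost and over operational factors such as response time and throughput.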
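A break-even calculation makes the trade-off between fine-tuning's upfront compute cost and its lower per-request cost concrete. Suppose fine-tuning costs C up front and saves s per request. It then pays for itself after C / s requests, rounded up. The function name and all dollar figures below are hypothetical, chosen only to show the arithmetic.

```python
import math


def break_even_requests(upfront_cost, cost_per_request_large, cost_per_request_small):
    """Requests needed before a fine-tuned smaller model pays back its upfront cost.

    Returns None if the smaller model is not cheaper per request.
    """
    saving_per_request = cost_per_request_large - cost_per_request_small
    if saving_per_request <= 0:
        return None  # no per-request saving, so the upfront cost is never recovered
    return math.ceil(upfront_cost / saving_per_request)


# Hypothetical figures: $500 to fine-tune, $0.002 vs $0.0005 per request.
n = break_even_requests(500, 0.002, 0.0005)
print(n)  # 333334
```

Below that volume, calling the larger model is cheaper. Above it, the fine-tuned model wins, which is why the cost argument applies mainly to heavily used models.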

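Which tools and libraries can be used for fine-tuning?

- Several tools and libraries are available, including PyTorch, Hugging Face, and Lamini's llama library. PyTorch is the lowest-level interface, and Hugging Face provides a higher-level one. Lamini provides a very high-level interface that can train a model with very little code.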

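How do you stop the model from simply continuing to autocomplete text?

- You can add instruction tags that tell the model where the instruction begins and ends. This keeps it from continuing to autocomplete unrelated content.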
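A common example of such tags is the `[INST] ... [/INST]` convention used by Llama 2 chat models. The sketch below wraps a user request in those tags. As an extra safeguard, it also cuts the generated text at a stop marker if the model starts a new turn on its own. That safeguard is an assumption added here, not something the lesson describes, and the sample model output is made up.

```python
def wrap_instruction(text):
    """Mark where the instruction starts and ends (Llama 2 chat-style tags)."""
    return f"[INST] {text.strip()} [/INST]"


def truncate_at_stop(generated, stops=("[INST]", "</s>")):
    """Cut generated text at the first stop marker, if any appears."""
    cut = len(generated)
    for stop in stops:
        idx = generated.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return generated[:cut].strip()


prompt = wrap_instruction("Tell me how to train my dog to sit")
print(prompt)  # [INST] Tell me how to train my dog to sit [/INST]

# Made-up output in which the model keeps going and invents a new user turn.
raw_output = " Hold a treat above its nose, then say 'sit'. [INST] Tell me how to"
print(truncate_at_stop(raw_output))  # Hold a treat above its nose, then say 'sit'.
```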

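What clear differences show up when you compare a fine-tuned model with a non-fine-tuned one?

- On a specific task, such as instructions for training a dog to sit, the fine-tuned model gives more detailed and accurate guidance. The non-fine-tuned model, by contrast, may fail to understand the instruction or respond to it correctly.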

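How does a fine-tuned model perform in conversation?

- In conversation, the fine-tuned model understands the context and the question better and gives coherent, relevant answers. A non-fine-tuned model may fail to hold a useful conversation, and its answers can be irrelevant or incoherent.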
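For a chat model to use a conversation's context, the earlier turns have to be sent back to it, typically in the format it was fine-tuned on. The sketch below assembles a multi-turn prompt following the widely used Llama 2 chat layout, where each completed turn is `<s>[INST] user [/INST] reply </s>`. Treat the exact string layout as an illustrative assumption. Other model families use different templates, and in practice the tokenizer usually adds `<s>` itself.

```python
def format_dialogue(history, next_user_message):
    """Build a Llama 2 chat-style prompt from completed turns plus a new message.

    history: list of (user_message, assistant_reply) pairs, oldest first.
    """
    parts = [f"<s>[INST] {user} [/INST] {reply} </s>" for user, reply in history]
    # The final turn is left open so the model writes the next reply.
    parts.append(f"<s>[INST] {next_user_message} [/INST]")
    return "".join(parts)


history = [("How do I train my dog to sit?", "Hold a treat above its nose and say 'sit'.")]
print(format_dialogue(history, "How long will that take?"))
```

Without the earlier turn, "that" in the follow-up question would have nothing to refer to.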
