Running Confidential Workloads with Podman - Container Plumbing Days 2023

Summary

TL;DR: Sergio presents a solution for running confidential workloads with Podman, enabling hardware-based memory encryption and attestation for container applications. By using libkrun to nest a virtualization-based trusted execution environment inside the regular container context, the approach stays compatible with existing container tools while providing confidential computing guarantees. The demo transforms a regular container into an encrypted, integrity-protected workload and shows that it is protected against host-level memory and storage inspection. Addressing compatibility with other virtualization technologies, Sergio highlights the strengths of this approach for low-footprint, single-container deployments common in cloud and edge scenarios.

Takeaways

- 😄 Confidential computing protects data and code by performing computations in a hardware-based trusted execution environment (TEE).

- 🔐 It requires hardware support for memory encryption, integrity protection, and remote attestation.

- 🐳 The goal is to enable running confidential workloads within the existing container workflow using Podman and CRI-O.

- 📦 A confidential workload is an OCI image containing an encrypted disk image and TEE parameters.

- 🔒 The disk image is encrypted with LUKS (dm-crypt), protecting data at rest, while RAM is encrypted by hardware.

- ✅ Remote attestation verifies the initial memory state before providing decryption keys.

- 🧩 Confidential workloads are nested inside regular container contexts, preserving existing isolation.

- 🌐 Network activity from confidential workloads appears like regular container traffic.

- ⚖️ Kata Containers and confidential workloads have trade-offs in terms of compatibility and overhead.

- 🔬 A live demo showcased the confidentiality guarantees against memory and disk inspection attacks.

Q & A

What is confidential computing?

-Confidential computing is the protection of data and code by performing computation in a hardware-based trusted execution environment. It provides memory encryption, integrity protection, and the ability to generate attestations of the memory contents.

Why is confidential computing important?

-Confidential computing is important because it prevents the host system from accessing sensitive data and code running in the trusted execution environment, providing a secure isolated environment for running sensitive workloads.

What are the main goals of enabling confidential workloads with Podman?

-The main goals are compatibility with the existing container tools and workflows, self-contained OCI images that carry all necessary information, meeting the confidential computing requirements (an encrypted and integrity-protected disk and measurable memory contents), and limiting information leaks to the host.

How does the proposed solution work?

-The solution involves creating a LUKS-encrypted disk image containing the contents of the original OCI image, and then creating a new OCI image that includes this encrypted disk image and the parameters needed to launch a trusted execution environment with libkrun.
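
As a rough illustration of the "parameters" half of that image, the sketch below models a workload configuration that could travel alongside the encrypted disk image. It is a minimal sketch under stated assumptions: the class name, every field name, and the example values are hypothetical and are not taken from the talk or from any real tool's file format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class WorkloadConfig:
    """Hypothetical TEE launch parameters stored next to the encrypted disk."""

    workload_id: str      # identifier the attestation server knows (assumed field)
    tee: str              # TEE technology label, e.g. "sev" (assumed values)
    cpus: int             # vCPUs for the TEE (assumed field)
    ram_mib: int          # guest memory in MiB (assumed field)
    attestation_url: str  # where the workload asks for its disk key (assumed field)


def to_json(cfg: WorkloadConfig) -> str:
    """Serialize the configuration deterministically so it can be stored in an image."""
    return json.dumps(asdict(cfg), sort_keys=True)


def from_json(text: str) -> WorkloadConfig:
    """Rebuild the configuration from its JSON form."""
    return WorkloadConfig(**json.loads(text))


example = WorkloadConfig(
    workload_id="demo-workload",
    tee="sev",
    cpus=2,
    ram_mib=2048,
    attestation_url="https://attestation.example.invalid",
)
```

Keeping the parameters in one small, self-describing document is what lets the image stay self-contained: the runtime needs nothing beyond the image itself to know how to launch the workload.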

How is the confidential workload protected?

-The confidential workload's memory is encrypted and integrity-protected by the hardware, and the disk image is LUKS-encrypted and only unlocked and mounted inside the trusted execution environment, preventing the host from accessing sensitive data.

What is the role of the attestation server?

-The attestation server stores the expected measurements for registered confidential workloads. It verifies the attestation from the workload's trusted execution environment and provides the encryption key to unlock the disk image if the measurement matches.
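
The check described above can be sketched as a small in-memory registry. This is a toy model, not the actual attestation server: the class and method names are hypothetical, and a plain SHA-256 digest stands in for the hardware-generated, signed measurement that a real TEE would report.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Optional, Tuple


def measure(initial_memory: bytes) -> bytes:
    """Stand-in for the hardware measurement of the initial memory state."""
    return hashlib.sha256(initial_memory).digest()


class AttestationRegistry:
    """Toy stand-in for an attestation server (hypothetical API)."""

    def __init__(self) -> None:
        # workload_id -> (expected measurement, disk passphrase)
        self._workloads: Dict[str, Tuple[bytes, bytes]] = {}

    def register(self, workload_id: str, expected_measurement: bytes) -> bytes:
        """Record the expected measurement and return a fresh disk passphrase."""
        passphrase = secrets.token_bytes(32)
        self._workloads[workload_id] = (expected_measurement, passphrase)
        return passphrase

    def attest(self, workload_id: str, reported: bytes) -> Optional[bytes]:
        """Release the passphrase only if the reported measurement matches."""
        entry = self._workloads.get(workload_id)
        if entry is None:
            return None
        expected, passphrase = entry
        # Constant-time comparison avoids leaking how many bytes matched.
        if hmac.compare_digest(expected, reported):
            return passphrase
        return None
```

The key point the sketch captures is ordering: the disk key never reaches the workload until the measurement of its initial memory state has been verified, so a tampered launch gets no key and cannot unlock the disk.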

How does this solution differ from Kata Containers?

-Kata Containers can run multiple containers in the same VM, while this solution intends to run one container per trusted execution environment by design. This solution aims to provide confidential computing guarantees with a smaller stack addition.

What are the advantages of this solution for specific deployment scenarios?

-For single-container cloud deployments or Function-as-a-Service scenarios, this solution provides a lower footprint and lower TCO. For edge or embedded deployments, it allows meeting the confidential computing requirements with a minimal addition to the existing container infrastructure.

Can this solution coexist with other virtualization technologies like KVM or VirtualBox?

-Yes, this solution can coexist on the same host with other containers running different runtimes, but by design, it does not support nesting trusted execution environments.

What is the purpose of the entrypoint in the confidential workload image?

-The entrypoint is a binary that displays a message if someone attempts to run the OCI image without specifying the libkrun-based runtime. It serves as a safeguard against inadvertently running the confidential workload without the proper runtime.
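
The behaviour of such a safeguard can be sketched in a few lines. This is only an illustration of the idea: the real entrypoint is a binary, and the message text, the function name, and the non-zero exit status used here are assumptions rather than details from the talk.

```python
import sys
from typing import TextIO

# Hypothetical wording; the actual message shown by the image is not quoted in the summary.
MESSAGE = (
    "This image contains a confidential workload and must be started "
    "with a runtime that can launch a trusted execution environment."
)


def main(stream: TextIO = sys.stderr) -> int:
    """Print the warning and return a failing exit status (assumed to be 1)."""
    print(MESSAGE, file=stream)
    return 1
```

A regular runtime that executes this entrypoint only ever shows the warning, so the encrypted disk image is never treated as an ordinary root filesystem by mistake. A script wrapping this function would typically end with `sys.exit(main())`.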

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

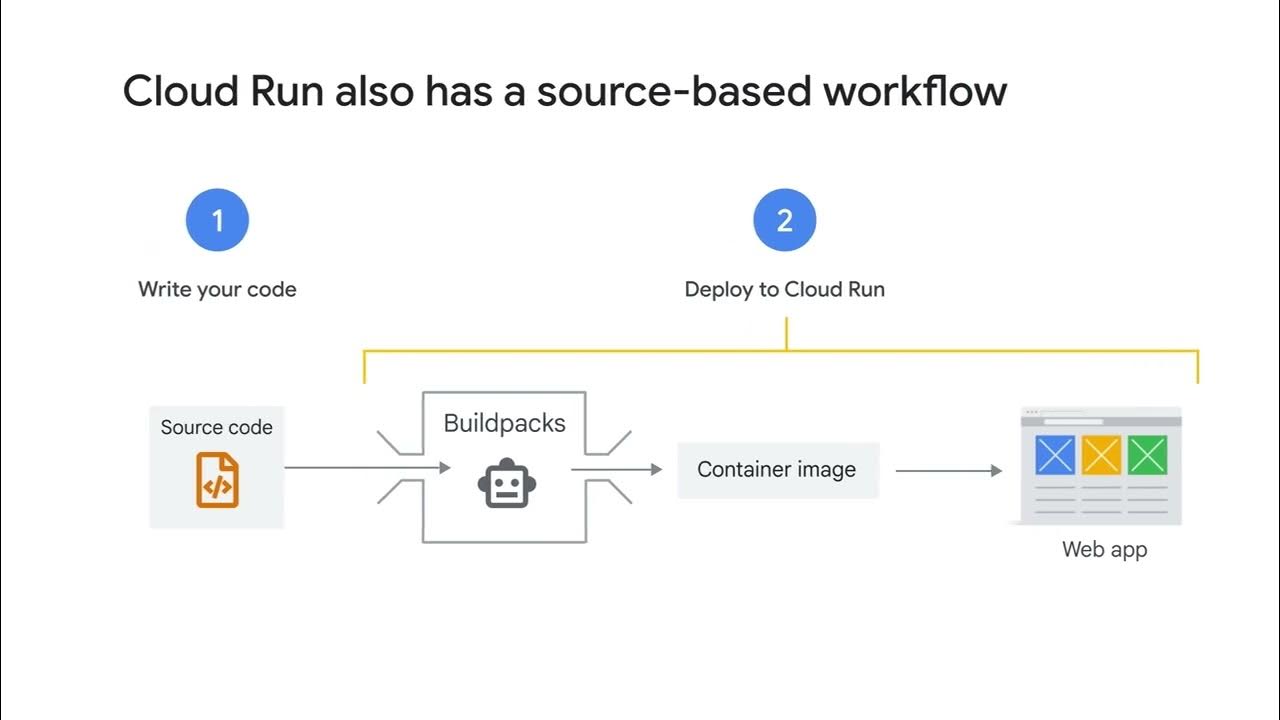

Managed serverless computing with Cloud Run

What runs ChatGPT? Inside Microsoft's AI supercomputer | Featuring Mark Russinovich

Azure Update - 19th April 2024

48. OCR A Level (H446) SLR9 - 1.3 Symmetric & asymmetric encryption

DevSpace - Development Environments in Kubernetes

100+ Docker Concepts you Need to Know

5.0 / 5 (0 votes)