Drone flight algorithm explained

Summary

TLDR: Team Dragonfly presents an algorithm for the MathWorks Minidrone Competition that uses image processing to identify and follow a path based on color thresholds. They calibrate the thresholds with MATLAB's Color Thresholder app, then flip and rotate the resulting image before analyzing and classifying the figures on the path. The path planning subsystem controls the drone using the centroid, eccentricity, and orientation of the largest figure. Challenges included managing drone movement with a limited number of controllable degrees of freedom and reducing noise in the movement signals. They reduced computational cost by using built-in Simulink blocks instead of custom functions.

Takeaways

- 🐉 Team Dragonfly presented an algorithm for the MathWorks Minidrone Competition.

- 🎨 The image processing subsystem separates the RGB channels and uses a threshold filter to identify the path based on track colors.

- 🌈 MATLAB's Color Thresholder app makes the thresholds easy to calibrate in real environments.

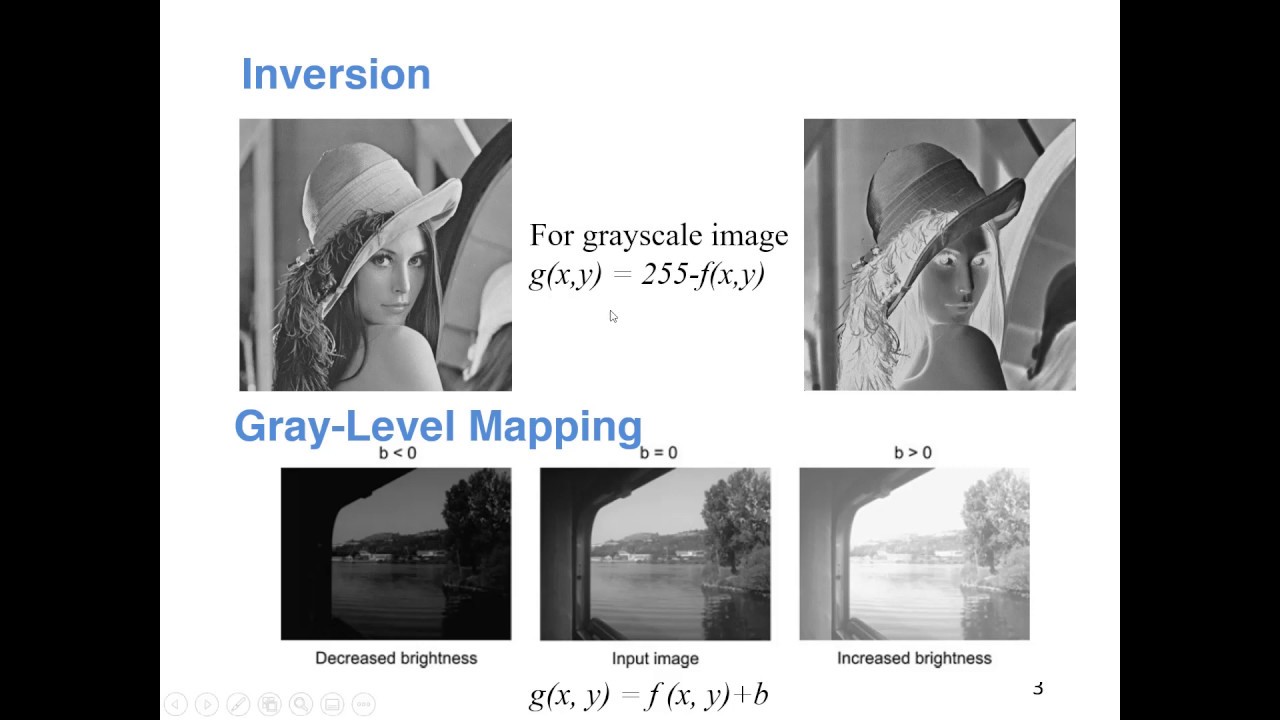

- 🔄 The image is flipped over the y-axis and its matrix is transposed, rotating it by 90 degrees.

- 🔍 Blob analysis identifies and classifies the figures in the path, extracting their centroid, orientation, and eccentricity.

- 📍 The path planning subsystem uses the largest figure's information to control the drone's 4 controllable degrees of freedom: x, y, z, and yaw.

- 🤖 A state machine is created to determine if the drone should move or has reached the target.

- 🚫 The movement-vector signal is noisy and discontinuous, so it is saturated and filtered to smooth the discontinuities and reduce noise.

- 🗺️ A transformation is needed to make the local coordinates of the movement vector compatible with the global coordinates used by the control system.

- 🛠️ The main challenge was reducing computational cost in simulations to prevent performance saturation when using the real drone.

- 🛑 Switching from custom functions to built-in Simulink blocks significantly reduced the computational cost.
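The move-or-arrived decision in the takeaways above can be pictured as a two-state machine. The presentation does not say what triggers the transition, so this minimal Python sketch assumes a simple distance test against a target position; `FlightState`, `next_state`, and `ARRIVAL_TOLERANCE_M` are hypothetical names, and the tolerance value is illustrative.

```python
import math
from enum import Enum


class FlightState(Enum):
    MOVE = "move"        # keep following the path
    ARRIVED = "arrived"  # target reached: stop (e.g. hover or land)


# Hypothetical arrival tolerance in metres (not a value from the presentation).
ARRIVAL_TOLERANCE_M = 0.05


def next_state(state, position, target, tol=ARRIVAL_TOLERANCE_M):
    """Return the next state from the drone's (x, y) position and the target (x, y)."""
    if state is FlightState.ARRIVED:
        return FlightState.ARRIVED  # terminal state: once arrived, stay arrived
    distance = math.hypot(target[0] - position[0], target[1] - position[1])
    return FlightState.ARRIVED if distance <= tol else FlightState.MOVE
```

For example, `next_state(FlightState.MOVE, (0.98, 0.0), (1.0, 0.0))` returns `FlightState.ARRIVED`, because the remaining distance (0.02 m) is within the tolerance.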

Q & A

What is the main focus of the Dragonfly team's presentation?

-The main focus of the Dragonfly team's presentation is the algorithm they used to solve the problem posed by the MathWorks Minidrone Competition, in particular its image processing and path planning subsystems.

How does the image processing subsystem begin?

-The image processing subsystem begins by separating the red, green, and blue channels of the image, which are then processed by a threshold filter to identify the path the drone must follow based on the track's colors.
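A minimal Python sketch of the channel-separation and threshold step, assuming a red track and illustrative 0-255 bounds for each channel (in practice the bounds would come from calibrating with MATLAB's Color Thresholder app). Combining the three per-channel tests with a logical AND is an assumption about how the filtered components are merged; `threshold_mask` and the `*_RANGE` constants are hypothetical.

```python
# Hypothetical per-channel bounds (min, max) on 0-255 values, assuming a red track.
RED_RANGE = (200, 255)
GREEN_RANGE = (0, 80)
BLUE_RANGE = (0, 80)


def threshold_mask(image, r_range=RED_RANGE, g_range=GREEN_RANGE, b_range=BLUE_RANGE):
    """Return a binary mask: 1 where every channel lies inside its range, else 0.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    def inside(value, bounds):
        low, high = bounds
        return low <= value <= high

    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:  # separate the three channels of each pixel
            keep = inside(r, r_range) and inside(g, g_range) and inside(b, b_range)
            mask_row.append(1 if keep else 0)
        mask.append(mask_row)
    return mask
```

For example, `threshold_mask([[(230, 20, 30), (40, 40, 40)], [(10, 200, 10), (255, 60, 50)]])` returns `[[1, 0], [0, 1]]`: only the two reddish pixels survive.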

What is the purpose of the threshold filter in the image processing?

-The threshold filter in the image processing subsystem is used to identify the path the drone must follow based on the colors of the track.

What steps are taken after the color components are filtered?

-After filtering, the color components are merged into a new image, which is then converted into a binary image. The image is then flipped over the y-axis and its matrix is transposed, rotating it by 90 degrees.
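The flip-and-transpose step can be sketched in Python on a small matrix. A transpose on its own is a reflection about the main diagonal, not a rotation; applied after a left-right flip, as described here, the pair yields a 90-degree counterclockwise rotation. The function names are hypothetical.

```python
def flip_over_y_axis(matrix):
    """Mirror the image left to right (reverse the column order of every row)."""
    return [list(reversed(row)) for row in matrix]


def transpose(matrix):
    """Swap rows and columns."""
    return [list(col) for col in zip(*matrix)]


def reorient(matrix):
    """Flip over the y-axis, then transpose: a 90-degree counterclockwise rotation."""
    return transpose(flip_over_y_axis(matrix))
```

For example, `reorient([[1, 2, 3], [4, 5, 6]])` returns `[[3, 6], [2, 5], [1, 4]]`, the same result as rotating the matrix 90 degrees counterclockwise. Reversing the order (transpose first, then flip) would rotate clockwise instead.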

What is the role of the loop analysis in the image processing subsystem?

-Blob analysis identifies and classifies the different figures that make up the path. It orders these figures by area and extracts the centroid, orientation, and eccentricity of the largest one.
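What blob analysis computes for the largest figure can be sketched in plain Python: group foreground pixels into 4-connected blobs, keep the one with the most pixels, and derive centroid, orientation, and eccentricity from second-order central moments (the ellipse with the same moments as the blob). This is a stand-in for MATLAB's Blob Analysis block, not its implementation; MATLAB's own moment normalization and angle sign convention may give slightly different numbers. Here x is the column index and y the row index, so angles are measured in image coordinates with y pointing down. All function names are hypothetical.

```python
import math
from collections import deque


def connected_blobs(mask):
    """Group foreground pixels (value 1) into 4-connected blobs of (row, col) pairs."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r0 in range(rows):
        for c0 in range(cols):
            if mask[r0][c0] != 1 or seen[r0][c0]:
                continue
            blob, queue = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:  # breadth-first flood fill
                r, c = queue.popleft()
                blob.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] == 1 and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            blobs.append(blob)
    return blobs


def blob_features(blob):
    """Centroid (x, y), orientation (radians) and eccentricity from central moments."""
    n = len(blob)
    xs = [c for _, c in blob]  # x = column index
    ys = [r for r, _ in blob]  # y = row index
    cx, cy = sum(xs) / n, sum(ys) / n
    mu20 = sum((x - cx) ** 2 for x in xs) / n
    mu02 = sum((y - cy) ** 2 for y in ys) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in zip(xs, ys)) / n
    # Eigenvalues of the covariance matrix, proportional to the squared ellipse axes.
    half_sum = (mu20 + mu02) / 2
    half_diff = math.sqrt(((mu20 - mu02) / 2) ** 2 + mu11 ** 2)
    major, minor = half_sum + half_diff, half_sum - half_diff
    orientation = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    eccentricity = math.sqrt(1 - minor / major) if major > 0 else 0.0
    return (cx, cy), orientation, eccentricity


def largest_blob_features(mask):
    """Features of the blob with the largest area (most pixels), or None if empty."""
    blobs = connected_blobs(mask)
    if not blobs:
        return None
    return blob_features(max(blobs, key=len))
```

A horizontal five-pixel line yields centroid (3.0, 1.0), orientation 0, and eccentricity 1 (a perfectly elongated ellipse), while a 2 by 2 square has eccentricity 0.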

How is the path planning subsystem connected to the image processing subsystem?

-The path planning subsystem receives information about the largest figure detected by the image processing subsystem, including its centroid coordinates, eccentricity, and angular orientation, and uses it to control the drone.

What are the four components of the vector 'u' created by the team?

-The vector 'u' created by the team has four components, x, y, z (altitude), and yaw, which correspond to the drone's four controllable degrees of freedom.
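One way the blob features could be turned into the four-component vector u is sketched below in Python. The presentation does not give the control law, so the image size, gain, eccentricity threshold, the correspondence between image axes and drone axes, and the proportional mapping itself are all hypothetical; z is left at zero (hold altitude) so that every component is a local increment.

```python
# Hypothetical values: the presentation does not give image size, gains or thresholds.
IMAGE_WIDTH, IMAGE_HEIGHT = 160, 120  # pixels
STEP_GAIN = 0.002                     # metres of motion per pixel of centroid offset
MIN_LINE_ECCENTRICITY = 0.8           # below this, the blob is not treated as a line


def movement_vector(centroid, orientation, eccentricity,
                    width=IMAGE_WIDTH, height=IMAGE_HEIGHT):
    """Build u = [x, y, z, yaw] as local increments from the largest blob's features."""
    cx, cy = centroid
    # Move towards the blob in proportion to its centroid's offset from the image centre.
    x_step = STEP_GAIN * (cx - width / 2)
    y_step = STEP_GAIN * (cy - height / 2)
    # The orientation of a nearly circular blob is poorly defined, so only
    # turn towards it when the blob is elongated (line-like).
    yaw_step = orientation if eccentricity >= MIN_LINE_ECCENTRICITY else 0.0
    z_step = 0.0  # hold altitude
    return [x_step, y_step, z_step, yaw_step]
```

Gating yaw on eccentricity reflects the fact that a round blob has no meaningful direction, while a line segment does.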

What challenges did the team face in controlling the drone's movement?

-The movement-vector signal was noisy, unpredictable, and discontinuous, so the team applied saturation and filtering to smooth out the discontinuities and reduce the noise.
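Saturation followed by smoothing can be sketched in Python as a per-component clamp and a first-order low-pass filter (an exponential moving average). The team's actual filter is not described; `SmoothedCommand`, the limits, and `alpha` are hypothetical.

```python
def saturate(value, limit):
    """Clamp value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


class SmoothedCommand:
    """Saturate each component of u, then smooth it with a first-order low-pass filter.

    alpha in (0, 1]: smaller values smooth more strongly but react more slowly.
    """

    def __init__(self, limits, alpha=0.2):
        self.limits = list(limits)
        self.alpha = alpha
        self.state = [0.0] * len(self.limits)  # filter output starts at rest

    def update(self, u):
        clipped = [saturate(v, lim) for v, lim in zip(u, self.limits)]
        # Move each output a fraction alpha of the way towards the clipped input.
        self.state = [s + self.alpha * (c - s) for s, c in zip(self.state, clipped)]
        return list(self.state)
```

With `alpha=0.5`, a command of `[1.0, -0.02, 3.0, 0.2]` and limits `[0.1, 0.1, 0.1, 0.5]` is first clamped to `[0.1, -0.02, 0.1, 0.2]`, and the first output is half of that, `[0.05, -0.01, 0.05, 0.1]`.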

How does the team ensure the compatibility between local and global coordinate frames?

-The team ensures compatibility between the local and global coordinate frames by adding the drone's current position to the movement vector, which yields a signal the drone can follow to complete its path.
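The frame change described above amounts to adding the local step to the drone's current pose. This Python sketch does that and also, as an assumption beyond the source, rotates the horizontal part of the step by the current yaw, which is needed only when the drone's body axes are not aligned with the global axes; with `rotate_by_yaw=False` or a yaw of 0 it reduces to plain addition. `local_to_global` and the `[x, y, z, yaw]` pose layout are hypothetical.

```python
import math


def local_to_global(u, pose, rotate_by_yaw=True):
    """Turn a local movement vector u = [dx, dy, dz, dyaw] into a global set-point.

    pose = [x, y, z, yaw] of the drone in the global frame (yaw in radians).
    """
    dx, dy, dz, dyaw = u
    x, y, z, yaw = pose
    if rotate_by_yaw:
        # Express the body-frame horizontal step in the global frame (2-D rotation).
        dx, dy = (dx * math.cos(yaw) - dy * math.sin(yaw),
                  dx * math.sin(yaw) + dy * math.cos(yaw))
    return [x + dx, y + dy, z + dz, yaw + dyaw]
```

With the drone facing along the global y-axis (yaw of pi/2), a forward step of `[1.0, 0.0, 0.0, 0.0]` moves the set-point along y rather than x.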

What was one of the main lessons learned by the Dragonfly team?

-One of the main lessons learned by the Dragonfly team was the importance of identifying shapes in the drone's images, processing them, and deciding on a course of action based on what was identified.
