Scraping Tokopedia Product Review Data Using SELENIUM & BEAUTIFULSOUP

Summary

TLDR: In this video, the presenter demonstrates how to scrape product reviews from the Tokopedia website using the BeautifulSoup and Selenium libraries. The tutorial walks through identifying the target data (the reviews), configuring the scraping tools, and handling errors. The presenter explains how to extract review data, navigate through multiple review pages, and save the results to a CSV file with Python. The video also covers customization options, giving viewers a practical guide to automating review collection for Tokopedia stores.

Takeaways

- 😀 The video explains how to scrape product reviews from Tokopedia, focusing on extracting customer feedback from a specific store page.

- 😀 Before scraping, identify the URL of the target store; in this case, a laptop store on Tokopedia.

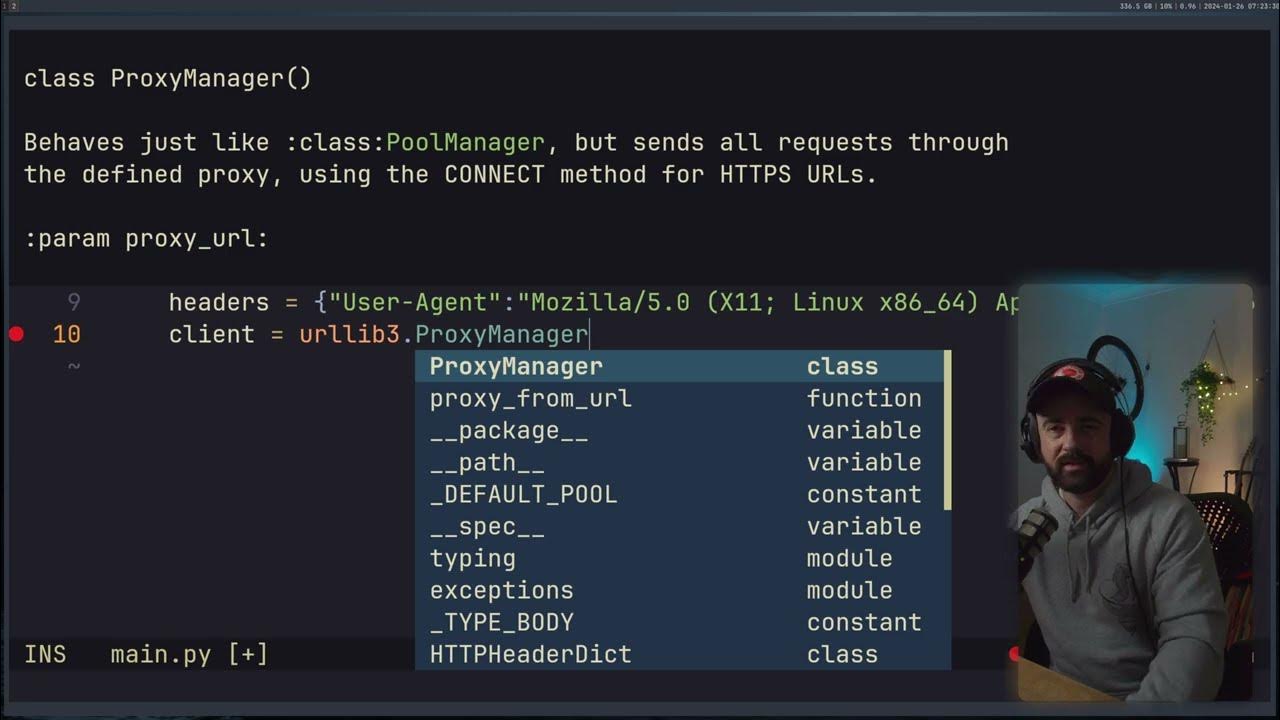

- 😀 The first step is to import the required libraries, such as BeautifulSoup, and set up a Selenium web driver to navigate the Tokopedia site.

- 😀 After accessing the store page, the script extracts review data from the HTML structure, specifically looking for elements containing customer reviews.

- 😀 The script handles multiple review pages by simulating clicks on the 'next' button, allowing the extraction of data from multiple pages.

- 😀 Error handling is incorporated to manage cases where a review might be missing or empty, ensuring the script continues running smoothly.

- 😀 The script calls `time.sleep()` to give each page time to load before the next scraping step, avoiding errors caused by loading delays.

- 😀 A variable is created to hold the scraped reviews, and for each review the script checks the relevant HTML elements for the review text or comment.

- 😀 Once the reviews are collected, the data is stored in a list, which is then used to create a Pandas DataFrame for easier manipulation and analysis.

- 😀 Finally, the scraped data is exported to a CSV file named 'Tokopedia.csv' for local storage and future use.

Q & A

What is the purpose of this video tutorial?

-The tutorial demonstrates how to scrape product reviews from Tokopedia using Python, specifically focusing on extracting review data from a store's product page and saving it to a CSV file for further analysis.

What libraries are used in the scraping process?

-The tutorial uses the Selenium library for automating web interactions (like navigating the page) and BeautifulSoup for parsing the HTML and extracting the desired review data.
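A minimal sketch of how the two libraries could fit together, assuming Selenium 4 and a local Chrome install: Selenium loads the page (so its JavaScript runs) and returns the rendered HTML, which BeautifulSoup can then parse. The function name `fetch_store_html` and the `wait_seconds` default are illustrative, not taken from the video.

```python
import time


def fetch_store_html(url: str, wait_seconds: float = 3.0) -> str:
    """Open `url` in Chrome, pause, and return the rendered page source."""
    # Imported inside the function so the rest of a script can be loaded
    # (and tested) on machines without Selenium installed.
    from selenium import webdriver

    driver = webdriver.Chrome()  # Selenium 4.6+ can locate the driver binary itself
    try:
        driver.get(url)
        time.sleep(wait_seconds)  # crude fixed pause for JavaScript-rendered content
        return driver.page_source
    finally:
        driver.quit()  # always close the browser, even if loading fails
```

Passing the result to `BeautifulSoup(html, "html.parser")` gives a parse tree to search for review elements. Because this helper closes the browser on return, it suits a single page; clicking through several review pages requires keeping the same `driver` open across clicks.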

How does the script handle pagination while scraping reviews?

-The script automates clicking the 'Next' button to load additional review pages. A loop scrapes each page in turn, stopping after a fixed number of pages (3 in this case).
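The pagination loop can be sketched independently of Selenium by passing in three callables: one that returns the current page's HTML, one that parses it, and one that clicks 'Next'. This structure is an illustrative assumption, not the video's code. With Selenium, `get_html` could be `lambda: driver.page_source`, and `click_next` a small function that locates the Next button with `driver.find_element(...)`, calls `.click()`, returns True, and returns False when Selenium raises `NoSuchElementException`; the button's selector is whatever the page's HTML shows.

```python
from typing import Callable, List


def scrape_pages(
    get_html: Callable[[], str],
    parse: Callable[[str], List[str]],
    click_next: Callable[[], bool],
    max_pages: int = 3,
) -> List[str]:
    """Collect reviews from up to `max_pages` pages.

    `click_next` should move to the next page and return False when there
    is no further page (for example, the 'Next' button is missing).
    """
    collected: List[str] = []
    for page in range(1, max_pages + 1):
        collected.extend(parse(get_html()))
        # Stop without clicking after the last allowed page, or when
        # there is no next page to go to.
        if page == max_pages or not click_next():
            break
    return collected


# Fake three-page store used to exercise the loop without a browser.
pages = ["a|b", "c", "d|e"]
state = {"index": 0}


def fake_get_html() -> str:
    return pages[state["index"]]


def fake_click_next() -> bool:
    if state["index"] + 1 < len(pages):
        state["index"] += 1
        return True
    return False


print(scrape_pages(fake_get_html, lambda html: html.split("|"), fake_click_next, max_pages=2))
# → ['a', 'b', 'c']
```

Raising `max_pages` is all that is needed to scrape more pages; the loop also ends early if the store has fewer pages than the limit.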

What is the importance of inspecting the page's HTML structure?

-Inspecting the HTML structure is crucial for identifying the correct elements that contain the review data. This allows the script to target specific tags, such as `span` tags with a specific ID, to extract the review content accurately.
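A minimal BeautifulSoup sketch of targeting review elements once they have been identified with the browser's Inspect tool. The markup and the `data-testid="review-text"` attribute are placeholders, not Tokopedia's actual HTML. The video mentions `span` tags with a specific ID; since an HTML `id` is meant to be unique on a page, repeated list items are more commonly marked with a class or a data attribute, so substitute whatever the live page shows.

```python
from typing import List

from bs4 import BeautifulSoup

# Hypothetical markup standing in for one page of store reviews.
SAMPLE_HTML = """
<div id="reviews">
  <article><span data-testid="review-text">Great laptop, fast shipping.</span></article>
  <article><span data-testid="review-text">Matches the description.</span></article>
  <article><span data-testid="review-text">   </span></article>
</div>
"""

# Placeholder selector: replace with the attribute seen when inspecting the page.
REVIEW_ATTRS = {"data-testid": "review-text"}


def extract_reviews(html: str) -> List[str]:
    """Return the non-empty review texts found in one page of HTML."""
    soup = BeautifulSoup(html, "html.parser")
    reviews = []
    for span in soup.find_all("span", attrs=REVIEW_ATTRS):
        text = span.get_text(strip=True)
        if text:  # skip reviews that contain only whitespace
            reviews.append(text)
    return reviews


print(extract_reviews(SAMPLE_HTML))
# → ['Great laptop, fast shipping.', 'Matches the description.']
```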

Why is error handling implemented in the script?

-Error handling is used to ensure the scraping process continues smoothly even if some reviews are empty or missing. For example, if a review field is empty or a page contains an error, the script handles the situation and moves on to the next review or page.

How does the script ensure it doesn't stop if an error occurs on a page?

-The script uses a `try-except` block to catch errors. If an error occurs (e.g., a missing review), the script continues the loop and moves on to the next page or review without stopping the entire process.
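A sketch of the per-review `try-except` idea with BeautifulSoup, using hypothetical markup and class names. When a review card has no text element, `find()` returns `None`, and calling `.get_text()` on it raises `AttributeError`; catching that lets the loop record the gap and continue.

```python
from typing import List, Optional

from bs4 import BeautifulSoup

# Hypothetical markup: the second card is a photo-only review with no text span.
SAMPLE_HTML = """
<article class="review-card"><span class="review-text">Works well.</span></article>
<article class="review-card"><img src="photo.jpg" alt=""></article>
<article class="review-card"><span class="review-text">Battery is OK.</span></article>
"""


def reviews_with_gaps(html: str) -> List[Optional[str]]:
    """Return one entry per review card, using None where the text is missing."""
    soup = BeautifulSoup(html, "html.parser")
    results: List[Optional[str]] = []
    for card in soup.find_all("article", class_="review-card"):
        try:
            span = card.find("span", class_="review-text")
            # span is None for a card without text, so this line raises AttributeError.
            results.append(span.get_text(strip=True))
        except AttributeError:
            results.append(None)  # record the gap and move on to the next card
    return results


print(reviews_with_gaps(SAMPLE_HTML))
# → ['Works well.', None, 'Battery is OK.']
```

An explicit `if span is None:` check achieves the same result and avoids hiding unrelated `AttributeError`s; the `try-except` form is shown because it mirrors the approach described here.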

What happens if a user wants to scrape more than 3 pages?

-If the user wants to scrape more pages, they can adjust the script to increase the number of pages in the loop. The script will automatically click 'Next' to load and scrape the additional pages.

How is the scraped data stored after extraction?

-The scraped review data is stored in a Pandas DataFrame, a tabular structure with one row per review. The script then exports this DataFrame to a CSV file (`Tokopedia.csv`) for easy access and further analysis.
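A minimal sketch of the export step, assuming pandas is installed. The sample reviews stand in for the list the scraping loop fills, and the `Review` column name is an assumption.

```python
import pandas as pd

# Hypothetical scraped reviews; in the real script this list comes from the loop.
reviews = ["Great laptop, fast shipping.", "Matches the description."]

# One row per review under an assumed column name.
df = pd.DataFrame({"Review": reviews})

# index=False stops pandas from writing the row index as an extra unnamed column.
df.to_csv("Tokopedia.csv", index=False)
print(df)
```

Reviews containing commas are quoted automatically by `to_csv`, so `pd.read_csv("Tokopedia.csv")` reads them back as single fields.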

What is the role of the `time.sleep()` function in the script?

-The `time.sleep()` function is used to pause the script for a few seconds between actions, ensuring that the page has enough time to load before the script attempts to extract data. This helps to avoid errors due to incomplete page loading.
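A fixed `time.sleep()` either wastes time on a fast connection or is too short on a slow one. A common alternative is to poll for a condition with a timeout; the standard-library sketch below illustrates the idea with a hypothetical helper, `wait_until`. Selenium ships this pattern itself as `WebDriverWait(driver, timeout).until(...)` with conditions from `selenium.webdriver.support.expected_conditions`, which is usually the better choice in a real scraper.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 10.0, interval: float = 0.5) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Returns True as soon as the condition holds, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)  # short pause between checks instead of one long sleep
```

With Selenium, a condition such as `lambda: "review" in driver.page_source` (the marker text being an assumption) would let the script continue as soon as reviews appear rather than after a fixed delay.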

Can the script handle stores with reviews that contain non-text elements?

-Yes, the script is designed to filter out non-text elements and only extract valid review text. It can identify when a review contains other components (e.g., an empty review or a non-text component) and handle them accordingly without disrupting the scraping process.
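One way to apply a "valid text only" rule after extraction, offered as an assumption rather than the video's exact logic: normalize whitespace and drop anything that ends up empty, such as a photo-only review whose text element is blank.

```python
from typing import List


def clean_reviews(raw: List[str]) -> List[str]:
    """Collapse runs of whitespace and drop reviews with no text left."""
    cleaned = []
    for text in raw:
        normalized = " ".join(text.split())  # merge newlines, tabs, and repeated spaces
        if normalized:
            cleaned.append(normalized)
    return cleaned


print(clean_reviews(["  Good\n laptop ", "", "\t", "OK"]))
# → ['Good laptop', 'OK']
```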
