CNN Architecture | LeNet-5 Architecture

Summary

TLDR: In this video, the presenter walks through the architecture of convolutional neural networks (CNNs), explaining fundamental concepts such as convolution layers, pooling, and activation functions. The presenter builds up a complete CNN using the LeNet-5 architecture, originally developed in 1998, as the example. The video also covers design principles that can be adapted to create custom CNN architectures for different applications, and explains the role of the individual layers, filters, and activation functions within a CNN, which makes it a useful foundation for understanding deep learning models.

Takeaways

- 😀 LeNet is one of the earliest CNN architectures, designed by Yann LeCun in 1998 for optical character recognition (OCR) tasks, like zip code recognition for the US Postal Service.

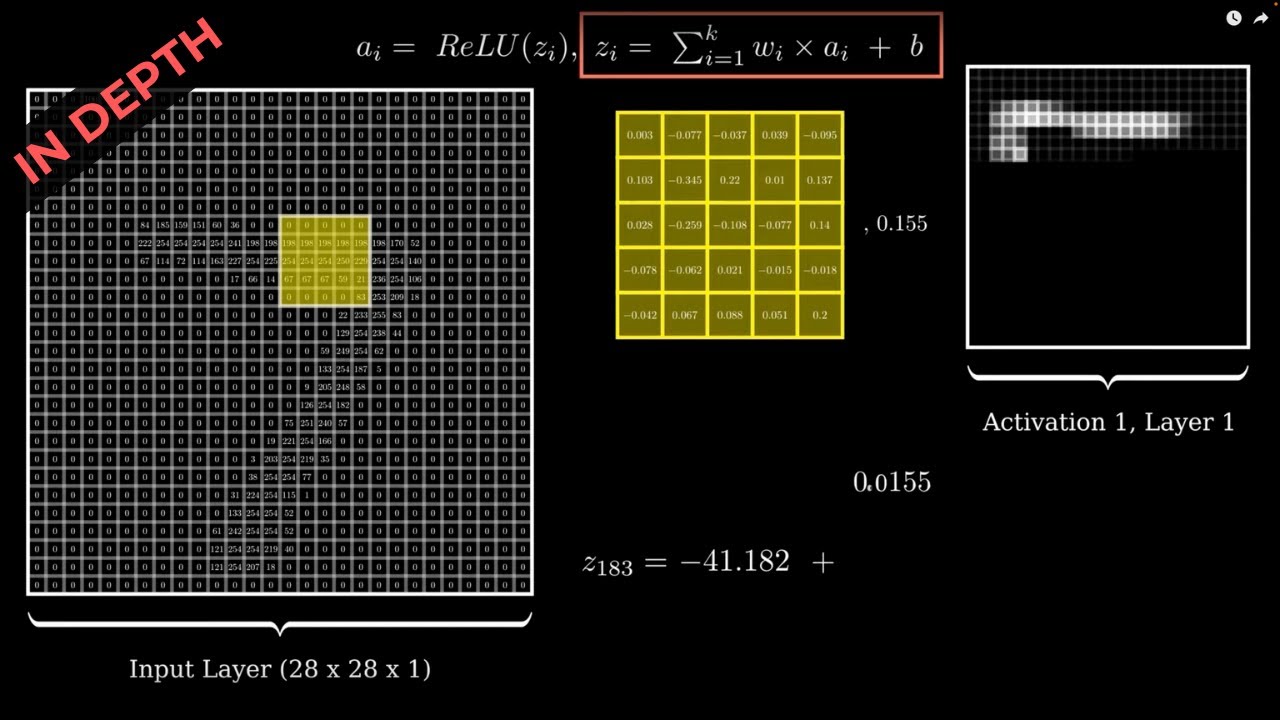

- 😀 CNNs consist of several key components: input layer, convolutional layers, pooling layers, fully connected layers, and an output layer. Each component plays a specific role in feature extraction and classification.

- 😀 The input layer in LeNet takes images resized to 32x32 pixels. The original LeNet-5 works on single-channel grayscale images; accepting RGB images means changing the first layer to take 3 input channels.

- 😀 The convolutional layers in LeNet use filters (kernels) to extract features from the image. LeNet's first convolutional layer uses 6 filters of size 5x5, and the second uses 16 filters of size 5x5.

- 😀 Pooling layers, such as average pooling in LeNet, reduce the spatial dimensions of the feature maps, which lowers the computation in later layers and can help limit overfitting. In LeNet, pooling is applied with a 2x2 window and a stride of 2, halving the width and height.

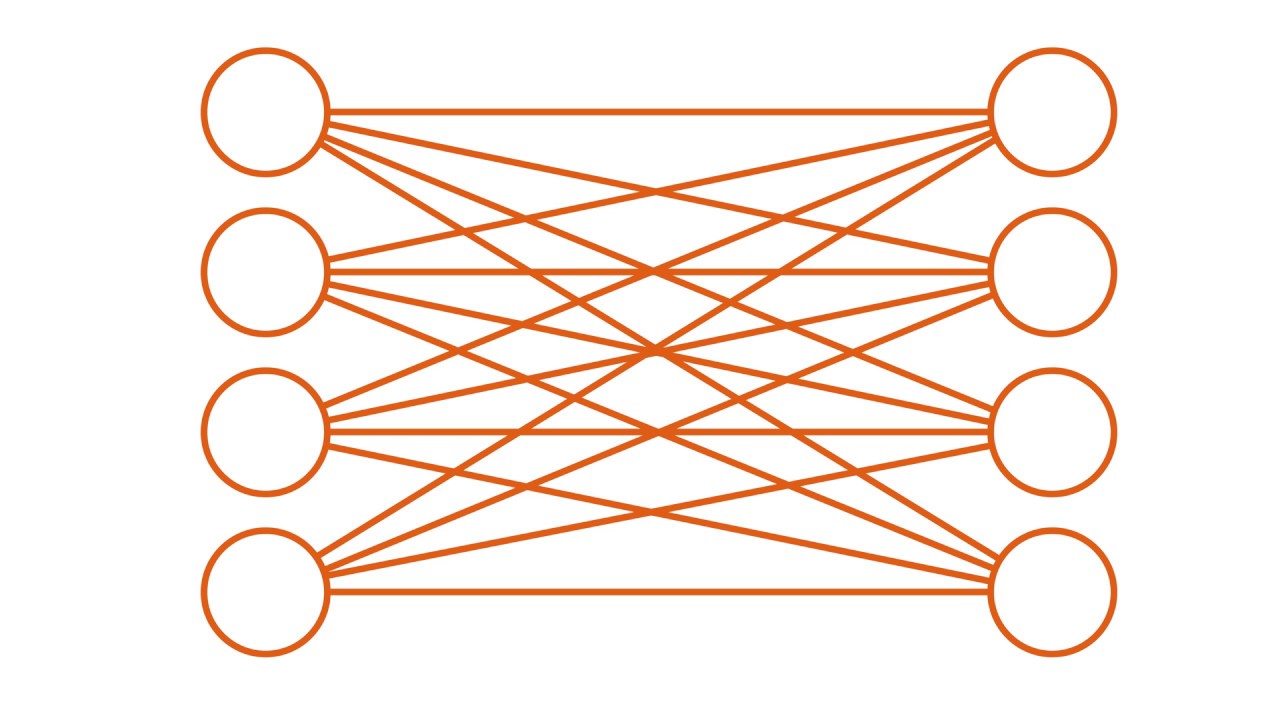

- 😀 Before the fully connected layers, the extracted feature maps are flattened into a single vector, which is then passed through neurons for classification. LeNet has two fully connected layers with 120 and 84 neurons respectively, followed by the output layer.

- 😀 Activation functions like tanh were used in earlier CNNs like LeNet. Modern CNNs typically use ReLU (Rectified Linear Unit) activation functions for faster convergence and better performance.

- 😀 LeNet was highly influential in the development of more complex CNN architectures, leading to the creation of models like AlexNet, VGGNet, and ResNet.

- 😀 The architecture of LeNet can be modified by adjusting parameters like the number of filters, filter sizes, pooling types, and the number of neurons in fully connected layers.

- 😀 LeNet laid the groundwork for solving complex image classification problems, and its principles are still applied today in modern deep learning models used for tasks like object detection and facial recognition.
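The layer sizes listed above can be checked with a little arithmetic. The following is a minimal sketch, not the presenter's code: it assumes a 32x32 single-channel input, no padding, stride-1 convolutions, and 16 filters in the second convolutional layer (the usual LeNet-5 description). The helper names `conv_out` and `lenet5_shapes` are made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after sliding a square window over a square input."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet5_shapes(input_size=32):
    """Return (stage name, height/width, channels) for each stage."""
    shapes = [("input", input_size, 1)]
    s = conv_out(input_size, kernel=5)       # 6 filters of 5x5
    shapes.append(("conv1", s, 6))
    s = conv_out(s, kernel=2, stride=2)      # 2x2 pooling, stride 2
    shapes.append(("pool1", s, 6))
    s = conv_out(s, kernel=5)                # 16 filters of 5x5 (assumed)
    shapes.append(("conv2", s, 16))
    s = conv_out(s, kernel=2, stride=2)      # 2x2 pooling, stride 2
    shapes.append(("pool2", s, 16))
    return shapes


shapes = lenet5_shapes()
for name, size, channels in shapes:
    print(f"{name}: {size}x{size}x{channels}")

# Number of values handed to the first fully connected layer.
_, last_size, last_channels = shapes[-1]
flattened = last_size * last_size * last_channels
print("flattened:", flattened)
```

Under these assumptions the spatial size goes 32, 28, 14, 10, 5, so the flattened vector feeding the 120-neuron layer has 5 x 5 x 16 = 400 values.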

Q & A

What is the main topic of this video?

- The main topic of this video is the architecture of Convolutional Neural Networks (CNNs), with a specific focus on the LeNet-5 architecture, along with CNN concepts like filters, pooling, and fully connected layers.

What is a CNN architecture and why is it important?

- CNN architecture refers to the structured arrangement of layers in a Convolutional Neural Network. It is crucial for tasks like image classification and object detection because it defines how the network processes and extracts features from input images.

What is the significance of the LeNet-5 architecture mentioned in the video?

- LeNet-5 is one of the earliest and most significant CNN architectures, developed by Yann LeCun in 1998. It was pivotal in the development of deep learning, and was applied in particular to handwritten digit recognition for reading postal codes.

What role do filters (kernels) play in CNNs?

- Filters, also known as kernels, are used in convolution layers to detect specific features in the input image, such as edges, textures, or patterns. These filters help extract relevant features from the data, which are then passed through the network.
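As a rough illustration of how a filter responds to a feature, the sketch below slides a hand-written 2x2 vertical-edge kernel over a tiny image. It is an assumption-laden toy: in a real CNN the kernel values are learned rather than chosen by hand, and the function name `conv2d` and the sample data are invented for this example.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and
    return the map of weighted sums, one per kernel position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Dark left half, bright right half: one vertical edge in the middle.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Responds where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The feature map is non-zero only in the middle column, where the window straddles the dark-to-bright boundary, and zero over the flat regions.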

What is the difference between max pooling and average pooling in CNNs?

- Max pooling selects the maximum value from a region of the feature map, whereas average pooling computes the average value. Both techniques help reduce the dimensionality of the feature maps while retaining important features.
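The difference is easy to see on a small feature map. This is a minimal sketch using a 2x2 window with stride 2; the function name `pool2d` and the sample values are assumptions made for illustration.

```python
def pool2d(fmap, size=2, stride=2, mode="max"):
    """Pool a 2D list with a square window, using max or average."""
    if mode == "max":
        reduce = max
    else:
        reduce = lambda values: sum(values) / len(values)
    out = []
    for r in range(0, len(fmap) - size + 1, stride):
        row = []
        for c in range(0, len(fmap[0]) - size + 1, stride):
            window = [fmap[r + i][c + j]
                      for i in range(size) for j in range(size)]
            row.append(reduce(window))
        out.append(row)
    return out


fmap = [
    [1, 3, 2, 0],
    [5, 7, 4, 2],
    [0, 2, 9, 1],
    [4, 6, 3, 3],
]

max_pooled = pool2d(fmap, mode="max")
avg_pooled = pool2d(fmap, mode="avg")
print(max_pooled)  # [[7, 4], [6, 9]]
print(avg_pooled)  # [[4.0, 2.0], [3.0, 4.0]]
```

Both outputs are 2x2, a quarter of the original area. Max pooling keeps the strongest response in each window, while average pooling smooths the window into a single mean value.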

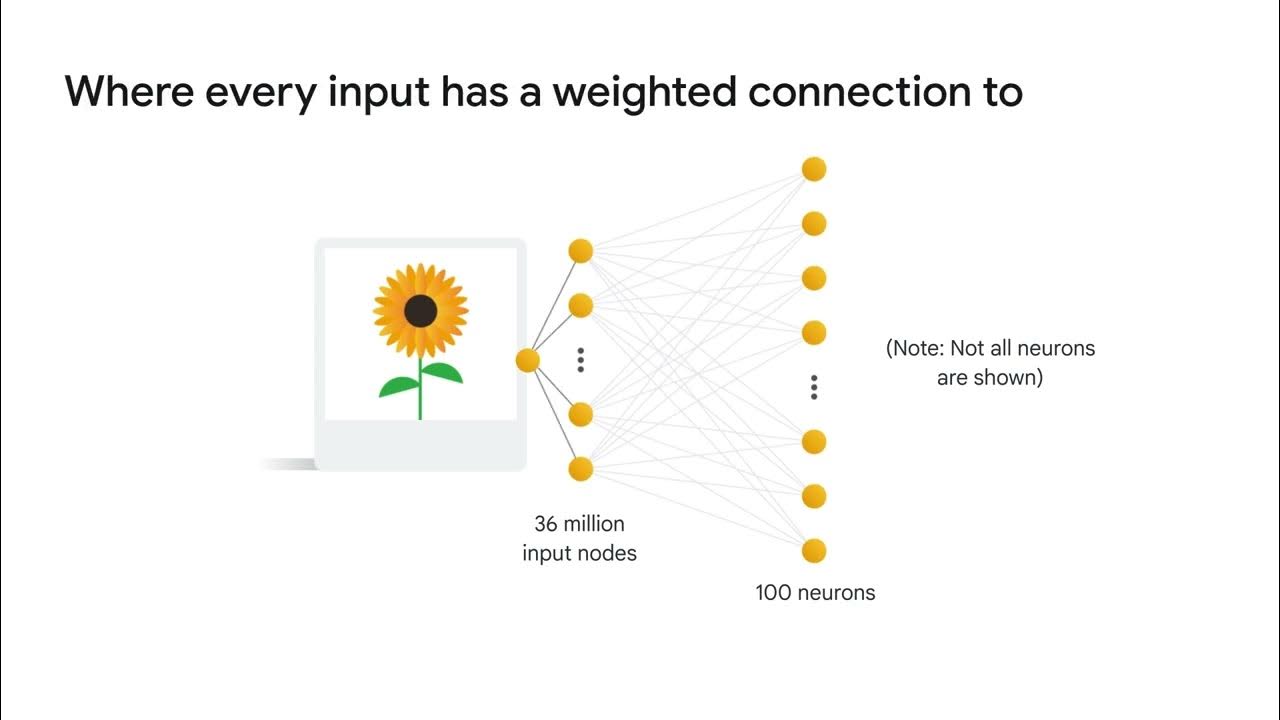

What is the purpose of the fully connected layer in CNN architecture?

- The fully connected layer in a CNN combines the features extracted by the previous layers to make predictions. It connects every neuron in one layer to every neuron in the next layer, helping the network learn complex patterns and classifications.
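"Every neuron connected to every neuron" amounts to one weighted sum per output neuron over all inputs, plus a bias. A minimal sketch with made-up weights follows; the function name `dense` and all numbers are illustrative assumptions, and the activation function is left out for brevity.

```python
def dense(inputs, weights, biases):
    """Fully connected layer: each output neuron takes a weighted sum
    of all inputs and adds its own bias."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


features = [1.0, 2.0, 3.0]      # flattened features from earlier layers
weights = [
    [1.0, 0.0, -1.0],           # weights of output neuron 0
    [0.5, 0.5, 0.5],            # weights of output neuron 1
]
biases = [0.0, 1.0]

outputs = dense(features, weights, biases)
print(outputs)  # [-2.0, 4.0]
```

In LeNet the same operation is applied at a larger scale, for example from the flattened feature vector to 120 neurons, then from 120 to 84.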

How does the video explain the process of creating a CNN architecture?

- The video walks through the steps of designing a CNN: starting with the input layer, applying convolution and pooling layers, flattening the data, and finishing with fully connected layers that output the final classification results.

What is the significance of the number of filters and kernel size in CNNs?

- The number of filters determines how many feature maps the convolution layer produces, while the kernel size dictates the size of the window that moves over the image to detect features. These parameters are critical in defining the network's capacity to extract features.
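These two settings also fix how many weights a layer has to learn. The sketch below counts trainable parameters for a LeNet-style network, assuming a 1-channel input, 6 and 16 filters of 5x5, a 400-value flattened vector, fully connected layers of 120 and 84 neurons, and 10 output classes. The 16 filters, the 400 inputs, and the 10 classes are assumptions not stated in the summary, and the function names are invented. The 1998 paper's variant differs in details (for example, partial connectivity in the second convolution), so its counts differ.

```python
def conv_params(kernel, in_channels, filters):
    """Each filter has kernel*kernel*in_channels weights plus one bias."""
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(inputs, units):
    """Each neuron has one weight per input plus one bias."""
    return (inputs + 1) * units


layer_params = {
    "conv1": conv_params(kernel=5, in_channels=1, filters=6),
    "conv2": conv_params(kernel=5, in_channels=6, filters=16),
    "fc1": dense_params(inputs=400, units=120),
    "fc2": dense_params(inputs=120, units=84),
    "output": dense_params(inputs=84, units=10),
}

for name, count in layer_params.items():
    print(f"{name}: {count}")

total = sum(layer_params.values())
print("total:", total)
```

Under these assumptions most of the parameters sit in the first fully connected layer, not in the convolutions, because a convolution reuses the same small kernel at every image position.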

What does the activation function (like ReLU) do in CNNs?

- The activation function introduces non-linearity into the network, enabling it to learn more complex patterns. ReLU (Rectified Linear Unit) is commonly used because it helps mitigate the vanishing gradient problem and speeds up training.
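One way to see the vanishing-gradient point is to compare the derivative of ReLU with that of tanh for a large input. This is a small sketch using only the standard library; the function names are chosen for illustration.

```python
import math


def relu(x):
    """ReLU passes positive values through and clips negatives to 0."""
    return max(0.0, x)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, which shrinks as |x| grows."""
    return 1.0 - math.tanh(x) ** 2


for x in (0.5, 2.0, 5.0):
    print(f"x={x}: tanh'={tanh_grad(x):.6f}  relu'={relu_grad(x):.1f}")
```

For large inputs the tanh derivative approaches zero, so gradients multiplied through many such layers shrink quickly, while the ReLU derivative stays at 1 for any positive input.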

What are some variations in CNN architecture design that can improve performance?

- Variations in CNN architecture can include changing the number of convolutional or pooling layers, adjusting filter sizes, using different activation functions, applying dropout to prevent overfitting, or employing normalization techniques like batch normalization.
