Machine Learning Tutorial Python - 9 Decision Tree

Summary

TL;DR: The video script explains how to solve a classification problem with the decision tree algorithm. It starts with a simple dataset in which the goal is to predict whether a person's salary exceeds $100,000 from their company, job title, and degree. The decision tree method is illustrated by first splitting the dataset on company, then refining the splits on job title and degree. The script emphasizes that the order of feature selection matters, with information gain and Gini impurity as the key metrics for choosing the best splits. It then walks through implementing a decision tree in Python in a Jupyter notebook, including data preparation, encoding categorical variables, and training the model. The tutorial concludes with an exercise in which viewers apply the decision tree algorithm to a real-world dataset, predicting whether passengers on the Titanic survived based on various attributes.

Takeaways

- 📈 **Decision Tree Algorithm**: Used for classification problems where a single decision boundary, such as the one logistic regression draws, cannot separate the classes.

- 🌳 **Building a Decision Tree**: In complex datasets, decision trees help in splitting the data iteratively to create decision boundaries.

- 💼 **Dataset Example**: The example dataset predicts if a person's salary is over $100,000 based on company, job title, and degree.

- 🔍 **Feature Selection**: The order in which features are split in a decision tree significantly impacts the algorithm's performance.

- 📊 **Information Gain**: Choosing the attribute that provides the highest information gain at each split is crucial for building an effective decision tree.

- 🔢 **Entropy and Impurity**: Entropy measures the randomness in a sample; low entropy indicates a 'pure' subset. Gini impurity is another measure used in decision trees.

- 🔧 **Data Preparation**: Convert categorical data into numerical form using label encoding before training a machine learning model.

- 📚 **Label Encoding**: Use `LabelEncoder` from `sklearn.preprocessing` to convert categorical variables into numbers.

- 🤖 **Model Training**: Train the decision tree classifier using the encoded input features and the target variable.

- 🧐 **Model Prediction**: Predict outcomes using the trained model by supplying new input data.

- 📝 **Exercise**: Practice on the provided Titanic dataset by predicting whether each passenger survived.

Q & A

What is the main purpose of using a decision tree algorithm in classification problems?

- The main purpose of a decision tree in classification problems is to build a model that predicts which category a given data sample belongs to. It is particularly useful for complex datasets that cannot be separated with a single decision boundary, because it splits the dataset iteratively to arrive at multiple decision boundaries.

How does a decision tree algorithm handle complex datasets?

- A decision tree algorithm handles complex datasets by splitting the dataset into subsets based on the feature values. It does this recursively until the tree is fully grown, creating a hierarchy of decisions that leads to the classification of the data.

What is the significance of the order in which features are selected for splitting in a decision tree?

- The order in which features are selected for splitting significantly impacts the performance of the decision tree algorithm. The goal is to select the feature that yields the highest information gain at each split, which helps create a more accurate and efficient decision tree.

What is entropy and how is it related to decision tree algorithms?

- Entropy is a measure of randomness or impurity in a dataset. In the context of decision tree algorithms, lower entropy indicates a more 'pure' subset, in which most samples belong to a single class. The decision tree aims to maximize information gain, which is the reduction in entropy achieved by a split.
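
As a rough illustration of how entropy and information gain relate, the sketch below computes both in plain Python. The rows and column names (`company`, `degree`, `high_salary`) are hypothetical, invented for this example rather than taken from the video's dataset.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, feature, target):
    """Entropy of the target before a split minus the weighted entropy after it."""
    parent = entropy([r[target] for r in rows])
    groups = {}
    for r in rows:
        groups.setdefault(r[feature], []).append(r[target])
    weighted = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return parent - weighted


# Hypothetical rows, invented for illustration only.
rows = [
    {"company": "A", "degree": "bachelors", "high_salary": 0},
    {"company": "A", "degree": "masters", "high_salary": 0},
    {"company": "B", "degree": "bachelors", "high_salary": 1},
    {"company": "B", "degree": "masters", "high_salary": 1},
]

gains = {f: information_gain(rows, f, "high_salary") for f in ("company", "degree")}
best = max(gains, key=gains.get)  # feature with the highest information gain
print(gains, best)
```

In this toy data, splitting on `company` produces two pure subsets, so it removes all of the entropy, while splitting on `degree` leaves each subset as mixed as the original.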

What is the Gini impurity and how does it differ from entropy in decision trees?

- Gini impurity is another measure of how mixed the classes in a subset are. It is the probability of misclassifying a randomly chosen element if that element were labeled at random according to the class distribution of the subset. Gini impurity and entropy both measure impurity, but they use different formulas and scales: for two classes, Gini impurity ranges from 0 to 0.5, while entropy ranges from 0 to 1 bit.
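
A minimal sketch of the Gini impurity calculation, assuming the usual definition of one minus the sum of squared class proportions. The label lists are made up for illustration.

```python
from collections import Counter


def gini_impurity(labels):
    """1 minus the sum of squared class proportions in `labels`."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


pure = gini_impurity([1, 1, 1, 1])   # every sample in the same class
mixed = gini_impurity([0, 0, 1, 1])  # two classes, evenly mixed
print(pure, mixed)
```

A pure subset scores 0, and an evenly mixed two-class subset scores 0.5, which is the maximum for two classes.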

How does one prepare the data for training a decision tree classifier?

- To prepare the data for training a decision tree classifier, first separate the target variable from the independent variables. Then encode the categorical features as numbers using a technique such as label encoding. After encoding, drop the original text columns so that only numerical data remains for the model to process.
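
The preparation steps might look roughly like the sketch below, using pandas and `LabelEncoder` from `sklearn.preprocessing`. The rows, the column names, and the `_n` suffix for encoded columns are assumptions made for this example, not the video's exact data.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical rows and column names, invented for illustration.
df = pd.DataFrame({
    "company": ["google", "google", "abc pharma", "facebook"],
    "job": ["sales executive", "computer programmer",
            "sales executive", "business manager"],
    "degree": ["bachelors", "masters", "masters", "bachelors"],
    "salary_more_than_100k": [0, 1, 0, 1],
})

# Step 1: separate the target from the independent variables.
target = df["salary_more_than_100k"]
inputs = df.drop(columns="salary_more_than_100k")

# Step 2: label-encode each categorical column, keeping one encoder per column
# so the same mapping can be reused on new data later.
encoders = {}
for col in ["company", "job", "degree"]:
    encoders[col] = LabelEncoder()
    inputs[col + "_n"] = encoders[col].fit_transform(inputs[col])

# Step 3: drop the original text columns, leaving only numeric ones.
inputs_n = inputs.drop(columns=["company", "job", "degree"])
print(inputs_n)
```

`LabelEncoder` assigns integers in sorted order of the class names, so here `abc pharma` becomes 0, `facebook` 1, and `google` 2.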

What is the role of the 'fit' method in training a decision tree classifier?

- The 'fit' method is used to train the decision tree classifier. It takes the independent variables and the target variable as inputs and learns the decision rules by finding the best splits based on the selected criterion, such as Gini impurity or entropy.
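
A small sketch of training with `fit`, assuming scikit-learn's `DecisionTreeClassifier`. Its `criterion` parameter selects the split measure (`"gini"` is the default, `"entropy"` is the alternative shown here). The encoded rows are hypothetical.

```python
from sklearn.tree import DecisionTreeClassifier

# Hypothetical, already-encoded rows: [company_n, job_n, degree_n].
X = [[2, 2, 0], [2, 1, 1], [0, 2, 1], [1, 0, 0]]
y = [0, 1, 0, 1]  # 1 = salary over $100,000

# random_state only makes tie-breaking between equally good splits repeatable.
model = DecisionTreeClassifier(criterion="entropy", random_state=0)
model.fit(X, y)

# Scoring on the training rows themselves, as in the video's walkthrough.
train_score = model.score(X, y)
print(train_score)
```

Because every row here is distinct and the tree is grown without a depth limit, it can separate the training rows completely, which is why a score on the training data alone says little about generalization.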

How can one evaluate the performance of a decision tree classifier?

- The performance of a decision tree classifier can be evaluated with metrics such as accuracy, which is the proportion of correct predictions out of the total number of cases. In the script, the model's score is reported as 1, indicating a perfect fit on the training data. For real-life complex datasets, however, the score would typically be less than 1.

What is the importance of using a test set when training machine learning models?

- Using a test set is crucial for evaluating the generalization performance of a machine learning model. It helps to estimate how well the model will perform on unseen data. Ideally, the dataset should be split into a training set and a test set, with common ratios being 80:20 or 70:30.
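
One way to hold out a test set is scikit-learn's `train_test_split`, sketched below with an 80:20 split. The iris dataset is used purely as a stand-in because it ships with scikit-learn. It is not the dataset from the video.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Stand-in dataset (an assumption for this sketch, not the video's data).
X, y = load_iris(return_X_y=True)

# Hold out 20% of the rows; random_state makes the split repeatable.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

model = DecisionTreeClassifier(random_state=42)
model.fit(X_train, y_train)

train_score = model.score(X_train, y_train)  # accuracy on rows the model saw
test_score = model.score(X_test, y_test)     # accuracy on held-out rows
print(train_score, test_score)
```

The test score is the more honest estimate of how the model behaves on unseen data.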

How does one make predictions using a trained decision tree classifier?

- To make predictions using a trained decision tree classifier, one supplies the model with new input data that has been preprocessed and encoded in the same way as the training data. The model then uses the learned decision rules to classify the input data and provide a prediction.
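
The sketch below shows one way to keep the encoding of new input consistent with training: reuse the fitted encoders and call `transform` rather than `fit_transform`. All rows, column names, and the `new_person` sample are hypothetical.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder
from sklearn.tree import DecisionTreeClassifier

# Hypothetical training rows, invented for illustration.
train = pd.DataFrame({
    "company": ["google", "google", "abc pharma", "facebook"],
    "degree": ["bachelors", "masters", "masters", "bachelors"],
    "high_salary": [0, 1, 0, 1],
})
features = ["company", "degree"]

# Fit one encoder per categorical column on the training data.
encoders = {col: LabelEncoder().fit(train[col]) for col in features}
X_train = pd.DataFrame({col: encoders[col].transform(train[col]) for col in features})

model = DecisionTreeClassifier(random_state=0).fit(X_train, train["high_salary"])

# Encode the new sample with the SAME fitted encoders.
new_person = {"company": "google", "degree": "masters"}
X_new = pd.DataFrame({col: encoders[col].transform([new_person[col]]) for col in features})

prediction = int(model.predict(X_new)[0])  # 1 = salary over $100,000
print(prediction)
```

Note that `transform` raises an error for a category the encoder never saw during fitting, so new input is limited to values present in the training data.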

What is the exercise provided at the end of the script for further practice?

- The exercise uses the Titanic dataset to predict whether each passenger survived, based on features such as class, sex, age, and fare. The task is to ignore certain columns, use the remaining ones to predict survival, and post the model's score as a comment for verification.

What is the importance of practicing with exercises after learning a new machine learning concept?

- Practicing with exercises is essential for solidifying understanding and gaining hands-on experience with the concept. It allows learners to apply the theory to real or simulated datasets, troubleshoot issues, and improve their skills in using machine learning algorithms.

Перейти на платный тарифПосмотреть больше похожих видео

Learning Decision Tree

Introduction to Decision Trees

Algorithm Design | Approximation Algorithm | Traveling Salesman Problem with Triangle Inequality

3 2 Tree Search Algorithms in Artificial Intelligence

Decision Tree Pruning explained (Pre-Pruning and Post-Pruning)

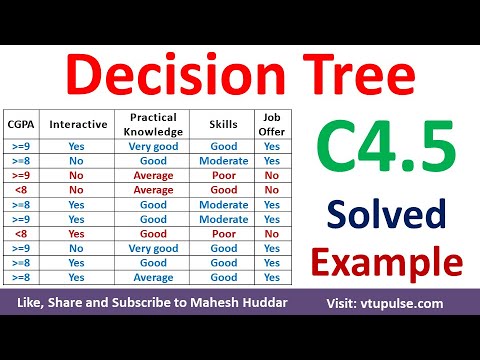

Decision Tree using C4.5 Algorithm Solved Numerical Example | C4.5 Solved Example by Mahesh Huddar

5.0 / 5 (0 votes)