Object Recognition and Obstacle Avoiding Robot Making By Using Webots | CSE461 | Summer 2021

Summary

TLDR: This video showcases a CSE461 object recognition project built with the Webots simulation software. The team creates a virtual environment with a floor, walls, and four objects (a cone, sphere, cylinder, and wheel) for detection. The robot, equipped with a camera and distance sensors, detects the objects and avoids colliding with them. The process involves building the robot, adding sensors for object detection, and writing the code that lets it interact with the environment. Key details include configuring the camera's recognition features and getting the camera and distance sensors to work together, so that the robot can identify objects and move autonomously while avoiding obstacles.

Takeaways

- 😀 The project is an object recognition simulation built using the Webots software.

- 😀 The environment consists of a floor bounded by four walls to prevent the robot from falling off.

- 😀 Bounding objects are added to the floor to facilitate proper collision detection.

- 😀 Four objects (cylinder, cone, sphere, and wheel) are placed on the floor for the robot to detect.

- 😀 The wheel object is made of two connected components, and both are part of the object detection task.

- 😀 The robot is equipped with a camera and distance sensors for object detection.

- 😀 The robot's wheels are powered by rotational motors connected via hinge joints for movement.

- 😀 The distance sensors detect obstacles by emitting rays and measuring reflections to avoid collisions.

- 😀 The camera performs object recognition and displays the objects the robot has recognized.

- 😀 The robot's code integrates both the camera and the distance sensors; a few configuration fixes, such as the controller setting and the project name, were needed before it ran correctly.

- 😀 Object recognition parameters such as maximum range, object count, and color detection are adjusted to ensure accuracy.
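
The distance-sensor takeaway can be made more concrete. In Webots, a DistanceSensor converts the measured distance into the value it returns through its `lookupTable` field (rows of distance, value, and noise), interpolating linearly between rows. The minimal sketch below inverts such a table to turn a raw reading back into metres; the table values are hypothetical, not the ones used in the project, and the noise column is omitted.

```python
# Hypothetical lookup table: (distance in metres, returned sensor value).
# Values fall as distance grows, so the table can be inverted segment by segment.
LOOKUP_TABLE = [(0.0, 1000.0), (0.2, 600.0), (0.5, 200.0), (1.0, 0.0)]


def value_to_distance(value: float, table=LOOKUP_TABLE) -> float:
    """Invert a strictly decreasing lookup table by linear interpolation."""
    # Readings outside the table are clamped to its end points.
    if value >= table[0][1]:
        return table[0][0]
    if value <= table[-1][1]:
        return table[-1][0]
    # Find the segment whose value range contains the reading and interpolate.
    for (d0, v0), (d1, v1) in zip(table, table[1:]):
        if v1 <= value <= v0:
            return d0 + (value - v0) * (d1 - d0) / (v1 - v0)
    raise ValueError("lookup table must be strictly decreasing in value")
```

With this table, a reading of 400 falls halfway between the 0.2 m and 0.5 m rows and maps to roughly 0.35 m.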

Q & A

What is the purpose of the object recognition project described in the script?

-The purpose of the project is to simulate a robot that can detect and recognize objects using sensors and a camera in a controlled environment.

What key elements make up the environment in the simulation?

-The environment consists of a floor surrounded by four walls. The floor is solid, and bounding objects are added to ensure proper collision detection and to keep the robot from falling through the floor.

Why is the floor made solid in the simulation?

-The floor is made solid to prevent the robot from falling through it, ensuring that it remains within the designated area for object detection and movement.

What four objects are placed on the floor for object recognition?

-The four objects placed on the floor are a cylinder, a cone, a sphere, and a wheel.

What is the purpose of adding bounding objects to the simulated environment?

-Bounding objects are added to the floor and the four objects to ensure proper collision detection, allowing the robot to interact with them effectively in the simulation.
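
To illustrate what a bounding object buys the simulation: collision checks compare simple shapes instead of detailed geometry. Webots leaves this to its physics engine, so the sketch below only illustrates the idea, assuming the robot is approximated by a sphere and an obstacle such as a wall by an axis-aligned box. The `Box`, `Sphere`, and `sphere_hits_box` names and all dimensions are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box given by its minimum and maximum corners (metres)."""
    min_corner: tuple[float, float, float]
    max_corner: tuple[float, float, float]


@dataclass
class Sphere:
    center: tuple[float, float, float]
    radius: float


def sphere_hits_box(sphere: Sphere, box: Box) -> bool:
    """Return True if the sphere overlaps the box (touching counts as contact)."""
    # Clamp the sphere centre onto the box to get the box point closest to it.
    closest = [
        min(max(c, lo), hi)
        for c, lo, hi in zip(sphere.center, box.min_corner, box.max_corner)
    ]
    # Compare squared distances to avoid taking a square root.
    dist_sq = sum((c - p) ** 2 for c, p in zip(sphere.center, closest))
    return dist_sq <= sphere.radius ** 2
```

For example, a sphere of radius 0.1 m centred 0.05 m in front of a wall face is reported as a collision, while one 0.5 m away is not.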

How are the robot's wheels attached, and what is their function?

-The robot's wheels are attached using hinge joints, which allow for rotational motion. Rotational motors are used to provide movement, enabling the robot to navigate the environment.
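
Because each wheel turns on its own hinge joint and motor, the robot steers by driving the two wheels at different speeds (differential drive). The sketch below converts the two wheel angular velocities, the quantity a Webots rotational motor is given through `setVelocity()` in rad/s, into the robot's forward speed and turning rate, and back. The wheel radius and axle length are hypothetical values, not measurements from the project.

```python
# Hypothetical robot geometry (metres).
WHEEL_RADIUS = 0.04
AXLE_LENGTH = 0.10  # distance between the two wheels' contact points


def body_velocity(left_rad_s: float, right_rad_s: float) -> tuple[float, float]:
    """Return (forward speed in m/s, yaw rate in rad/s) for a differential drive."""
    v_left = left_rad_s * WHEEL_RADIUS    # rim speed of the left wheel
    v_right = right_rad_s * WHEEL_RADIUS  # rim speed of the right wheel
    forward = (v_left + v_right) / 2.0
    yaw_rate = (v_right - v_left) / AXLE_LENGTH  # positive = counter-clockwise (left turn)
    return forward, yaw_rate


def wheel_speeds(forward: float, yaw_rate: float) -> tuple[float, float]:
    """Inverse kinematics: wheel angular velocities (rad/s) for a desired motion."""
    v_left = forward - yaw_rate * AXLE_LENGTH / 2.0
    v_right = forward + yaw_rate * AXLE_LENGTH / 2.0
    return v_left / WHEEL_RADIUS, v_right / WHEEL_RADIUS
```

Equal wheel speeds give straight motion; equal and opposite speeds turn the robot in place, which is the manoeuvre an obstacle-avoiding robot typically uses.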

What role do the distance sensors play in the robot's functionality?

-The distance sensors detect objects in front of the robot by sending out rays. If an object is detected (the ray reflects back), the robot adjusts its path to avoid collisions.
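
The detect-then-adjust behaviour can be sketched as a pure decision function, independent of the Webots API: given the distances reported by a left and a right front sensor, it returns wheel speeds that turn the robot away from the closer obstacle. In a Webots controller these two values would be passed to the wheel motors on each simulation step. The two-sensor layout, the 0.3 m threshold, and the speed are assumptions for illustration; the project's actual sensor count and thresholds are not given.

```python
# Hypothetical tuning values.
OBSTACLE_THRESHOLD_M = 0.3  # react when something is closer than this
CRUISE_SPEED = 6.0          # wheel angular velocity in rad/s


def avoid_obstacles(left_dist: float, right_dist: float) -> tuple[float, float]:
    """Return (left, right) wheel speeds that steer away from nearby obstacles."""
    if left_dist < OBSTACLE_THRESHOLD_M and left_dist <= right_dist:
        # Closer obstacle on the left: spin clockwise (turn right).
        return CRUISE_SPEED, -CRUISE_SPEED
    if right_dist < OBSTACLE_THRESHOLD_M:
        # Closer obstacle on the right: spin counter-clockwise (turn left).
        return -CRUISE_SPEED, CRUISE_SPEED
    # Path clear: drive straight ahead.
    return CRUISE_SPEED, CRUISE_SPEED
```

When both sensors see something, the comparison makes the robot turn away from whichever side is closer rather than always favouring one direction.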

How does the camera contribute to the robot's object recognition?

-The camera captures the environment and helps the robot recognize objects by analyzing their colors. It is crucial for visual object recognition in the simulation.
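
Webots can attach colours to objects through the Solid `recognitionColors` field, and the camera's recognition results can report them. One simple way to use such colours, sketched below under that assumption, is nearest-colour matching: pick the known object whose reference colour is closest to the reported one, and reject matches that are too far off. The reference colours and the 0.3 cut-off are hypothetical.

```python
import math

# Hypothetical reference colours (RGB components in [0, 1]) for each object.
REFERENCE_COLORS = {
    "cone": (1.0, 0.5, 0.0),
    "sphere": (1.0, 0.0, 0.0),
    "cylinder": (0.0, 0.0, 1.0),
    "wheel": (0.1, 0.1, 0.1),
}


def classify_by_color(rgb, references=REFERENCE_COLORS, max_distance=0.3):
    """Return the label whose reference colour is nearest to rgb, or None if none is close."""
    best_label, best_dist = None, math.inf
    for label, ref in references.items():
        dist = math.dist(rgb, ref)  # Euclidean distance in RGB space
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label if best_dist <= max_distance else None
```

A reported colour of (0.9, 0.05, 0.05) is classified as the sphere, while a neutral grey matches nothing and returns `None`.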

What technical adjustments are required for the integration of the camera and sensors into the robot's code?

-The camera and sensors need to be integrated properly in the code. This includes setting the correct parameters for object recognition, such as the maximum range and the number of detectable objects, and replacing the placeholder 'void' in the robot's controller setting so that the project's own controller runs.
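
The effect of the two recognition parameters can be sketched as a filter over detections. The Webots Recognition node exposes them as `maxRange` and `maxObjects`; the sketch below only mimics that idea with a hypothetical `Detection` type. It keeps objects within range and, when a cap is set, the nearest ones first; a negative `max_objects` means no cap here. How Webots itself chooses which objects to drop is not assumed.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical stand-in for one recognised object."""
    label: str
    position: tuple[float, float, float]  # relative to the camera, metres


def apply_recognition_limits(detections, max_range=1.0, max_objects=-1):
    """Keep detections within max_range, nearest first; max_objects < 0 means no cap."""
    def distance(d: Detection) -> float:
        return math.hypot(*d.position)

    in_range = sorted((d for d in detections if distance(d) <= max_range), key=distance)
    return in_range if max_objects < 0 else in_range[:max_objects]
```

Raising the range lets distant objects through, while a small object cap trims the list to the closest few; both trade completeness against how much the controller has to process.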

What programming changes are needed to make the robot's code work effectively?

-To make the code work, the robot's controller setting must be changed from 'void' (no controller) to the project's controller, and the project name must be set correctly in the code. In addition, the sensor and camera parameters must be configured for effective object detection and movement control.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

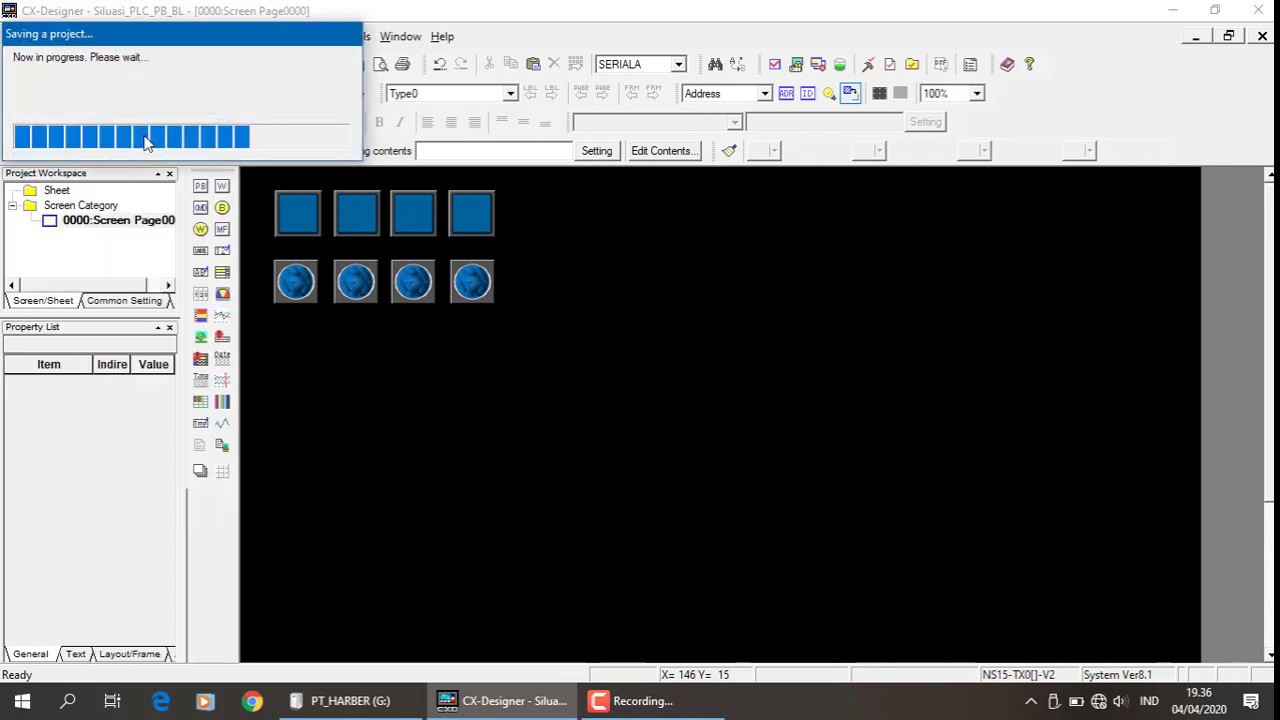

Membuat File CX Designer - Push button dan Bit Lamp

Proteus Schematic - Sistem Kontrol Pompa Air #OTOMATIS

Netizen kepo bakal SUKA sama HP ini - Kesan pertama Samsung Galaxy S24 Indonesia!

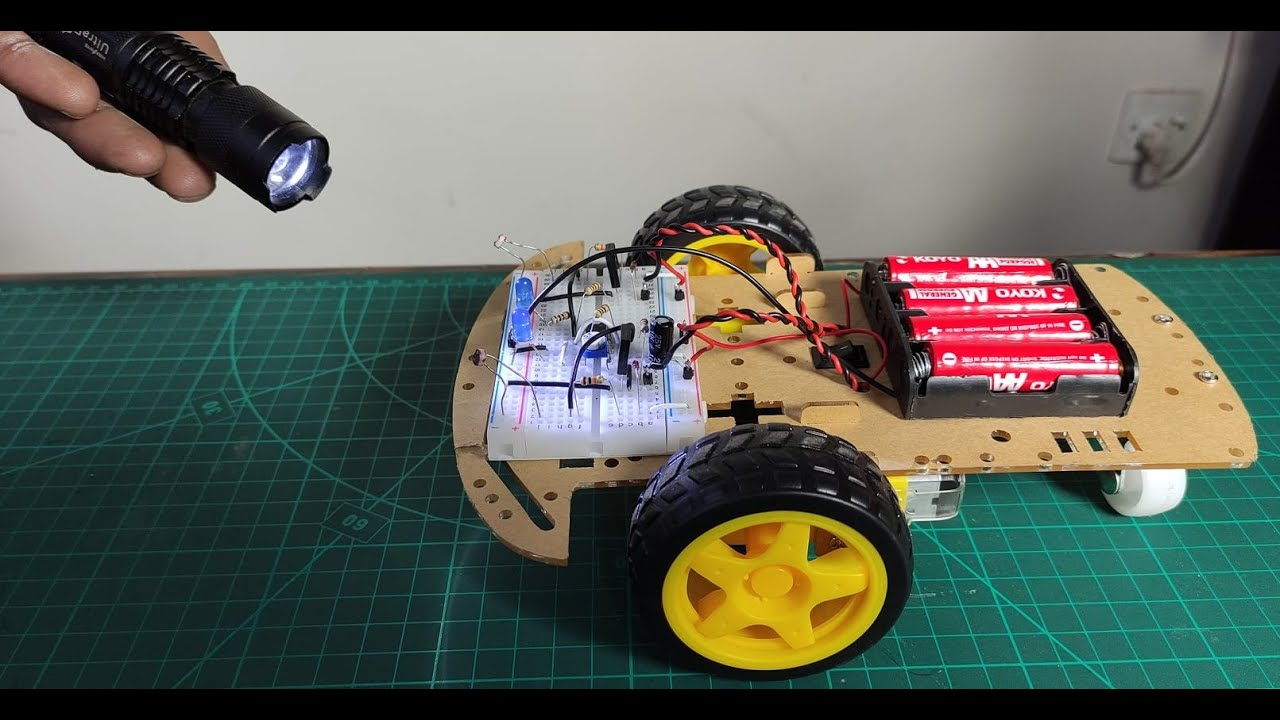

How to make Light Follower Robot using LDR Sensor and LM358 Operational Amplifier | without Arduino

SIMULASI PALANG KERETA API OTOMATIS MENGGUNAKAN ARDUINO UNO DENGAN SIMULATOR WOKWI

CADe SIMU AULA 1

5.0 / 5 (0 votes)