Unity Tutorial: Voice Interaction for Android and iOS

Summary

TLDR: This video tutorial demonstrates how to implement text-to-speech and speech-to-text in Unity using a plugin developed by PingAK9. The creator walks through setting up the plugin for Android and iOS, covering Unity project setup, scene creation, and a basic user interface with interactive cubes that trigger voice interaction. Key steps include handling speech input, setting up permissions, and troubleshooting on both platforms. The video also covers iOS pitfalls such as missing permissions or incorrect device settings. Overall, it is a detailed guide to adding voice interaction to Unity projects.

Takeaways

- 🤖 This tutorial demonstrates how to integrate text-to-speech and speech-to-text functionalities into a Unity project, focusing on both Android and iOS.

- 📥 The first step is to clone or download the repository created by PingAK9, which contains the necessary Unity project files and Android Studio project.

- 📂 The Unity project folder needs to be renamed to 'VoiceText' and added to Unity Hub to begin setup.

- 📱 Switch the Unity platform to either Android or iOS and adjust the build settings for the chosen platform, including updating the package name.

- 🖥️ To build a custom sample scene, users should set up the UI components for displaying text and create two clickable cubes to handle text-to-speech and speech-to-text actions.

- 🎨 Use Unity’s UI elements and material assets to customize the display, including a canvas for text and materials to color the cubes.

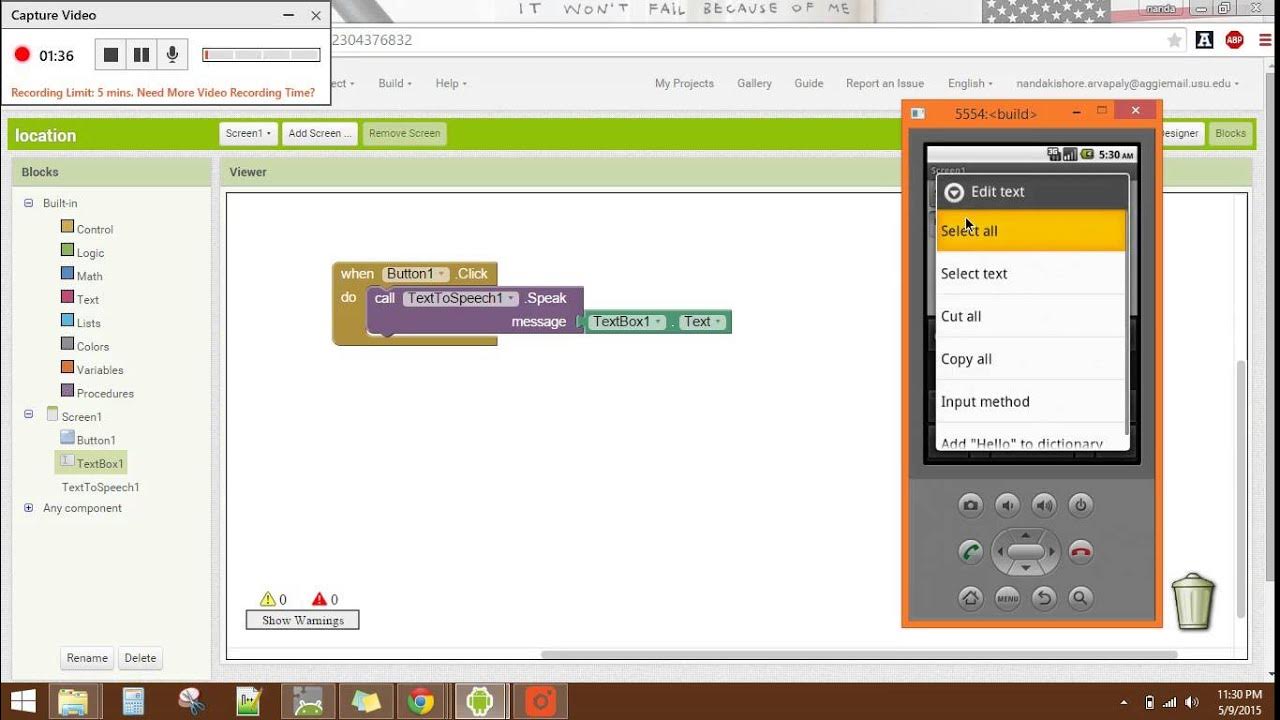

- 🖱️ Create a C# script called 'ClickHandler' that detects mouse click events, allowing speech recording and playback upon interaction with the cubes.

- 🎙️ Create two Unity GameObjects, 'SpeechToText' and 'TextToSpeech', and attach scripts to them so Unity can communicate with the native speech functions on both platforms.

- 🔄 Additional functions for managing permissions, debugging, and handling specific behaviors for Android and iOS are necessary to ensure compatibility across both platforms.

- 📲 For iOS builds, users should ensure the device is up to date with the latest iOS version, has Siri enabled, and is not muted to avoid issues with speech functionalities.
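
Updating the package name is an easy step to get wrong. The following minimal Python sketch is not part of the tutorial or the plugin; it checks a candidate name against a simplified version of Android's application ID rules (at least two dot-separated segments, each starting with a letter and using only letters, digits, and underscores). The example name `com.example.voicetext` is hypothetical, and iOS bundle identifiers follow somewhat different rules that this sketch does not cover.

```python
import re

# Simplified check modeled on Android's application ID rules:
# at least two dot-separated segments, each starting with an ASCII letter
# and containing only ASCII letters, digits, or underscores.
# It does not check reserved words or iOS bundle-identifier rules.
_SEGMENT = re.compile(r"[A-Za-z][A-Za-z0-9_]*")


def is_valid_android_package_name(name: str) -> bool:
    """Return True if `name` satisfies the simplified rules above."""
    segments = name.split(".")
    if len(segments) < 2:
        return False  # e.g. "voicetext" has no dot at all
    # An empty segment (as in "com..app") fails fullmatch as well.
    return all(_SEGMENT.fullmatch(segment) for segment in segments)
```

For example, `is_valid_android_package_name("com.example.voice-text")` returns `False` because of the hyphen, while `"com.example.voicetext"` passes.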

Q & A

What is the purpose of the tutorial in the video?

- The tutorial guides users through implementing text-to-speech and speech-to-text in Unity on Android and iOS, using a plugin created by PingAK9.

Why is voice interaction considered beneficial for AR applications?

- Voice is well suited to AR because it gives users a hands-free, intuitive way to interact with AR content, which improves the overall experience.

What steps are involved in setting up the plugin in Unity?

- The steps are: download the repo from PingAK9, rename the Unity project folder, switch the platform to Android or iOS, modify the build settings, create a new scene, and configure the UI and object interaction in Unity.

What is the significance of naming the GameObject 'SpeechToText' in Unity?

- The GameObject must be named exactly 'SpeechToText' because the plugin uses 'SendMessage' to call functions on GameObjects by name; an object with a different name will not receive those calls.
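
To illustrate why the exact name matters, here is a toy Python model of name-based message delivery. It is not Unity code: `MessageRouter` and the method name `onResults` are hypothetical, and the sketch only captures the idea that the native side addresses a receiver by a string name, so a renamed object never matches.

```python
from typing import Callable, Dict


class MessageRouter:
    """Toy model of name-based messaging (not Unity code).

    Receivers are looked up by an exact object name plus a method name,
    the way a native plugin addresses a GameObject by its name.
    """

    def __init__(self) -> None:
        # object name -> (method name -> handler)
        self._receivers: Dict[str, Dict[str, Callable[[str], None]]] = {}

    def register(self, object_name: str, method_name: str,
                 handler: Callable[[str], None]) -> None:
        self._receivers.setdefault(object_name, {})[method_name] = handler

    def send(self, object_name: str, method_name: str, message: str) -> bool:
        """Deliver `message`; return False when no receiver matches exactly."""
        handler = self._receivers.get(object_name, {}).get(method_name)
        if handler is None:
            return False  # e.g. "speech-to-text" does not match "SpeechToText"
        handler(message)
        return True
```

With a receiver registered under `"SpeechToText"`, a call to `send("SpeechToText", "onResults", "hello")` reaches the handler, while `send("speech-to-text", "onResults", "hello")` returns `False`.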

What are some pitfalls to avoid when implementing speech-to-text and text-to-speech on iOS?

- Common pitfalls include running an outdated iOS version, leaving Siri disabled, having the mute button on, or missing the required permissions. Any of these can stop the speech features from working.

How does the plugin handle microphone permissions on Android?

- The plugin checks whether the microphone permission has been granted. If not, it requests the permission from the user before the app accesses the microphone for speech recognition.
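
The check-then-request flow can be sketched in plain Python as below. The three callables are hypothetical stand-ins for platform calls, not the plugin's API. On Android the real permission request is asynchronous and reports back through a callback; this sketch simplifies that into a function that returns the user's answer.

```python
from typing import Callable


def start_listening_if_permitted(
    is_authorized: Callable[[], bool],
    request_permission: Callable[[], bool],
    start_recognition: Callable[[], None],
) -> bool:
    """Start speech recognition only once microphone access is granted.

    All three callables are hypothetical stand-ins for platform calls.
    `request_permission` is modeled as blocking and returning the user's
    answer; real Android permission requests are asynchronous.
    """
    if not is_authorized():
        # Ask only when access has not already been granted.
        if not request_permission():
            return False  # user declined: never open the microphone
    start_recognition()
    return True
```

The design point is that the request is made only when needed, and recognition never starts after a refusal.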

What is the purpose of the two Unity events 'OnMouseDown' and 'OnMouseUp' in the script?

- These Unity callbacks fire when the user presses or releases the mouse on a cube in the scene: 'OnMouseDown' starts recording for speech recognition and 'OnMouseUp' stops it.
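
In Unity, `OnMouseDown` and `OnMouseUp` are `MonoBehaviour` messages sent to an object with a Collider, which is why the cubes can act as buttons. The press-and-hold logic itself is engine-independent. The Python sketch below models it with a small state flag so that a repeated press does not restart recording and a stray release does not call stop; `start` and `stop` are hypothetical placeholders for the plugin's recording calls.

```python
from typing import Callable


class HoldToTalk:
    """Press-and-hold recording controller (engine-independent sketch).

    `start` and `stop` are hypothetical placeholders for the plugin's
    recording calls; in Unity the two handlers would be driven by
    OnMouseDown and OnMouseUp on the cube.
    """

    def __init__(self, start: Callable[[], None], stop: Callable[[], None]) -> None:
        self._start = start
        self._stop = stop
        self.recording = False

    def on_mouse_down(self) -> None:
        if not self.recording:  # ignore a second press while recording
            self.recording = True
            self._start()

    def on_mouse_up(self) -> None:
        if self.recording:  # ignore a release with no matching press
            self.recording = False
            self._stop()
```

A sequence of release, press, press, release therefore produces exactly one start followed by one stop.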

What are the visual elements created in the Unity scene?

- The scene includes two colored cubes (for triggering speech-to-text and text-to-speech actions), a UI canvas to display text results, a directional light, and a skybox for rendering.

How does the partial speech recognition result differ from the final result?

- Partial results are returned while the user is still speaking, giving real-time feedback, whereas the final result is returned after the user finishes speaking and recognition completes.
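
Because each partial update typically carries the whole hypothesis so far rather than only the newest word, a display can replace its pending text on every partial result and commit it only when the final result arrives. The class below is a hypothetical Python sketch of that bookkeeping, not the plugin's own handler.

```python
from typing import List


class TranscriptView:
    """Keeps committed (final) text separate from the live partial guess."""

    def __init__(self) -> None:
        self.committed: List[str] = []
        self.pending = ""

    def on_partial(self, text: str) -> None:
        # Each partial result is assumed to be the full hypothesis so far,
        # so it replaces the previous partial instead of being appended.
        self.pending = text

    def on_final(self, text: str) -> None:
        self.committed.append(text)
        self.pending = ""

    def display(self) -> str:
        parts = self.committed + ([self.pending] if self.pending else [])
        return " ".join(parts)
```

Partials "turn" and then "turn on the" show only the latest guess; a final "turn on the light" replaces it and stays on screen.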

How can developers troubleshoot issues with the plugin not working correctly on iOS?

- Developers should make sure the device runs the latest version of iOS, Siri is enabled, the mute button is off, and the microphone and speech recognition permissions have been granted.
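
The checklist can be expressed as a small preflight function that reports which conditions fail. This is a hypothetical Python sketch: `DeviceState` and its fields are invented for illustration, since an app may not be able to read all of these settings directly. On iOS, the two permissions also require usage-description strings in Info.plist (`NSMicrophoneUsageDescription` and `NSSpeechRecognitionUsageDescription`).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DeviceState:
    """Hypothetical snapshot of the conditions in the iOS checklist."""
    ios_up_to_date: bool
    siri_enabled: bool
    muted: bool
    microphone_permission: bool
    speech_recognition_permission: bool


def preflight_problems(state: DeviceState) -> List[str]:
    """Return one message per failed check; an empty list means all pass."""
    problems: List[str] = []
    if not state.ios_up_to_date:
        problems.append("Update the device to the latest iOS version.")
    if not state.siri_enabled:
        problems.append("Enable Siri.")
    if state.muted:
        problems.append("Turn the mute button off.")
    if not state.microphone_permission:
        problems.append("Grant microphone permission.")
    if not state.speech_recognition_permission:
        problems.append("Grant speech recognition permission.")
    return problems
```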
