Computer Vision Tutorial: Build a Hand-Controlled Space Shooter with Next.js

Summary

TL;DR: This tutorial walks through building an endless, browser-based space shooter that runs offline and is controlled by hand gestures detected with computer vision. The game is reminiscent of Galaga: the player steers an airplane with hand movements, and the controls are highly responsive. Starting from scratch, the tutorial covers initializing a Next.js app, detecting hand gestures with MediaPipe, and implementing collision detection. It also covers pausing the game when no hands are detected and resuming it when they reappear. The tutorial assumes some web development background but skips no lines of code, so it is accessible to a range of skill levels.

Takeaways

- 🎮 The video is a tutorial for building an endless space shooter game using computer vision and web development skills.

- 🕹️ The game is controlled by hand movements detected via computer vision, without the need for a physical controller.

- 🌐 The game can run entirely in the browser and is capable of operating offline, without an internet connection.

- 🔧 The tutorial assumes some web development background but does not skip any lines of code, making it accessible to various skill levels.

- 🚀 The game features a demo showing an airplane controlled by hand movements, with an infinite stream of boulders to avoid.

- 💻 The tutorial covers setting up the game from scratch, including the use of Next.js and Node.js, with a step-by-step guide.

- 👐 Collision detection with boulders is implemented, and the player is given four lives before the game ends.

- 👀 The game pauses when the player's hands leave the camera's view and resumes as soon as hands are detected again.

- 🎥 The tutorial includes loading a MediaPipe JS model for hand gesture detection and setting up a webcam feed.

- 🛠️ The hand recognizer component is responsible for detecting hand gestures and notifying the homepage component of the results.

- 📚 The tutorial will be split into a series of six videos, with a playlist link provided for easy navigation.

Q & A

What is the game being described in the script?

-The game being described is an endless space shooter that uses computer vision for control, allowing the player to move the spaceship with hand gestures, similar to an airplane joystick.

What technology is used to control the game without a traditional controller?

-Computer vision technology is used to detect hand gestures, which control the game without the need for a traditional controller.

Is the game designed to run offline?

-Yes. The game runs entirely in the browser and keeps working without an internet connection.

What web development framework is used to build the game?

-The game is built using Next.js, a React-based framework for building web applications.

What does the game's collision detection feature do?

-The collision detection feature determines when the player hits one of the boulders falling from the top of the screen. The player gets four lives before the game is over.
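
As a rough illustration of the idea, the sketch below uses an axis-aligned bounding-box overlap test and a life counter. It is not the tutorial's actual code: the `Rect` and `GameState` shapes and the `rectsOverlap` and `applyCollisions` names are hypothetical, and the video may detect collisions differently. Only the four-life limit comes from the summary.

```typescript
// Hypothetical shapes: the summary does not say how the player or boulders are represented.
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

interface GameState {
  lives: number;
  isGameOver: boolean;
}

// The summary states that the player gets four lives.
const STARTING_LIVES = 4;

// Axis-aligned bounding-box test: true when the two rectangles overlap.
function rectsOverlap(a: Rect, b: Rect): boolean {
  return (
    a.x < b.x + b.width &&
    a.x + a.width > b.x &&
    a.y < b.y + b.height &&
    a.y + a.height > b.y
  );
}

// Removes every boulder that touches the player and subtracts one life per hit.
function applyCollisions(
  state: GameState,
  player: Rect,
  boulders: Rect[],
): { state: GameState; remaining: Rect[] } {
  if (state.isGameOver) {
    return { state, remaining: boulders };
  }
  const remaining = boulders.filter((boulder) => !rectsOverlap(player, boulder));
  const hits = boulders.length - remaining.length;
  const lives = Math.max(0, state.lives - hits);
  return { state: { lives, isGameOver: lives === 0 }, remaining };
}
```

Removing a boulder once it has hit the player is a design choice made here so that a single boulder cannot cost more than one life across consecutive frames.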

How does the game respond to the player's hand movements?

-The game is highly responsive to the player's hand movements, allowing for quick and precise control of the spaceship to avoid the falling boulders.

What is the purpose of the 'hand-recognizer' component in the game?

-The 'hand-recognizer' component is responsible for detecting hand gestures using the MediaPipe JS model and notifying the homepage component of the game about the hand results.

How is the video feed of the user integrated into the game?

-The video feed is integrated by creating a 'video' element in the 'hand-recognizer' component, which uses the user's webcam to capture and display the hand movements.

What is the role of the 'useEffect' hook in the script?

-The 'useEffect' hook is used to initialize the video element and load the MediaPipe JS model when the component loads.

How does the game handle the absence of the player's hands from the screen?

-The game pauses when the player's hands are taken off the screen and resumes as soon as the hands come back into view.
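
One way to picture this behavior is a per-frame update that freezes the world whenever the hand detector reports no hands. The sketch below is an assumption-laden illustration, not the tutorial's code: the `World` and `Boulder` shapes, the `stepWorld` name, and the fall speed are all hypothetical.

```typescript
// Hypothetical world model; the summary does not describe the game's state shape.
interface Boulder {
  x: number;
  y: number;
}

interface World {
  paused: boolean;
  boulders: Boulder[];
}

// Advances the world by one frame.
// handsDetected: number of hands the detector reported for this frame.
// dtSeconds: time elapsed since the previous frame.
// fallSpeed: boulder speed in pixels per second (an arbitrary illustrative value).
function stepWorld(
  world: World,
  handsDetected: number,
  dtSeconds: number,
  fallSpeed: number = 120,
): World {
  const paused = handsDetected === 0;
  if (paused) {
    // No hands in view: keep every boulder where it is.
    return { ...world, paused: true };
  }
  return {
    paused: false,
    boulders: world.boulders.map((b) => ({ ...b, y: b.y + fallSpeed * dtSeconds })),
  };
}
```

Because the pause flag is recomputed from the detection count on every frame, the game resumes on the first frame in which a hand is seen again, with no separate resume logic.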

What is the significance of the 'processDetections' function in the script?

-The 'processDetections' function is used to process the hand landmarks detected by the MediaPipe JS model and is responsible for determining the tilt and rotation of the rocket in the game.
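
The summary does not show how 'processDetections' computes the tilt. One plausible approach, sketched below purely as an assumption, treats the two hands like the ends of a steering yoke and measures the angle of the line between them. The `Point` type, the `tiltDegrees` and `steeringFromTilt` names, and the 45-degree limit are hypothetical; the inputs are assumed to be normalized image coordinates (x and y between 0 and 1, with y growing downward), which is how MediaPipe reports hand landmarks.

```typescript
// A single landmark position in normalized image coordinates (assumed input format).
interface Point {
  x: number;
  y: number;
}

// Angle, in degrees, of the line from the leftmost hand to the rightmost hand.
// 0 means the hands are level; a positive value means the right-hand side is lower on screen.
function tiltDegrees(handA: Point, handB: Point): number {
  let left = handA;
  let right = handB;
  if (handB.x < handA.x) {
    left = handB;
    right = handA;
  }
  const radians = Math.atan2(right.y - left.y, right.x - left.x);
  return (radians * 180) / Math.PI;
}

// Maps a tilt angle to a steering value in [-1, 1].
// maxTiltDegrees is an illustrative limit, not a value from the tutorial.
function steeringFromTilt(tilt: number, maxTiltDegrees: number = 45): number {
  return Math.max(-1, Math.min(1, tilt / maxTiltDegrees));
}
```

Sorting the two points by x before measuring the angle makes the result independent of the order in which the detector returns the hands.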

Перейти на платный тарифПосмотреть больше похожих видео

画像判定 機械学習 TM2 Scratch★小学生プログラマーりんたろう★

cara membuat game space shooter di scratch │ tutorial scratch pemula

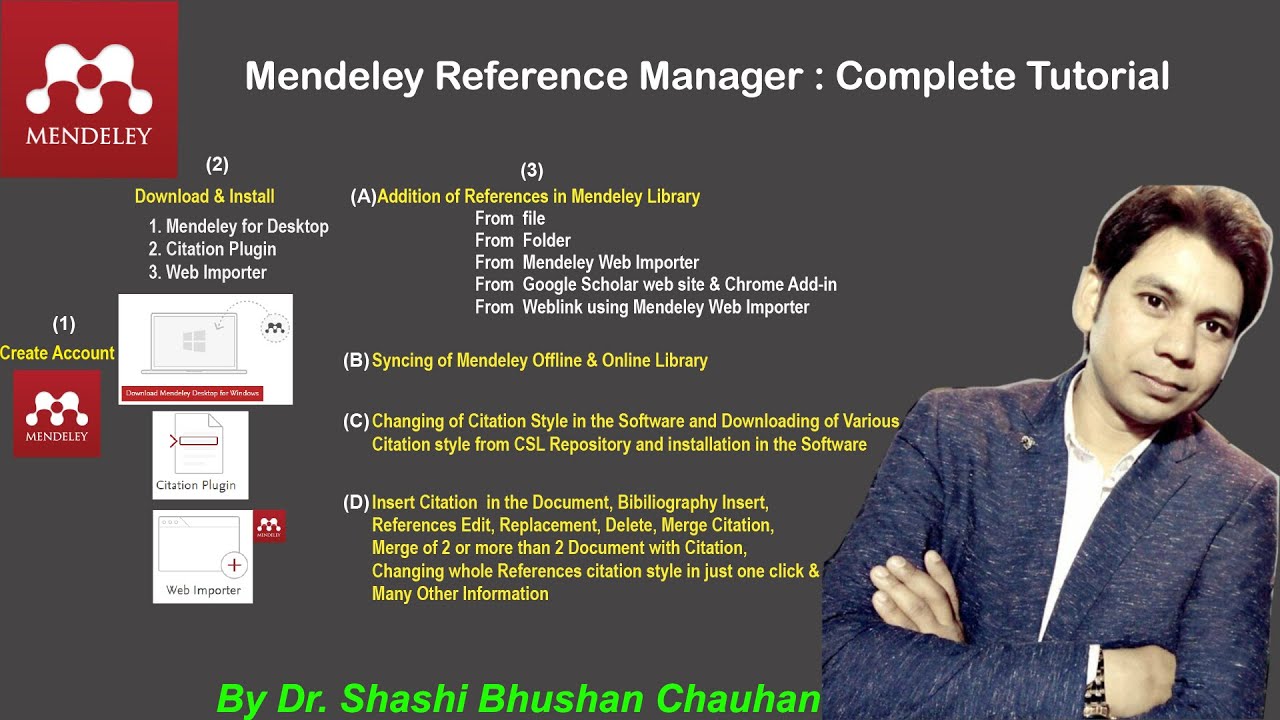

Mendeley Reference Manager Complete Tutorial #how to use #mendeley #reference #manager

AI-Powered Personalized Learning

Unity Endless Tutorial • 1 • Player Character [Tutorial][C#]

Space Shooter in Godot 4 - part 1 (introduction)

5.0 / 5 (0 votes)