Support Vector Machines (SVM) - the basics | simply explained

Summary

TLDRThis video introduces the concept of Support Vector Machines (SVMs), focusing on their mathematical foundations for classification tasks. It explains the equation of a straight line, the general form used in SVMs, and the concept of margins in classification. The video emphasizes how SVM maximizes the margin between two classes to achieve accurate separation. Additionally, it covers training and testing data sets to assess model accuracy. The kernel trick is also introduced, allowing SVM to handle non-linear separations by transforming data into higher dimensions. This overview serves as a stepping stone to further exploration of SVMs in machine learning.

Takeaways

- 😀 SVMs are a supervised machine learning method used to classify data based on a decision boundary (hyperplane).

- 😀 The equation of a straight line can be generalized to help define the hyperplane in SVMs.

- 😀 Coefficients like 'a', 'b', and 'c' in the general line equation help determine the slope, intercept, and position of the hyperplane.

- 😀 Support Vectors are data points closest to the decision boundary and are crucial for defining the optimal margin.

- 😀 The margin is the distance between the hyperplane and the support vectors, which SVM aims to maximize for better classification performance.

- 😀 The regularization parameter 'C' in SVM controls the trade-off between margin size and misclassification of training data.

- 😀 SVM's primary objective is to find the optimal hyperplane that best separates different classes of data.

- 😀 A training dataset is used to train the SVM by determining the optimal coefficients for the hyperplane.

- 😀 The test dataset is used to evaluate the performance of the trained SVM by checking how accurately it classifies unseen data points.

- 😀 The kernel trick allows SVMs to handle non-linearly separable data by transforming it into higher dimensions, enabling more complex decision boundaries.

- 😀 The effectiveness of SVMs is often evaluated by accuracy metrics, which compare the number of correct predictions to the total number of test points.

Q & A

What is the main objective of training a support vector machine (SVM)?

-The main objective of training an SVM is to find a hyperplane that best separates the data points from two different classes, optimizing the classification of future, unseen data.

How does a support vector machine optimize the hyperplane coefficients?

-The SVM optimizes the coefficients of the hyperplane by maximizing the margin between the two classes. It uses a mathematical process that adjusts the hyperplane to correctly classify the data points.

What is the purpose of using a test dataset in SVM training?

-A test dataset is used to evaluate the accuracy of the trained SVM model by predicting the class of new, unseen data points and comparing these predictions with the actual labels.

What happens when an SVM misclassifies data points during the testing phase?

-When an SVM misclassifies data points, it means that the prediction does not match the actual class. The accuracy is then calculated by comparing the number of correct predictions to the total number of test samples.

How is the accuracy of an SVM model evaluated?

-The accuracy of an SVM model is evaluated by checking how many test data points are correctly classified. It is calculated as the ratio of correctly classified points to the total number of test points.

What is the kernel trick, and why is it used in SVM?

-The kernel trick is a method used in SVM when a straight line (linear separator) is not sufficient to separate the data points. It applies a non-linear transformation to map the data into a higher-dimensional space where a linear separator is more effective.

Can you explain the concept of kernels in the context of SVM?

-Kernels are non-linear functions used in SVM to transform the input data into a higher-dimensional space. This transformation allows the SVM to find a decision boundary that can better separate complex data patterns.

What type of data would require the use of the kernel trick in SVM?

-The kernel trick is used for data that cannot be separated linearly. When the data points are not linearly separable in the original feature space, the kernel trick maps the data to a higher-dimensional space where a linear separation is possible.

What is the significance of separating the data correctly in SVM?

-Correctly separating the data is crucial for the SVM to make accurate predictions on new, unseen data. A well-separated dataset leads to better generalization and a more reliable model.

Why is it important to use a large dataset when training an SVM?

-Using a large dataset helps the SVM learn the underlying patterns in the data more effectively, improving its ability to generalize and making it less likely to overfit to noise or small variations in the training data.

Outlines

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードMindmap

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードKeywords

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードHighlights

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードTranscripts

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレード関連動画をさらに表示

Support Vector Machines Part 1 (of 3): Main Ideas!!!

Support Vector Machines - THE MATH YOU SHOULD KNOW

Support Vector Machine: Pengertian dan Cara Kerja

Electrical Machine 1 | Introduction | B.Tech 2nd Year | Abdul Kalam Technical University | AKTutor

All Learning Algorithms Explained in 14 Minutes

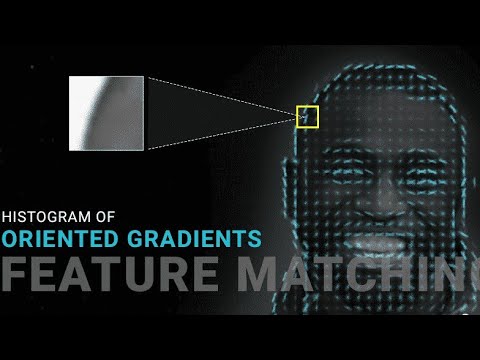

Histogram of Oriented Gradients features | Computer Vision | Electrical Engineering Education

5.0 / 5 (0 votes)