Support Vector Machines Part 1 (of 3): Main Ideas!!!

Summary

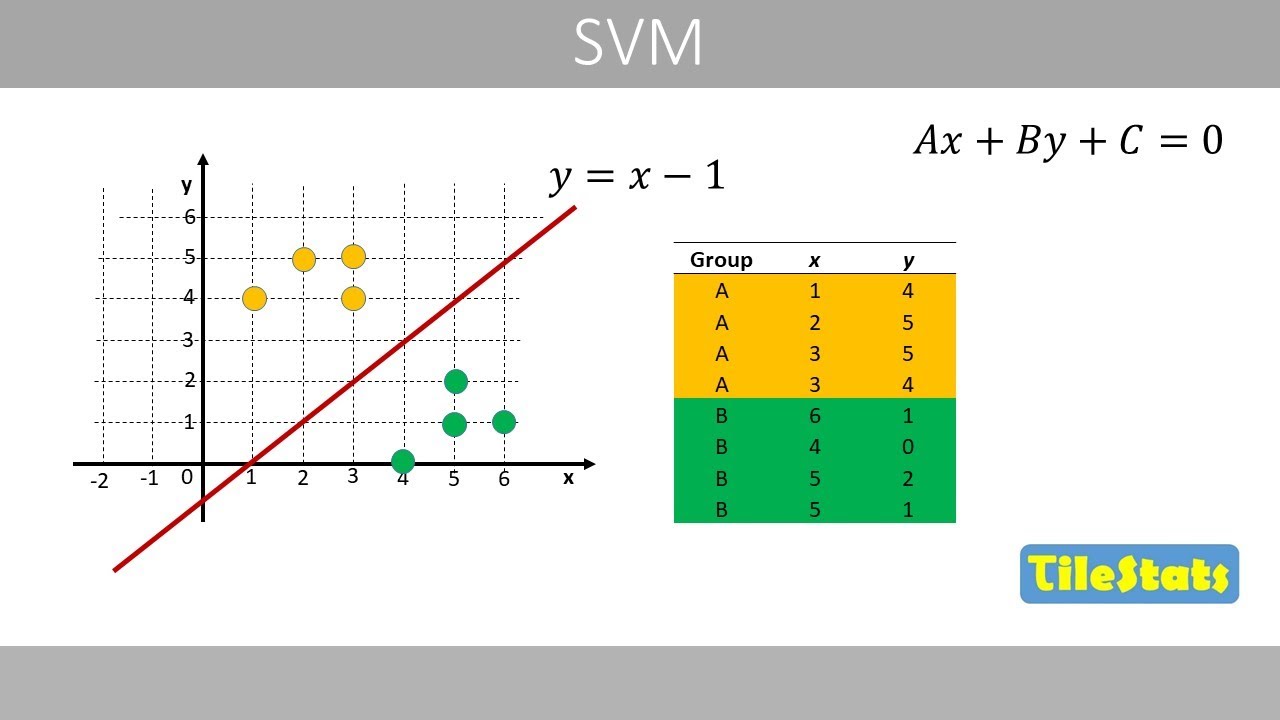

TLDRIn this StatQuest video, Josh Stormer explains Support Vector Machines (SVMs) as powerful tools for classification in machine learning. He introduces key concepts such as the threshold, margin, and the importance of soft margins to reduce sensitivity to outliers. By utilizing kernel functions, SVMs can transform data into higher dimensions, allowing for effective classification even when linear separability is not possible. The video emphasizes the balance between bias and variance in model performance, making SVMs particularly valuable for complex datasets. Viewers are encouraged to explore these concepts further to enhance their understanding of machine learning.

Takeaways

- 😀 Support vector machines (SVMs) are powerful tools for classification tasks, particularly useful when data cannot be easily separated.

- 🧠 Understanding the bias-variance tradeoff is essential for grasping how SVMs work and their performance in different scenarios.

- 🔍 A maximal margin classifier aims to maximize the distance (margin) between different classes, improving classification accuracy.

- ⚠️ Maximal margin classifiers are sensitive to outliers, which can skew results and lead to poor classification performance.

- 🔧 Soft margin classifiers allow for some misclassifications to enhance overall performance, balancing bias and variance.

- 📈 SVMs can operate in higher dimensions, with the support vector classifier represented as a hyperplane separating the classes.

- 📊 Kernel functions (e.g., polynomial, radial basis function) enable the transformation of data into higher dimensions without explicit calculation.

- ✨ The kernel trick is a computational strategy that allows SVMs to work efficiently in high-dimensional spaces by calculating relationships without transforming the data.

- 🌐 Cross-validation is a crucial method for determining the best parameters for SVMs, such as the degree of polynomial kernels.

- 💡 Support vector machines excel in situations where data overlap exists, allowing them to create effective classification boundaries.

Q & A

What is the main objective of Support Vector Machines (SVM)?

-The main objective of Support Vector Machines is to find a decision boundary that maximizes the margin between different classes of data points.

What are the key components of the bias-variance tradeoff mentioned in the script?

-The bias-variance tradeoff involves balancing the model's complexity and its performance. Low bias means the model is closely fitted to the training data, while high variance indicates sensitivity to noise and outliers.

What does the term 'soft margin' refer to in SVM?

-A 'soft margin' refers to allowing some misclassifications in order to create a more robust decision boundary, helping the classifier generalize better to new data.

How does a maximal margin classifier differ from a soft margin classifier?

-A maximal margin classifier strictly separates the classes with a decision boundary that is highly sensitive to the training data, while a soft margin classifier allows for some misclassifications to reduce sensitivity to outliers.

What role do support vectors play in SVM?

-Support vectors are the data points that are closest to the decision boundary and are critical in determining its position. They influence the placement of the classifier significantly.

What is the function of kernel functions in SVM?

-Kernel functions allow SVMs to transform data from a lower dimension to a higher dimension, enabling the model to find more complex decision boundaries that can better separate classes.

Can you explain the concept of the kernel trick?

-The kernel trick is a computational method that allows SVMs to calculate relationships between points in a high-dimensional space without explicitly transforming the data, making computations more efficient.

What is the difference between a polynomial kernel and a radial basis function (RBF) kernel?

-A polynomial kernel transforms the data based on polynomial degrees, systematically increasing dimensions, while an RBF kernel behaves like a weighted nearest neighbor model, emphasizing the influence of the closest data points.

Why are SVMs considered sensitive to outliers in the training data?

-SVMs are sensitive to outliers because a single outlier can significantly affect the position of the decision boundary, leading to misclassifications of other data points.

What happens when data classes overlap significantly in SVM?

-When data classes overlap significantly, SVMs may struggle to find a clear decision boundary, leading to many misclassifications and reduced effectiveness in classifying new observations.

Outlines

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードMindmap

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードKeywords

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードHighlights

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードTranscripts

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレード関連動画をさらに表示

Support Vector Machines (SVM) - the basics | simply explained

Support Vector Machines - THE MATH YOU SHOULD KNOW

Machine Learning Fundamentals: The Confusion Matrix

Gradient Boost Part 1 (of 4): Regression Main Ideas

Gaussian Naive Bayes, Clearly Explained!!!

Neural Networks Part 8: Image Classification with Convolutional Neural Networks (CNNs)

5.0 / 5 (0 votes)