Face recognition in real-time | with Opencv and Python

Summary

TL;DR: In this tutorial, Sergio demonstrates how to build facial recognition projects using Python libraries. He explains the difference between face detection and facial recognition, guides viewers through installing the necessary libraries (OpenCV and face_recognition), and shows how to encode and compare images of faces. The tutorial then moves on to real-time face recognition with a webcam, identifying individuals in a live video feed. Sergio also shares tips for improving accuracy and speed, and encourages viewers to engage with the content.

Takeaways

- 😀 The video is a tutorial by Sergio on building visual recognition projects, specifically focusing on facial recognition.

- 🔍 Sergio explains the difference between face detection (surrounding a face with a box) and facial recognition (identifying the person by name).

- 📚 Viewers are instructed to download certain files from Sergio's website, including 'main.py' and 'simple_facerec.py', to follow along with the tutorial.

- 🛠️ Two libraries are required for the project: 'opencv-python' and 'face_recognition', which are installed via the terminal using pip commands.

- 📷 The process involves loading and displaying images, converting them from BGR to RGB format, and encoding them for comparison using the 'face_recognition' library.

- 🔗 Sergio demonstrates how to compare two images to determine if they are the same person, using the 'compare_faces' function from the 'face_recognition' library.

- 🖼️ The tutorial includes a practical example of encoding and comparing images of well-known figures like Elon Musk and Messi.

- 💻 Sergio guides viewers through writing code for real-time facial recognition using a webcam, emphasizing the importance of the 'simple_facerec.py' module.

- 📹 The real-time demonstration includes capturing video frames, detecting faces, and drawing rectangles around detected faces with the 'cv2.rectangle' function.

- 📝 Sergio discusses the importance of image titles for associating names with detected faces and displays names using the 'cv2.putText' function.

- 🔄 The video concludes with suggestions for improving the project, such as enhancing accuracy, speed, and detection capabilities, and encourages viewers to subscribe for more content.

Q & A

What is the main topic of the video?

-The main topic of the video is teaching viewers how to build facial recognition projects using Python libraries such as OpenCV and face_recognition.

Who is the presenter of the video?

-The presenter of the video is Sergio, who helps companies, students, and freelancers to build visual recognition projects.

What two face-related tasks does the video distinguish?

-The video distinguishes face detection, which locates any face and surrounds it with a box, from facial recognition, which additionally identifies the person by name.

What are the two libraries that need to be installed for the project?

-The two libraries that need to be installed are 'opencv-python' and 'face_recognition'.
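
After running `pip install opencv-python face_recognition` in the terminal, a quick check like the one below can confirm that both packages are importable. It is a small sketch using only the standard library; `missing_packages` is a hypothetical helper name.

```python
import importlib.util

# Map each import name to the pip package that provides it.
# Note that 'opencv-python' is imported under the name 'cv2'.
REQUIRED = {"cv2": "opencv-python", "face_recognition": "face_recognition"}

def missing_packages(required=REQUIRED):
    """Return the pip names of required packages that cannot be imported."""
    return [pip_name for module, pip_name in required.items()
            if importlib.util.find_spec(module) is None]

missing = missing_packages()
if missing:
    print("Missing packages, try: pip install " + " ".join(missing))
else:
    print("All required packages are importable.")
```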

What is the purpose of converting the image format from BGR to RGB?

-The image format is converted from BGR to RGB because OpenCV uses BGR format by default, whereas the face_recognition library requires RGB format.

What is the role of the 'face_recognition' library in the project?

-The 'face_recognition' library simplifies the steps of facial recognition by encoding images and comparing them to identify known faces in real-time.
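
The encode-and-compare step can be sketched as below. `encodings_match` and `same_person` are hypothetical helper names. The first reproduces in plain NumPy the idea behind `compare_faces`: two encodings match when their Euclidean distance is within a tolerance, whose library default is 0.6. The second uses the library itself, and for brevity it loads files with `face_recognition.load_image_file`, which already returns RGB, instead of the `cv2.imread` plus conversion route described in the video.

```python
import numpy as np

def encodings_match(known, candidate, tolerance=0.6):
    """Return True if two face encodings are within `tolerance` of each other."""
    distance = np.linalg.norm(np.asarray(known) - np.asarray(candidate))
    return bool(distance <= tolerance)

def same_person(path_a, path_b):
    """Compare the first face found in each image file (needs face_recognition)."""
    import face_recognition  # imported lazily so encodings_match works without it
    enc_a = face_recognition.face_encodings(face_recognition.load_image_file(path_a))
    enc_b = face_recognition.face_encodings(face_recognition.load_image_file(path_b))
    if not enc_a or not enc_b:
        raise ValueError("no face found in one of the images")
    # compare_faces takes a list of known encodings and one encoding to check.
    return bool(face_recognition.compare_faces([enc_a[0]], enc_b[0])[0])
```

A lower tolerance makes matching stricter, which reduces false matches at the cost of missing some true ones.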

How does the video demonstrate the facial recognition process?

-The video demonstrates the facial recognition process by encoding images of known individuals, comparing them to other images or live video feeds, and identifying matches by name.
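
Identifying a match by name can be sketched as a nearest-neighbor lookup over the known encodings. This is an illustration under stated assumptions rather than the video's code: `identify` is a hypothetical helper, and the three-dimensional vectors are toy stand-ins for the 128-dimensional encodings the library produces.

```python
import numpy as np

def identify(known_encodings, known_names, candidate, tolerance=0.6):
    """Return the name of the closest known face, or "Unknown" if none is close enough."""
    if len(known_encodings) == 0:
        return "Unknown"
    # Euclidean distance from the candidate to every known encoding.
    distances = np.linalg.norm(
        np.asarray(known_encodings) - np.asarray(candidate), axis=1)
    best = int(np.argmin(distances))
    return known_names[best] if distances[best] <= tolerance else "Unknown"

# Toy encodings (made up for the demo).
known = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
names = ["Elon Musk", "Messi"]
print(identify(known, names, [0.9, 1.0, 1.0]))
```

Picking the closest encoding, rather than the first one within tolerance, avoids mislabeling when several known faces fall inside the threshold.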

What is the importance of downloading the files from the presenter's website?

-Downloading the files from the presenter's website is important because they contain the images and Python files, such as 'main.py' and 'simple_facerec.py', that the project requires.

How can the facial recognition accuracy be improved?

-Accuracy can be improved by adding more diverse datasets and ensuring good lighting conditions for face detection. Using a GPU speeds up processing rather than improving accuracy as such.

What is the significance of the 'simple_facerec.py' file in the project?

-The 'simple_facerec.py' file is not an installable library but a custom Python module supplied with the project files. It is used for detecting faces and drawing rectangles around them in real-time video feeds.

How can viewers test the facial recognition project with their own images?

-Viewers can test the facial recognition project by placing their own images in the specified folder, ensuring the images are named with the person's name for correct identification during the project execution.
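
Deriving the displayed name from the image file name can be sketched with the standard library alone. `name_from_path` and `names_in_folder` are hypothetical helper names, and the list of extensions is an assumption.

```python
import os

def name_from_path(image_path):
    """Return the file name without directory or extension, i.e. the person's name."""
    return os.path.splitext(os.path.basename(image_path))[0]

def names_in_folder(folder, extensions=(".jpg", ".jpeg", ".png")):
    """Map each image file in `folder` to the name derived from its file name."""
    return {f: name_from_path(f) for f in sorted(os.listdir(folder))
            if f.lower().endswith(extensions)}

print(name_from_path("images/Elon Musk.jpg"))
```

With this convention, renaming a file is all it takes to change the label shown for that face.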

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

How to Install and Run Multiple Python Versions on macOS | pyenv & virtualenv Setup Tutorial

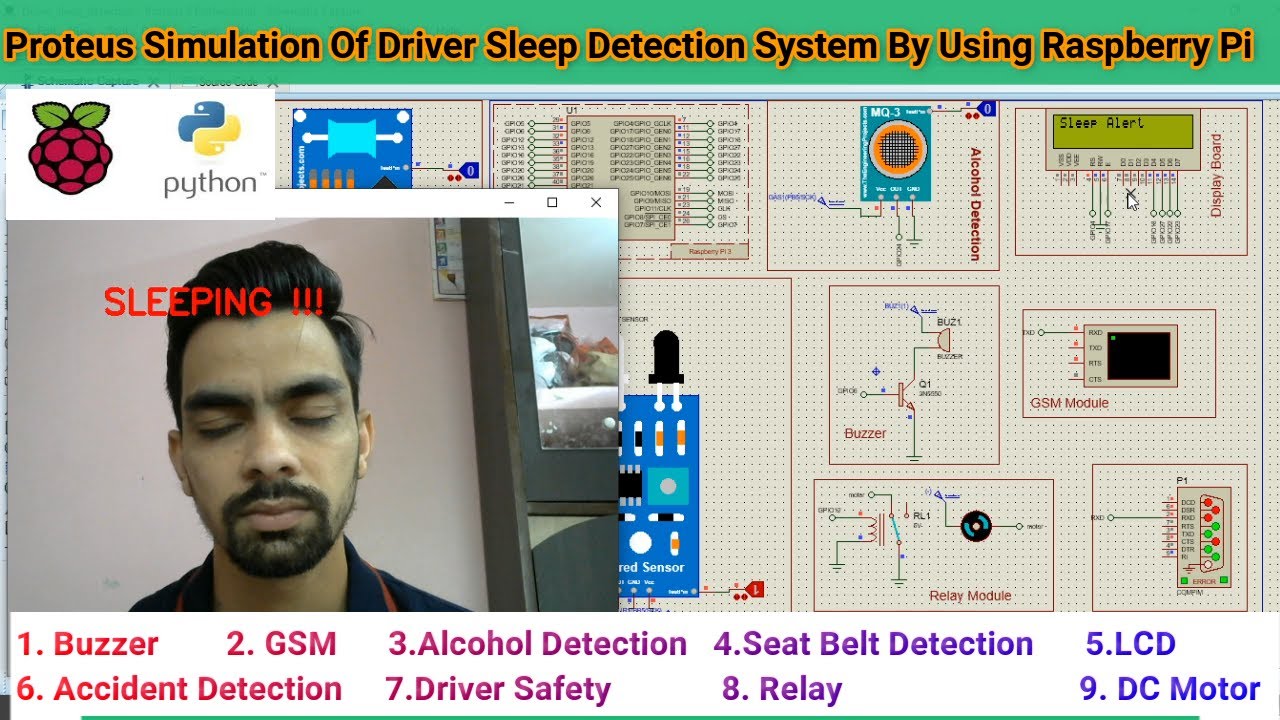

Driver Drowsiness Detection System

Face Recognition With Raspberry Pi + OpenCV + Python

[python] Program Regresi Linear Sederhana

Object Detection using OpenCV Python in 15 Minutes! Coding Tutorial #python #beginners

Raspberry Pi Pico Project - Thermometer & Clock ST7735 & DS3231

5.0 / 5 (0 votes)