These AI/ML papers give you an unfair advantage

Summary

TLDR

This video script advises machine learning newcomers to master fundamentals such as linear regression and neural networks before delving into research papers. It stresses that reading papers matters both for research and for staying current in the field, especially at big tech companies. The script then lists five essential papers, including 'Attention Is All You Need' for understanding Transformers, 'Handwritten Digit Recognition with a Back-Propagation Network' for the origins of CNNs, and 'Retrieval Augmented Generation for Knowledge-Intensive NLP Tasks' for insight into the neural networks behind RAG.

Takeaways

- 📚 For beginners in machine learning, focusing on research papers is not the initial priority; mastering the fundamentals is more important.

- 🔗 The speaker provides links to videos on basic machine learning topics such as linear regression, gradient descent, and neural networks, each with a quiz.

- 🏢 In many large tech companies, engineers are expected to read papers to stay updated on the latest theories.

- 📈 Reading papers is a significant time investment, so it's crucial not to try to read every paper; prioritization is key.

- 📝 'Attention Is All You Need' is highlighted as an essential paper, foundational to understanding the Transformer architecture used in Google Translate and GPT.

- 👨‍🔬 The paper on handwritten digit recognition is noted as one of the first to train CNNs with back-propagation; its first author is Yann LeCun, a key figure in AI.

- 📸 'An Image is Worth 16x16 Words' is recognized for proposing that Transformers can outperform CNNs in image classification when trained on sufficient data.

- 🧠 The paper on low-rank adaptation of LLMs (LoRA) introduces a matrix-multiplication trick that drastically cuts the number of trainable parameters, making fine-tuning possible without expensive GPUs; it has inspired a large community of enthusiasts.

- 🔍 The original paper on RAG from Facebook AI is recommended for its insights into retrieval augmented generation for knowledge-intensive NLP tasks.

- 💬 The speaker invites viewers to comment if they have specific papers they're interested in, fostering engagement and further discussion.

Q & A

What is the main advice given for beginners in machine learning regarding research papers?

-For beginners in machine learning, the main advice is not to focus primarily on research papers but to master the basics, such as linear regression, gradient descent, and neural networks.

Why are the basics of machine learning emphasized over research papers for beginners?

-Mastering the basics is emphasized because it provides a solid foundation, which is crucial before diving into the complexity of research papers.

What are the three machine learning topics mentioned in the script that have accompanying videos and quizzes?

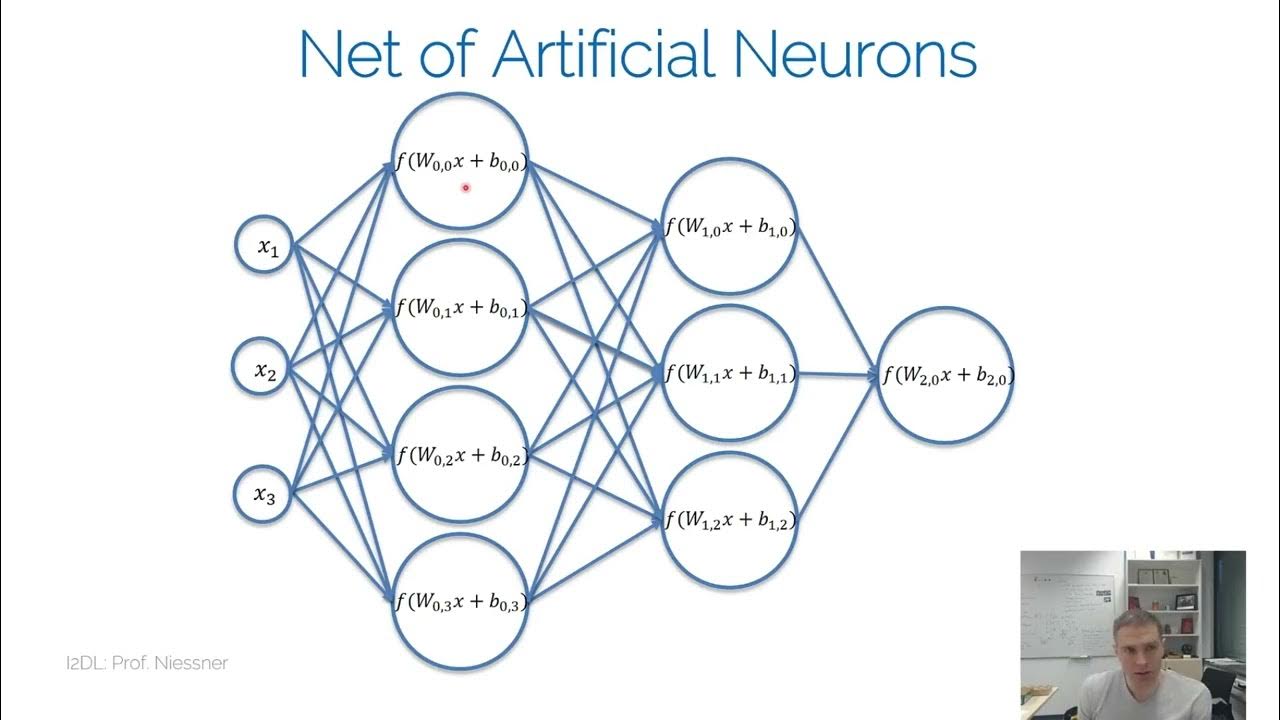

-The three topics are linear regression, gradient descent, and neural networks, each with a video and a multiple-choice quiz.

Why is it important for engineers in big tech companies to read research papers?

-Engineers in big tech companies are expected to read papers to stay up to date on the latest theories and advancements in the field, as they may be relevant to their work.

What is the fifth essential paper mentioned in the script, and what is its significance?

-The fifth essential paper is 'Attention Is All You Need,' which introduced the Transformer architecture; it is significant because that architecture underpins technologies like Google Translate and GPT.
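The mechanism at the heart of that paper is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V: each query is compared with every key, the scores are turned into weights, and the output is a weighted average of the values. Below is a minimal pure-Python sketch, assuming a single head, no masking, and no learned projection matrices; `softmax` and `attention` are illustrative helper names, not a library API.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention for one head, no masking.

    Q: list of query vectors (n x d_k)
    K: list of key vectors   (m x d_k)
    V: list of value vectors (m x d_v)
    Returns an n x d_v list; each row is a weighted average of V's rows.
    """
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Mix the value vectors using the attention weights.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out

Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
print(attention(Q, K, V))
```

Because the weights sum to 1, each output row is a convex combination of the value rows; here the query matches the first key more closely, so the output leans toward the first value vector.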

What is the fourth paper on the list, and why is it considered essential?

-The fourth paper is 'Handwritten Digit Recognition with a Back-Propagation Network,' which is considered essential because it was one of the first to train convolutional neural networks (CNNs) with back-propagation.
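The operation a CNN layer applies is a small kernel slid across the image, reusing the same weights at every position. A minimal single-channel sketch in pure Python, assuming stride 1, no padding, and a hand-picked edge-detecting kernel in place of weights learned by back-propagation; like most deep learning libraries, it computes cross-correlation (the kernel is not flipped). `conv2d` is a hypothetical helper name.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation (what deep learning libraries call 'convolution').

    image: H x W list of lists; kernel: kh x kw list of lists.
    Output shape is (H - kh + 1) x (W - kw + 1).
    """
    H, W = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(H - kh + 1):
        row = []
        for j in range(W - kw + 1):
            # The same kernel weights are applied at every (i, j): weight sharing.
            row.append(sum(kernel[a][b] * image[i + a][j + b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out

# A toy 4x4 "image" with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
# Hand-picked vertical-edge detector (hypothetical; a trained CNN learns its kernels).
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d(image, kernel))  # → [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The response is nonzero only in the middle column, where the kernel straddles the edge; in a trained network, back-propagation adjusts the kernel values so that such responses become useful for classifying digits.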

Who is Yann LeCun, and why is he mentioned in the script?

-Yann LeCun is the first author of the fourth paper; he is mentioned because he is Meta's chief AI scientist and is considered the 'Godfather of CNNs.'

What is the central idea of the paper 'An Image is Worth 16x16 Words'?

-The central idea is that large Transformer models can outperform CNNs in image classification when trained on large enough datasets.

What trick does the script mention for representing an image as input to a Transformer?

-The trick is to split the image into fixed-size patches (16x16 pixels, hence the title) and treat each flattened, linearly projected patch as one token. Feeding individual pixels would make the sequence far too long for a Transformer: a 224x224 image has 50,176 pixels but only 196 patches.
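The patch arithmetic behind 'An Image is Worth 16x16 Words' is easy to check. A minimal sketch, assuming a square single-channel image stored as nested lists and a 16-pixel patch size; a real Vision Transformer would also flatten across color channels and apply a learned linear projection to each patch, which is omitted here. `image_to_patches` is a hypothetical helper name.

```python
def image_to_patches(image, patch=16):
    """Split an H x W image into non-overlapping patch x patch blocks,
    scanning row by row, and flatten each block into one vector ("token").

    Assumes H and W are divisible by `patch`.
    """
    H, W = len(image), len(image[0])
    if H % patch or W % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, H, patch):
        for left in range(0, W, patch):
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens

# A 224 x 224 image: 50,176 pixels, but only (224 / 16) ** 2 = 196 patch tokens.
image = [[0.0] * 224 for _ in range(224)]
tokens = image_to_patches(image)
print(len(tokens), len(tokens[0]))  # → 196 256
```

The sequence length shrinks by a factor of 16 x 16 = 256, which is what makes self-attention over the whole image affordable.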

What is the second paper on the list, and what does it discuss?

-The second paper is 'LoRA: Low-Rank Adaptation of Large Language Models,' which introduces a matrix-multiplication trick for fine-tuning large language models (LLMs) with far fewer trainable parameters, so fine-tuning no longer requires expensive GPUs.
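The trick is to freeze the pretrained weight matrix W (d x k) and learn only a low-rank update BA, where B is d x r and A is r x k for a small rank r, so the adapted layer computes h = Wx + BAx (the paper also scales the update by alpha/r, omitted here). A minimal pure-Python sketch of the forward pass and the parameter savings, assuming toy sizes and hand-set values; training A and B is left out, and `matvec`, `lora_forward`, and `param_counts` are hypothetical helper names.

```python
def matvec(M, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def lora_forward(W, A, B, x):
    """h = W x + B (A x): W stays frozen; only A (r x k) and B (d x r) are trained.

    Computing A x first keeps the extra work proportional to the rank r
    instead of materializing the full d x k product B A.
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + u for b, u in zip(base, update)]

def param_counts(d, k, r):
    # Full fine-tuning updates all d*k weights; LoRA trains only r*(d + k).
    return d * k, r * (d + k)

# Toy example: d = k = 3, rank r = 1.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
A = [[1.0, 1.0, 1.0]]       # r x k
# The paper initializes B to zero; a nonzero B is used here so the update is visible.
B = [[0.5], [0.0], [0.0]]   # d x r
x = [1.0, 2.0, 3.0]
print(lora_forward(W, A, B, x))  # → [4.0, 2.0, 3.0]

# For one hypothetical 4096 x 4096 layer with r = 8:
print(param_counts(4096, 4096, 8))  # → (16777216, 65536)
```

In this hypothetical layer, LoRA trains 256 times fewer parameters than full fine-tuning, which is where the memory savings come from.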

What is the number one paper on the list, and what does it focus on?

-The number one paper is 'Retrieval Augmented Generation for Knowledge-Intensive NLP Tasks,' which focuses on the workings of the RAG model, including similarity search, embedding models, and vector databases.
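The retrieval step boils down to similarity search over embeddings: encode the query and every document as vectors, then return the documents whose vectors score highest against the query. The original paper uses a learned dense retriever with maximum inner product search; the sketch below swaps in cosine similarity, brute-force search, and hand-written toy vectors in place of a real embedding model and vector database, so all names and numbers are illustrative only.

```python
import math

def cosine(u, v):
    # Cosine similarity: dot product divided by the product of the vector norms.
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def retrieve(query_vec, doc_vecs, k=2):
    """Return indices of the k documents most similar to the query (brute force).

    A vector database does the same job with an approximate index so it
    scales to millions of documents.
    """
    ranked = sorted(range(len(doc_vecs)),
                    key=lambda i: cosine(query_vec, doc_vecs[i]),
                    reverse=True)
    return ranked[:k]

# Hypothetical 3-d "embeddings"; a real system would get these from an embedding model.
docs = ["Paris is the capital of France.",
        "Transformers use self-attention.",
        "The Eiffel Tower is in Paris."]
doc_vecs = [[0.9, 0.1, 0.0],
            [0.0, 0.2, 0.9],
            [0.8, 0.3, 0.1]]
query_vec = [1.0, 0.2, 0.0]  # stands in for "What is the capital of France?"

top = retrieve(query_vec, doc_vecs, k=2)
print(top)  # → [0, 2]
# In RAG, the retrieved passages are then fed, together with the query, to the generator.
context = " ".join(docs[i] for i in top)
print(context)
```

Swapping the brute-force `sorted` for an approximate nearest-neighbor index is the main thing a vector database adds; the ranking logic stays the same.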

Why is understanding the neural networks behind RAG considered useful?

-Understanding the neural networks behind RAG is useful because RAG is an increasingly important tool in NLP, and knowing its underlying mechanisms can enhance one's ability to apply it effectively.
