Explainable AI explained! | #1 Introduction

Summary

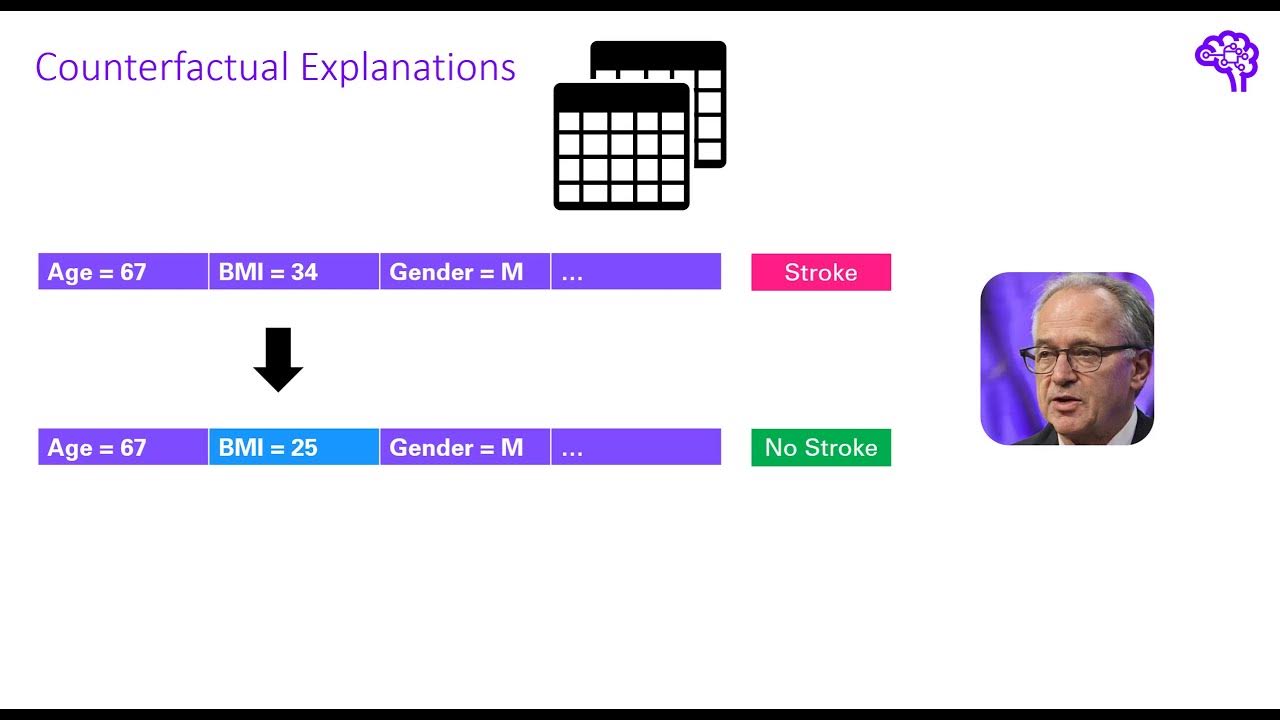

TLDR

In this new series, 'Explainable AI Explained,' the focus is on understanding machine learning models with explainable AI techniques. The series covers methods for identifying important input features, ensuring model robustness, and providing human-understandable explanations. AI systems are often called black boxes because they are so complex, and this makes these techniques necessary, especially in critical areas like healthcare and autonomous driving. The series will explore both interpretable models and post-hoc methods such as LIME and SHAP, as well as counterfactual explanations and layer-wise relevance propagation, with practical examples and insights from the field.

Takeaways

- 📘 The series 'Explainable AI Explained' will cover how to better understand machine learning models using various methods from the explainable AI research field.

- 🤖 Explainable AI is crucial for understanding what machine learning models learn and which parts of the input matter most. It is also crucial for ensuring model robustness.

- 🔍 The complexity of AI systems has led to them being seen as 'black boxes,' making it challenging even for experts to understand their inner workings.

- 🚗 Explainable AI is especially important in safety-critical areas like autonomous driving and healthcare, where understanding model decisions is essential.

- 📊 The field of explainable AI, also known as interpretable machine learning, has attracted growing interest, as reflected in trend data and research activity.

- ⚖️ A trade-off often exists between simple, interpretable models and complex models that provide better performance but are harder to understand.

- 🧠 The series will explore different explainable AI methods, including model-based and post-hoc approaches, with a focus on methods like LIME and SHAP.

- 🔗 Methods in explainable AI can be categorized as either model-agnostic, applicable to any model, or model-specific, designed for specific models.

- 🌐 The series will also cover the difference between global and local approaches in explainable AI, focusing on explaining either the entire model or individual predictions.

- 📚 The content is largely based on the book 'Interpretable Machine Learning' by Christoph Molnar, which provides an extensive overview of explainable AI methods.

Q & A

What is the main focus of the video series 'Explainable AI Explained'?

-The main focus of the series is to explain and discuss methods for understanding machine learning models, particularly through the research field of Explainable AI (XAI).

Why is Explainable AI important in fields like autonomous driving and healthcare?

-Explainable AI is crucial in these fields because they involve safety and health-critical environments where understanding and validating AI decisions is essential for trust and transparency.

What is meant by AI models being referred to as 'black boxes'?

-AI models are called 'black boxes' because their complexity makes their inner workings hard to follow, even for experts. As a result, it is difficult to understand how they arrive at specific decisions.

What are the two main types of machine learning models discussed in terms of interpretability?

-The two main types are simple linear models and highly non-linear models. Simple linear models are easy for humans to interpret but may perform poorly on complex problems. Highly non-linear models perform better but are too complex for humans to interpret directly.
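Why a linear model counts as interpretable can be shown in a few lines. Its prediction splits exactly into one contribution per feature (weight times value) plus a bias. The minimal sketch below assumes a hypothetical, hand-set model. The feature names, weights, and bias are illustrative only and are not fitted to any real data.

```python
# Hypothetical linear model: weights and bias are hand-picked for illustration,
# not learned from real data. Features are assumed to be pre-scaled.
WEIGHTS = {"rooms": 0.5, "area_100m2": 2.0, "distance_km": -0.25}
BIAS = 1.0


def predict(x: dict[str, float]) -> float:
    """Linear score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def explain(x: dict[str, float]) -> dict[str, float]:
    """Per-feature contributions w_j * x_j; together with BIAS they sum to predict(x)."""
    return {name: WEIGHTS[name] * x[name] for name in WEIGHTS}


house = {"rooms": 4.0, "area_100m2": 1.5, "distance_km": 8.0}
contributions = explain(house)
print(predict(house))   # → 4.0
print(contributions)    # → {'rooms': 2.0, 'area_100m2': 3.0, 'distance_km': -2.0}
```

A highly non-linear model offers no exact additive breakdown like this one. Additive post-hoc methods such as SHAP aim to address that gap.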

What is the difference between model-based and post-hoc explainability methods?

-Model-based methods make the machine learning algorithm itself interpretable. Post-hoc methods produce human-understandable explanations after the model has been trained, often without requiring access to the model's internal workings.

What is the difference between global and local explainability approaches?

-Global explainability aims to explain the entire model, while local explainability focuses on understanding specific predictions or parts of the model, especially in areas with complex decision boundaries.
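A simple sensitivity analysis can illustrate the local/global distinction. It uses central finite differences and is not one of the named methods from the series. A local explanation asks how the output reacts to each feature around one input. A global summary averages that reaction over a dataset. The sketch assumes a hypothetical two-feature `black_box` function that stands in for a trained model.

```python
from statistics import mean


def black_box(x1: float, x2: float) -> float:
    """Hypothetical non-linear model standing in for a trained black box."""
    return x1 ** 2 + 3.0 * x2


def local_sensitivity(x1: float, x2: float, h: float = 1e-4) -> tuple[float, float]:
    """Central finite differences: output change per unit change of each feature at ONE input."""
    d1 = (black_box(x1 + h, x2) - black_box(x1 - h, x2)) / (2 * h)
    d2 = (black_box(x1, x2 + h) - black_box(x1, x2 - h)) / (2 * h)
    return d1, d2


def global_sensitivity(points: list[tuple[float, float]]) -> tuple[float, float]:
    """Average absolute local sensitivity over a dataset: one summary for the whole model."""
    local = [local_sensitivity(a, b) for a, b in points]
    return mean(abs(d1) for d1, _ in local), mean(abs(d2) for _, d2 in local)


data = [(-2.0, 0.0), (-1.0, 1.0), (0.0, 2.0), (1.0, 3.0), (2.0, 4.0)]
print([round(v, 3) for v in local_sensitivity(0.0, 2.0)])  # → [0.0, 3.0]
print([round(v, 3) for v in local_sensitivity(2.0, 4.0)])  # → [4.0, 3.0]
print([round(v, 3) for v in global_sensitivity(data)])     # → [2.4, 3.0]
```

At the input (0, 2), the first feature looks locally irrelevant. Its global score is still clearly non-zero. This is why local and global explanations of the same model can disagree.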

What are model-agnostic and model-specific explainability methods?

-Model-agnostic methods can be applied to any type of machine learning model, whereas model-specific methods are designed for a particular model type, such as neural networks.
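Permutation feature importance is one common example of a model-agnostic, post-hoc method. It treats the model purely as a function to call. It shuffles one feature column at a time and measures how much the error grows. The sketch below is a from-scratch illustration that assumes a hypothetical `black_box` model and synthetic data. Libraries such as scikit-learn ship their own implementation, and this sketch does not reproduce it.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def mse(model: Model, X: List[List[float]], y: List[float]) -> float:
    """Mean squared error of the model's predictions on (X, y)."""
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[List[float]], y: List[float],
                           n_repeats: int = 5, seed: int = 0) -> List[float]:
    """Average increase in MSE when each feature column is shuffled.

    Model-agnostic: the model is only ever called, never inspected.
    """
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mse(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical black box: strong effect of feature 0, weak effect of feature 1,
# and feature 2 is ignored entirely.
def black_box(row: Sequence[float]) -> float:
    return 3.0 * row[0] + 0.5 * row[1] ** 2


data_rng = random.Random(42)
X = [[data_rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(200)]
y = [black_box(row) + data_rng.gauss(0.0, 0.1) for row in X]
importances = permutation_importance(black_box, X, y)
print([round(v, 3) for v in importances])
```

The ignored feature never changes any prediction, so its importance comes out as exactly zero. The strongly used feature dominates.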

What types of explanations can Explainable AI methods provide?

-Explainable AI methods can provide various types of explanations, including correlation plots, feature importance summaries, data points that clarify the model's behavior, and simple surrogate models that approximate complex ones.

What is a surrogate model in the context of Explainable AI?

-A surrogate model is a simpler, interpretable model trained to approximate the predictions of a complex machine learning model. Its explanations are easier for humans to understand.
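A global surrogate can be sketched in a few lines. First, query the black box. Then fit a simple model to its outputs rather than to ground-truth labels. Finally, report how faithfully the simple model mimics the black box. This sketch assumes a hypothetical one-feature `black_box` function and uses an ordinary least-squares line as the surrogate. Fidelity is measured as R² against the black box's own predictions.

```python
from statistics import mean
from typing import List, Tuple


def black_box(x: float) -> float:
    """Hypothetical complex model (stand-in for, e.g., a neural network)."""
    return x + 0.3 * x ** 3 - 0.5 * abs(x)


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx


def r_squared(ys: List[float], preds: List[float]) -> float:
    """Share of the variance in ys reproduced by preds."""
    my = mean(ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


xs = [i / 10 for i in range(-20, 21)]
bb_preds = [black_box(x) for x in xs]  # targets come from the black box, not ground truth
slope, intercept = fit_line(xs, bb_preds)
surrogate_preds = [slope * x + intercept for x in xs]
fidelity = r_squared(bb_preds, surrogate_preds)
print(f"surrogate: y ~ {slope:.3f}*x + {intercept:.3f}, fidelity R^2 = {fidelity:.3f}")
```

A fidelity close to 1 means the line is a reasonable stand-in for the black box on this input range. A low value means the surrogate's explanation should not be trusted.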

Which specific Explainable AI methods will be discussed in the video series?

-The series will cover interpretable machine learning models, LIME, SHAP, counterfactual explanations, adversarial attacks, and layer-wise relevance propagation, with a focus on their application and effectiveness.
