HOW TO ANSWER CICD PROCESS IN AN INTERVIEW | DEVOPS INTERVIEW QUESTIONS #cicd #devops #jenkins #argocd

Summary

TL;DR: In this video, Abhishek shows aspiring DevOps engineers how to explain a CI/CD pipeline in an interview. He starts with the version control system (Git) and the target platform (Kubernetes), then walks through the stages of a Jenkins-orchestrated pipeline: code checkout, build, unit testing, code scanning, image building, and image scanning. He then covers pushing the image to a registry and updating the Kubernetes manifests so that a tool like Argo CD can deploy the change. The walkthrough gives viewers a simple structure for describing their own CI/CD implementation in an interview.

Takeaways

- 😀 CI/CD is a critical component for DevOps engineers and a common interview topic.

- 🔧 The CI/CD process starts with a version control system such as Git, hosted on a platform like GitHub, GitLab, or Bitbucket.

- 🎯 The target platform for deployment is often Kubernetes, which is why the pipeline has to produce a container image.

- 🔄 A user's code commit, submitted through a pull request and reviewed, triggers the CI/CD pipeline.

- 🛠️ Jenkins is used as an orchestrator to automate the CI/CD pipeline, starting with the checkout stage.

- 🏗️ The build stage involves compiling code and running unit tests, with optional static code analysis.

- 🔍 Code scanning is performed to identify security vulnerabilities and ensure code quality.

- 📦 Image building creates a Docker (container) image, because the target platform is Kubernetes.

- 🔬 Image scanning is crucial to verify the security and integrity of the created container image.

- 🚀 The final CI stage pushes the image to a registry like Docker Hub or AWS ECR.

- 📝 Declarative Jenkins pipelines are preferred because they are easier to collaborate on; scripted pipelines offer more flexibility.

- 🔄 CI/CD pipelines can be extended to deploy to Kubernetes using GitOps tools like Argo CD.

- 🔧 Alternative deployment methods include using Ansible, shell scripts, or Python scripts for automation.

- 🔒 Security checks are an integral part of the pipeline to ensure the code and images are free from vulnerabilities.

- 📚 The video script provides a detailed guide for explaining a CI/CD pipeline in a DevOps interview context.
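The stage order in the takeaways above can be sketched as a small fail-fast runner. This is only an illustrative model in Python, not a Jenkinsfile: `run_pipeline` and the placeholder stage bodies are assumptions made for the example, and a real pipeline would call the actual build, scan, and push tools in each stage.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
# The stage bodies used below are placeholders (assumptions), not real Jenkins steps.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stop at the first failure, and return a log."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later stages are skipped once a stage fails
    return log


stages = [
    ("checkout", lambda: True),
    ("build and unit test", lambda: True),
    ("code scan", lambda: True),
    ("image build", lambda: True),
    ("image scan", lambda: False),  # simulate a scan that finds a problem
    ("image push", lambda: True),
]

for line in run_pipeline(stages):
    print(line)
```

Because the simulated image scan fails, the log ends at that stage and the image push never runs, which is the behavior an interviewer usually expects you to describe for a security gate.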

Q & A

What is the primary topic discussed in the video script?

- The video script explains how to describe a CI/CD pipeline in a DevOps engineering interview.

Why is CI/CD a critical component in a DevOps engineer's job role?

- CI/CD is critical to a DevOps engineer's role because it automates software delivery, helps ensure code quality, and enables rapid, reliable deployments.

What is the first step in setting up a CI/CD pipeline according to the script?

- The first step in setting up a CI/CD pipeline is to start with a version control system (VCS) such as Git, hosted on a platform like GitHub, GitLab, or Bitbucket.

What is the role of a CI/CD orchestrator like Jenkins in the CI/CD pipeline?

- The role of a CI/CD orchestrator like Jenkins is to automate the pipeline: it triggers builds and tests when changes are pushed to the repository and manages the flow from one stage to the next.

What is the purpose of the checkout stage in a Jenkins pipeline?

- The purpose of the checkout stage in a Jenkins pipeline is to retrieve the latest code commit from the version control system to start the build process.

What actions are typically performed during the build and unit testing stage of a Jenkins pipeline?

- During the build and unit testing stage, the code is compiled, a build artifact is created, and unit tests are executed to verify the functionality of the code.
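As a rough illustration of how a unit testing stage decides pass or fail, the sketch below runs a test case with Python's standard `unittest` module and reduces the result to a single boolean. The `add` function, the `AddTests` class, and `unit_tests_pass` are hypothetical names invented for this example; a real project would run its own test suite with whatever build tool it uses.

```python
import io
import unittest


def add(a: int, b: int) -> int:
    """Hypothetical application code under test."""
    return a + b


class AddTests(unittest.TestCase):
    def test_add(self):
        self.assertEqual(add(2, 3), 5)


def unit_tests_pass() -> bool:
    """Run the tests and return True only if every test succeeded."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddTests)
    # Send the runner's report to an in-memory buffer to keep the output quiet.
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    return runner.run(suite).wasSuccessful()


print("unit tests passed:", unit_tests_pass())
```

The stage succeeds only when `unit_tests_pass()` returns `True`; any failing test stops the pipeline before an image is built.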

What is the importance of code scanning in the CI/CD pipeline?

- Code scanning is important for identifying security vulnerabilities, code quality issues, and potential bugs early in the development process, ensuring the code meets security and quality standards.

Why is image scanning performed after building a container image in the CI/CD pipeline?

- Image scanning is performed to check the created container image for known security vulnerabilities before it is pushed and deployed.
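One way to picture an image scan as a pipeline gate is a severity threshold: the stage fails if any finding is at or above a chosen level. The sketch below assumes a simplified finding format (a dict with `id` and `severity`) and made-up finding IDs; real scanners have their own report formats, so treat this as a model of the decision only.

```python
from typing import Dict, List, Tuple

# Severity levels from least to most serious (an assumed, simplified scale).
SEVERITY_ORDER = ["LOW", "MEDIUM", "HIGH", "CRITICAL"]


def scan_gate(findings: List[Dict[str, str]],
              fail_on: str = "HIGH") -> Tuple[bool, List[Dict[str, str]]]:
    """Return (passed, blocking_findings) for a list of scan findings."""
    threshold = SEVERITY_ORDER.index(fail_on)
    blocking = [
        f for f in findings
        if SEVERITY_ORDER.index(f["severity"]) >= threshold
    ]
    return (len(blocking) == 0, blocking)


# Hypothetical findings; the IDs are placeholders, not real advisories.
findings = [
    {"id": "EXAMPLE-0001", "severity": "LOW"},
    {"id": "EXAMPLE-0002", "severity": "CRITICAL"},
]

passed, blocking = scan_gate(findings)
print("gate passed:", passed)
print("blocking findings:", [f["id"] for f in blocking])
```

With the default threshold the critical finding blocks the pipeline, while the low-severity finding alone would not.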

What is the role of an image registry like Docker Hub or ECR in the CI/CD pipeline?

- An image registry serves as a central repository to store and manage container images, making them accessible for deployment to the target platform, such as Kubernetes.

What is the purpose of updating the Kubernetes YAML manifests or Helm charts after building and pushing the image?

- The purpose of updating the Kubernetes YAML manifests or Helm charts is to point them at the newly pushed image, so that the new version of the application is what gets deployed to the Kubernetes cluster.
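A minimal sketch of that manifest update, assuming the image tag is the only thing that changes: the function below rewrites the tag on the matching `image:` line of a manifest held as text. The manifest is a trimmed fragment, and `registry.example.com/demo-app`, the tags, and `set_image_tag` are all hypothetical names chosen for illustration. It uses a regular expression rather than a YAML parser to stay dependency-free, which is a simplification.

```python
import re

# Trimmed, hypothetical Deployment fragment; not a complete manifest.
MANIFEST = """\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-app
spec:
  template:
    spec:
      containers:
        - name: demo-app
          image: registry.example.com/demo-app:1.0.0
"""


def set_image_tag(manifest: str, repository: str, new_tag: str) -> str:
    """Replace the tag on every `image:` line that uses `repository`."""
    pattern = re.compile(r"(image:\s*" + re.escape(repository) + r"):[\w.\-]+")
    updated, count = pattern.subn(lambda m: f"{m.group(1)}:{new_tag}", manifest)
    if count == 0:
        raise ValueError(f"no image line found for {repository}")
    return updated


print(set_image_tag(MANIFEST, "registry.example.com/demo-app", "1.1.0"))
```

In a GitOps setup the pipeline would commit the updated file to the manifests repository instead of applying it to the cluster directly.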

What is the role of a GitOps tool like Argo CD in the CI/CD pipeline?

- Argo CD, as a GitOps tool, automates the deployment process by continuously monitoring the Git repository for changes in the manifests or Helm charts and deploying those changes to the Kubernetes cluster.
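The GitOps idea behind this answer can be modeled as a comparison between desired state (what Git declares) and live state (what the cluster runs). The sketch below is a conceptual model only and does not reflect how Argo CD is implemented; `reconcile`, the dictionaries mapping application names to image references, and the decision to list deletions are assumptions made for the example.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, str], live: Dict[str, str]) -> List[str]:
    """Return the actions needed to make the live state match the desired state."""
    actions = []
    for name, image in sorted(desired.items()):
        if name not in live:
            actions.append(f"create {name} with {image}")
        elif live[name] != image:
            actions.append(f"update {name}: {live[name]} -> {image}")
    # Anything running that Git no longer declares is listed for removal.
    for name in sorted(live.keys() - desired.keys()):
        actions.append(f"delete {name}")
    return actions


# Hypothetical states: Git declares a new tag; the cluster still runs the old one.
desired = {"demo-app": "registry.example.com/demo-app:1.1.0"}
live = {
    "demo-app": "registry.example.com/demo-app:1.0.0",
    "old-job": "registry.example.com/old-job:0.9.0",
}

for action in reconcile(desired, live):
    print(action)
```

When the two states already match, the function returns an empty list, which corresponds to an application being in sync.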

Why might someone choose to use scripted Jenkins pipelines over declarative pipelines?

- Some might choose scripted Jenkins pipelines for their flexibility, allowing for custom scripting and control over the pipeline process, although declarative pipelines are generally recommended for easier collaboration.

What are some alternatives to using GitOps for deploying changes to a Kubernetes cluster?

- Alternatives to GitOps include automating the deployment with Ansible, shell scripts, or Python scripts.

Outlines

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraMindmap

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraKeywords

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraHighlights

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraTranscripts

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraVer Más Videos Relacionados

Day-18 | What is CICD ? | Introduction to CICD | How CICD works ? | #devops #abhishekveeramalla

Complete CICD setup with Live Demo | #devops #jenkins| Write CICD with less code| @TrainWithShubham

#1 What is DevOps ,Agile and CICD

Day-21 | CICD Interview Questions | GitHub Repo with Q&A #cicd #jenkins #github #gitlab #devops

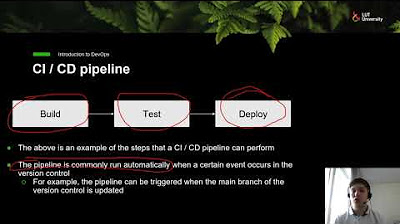

Introduction to DevOps - CI / CD

What is CICD Pipeline? CICD process explained with Hands On Project

5.0 / 5 (0 votes)