01 Notebooks and access to ADLS

Summary

TLDR: This video tutorial gives an overview of the Databricks workspace and notebooks: how to navigate the interface, create and manage notebooks, and connect to Azure Data Lake Storage (ADLS). It highlights what sets Databricks notebooks apart, in particular the ability to combine multiple programming languages within a single notebook by choosing the language per cell. The tutorial also explains how to access data in ADLS using access keys, and demonstrates how to read a file and display its contents as a formatted table.

Takeaways

- 🎮 The Databricks workspace allows users to manage multiple projects through a single interface, each accessible via a specific URL.

- 📁 Users can create and organize files in the workspace, typically using notebooks or code files, and can clone repositories from various providers like GitHub and GitLab.

- 🔍 Databricks notebooks are similar to Jupyter notebooks but allow for multiple programming languages in a single notebook, enabling more flexible coding.

- 🐍 By default, new notebooks are set to Python, but users can switch the language of an individual cell by putting a magic command such as '%sql', '%scala', or '%r' on the first line of that cell.

- 📊 Cells can be evaluated independently, and users can easily create new cells or delete existing ones using keyboard shortcuts.

- 📋 The interface includes options for managing cells, such as adding widgets, executing all cells, and undoing actions.

- 💻 Each notebook runs on an existing cluster, and a notebook that was previously closed must be explicitly attached to the desired cluster again.

- 🔗 Multiple notebooks can be opened simultaneously and can share executors while having their own Spark sessions.

- 🔐 Accessing Azure Data Lake Storage (ADLS) can be done through various methods; the simplest method involves using access keys directly in the notebook.

- 📊 The 'display' function in Databricks renders DataFrame results as a formatted table, which is easier to read than the plain-text output of the DataFrame 'show' method.

Q & A

What is a workspace in Databricks?

-A workspace in Databricks is an environment where users can store various types of files, typically notebooks or scripts. Each workspace is deployed at its own specific URL.

How can you create new cells in a Databricks notebook?

-New cells can be created by clicking on the left side of the current cell, or by using the keyboard shortcuts: 'A' to add a cell above and 'B' to add a cell below.

What is the difference between Databricks notebooks and Jupyter notebooks?

-Databricks notebooks allow combining multiple programming languages within the same notebook, whereas Jupyter notebooks are typically linked to a specific kernel that determines the programming language used.
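As a rough illustration of mixing languages, the sketch below shows a small notebook in the text layout Databricks uses when a Python notebook is exported as a source file: cells are separated by '# COMMAND ----------' lines, and cells in another language carry a '# MAGIC' prefix. In the notebook editor itself, you would simply type '%sql' or '%md' as the first line of the cell. The variable name and the query are made up for illustration.

```python
# Databricks notebook source
# Cell 1: no magic command, so it runs in the notebook's default language (Python).
greeting = "hello from Python"
print(greeting)

# COMMAND ----------

# Cell 2: the %sql magic command on the first line switches this cell to SQL.
# MAGIC %sql
# MAGIC SELECT 'hello from SQL' AS greeting

# COMMAND ----------

# Cell 3: %md turns the cell into formatted documentation instead of code.
# MAGIC %md
# MAGIC Notes about the steps above go here.
```

Outside Databricks the '# MAGIC' lines are ordinary Python comments, so only the first cell does anything when the file is run as a script.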

How do you execute a cell in a Databricks notebook?

-You can execute a cell by pressing 'Control + Enter' or 'Shift + Enter'. The former keeps the focus on the same cell, while the latter moves the focus to the next cell.

What is the purpose of the Spark session in a Databricks notebook?

-The Spark session in a Databricks notebook is used to interact with Spark and perform data operations. Each notebook has its own Spark session that allows executing Spark commands.

What does the variable 'spark' represent in a Databricks notebook?

-The variable 'spark' (lowercase) represents the Spark session that is automatically created and configured for the user when the notebook is attached to a cluster.

What is the recommended way to connect Databricks to Azure Data Lake Storage (ADLS)?

-The recommended way to connect is to create a service principal and authenticate with it; however, because of limitations in some accounts, a simpler method is often used: accessing ADLS with the storage account's access keys.

Why is it not advisable to hardcode access keys in production applications?

-Hardcoding access keys in production applications is not advisable due to security risks. Instead, it is better to store them securely in services like Azure Key Vault.
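A minimal sketch of the access-key approach, assuming the key is registered on the notebook's Spark session through the 'fs.azure.account.key.<storage-account>.dfs.core.windows.net' configuration property. The helper names, the storage account name, and the secret scope and key names are all hypothetical; the commented call at the end shows how the key could come from a Databricks secret scope (which can be backed by Azure Key Vault) instead of being pasted into the notebook.

```python
def account_key_conf_name(storage_account: str) -> str:
    """Name of the Spark config property that holds the access key for one storage account."""
    return f"fs.azure.account.key.{storage_account}.dfs.core.windows.net"


def configure_adls_access(spark, storage_account: str, access_key: str) -> None:
    """Register the access key on the given Spark session."""
    spark.conf.set(account_key_conf_name(storage_account), access_key)


# In a Databricks notebook, `spark` and `dbutils` are predefined, so a call might look like:
#   key = dbutils.secrets.get(scope="my-scope", key="adls-access-key")  # hypothetical names
#   configure_adls_access(spark, "mystorageaccount", key)               # hypothetical account
```

Passing the session in as a parameter keeps the helper usable from any notebook, since each notebook has its own Spark session.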

How can you read a CSV file from Azure Storage in Databricks?

-To read a CSV file, you specify a path that starts with 'abfss://', followed by the container name, the storage account, and the file path (in the form 'abfss://<container>@<storage-account>.dfs.core.windows.net/<path>'), and use Spark's CSV reader with schema inference enabled.
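The path layout and the read call can be sketched as follows. The container, account, and file names are invented for illustration, and the 'header' option assumes the file has a header row, which the video summary does not state. The reader function expects the notebook's Spark session to be passed in and assumes access to the storage account has already been configured.

```python
def abfss_path(container: str, storage_account: str, relative_path: str) -> str:
    """Build an abfss:// URI of the form abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    return (
        f"abfss://{container}@{storage_account}.dfs.core.windows.net/"
        f"{relative_path.lstrip('/')}"
    )


def read_csv_with_inferred_schema(spark, path: str):
    """Read a CSV file and let Spark infer column types from the data."""
    return (
        spark.read
        .option("header", True)       # assumption: first row holds column names
        .option("inferSchema", True)  # ask Spark to infer column types
        .csv(path)
    )


# Hypothetical container, account, and file names.
example_path = abfss_path("raw", "mystorageaccount", "/sales/2024.csv")
print(example_path)
```

In a notebook, the resulting DataFrame would come from 'read_csv_with_inferred_schema(spark, example_path)'.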

What does the 'display' function do in Databricks notebooks?

-The 'display' function in Databricks notebooks renders data as a formatted table, which is more readable and user-friendly than the plain-text output of the standard 'show' method.
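One way to keep code usable both inside and outside a notebook is a small wrapper, sketched here under the assumption that the caller passes in the notebook's 'display' function when it is available. The helper name 'render' is hypothetical; 'show' is the regular DataFrame method.

```python
def render(df, display_fn=None, max_rows: int = 20) -> None:
    """Render a DataFrame with the notebook's display function if given, else with df.show()."""
    if display_fn is not None:
        display_fn(df)     # rich, formatted table in a Databricks notebook
    else:
        df.show(max_rows)  # plain-text table, works in any Spark environment


# In a Databricks notebook: render(df, display)
# In a plain PySpark script: render(df)
```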

Outlines

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraMindmap

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraKeywords

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraHighlights

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraTranscripts

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraVer Más Videos Relacionados

Intro To Databricks - What Is Databricks

What is Databricks? | Introduction to Databricks | Edureka

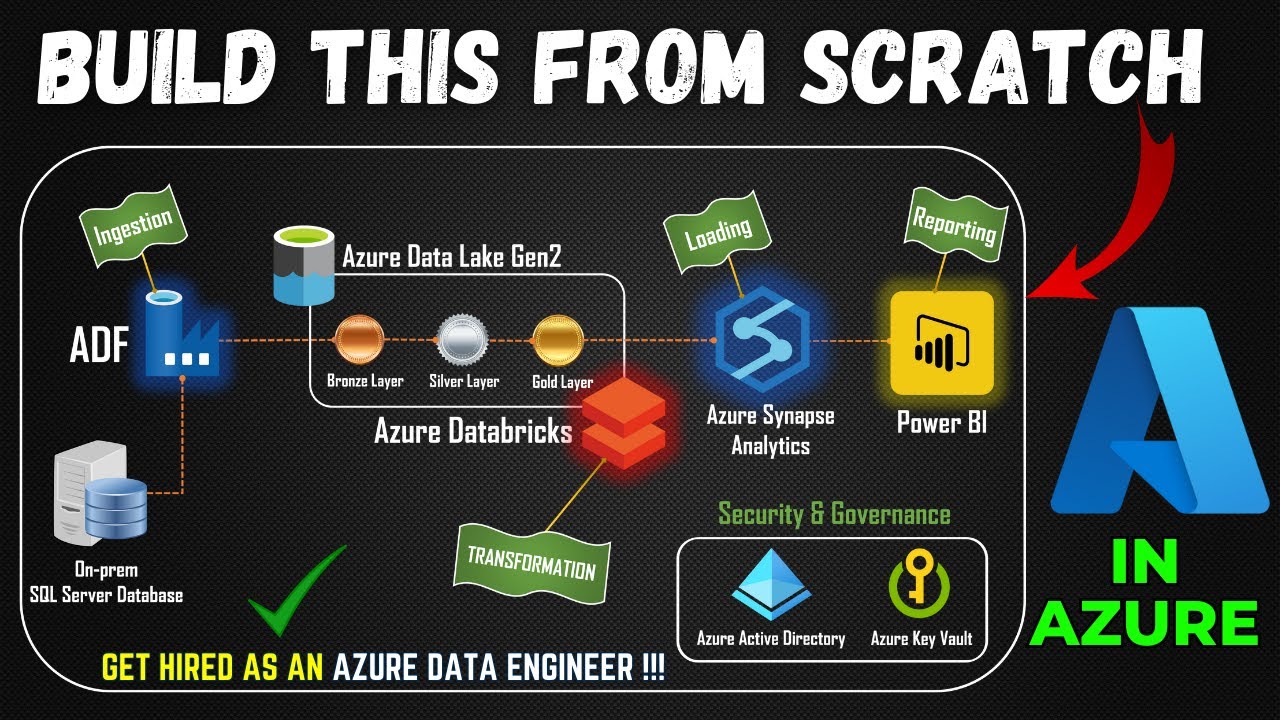

Part 1- End to End Azure Data Engineering Project | Project Overview

83. Databricks | Pyspark | Databricks Workflows: Job Scheduling

Azure Blob Storage & Angular - Using Azure Blob Storage Javascript Library with SAS Tokens

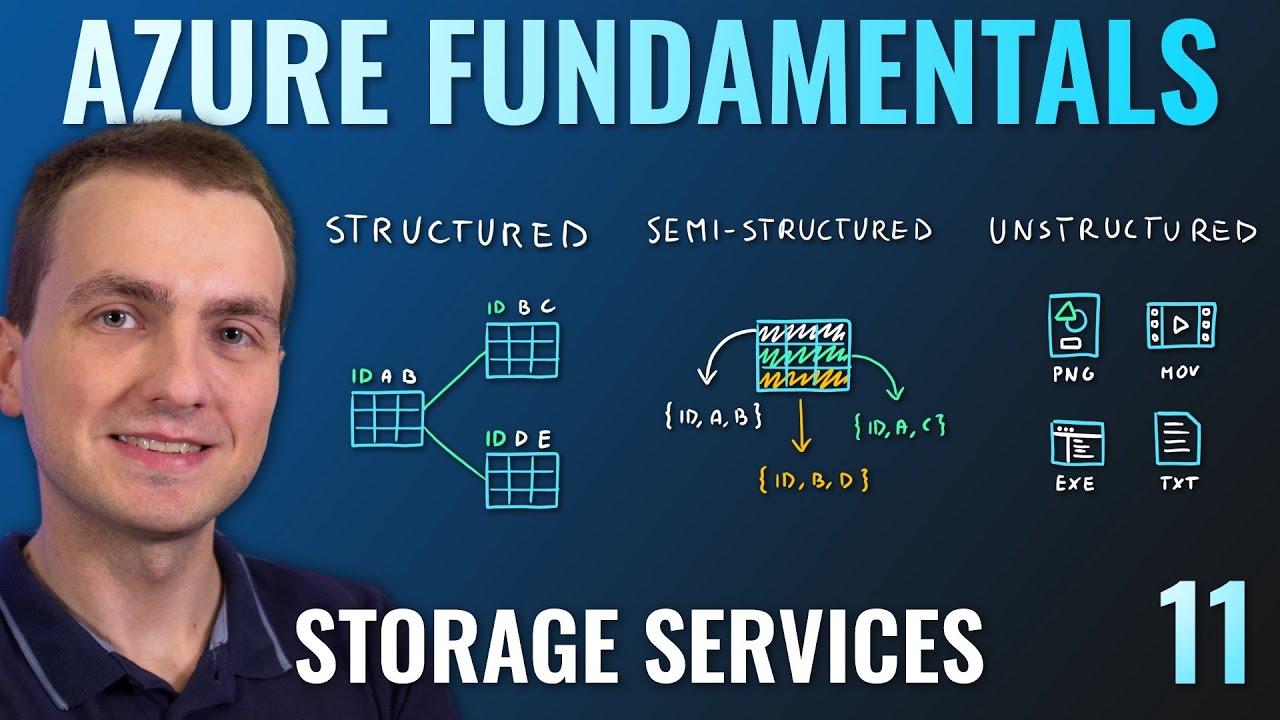

AZ-900 Episode 11 | Azure Storage Services | Blob, Queue, Table, Files, Disk and Storage Tiers

5.0 / 5 (0 votes)