Tiny URL - System Design Interview Question (URL shortener)

Summary

TLDR: The video covers the TinyURL system design interview question and walks through a robust approach to it. The core task is to create a short URL from a long URL and to redirect users from the short URL back to the original one. The speaker explains API design, schema, scalability concerns, and the use of tools like Zookeeper for distributed synchronization. The video also touches on caching strategies, database choices, and optional features such as analytics, rate limiting, and security enhancements. Throughout, it emphasizes scalability and performance, with the aim of meeting typical interviewer expectations for this kind of design question.

Takeaways

- 😀 The Tiny URL system has functional requirements (URL shortening and redirection) and non-functional requirements (low latency, high availability).

- 📊 A simple REST API with a POST endpoint for URL creation and a GET endpoint for redirection is proposed.

- 🔢 A key decision is the length of the short URL, which depends on the expected scale: at 1,000 new URLs per second, the system creates about 31.5 billion URLs per year.

- 🧮 With a 62-character set (a-z, A-Z, 0-9), a seven-character code yields 62^7 ≈ 3.5 trillion unique URLs, enough for more than 100 years at that rate.

- 🌐 A naive design puts a load balancer in front of the web servers, which fetch long URLs from a database and cache them for scalability.

- ⚠️ To avoid the single point of failure and the collision problems of a cache-based approach, a distributed Zookeeper cluster is introduced to manage the URL ranges assigned to web servers.

- 🛠️ Each web server requests its own unique range from Zookeeper, so servers never issue overlapping short URLs, and a web server failure cannot cause URL collisions.

- 🗄️ The database can be SQL (sharded Postgres) or NoSQL (Cassandra) depending on the trade-offs, with a distributed cache such as Redis or Memcached in front of it to reduce database load.

- 📈 Analytics, like counting hits per URL and storing client IP addresses, can guide performance optimizations, for example distributing caches based on where requests come from.

- 🔐 For security, a random suffix can be appended to each short URL so that URLs are not predictable; the trade-off is a longer URL.
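The random-suffix idea from the last takeaway can be sketched in a few lines. This is a minimal sketch, assuming the base code comes from a sequential counter and that a two-character suffix drawn from the same 62-character set is enough; `add_random_suffix` and its `suffix_len` parameter are hypothetical, not taken from the video.

```python
import secrets
import string

# The same 62-character set the takeaways describe: a-z, A-Z, 0-9
ALPHABET = string.ascii_lowercase + string.ascii_uppercase + string.digits


def add_random_suffix(code: str, suffix_len: int = 2) -> str:
    """Append a random suffix so sequential codes cannot be enumerated.

    Hypothetical helper: suffix_len is an assumed parameter. Each extra
    character multiplies the guessing space by 62 but lengthens the URL.
    """
    # secrets (not random) gives cryptographically strong choices
    suffix = "".join(secrets.choice(ALPHABET) for _ in range(suffix_len))
    return code + suffix


print(add_random_suffix("aaaaaab"))  # nine characters, the last two random
```

The stored key would be the full nine-character code, so lookups work unchanged. With a two-character suffix, someone who knows one short URL must guess among 62^2 = 3,844 suffixes for each neighboring counter value; longer suffixes raise that cost at the price of longer URLs.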

Q & A

What are the functional requirements of the Tiny URL system design?

- The functional requirements are to create a short URL when given a long URL and to redirect the user to the long URL when given the short URL.

What are the non-functional requirements for the system?

- The non-functional requirements are very low latency and high availability.

Why is the API design important in this system, and what endpoints are proposed?

- API design matters because it defines how clients interact with the system. The design proposes two endpoints: a POST endpoint to create a short URL and a GET endpoint that redirects users to the long URL.
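The two endpoints can be sketched without a web framework as plain handler functions over an in-memory store. This is a minimal sketch under stated assumptions: the `POST /urls` path, the `{"long_url": ...}` body, the `GET /{code}` path, the 302 status, the `Response` type, and the `tiny.example` domain are all hypothetical choices, not details from the video, and a dict stands in for the database.

```python
import itertools
import string
from dataclasses import dataclass, field

# Assumed 62-character alphabet (a-z, A-Z, 0-9) for short codes
ALPHABET = string.ascii_lowercase + string.ascii_uppercase + string.digits


def encode(n: int, length: int = 7) -> str:
    """Base62-encode a counter value into a fixed-width short code."""
    if not 0 <= n < len(ALPHABET) ** length:
        raise ValueError("counter value does not fit in the code length")
    chars = []
    for _ in range(length):
        n, rem = divmod(n, len(ALPHABET))
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))


@dataclass
class Response:
    status: int
    headers: dict = field(default_factory=dict)
    body: dict = field(default_factory=dict)


class UrlShortenerApi:
    """Hypothetical handlers for the two endpoints; a dict stands in for the database."""

    def __init__(self, base_url: str = "https://tiny.example/"):
        self.base_url = base_url
        self.store: dict[str, str] = {}
        self.counter = itertools.count(1)  # single-process stand-in for ID generation

    def post_urls(self, body: dict) -> Response:
        """POST /urls with {"long_url": ...} -> 201 and the new short URL."""
        long_url = body.get("long_url")
        if not isinstance(long_url, str) or not long_url.startswith(("http://", "https://")):
            return Response(400, body={"error": "long_url must be an http(s) URL"})
        code = encode(next(self.counter))
        self.store[code] = long_url
        return Response(201, body={"short_url": self.base_url + code})

    def get_code(self, code: str) -> Response:
        """GET /{code} -> 302 redirect to the long URL, or 404 if the code is unknown."""
        long_url = self.store.get(code)
        if long_url is None:
            return Response(404, body={"error": "not found"})
        return Response(302, headers={"Location": long_url})


api = UrlShortenerApi()
created = api.post_urls({"long_url": "https://example.com/some/very/long/path"})
print(created.body["short_url"])  # https://tiny.example/aaaaaab
code = created.body["short_url"].rsplit("/", 1)[1]
print(api.get_code(code).headers["Location"])  # https://example.com/some/very/long/path
```

A 302 (temporary) redirect keeps browsers coming back to the service on every click, which matters if hits are counted for analytics; a 301 (permanent) redirect lets browsers cache the target and reduces load instead.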

What is the purpose of choosing a short URL length, and how is it determined?

- The short URL length is chosen from the expected scale of the application. At 1,000 new URLs per second the system must handle billions of URLs per year, so a seven-character string is a good fit: with a 62-character alphabet it allows 62^7 ≈ 3.5 trillion unique combinations.
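The sizing argument is plain arithmetic, so it is easy to check. The sketch below assumes a constant 1,000 writes per second and a 365-day year; `min_length` is a hypothetical helper for finding the shortest code length that covers a given number of URLs.

```python
SECONDS_PER_YEAR = 60 * 60 * 24 * 365
WRITES_PER_SECOND = 1_000   # example write rate from the video
ALPHABET_SIZE = 62          # a-z, A-Z, 0-9

urls_per_year = WRITES_PER_SECOND * SECONDS_PER_YEAR
capacity = ALPHABET_SIZE ** 7
years_of_headroom = capacity / urls_per_year


def min_length(total_urls: int, alphabet_size: int = ALPHABET_SIZE) -> int:
    """Smallest code length whose keyspace can hold total_urls distinct codes."""
    length = 1
    while alphabet_size ** length < total_urls:
        length += 1
    return length


print(f"{urls_per_year:,} URLs per year")            # 31,536,000,000 URLs per year
print(f"{capacity:,} seven-character codes")          # 3,521,614,606,208 seven-character codes
print(f"{years_of_headroom:.0f} years of headroom")   # 112 years of headroom
print(min_length(urls_per_year))                      # 6
print(min_length(urls_per_year * 100))                # 7
```

Six characters (62^6 ≈ 56.8 billion) cover one year of traffic but run out in under two, so seven is the smallest length with long-term headroom.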

How does the system handle scalability issues in the proposed design?

- Scalability is addressed by horizontally scaling both the web servers and the cache, and by allocating value ranges through Zookeeper to prevent collisions between web servers.
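The range-allocation answer leaves implicit how a counter value from a server's range becomes a seven-character code. A common approach, assumed here rather than taken from the video, is to base62-encode the integer and left-pad it to a fixed width; the alphabet order and the `encode`/`decode` names are illustrative.

```python
import string

# Alphabet order is an assumption; any fixed permutation of the 62 characters works.
ALPHABET = string.ascii_lowercase + string.ascii_uppercase + string.digits
BASE = len(ALPHABET)  # 62
INDEX = {ch: i for i, ch in enumerate(ALPHABET)}


def encode(n: int, length: int = 7) -> str:
    """Base62-encode a non-negative counter value into a fixed-width short code."""
    if not 0 <= n < BASE ** length:
        raise ValueError(f"{n} does not fit in {length} base62 characters")
    chars = []
    for _ in range(length):
        n, rem = divmod(n, BASE)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))  # most significant digit first


def decode(code: str) -> int:
    """Inverse of encode: turn a short code back into its counter value."""
    n = 0
    for ch in code:
        n = n * BASE + INDEX[ch]
    return n


print(encode(0))                  # aaaaaaa
print(encode(125))                # aaaaacb
print(decode(encode(1_000_000)))  # 1000000
```

Because the encoding is a bijection on integers below 62^7, servers that draw values from disjoint ranges can never produce the same code.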

What role does Zookeeper play in the system, and how does it help prevent collisions?

- Zookeeper provides distributed synchronization by assigning each web server a unique range of values for URL generation. Because the ranges never overlap, no two web servers can generate the same short URL.
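The range-allocation scheme can be simulated in a single process. This is a minimal sketch: `RangeAllocator` is a hypothetical in-memory stand-in for the shared counter a Zookeeper ensemble would hold, with a lock playing the role of Zookeeper's coordination, and the range sizes are assumed values. In a real deployment each server would reach Zookeeper through a client library rather than sharing a Python object.

```python
import threading


class RangeAllocator:
    """Hypothetical stand-in for Zookeeper: hands out disjoint [start, end) ranges."""

    def __init__(self, range_size: int = 1_000_000):
        self.range_size = range_size
        self.next_start = 0
        self.lock = threading.Lock()  # plays the role of Zookeeper's atomic update

    def claim_range(self) -> tuple[int, int]:
        with self.lock:
            start = self.next_start
            self.next_start += self.range_size
            return start, start + self.range_size


class WebServer:
    """Issues values from its own range and claims a new range when it runs out."""

    def __init__(self, allocator: RangeAllocator):
        self.allocator = allocator
        self.current, self.end = allocator.claim_range()

    def next_id(self) -> int:
        if self.current == self.end:
            self.current, self.end = self.allocator.claim_range()
        value = self.current
        self.current += 1
        return value


allocator = RangeAllocator(range_size=10)  # tiny ranges so servers refill often
servers = [WebServer(allocator) for _ in range(4)]
issued: list[list[int]] = [[] for _ in servers]


def work(i: int) -> None:
    # Each thread drives one server, like independent web server processes.
    for _ in range(1_000):
        issued[i].append(servers[i].next_id())


threads = [threading.Thread(target=work, args=(i,)) for i in range(len(servers))]
for t in threads:
    t.start()
for t in threads:
    t.join()

all_ids = [x for ids in issued for x in ids]
print(len(all_ids), len(set(all_ids)))  # 4000 4000
```

If a server crashes partway through a range, the allocator never hands those values out again, so a restarted server cannot collide with anything already issued; the unused values are simply skipped.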

What are the potential drawbacks of using Zookeeper, and how can they be mitigated?

- One drawback is that if a web server dies after obtaining a range, the unused values in that range are lost. However, given the 3.5 trillion possible combinations, losing a few million URLs is not critical. Running multiple Zookeeper instances also keeps Zookeeper itself from being a single point of failure.
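To put the loss in perspective, the sketch below computes the fraction of the seven-character keyspace wasted under a pessimistic assumption: 10,000 abandoned ranges of 1,000,000 values each. Both numbers are assumptions for illustration, not figures from the video.

```python
KEYSPACE = 62 ** 7            # about 3.5 trillion seven-character codes
RANGE_SIZE = 1_000_000        # assumed values per Zookeeper-assigned range
CRASHED_SERVERS = 10_000      # assumed ranges abandoned over the system's lifetime

# Worst case: every crash happens right after claiming, wasting the whole range.
lost = RANGE_SIZE * CRASHED_SERVERS
fraction_lost = lost / KEYSPACE

print(f"{lost:,} codes lost")                  # 10,000,000,000 codes lost
print(f"{fraction_lost:.2%} of the keyspace")  # 0.28% of the keyspace
```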

Why might a NoSQL database like Cassandra be better suited for this system?

- Cassandra may be better suited because it handles large volumes of data and scales horizontally, which fits the high read and write demands of a URL shortening system.
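Horizontal scaling comes down to spreading short codes across nodes by key. The sketch below uses simple hash-modulo sharding over hypothetical node names; it is a simplification for illustration, not how Cassandra works internally. Cassandra places rows on a token ring (consistent hashing), which avoids remapping most keys when nodes are added.

```python
import hashlib
from collections import Counter

NODES = ["db-0", "db-1", "db-2", "db-3"]  # hypothetical shard names


def shard_for(code: str, nodes: list[str] = NODES) -> str:
    """Map a short code (the partition key) to the node that stores it."""
    # A stable hash, unlike Python's built-in hash() for str, which is randomized per
    # process, so every web server routes a given code to the same node.
    digest = hashlib.sha256(code.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


# Each code lives on exactly one node, so storage and traffic spread across the cluster.
load = Counter(shard_for(f"code{i:07d}") for i in range(10_000))
print(sorted(load.items()))
```

Adding a fifth node to this modulo scheme would remap about four out of five codes; consistent hashing limits the movement to roughly the new node's share, which is why production stores prefer it.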

How does the system optimize performance using caching?

- The system uses a distributed cache such as Redis or Memcached to store the mappings for frequently accessed short URLs, which reduces the load on the database and improves response times.
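The read path described here is usually cache-aside: check the cache, fall back to the database on a miss, then populate the cache. This is a minimal sketch, assuming an in-process LRU cache as a stand-in for Redis or Memcached and a plain dict as the database; `LruCache`, `ShortUrlReader`, and the capacity values are hypothetical.

```python
from collections import OrderedDict
from typing import Optional


class LruCache:
    """Small in-process LRU cache standing in for Redis or Memcached."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.items: OrderedDict[str, str] = OrderedDict()

    def get(self, key: str) -> Optional[str]:
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def put(self, key: str, value: str) -> None:
        self.items[key] = value
        self.items.move_to_end(key)
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used entry


class ShortUrlReader:
    """Cache-aside lookup: cache first, database on a miss, then fill the cache."""

    def __init__(self, db: dict[str, str], cache: LruCache):
        self.db = db
        self.cache = cache
        self.db_reads = 0

    def resolve(self, code: str) -> Optional[str]:
        long_url = self.cache.get(code)
        if long_url is not None:
            return long_url
        self.db_reads += 1
        long_url = self.db.get(code)
        if long_url is not None:
            self.cache.put(code, long_url)
        return long_url


db = {"aaaaaab": "https://example.com/a", "aaaaaac": "https://example.com/b"}
reader = ShortUrlReader(db, LruCache(capacity=1))
for code in ["aaaaaab", "aaaaaab", "aaaaaab", "aaaaaac"]:
    reader.resolve(code)
print(reader.db_reads)  # 2
```

If mappings are never edited after creation (an assumption; the video does not cover deletion or expiry), cached entries never go stale, and only capacity and the eviction policy need tuning.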

What additional features or enhancements could be considered for the system?

- Additional features include analytics (tracking popular URLs), IP address logging for optimization, rate limiting to mitigate abuse such as DDoS attacks, and security measures such as adding a random suffix to short URLs so they cannot be predicted.
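Rate limiting is often implemented as a per-client token bucket; the video does not specify an algorithm, so this is one common choice. A minimal sketch, assuming limits are keyed by client IP and enforced inside one process (a multi-server deployment would keep the buckets in a shared store); `TokenBucketLimiter`, the rate, and the burst size are hypothetical, and the clock is injectable so the behavior can be tested.

```python
import time
from typing import Callable


class TokenBucketLimiter:
    """Per-client token bucket: bursts up to `capacity`, refilled at `rate` tokens/second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets: dict[str, tuple[float, float]] = {}  # client -> (tokens, last update)

    def allow(self, client: str) -> bool:
        now = self.clock()
        tokens, last = self.buckets.get(client, (self.capacity, now))
        # Refill in proportion to elapsed time, never above capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self.buckets[client] = (tokens - 1, now)
            return True
        self.buckets[client] = (tokens, now)
        return False


fake_now = [0.0]
limiter = TokenBucketLimiter(rate=1.0, capacity=3, clock=lambda: fake_now[0])
print([limiter.allow("203.0.113.7") for _ in range(5)])  # [True, True, True, False, False]
fake_now[0] = 2.0  # two seconds later, two tokens have been refilled
print([limiter.allow("203.0.113.7") for _ in range(3)])  # [True, True, False]
```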

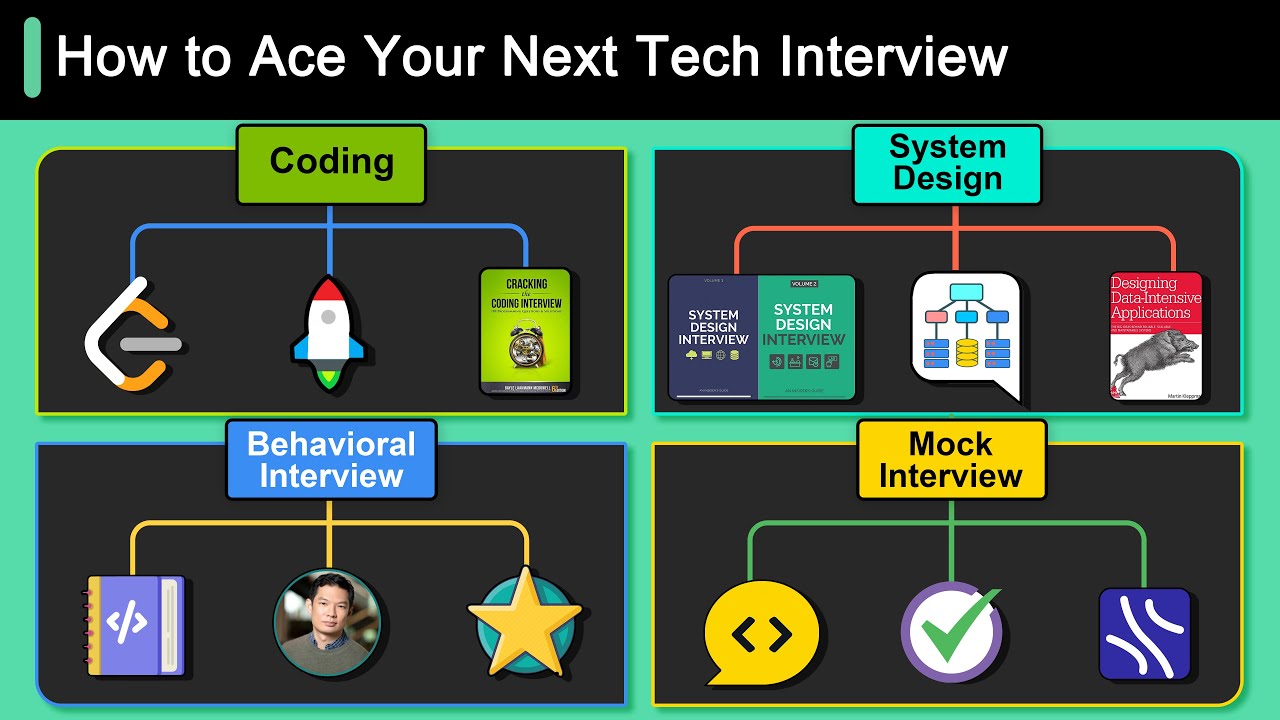

Our Recommended Materials For Cracking Your Next Tech Interview

The Best Interview Advice You Never Got (from ex-Amazon Principal Engineer)

Movie Recommendations - ML System Design Interview

Teori Sistem dan Berpikir Sistem

Os Pobres da Dinamarca. Como Vive Um Pobre No país Nórdico. Dinamarca hoje.

5 Most Common Interview Questions and Answers for any Interview | Interview Tips

5.0 / 5 (0 votes)