W05 Clip 10

Summary

TLDR: This video script covers text sampling methods for language models, emphasizing the trade-offs between coherence, diversity, and creativity. It explains the 'greedy approach', which selects the most probable token, leading to predictable outputs. 'Random weighted sampling' introduces randomness for more varied results. 'Top-k sampling' balances coherence and diversity by considering only the k most probable tokens. 'Top-p sampling', or 'nucleus sampling', dynamically selects tokens based on a cumulative probability threshold, enhancing contextual appropriateness. The script illustrates how these methods can be tailored for different applications, such as creative writing or conversational AI.

Takeaways

- 📚 Understanding different sampling methods is essential for working with language models like GPT.

- 🔍 The Greedy Approach always selects the token with the highest probability, which can lead to coherent but predictable text.

- 🎰 Random Weighted Sampling introduces randomness by drawing the next token in proportion to its probability in the model's distribution, enhancing diversity.

- 🔑 Top-K Sampling considers only the top K probable tokens at each step, balancing coherence and diversity.

- 📈 Top-P Sampling, or Nucleus Sampling, dynamically keeps the smallest set of most probable tokens whose cumulative probability reaches a threshold, P, offering flexibility.

- 📝 Top-P Sampling is effective for tasks requiring natural and coherent responses, such as conversational AI.

- 🐱 An example of Top-P Sampling involves building a context, getting a probability distribution, setting a threshold, sorting tokens, accumulating probabilities, and selecting a token.

- 🔄 The script provides a step-by-step explanation of how Top-P Sampling works in generating the next word in a sentence.

- 💡 Each sampling method offers a unique trade-off between coherence, diversity, and creativity in text generation.

- 🛠 By understanding these techniques, one can tailor language models to achieve desired output quality for specific applications.

Q & A

What is the greedy approach in text generation?

-The greedy approach is a method where the model always selects the token with the highest probability as the next word, leading to coherent but often repetitive and predictable outputs.
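
As a minimal sketch of the greedy approach, assuming the model's output is available as a plain dictionary of token probabilities: the function name `greedy_pick` and the probability values are illustrative and do not come from the video.

```python
def greedy_pick(probs):
    """Return the token with the highest probability."""
    return max(probs, key=probs.get)


# Made-up next-token distribution, for illustration only.
next_token_probs = {"mat": 0.5, "sofa": 0.2, "floor": 0.15, "roof": 0.1, "moon": 0.05}

choice = greedy_pick(next_token_probs)
print(choice)  # prints "mat"
```

Because nothing here is random, the same distribution always yields the same token, which is why greedy output tends to be predictable.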

How does random weighted sampling differ from the greedy approach?

-Random weighted sampling introduces randomness by selecting the next token based on the probability distribution, allowing less probable tokens to be chosen and leading to more diverse and creative outputs.
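
A small sketch of random weighted sampling, using Python's standard `random.Random.choices`. The helper name `weighted_sample`, the seed, and the probability values are assumptions made for illustration.

```python
import random
from collections import Counter


def weighted_sample(probs, rng):
    """Draw one token, with each token chosen in proportion to its probability."""
    tokens = list(probs)
    weights = list(probs.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


# Made-up next-token distribution, for illustration only.
next_token_probs = {"mat": 0.5, "sofa": 0.2, "floor": 0.15, "roof": 0.1, "moon": 0.05}

rng = random.Random(0)  # seeded so repeated runs give the same draws
counts = Counter(weighted_sample(next_token_probs, rng) for _ in range(10_000))
print(counts.most_common())
```

Over many draws the counts roughly track the probabilities, so low-probability tokens such as "moon" still appear occasionally. That is the source of the extra diversity.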

What is the purpose of Top K sampling in text generation?

-Top K sampling considers only the top K most probable tokens at each step, balancing coherence and diversity by limiting choices to the most likely tokens.
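
One way to sketch Top K sampling is shown below. Renormalizing the surviving probabilities before sampling is a common convention and is an assumption here, since the summary does not spell it out. The function names and probability values are illustrative.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize their probabilities."""
    if k < 1:
        raise ValueError("k must be at least 1")
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(prob for _, prob in top)
    return {token: prob / total for token, prob in top}


def top_k_sample(probs, k, rng):
    """Sample one token from the renormalized top-k distribution."""
    filtered = top_k_filter(probs, k)
    return rng.choices(list(filtered), weights=list(filtered.values()), k=1)[0]


# Made-up next-token distribution, for illustration only.
next_token_probs = {"mat": 0.5, "sofa": 0.2, "floor": 0.15, "roof": 0.1, "moon": 0.05}

filtered = top_k_filter(next_token_probs, k=3)
print(sorted(filtered))  # the three surviving tokens
print(top_k_sample(next_token_probs, k=3, rng=random.Random(0)))
```

With `k=1` this reduces to the greedy approach, and with `k` equal to the vocabulary size it reduces to random weighted sampling over the full distribution.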

How does Top P sampling work, and what is its advantage?

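-Top P sampling keeps the smallest set of most probable tokens whose cumulative probability reaches a certain threshold, P, and samples only from that set. Because the size of the set is determined dynamically at each step, it shrinks when the model is confident and grows when many continuations are plausible, which tends to produce more fluent and contextually appropriate text.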
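
The sketch below illustrates how the candidate set adapts to the shape of the distribution. It assumes the common formulation in which tokens are ranked by probability and kept until their running total reaches P. The function name `top_p_filter` and both distributions are made up for illustration.

```python
def top_p_filter(probs, p):
    """Keep the smallest high-probability set whose cumulative mass reaches p."""
    if not 0 < p <= 1:
        raise ValueError("p must be in the interval (0, 1]")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    nucleus = []
    cumulative = 0.0
    for token, prob in ranked:
        nucleus.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    # Renormalize so the kept probabilities sum to 1.
    return {token: prob / cumulative for token, prob in nucleus}


# Two made-up distributions: one confident, one uncertain.
peaked = {"mat": 0.9, "sofa": 0.05, "floor": 0.03, "roof": 0.02}
flat = {"mat": 0.3, "sofa": 0.25, "floor": 0.25, "roof": 0.2}

print(len(top_p_filter(peaked, 0.7)))  # prints 1
print(len(top_p_filter(flat, 0.7)))    # prints 3
```

The same threshold keeps a single token when the model is confident and three tokens when it is not, whereas a fixed K would keep the same number in both cases.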

Why might a language model using the greedy approach produce a straightforward narrative?

-A model using the greedy approach might produce a straightforward narrative because it always chooses the most probable token, which often follows common patterns and lacks diversity.

In creative writing, why might random weighted sampling be preferred over the greedy approach?

-Random weighted sampling might be preferred in creative writing because it allows for unexpected twists and variations, resulting in more engaging and less predictable narratives.

What is the role of the parameter K in Top K sampling?

-The parameter K in Top K sampling determines the number of most probable tokens the model considers at each step, influencing the balance between coherence and diversity in the generated text.

How does setting a Top P threshold affect the text generation process?

-Setting a Top P threshold affects the text generation by determining the smallest set of tokens to consider, based on their cumulative probability reaching the specified threshold, which influences the model's flexibility and contextual appropriateness.
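
To make the effect of the threshold concrete, the following sketch counts how many tokens survive for several values of P on a single made-up distribution. The helper `nucleus_size` and the numbers are illustrative assumptions.

```python
from itertools import accumulate


def nucleus_size(probs, p):
    """Count the tokens kept when cumulative probability must reach p."""
    ranked = sorted(probs, reverse=True)
    for size, cumulative in enumerate(accumulate(ranked), start=1):
        if cumulative >= p:
            return size
    # Floating-point sums can fall just short of p; keep every token then.
    return len(ranked)


# Made-up next-token probabilities, for illustration only.
probs = [0.5, 0.2, 0.15, 0.1, 0.05]

sizes = {p: nucleus_size(probs, p) for p in (0.4, 0.8, 0.9, 0.99)}
print(sizes)  # prints {0.4: 1, 0.8: 3, 0.9: 4, 0.99: 5}
```

A low threshold behaves almost like the greedy approach, while a threshold near 1 approaches sampling from the full distribution.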

Can you provide an example of how Top P sampling might lead to more natural text generation?

-In conversational AI, Top P sampling can lead to more natural text generation by dynamically adapting to the context: it narrows the candidate set when one continuation is clearly best and widens it when several are plausible, which can result in responses that are both coherent and contextually relevant.

What is the significance of the initial context in the Top P sampling example provided?

-The initial context 'the cat sat on the' in the Top P sampling example is significant because it serves as the basis for the model to generate a probability distribution of possible next words, which is essential for the sampling process.
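
The walkthrough can be traced in a few lines of Python. Only the context string comes from the example. The candidate words, their probabilities, the threshold value, and the seed are hypothetical, since a real model would supply the distribution.

```python
import random

# Step 1: build the context (from the example in the text).
context = "the cat sat on the"

# Step 2: get a probability distribution over next words.
# Hypothetical values; a real language model would produce these.
distribution = {
    "mat": 0.40, "sofa": 0.25, "floor": 0.15,
    "bed": 0.10, "roof": 0.06, "moon": 0.04,
}

# Step 3: set the Top P threshold (an arbitrary choice for this sketch).
top_p = 0.75

# Step 4: sort the words from most to least probable.
ranked = sorted(distribution.items(), key=lambda item: item[1], reverse=True)

# Step 5: accumulate probabilities until the threshold is reached.
nucleus = []
cumulative = 0.0
for word, prob in ranked:
    nucleus.append(word)
    cumulative += prob
    if cumulative >= top_p:
        break

# Step 6: select one word from the nucleus, weighted by probability.
rng = random.Random(42)  # seeded only to make the run repeatable
weights = [distribution[word] for word in nucleus]
next_word = rng.choices(nucleus, weights=weights, k=1)[0]

print(nucleus)  # prints ['mat', 'sofa', 'floor']
print(context, next_word)
```

With these numbers the running total goes 0.40, 0.65, 0.80, so the third word is the first to push the total past 0.75 and the remaining words are never considered.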

How does the Top P sampling method ensure a balance between coherence and creativity?

-Top P sampling ensures a balance by considering a dynamic set of tokens based on their cumulative probability, allowing for creativity while maintaining a threshold that ensures the generated text remains coherent and contextually relevant.

Mejorar ahoraVer Más Videos Relacionados

How to tweak your model in Ollama or LMStudio or anywhere else

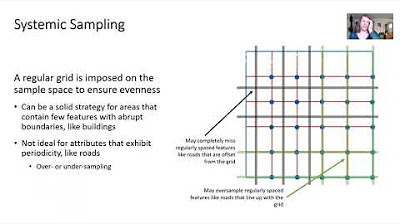

Spatial Sampling & Interpolation

Aliasing and the Sampling Theorem

Why Does Diffusion Work Better than Auto-Regression?

Stanford CS25: V1 I Transformers in Language: The development of GPT Models, GPT3

Whitepaper Companion Podcast - Foundational LLMs & Text Generation

5.0 / 5 (0 votes)