Sagemaker Model Monitor - Best Practices and gotchas

Summary

TLDRIn this episode of 'Tech with Nila', viewers are introduced to advanced practices for utilizing Amazon SageMaker Model Monitor, a tool for tracking data and model quality. The session assumes a pre-existing understanding of Model Monitor and discusses its integration with SageMaker Clarify for bias and explainability reports. The video outlines the types of monitoring available, the process of setting up baselines, and the importance of real-time monitoring with ground truth labels. It also touches on customization options, limitations, and the benefits of SageMaker's managed services for ML engineers and data scientists.

Takeaways

- 🛠️ SageMaker Model Monitor is an advanced tool for monitoring data quality, model quality, and bias in machine learning models, requiring at least a level 200 understanding of the service.

- 🔗 SageMaker Clarify and Model Monitor can be used together, with Clarify providing bias and explainability reports, and Model Monitor performing ongoing monitoring tasks.

- 📈 Model Monitor categorizes monitoring into two types: one requiring ground truth labels for model quality and bias drift, and one not requiring ground truth for data quality and feature attribution drift.

- 📊 To set up Model Monitor, you first create a baseline from the training dataset, which computes metrics and constraints, followed by scheduling monitoring jobs for ongoing data capture and analysis.

- 📝 For monitoring jobs that require ground truth, an additional ETL process is needed to merge production data with true labels before Model Monitor can compare and report discrepancies.

- 👥 Different personas prefer different access methods; machine learning engineers or data scientists might prefer AWS SDKs and CLI, while business analysts might prefer the SageMaker UI.

- 🚫 Limitations exist with the UI, such as the inability to delete endpoints with Model Monitor enabled directly from the console, requiring CLI or API use instead.

- 📊 Model Monitor computes metrics and statistics for tabular data only, meaning it can work with image classification models but will focus on output analysis rather than input.

- ⚙️ Custom VPC configurations may be needed for SageMaker Studio launched in a custom Amazon VPC to ensure Model Monitor can communicate with necessary AWS services.

- 💻 Pre-processing and post-processing scripts can be used with Model Monitor to transform data or extend monitoring capabilities, but are limited to data and model quality jobs.

- 🔄 Real-time monitoring can be achieved by quickly generating ground truth labels, potentially using Amazon's Augmented AI service to add human-in-the-loop capabilities.

- 🔄 Automating actions based on Model Monitor's alerts and data is crucial for effective deployment and ensures continuous model performance monitoring and improvement.

Q & A

What is the prerequisite knowledge required for this session on SageMaker Model Monitor?

-The prerequisite knowledge for this session is at least a level 200 understanding of Model Monitor.

What does SageMaker Clarify provide in the context of model monitoring?

-SageMaker Clarify is a container that produces bias and explainability reports, which can be used independently or in conjunction with SageMaker Model Monitor for recurring monitoring tasks.

What are the two types of monitoring jobs in SageMaker Model Monitor that require the help of SageMaker Clarify?

-The two types of monitoring jobs that require SageMaker Clarify are model quality drift and bias drift, as they both need to compute final matrices based on ground truth labels.

How does SageMaker Model Monitor categorize the monitoring process?

-SageMaker Model Monitor categorizes the monitoring process into two types: one that requires ground truth and one that does not require ground truth.

What is the first step in the monitoring process when using SageMaker Model Monitor?

-The first step is to create a baseline from the dataset used to train the model, which computes the matrices and suggested constraints for the matrices.

What are the limitations of using the SageMaker UI for model monitoring?

-Using the SageMaker UI, certain actions like deleting an interface endpoint with model monitoring enabled cannot be done directly in the console and require CLI or API usage.

How does SageMaker Model Monitor handle data capture for non-tabular data like images?

-For non-tabular data like images, SageMaker Model Monitor calculates matrices and statistics for the output (the label based on the image), not the input.

What is the recommended disk utilization percentage to ensure continuous data capture by SageMaker Model Monitor?

-It is recommended to keep disk utilization below 75% to ensure continuous data capture by SageMaker Model Monitor.

How can real-time monitoring be achieved with SageMaker Model Monitor?

-Real-time monitoring can be achieved by quickly generating ground truth labels, which can be facilitated by leveraging Amazon's Augmented AI service to add human-in-the-loop capabilities.

What customization options does SageMaker Model Monitor offer for pre-processing and post-processing?

-SageMaker Model Monitor allows the use of plain Python scripts for pre-processing to transform input data or for post-processing to extend the code after a successful monitoring run. This can be used for data transformation, feature exclusion, or applying custom sampling strategies.

What are the requirements for creating a data quality baseline in SageMaker Model Monitor?

-For a data quality baseline, the schema of the training dataset and inference dataset should be the same, with the same number and order of features. The first column should refer to the prediction or output, and column names should use lowercase letters and underscores only.

How can the generated constraints from the baseline job be reviewed and modified?

-The generated constraints can be reviewed and modified based on domain and business understanding to make the constraints more aggressive or relaxed, controlling the number and nature of violations.

What is the significance of setting start and end time offsets in monitoring jobs that require ground truth?

-Start and end time offsets are important to select the relevant data for monitoring jobs that require ground truth, ensuring that only data for which the ground truth is available is used.

How can SageMaker Pipeline be used for on-demand model monitoring?

-SageMaker Pipeline can be used to set up on-demand monitoring jobs by leveraging the 'check_job_config' method for quality checks and 'clarify_check_config' for bias and explainability checks, making it serverless and cost-effective.

What are the extension points provided by SageMaker Model Monitor for bringing your own container?

-SageMaker Model Monitor provides extension points that allow you to adhere to the contract input and output to leverage the model monitor. The container can analyze data in the 'dataset_source_path' and write the report to the 'output_path', with the ability to write any report that suits your needs.

How can the data from SageMaker Model Monitor be used to automate actions like model retraining?

-The data from SageMaker Model Monitor can trigger CloudWatch alarms and EventBridge rules when drifts are detected and matrix constraints are exceeded, which can in turn start a model retraining pipeline, automating the retraining process based on monitoring violations.

Outlines

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenMindmap

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenKeywords

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenHighlights

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenTranscripts

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenWeitere ähnliche Videos ansehen

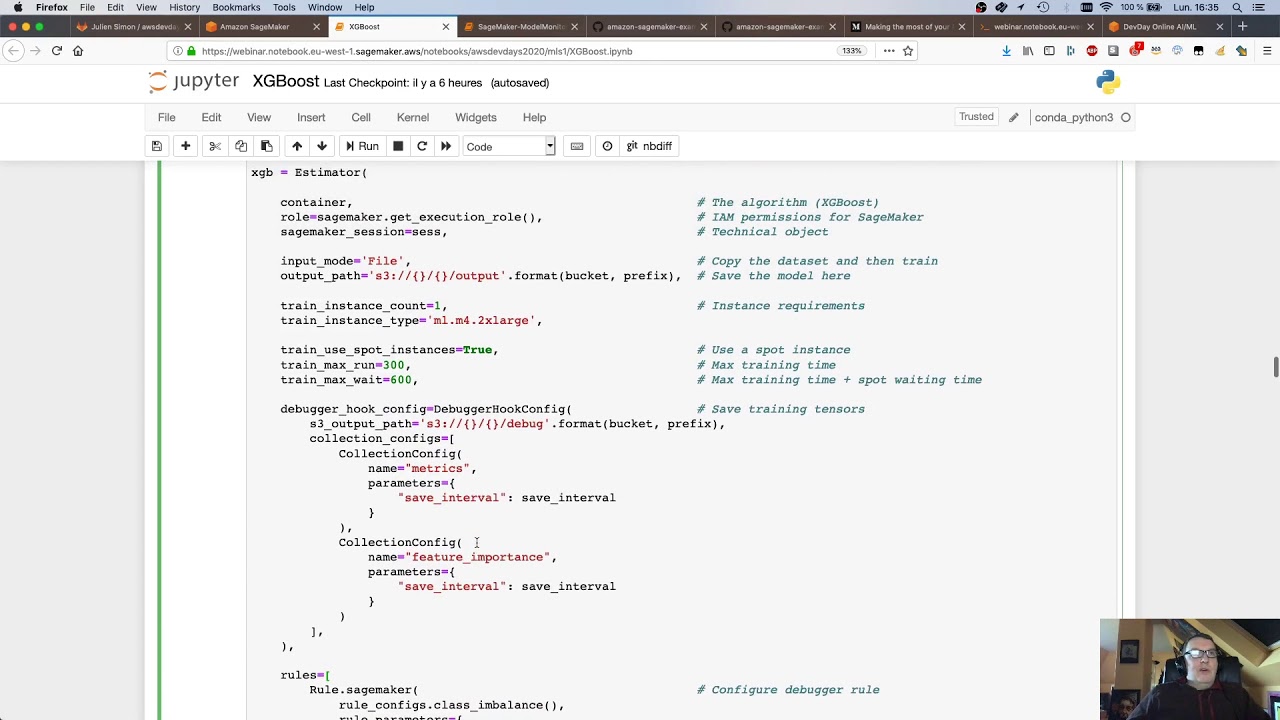

AWS DevDays 2020 - Deep Dive on Amazon SageMaker Debugger & Amazon SageMaker Model Monitor

Model Monitoring with Sagemaker

Introduction to Amazon SageMaker

Helical Model of Communication || Communication: Concepts & Processes || Ms. Honey Shah || BA(JMC)

Estimating Healthcare Receivables using Amazon SageMaker Canvas | Amazon Web Services

How to grow your Amazon influencer status

5.0 / 5 (0 votes)