How Prometheus Monitoring works | Prometheus Architecture explained

Summary

TL;DR: This video covers Prometheus, a monitoring tool widely used in dynamic container environments like Kubernetes and Docker Swarm. It outlines the Prometheus architecture, including the server, its time-series database, and its data retrieval worker. It explains how Prometheus collects metrics from targets with a pull model, using exporters for services that lack native support. It also touches on alerting, data storage, and querying metrics with PromQL. The video promises practical examples and stresses how Prometheus automates monitoring and alerting in modern DevOps to keep services available.

Takeaways

- 📈 **Prometheus Overview**: Prometheus is a monitoring tool designed for dynamic container environments like Kubernetes and Docker Swarm, but also applicable to traditional infrastructure.

- 🔍 **Use Cases**: It's used for monitoring servers, applications, and services, providing insights into hardware and application levels, which is crucial for maintaining uptime and performance.

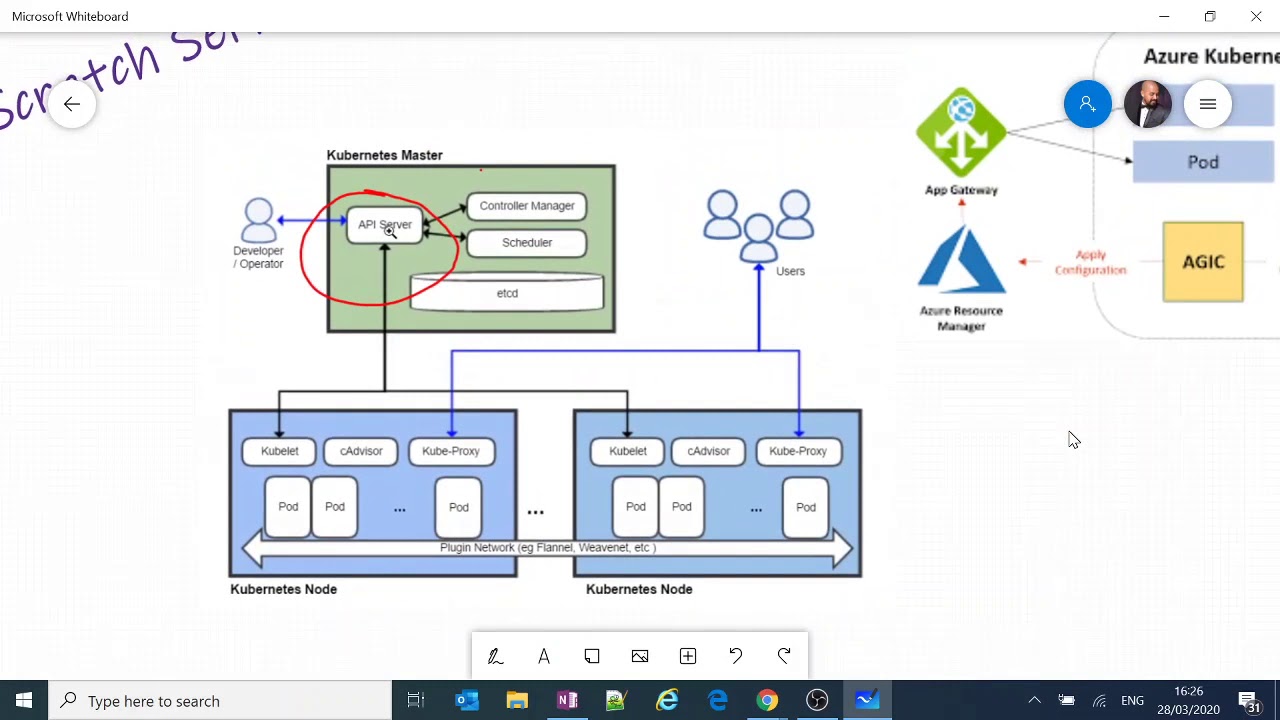

- 🏗️ **Architecture**: Prometheus consists of a server, time series database, data retrieval workers, and a web server/API for querying stored data.

- 🎯 **Key Characteristics**: It's known for its pull model of data collection, which reduces network load and allows for easier service status detection.

- 🚀 **Popularity in DevOps**: It's become a mainstream choice in container and microservice monitoring due to its automation capabilities and efficiency.

- 📊 **Data Collection**: Prometheus collects metrics from targets via an HTTP endpoint, which requires the target to expose a `/metrics` endpoint.

- 🔌 **Exporters**: For services without native Prometheus support, exporters are used to translate metrics into a format Prometheus can understand.

- 💾 **Storage**: It stores data in a local on-disk time series database and can integrate with remote storage systems.

- 📑 **Configuration**: Prometheus uses a `prometheus.yml` file to configure target scraping and rule evaluation intervals.

- 🔥 **Alerting**: The Prometheus server evaluates the defined alert rules, and the Alertmanager component handles the resulting alerts and sends notifications through channels such as email or Slack.

- 🔄 **Scalability**: Prometheus is reliable and self-contained, but because each server is standalone, scaling it across many servers and aggregating their metrics can be challenging.

Q & A

What is Prometheus and why is it important in modern infrastructure?

- Prometheus is a monitoring tool designed for highly dynamic container environments like Kubernetes and Docker Swarm. It's important because it helps monitor and manage complex infrastructure, giving insight at both the hardware and application level to prevent downtime and keep operations running smoothly.

What are the different use cases of Prometheus?

- Prometheus can be used for monitoring containerized applications, traditional non-container infrastructure, and microservices. It's particularly useful in environments where there's a need for automated monitoring and alerting to maintain system reliability and performance.

What is the architecture of Prometheus?

- The Prometheus architecture centers on the Prometheus server, which contains a time series database for storing metrics, a data retrieval worker for pulling metrics from targets, and a web server/API for querying the stored data. Around the server sit exporters for targets without native Prometheus support and client libraries for exposing custom application metrics.

How does Prometheus collect metrics from targets?

- Prometheus collects metrics by pulling data from HTTP endpoints exposed by targets. This requires the target to expose a `/metrics` endpoint in a format that Prometheus understands. Exporters are used to convert metrics from services that don't have native Prometheus endpoints.
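
To make the `/metrics` requirement concrete, here is a minimal sketch of a target that serves metrics in the Prometheus text exposition format, using only the Python standard library. The metric names, values, and port 8000 are assumptions made up for illustration; a real application would more likely use an official client library than hand-render the format.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical metrics: name -> (type, help text, current value).
METRICS = {
    "app_requests_total": ("counter", "Total requests handled.", 42),
    "app_queue_length": ("gauge", "Jobs currently waiting in the queue.", 3),
}


def render_metrics(metrics):
    """Render metrics as HELP/TYPE/sample lines in the text exposition format."""
    lines = []
    for name, (mtype, help_text, value) in metrics.items():
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} {mtype}")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Answers GET /metrics with the rendered metrics; everything else is 404."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(METRICS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Block forever, serving /metrics on the given (assumed) port."""
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

Calling `serve()` starts the endpoint; Prometheus would then be pointed at this host and port as a scrape target.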

What is the significance of the pull model in Prometheus?

- The pull model reduces the load on the infrastructure because services don't have to push their metrics. It also simplifies monitoring and makes it easier to detect that a service is down, because a down service fails to respond when Prometheus tries to scrape it.
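
The down-detection side effect of pulling can be sketched in a few lines. This is not how the Prometheus server is implemented, only an illustration of the idea: the scraper records 1 for a target that answered and 0 for one that did not, which mirrors the `up` series Prometheus keeps per target. The target addresses and the `fetcher` parameter are assumptions for the example.

```python
import urllib.request


def fetch(url, timeout=5.0):
    """Default fetcher: HTTP GET that returns the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def scrape_once(targets, fetcher=fetch):
    """Pull /metrics from every target once.

    Returns a dict mapping each target to 1 (scrape succeeded) or
    0 (scrape failed), so an unreachable service shows up immediately.
    """
    up = {}
    for target in targets:
        try:
            fetcher(f"http://{target}/metrics")
            up[target] = 1
        except OSError:
            # Connection refused, timeouts, and urllib's URLError/HTTPError
            # are all subclasses of OSError.
            up[target] = 0
    return up
```

Because the scraper initiates every request, no extra heartbeat mechanism is needed: a failed pull is itself the signal.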

How does Prometheus handle short-lived targets that aren't around long enough to be scraped?

- For short-lived targets like batch jobs, Prometheus offers a component called Pushgateway. These jobs push their metrics to the Pushgateway, which holds them so that Prometheus can scrape them from there like any other target.
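
As a rough sketch of what a batch job does at the end of its run, the snippet below builds an HTTP `PUT` to the Pushgateway's `/metrics/job/<job>` path with the metrics in text format as the body. The gateway address `pushgateway:9091`, the job name, and the metric are assumptions for illustration; the request is only constructed here, not sent.

```python
import urllib.parse
import urllib.request


def build_push_request(gateway, job, metrics_text):
    """Build (but do not send) a PUT that replaces the metrics stored for `job`."""
    url = f"http://{gateway}/metrics/job/{urllib.parse.quote(job, safe='')}"
    return urllib.request.Request(
        url,
        data=metrics_text.encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )


# Hypothetical batch job reporting how long its last run took.
request = build_push_request(
    "pushgateway:9091",
    "nightly_backup",
    "backup_duration_seconds 12.5\n",
)
```

Passing `request` to `urllib.request.urlopen` would perform the push against a reachable Pushgateway.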

What is the role of the Prometheus Alertmanager?

- The Prometheus server evaluates the alert rules and fires an alert when a rule's condition is met. The Alertmanager receives those alerts and sends notifications through channels like email or Slack.
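
The flow from rule to notification can be illustrated with a toy model. In a real setup the rules live in Prometheus rule files and the routing in the Alertmanager configuration; the Python below only mimics the two steps. The alert name `HighCpuUsage`, the threshold, the sample values, and the severity-to-channel mapping are all hypothetical.

```python
def evaluate_rule(samples, threshold):
    """Rule evaluation step: one alert per instance whose value exceeds the threshold."""
    return [
        {"alertname": "HighCpuUsage", "instance": instance, "severity": "critical"}
        for instance, value in sorted(samples.items())
        if value > threshold
    ]


# Hypothetical routing table: alert severity -> notification channel.
ROUTES = {"critical": "slack", "warning": "email"}


def route(alerts, routes=ROUTES, default="email"):
    """Notification step: pick a channel for each alert based on its severity label."""
    return [(routes.get(alert["severity"], default), alert["instance"]) for alert in alerts]


# Made-up CPU utilisation per instance, as a fraction of 1.0.
cpu_usage = {"node-a": 0.95, "node-b": 0.40}
notifications = route(evaluate_rule(cpu_usage, threshold=0.9))
```

Here only `node-a` crosses the threshold, so `notifications` holds a single entry for the Slack channel.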

How does Prometheus store its data and how can it be accessed?

- Prometheus stores metrics data in a local on-disk time series database in a custom format. It can also integrate with remote storage systems. The data can be queried through its server API using the PromQL query language or visualized through tools like Grafana.
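
Querying through the server API might look like the following sketch, which builds a URL for the instant-query endpoint `/api/v1/query` and parses the JSON that comes back. The server address `localhost:9090`, the PromQL expression, and the sample response are assumptions written by hand for illustration, not output captured from a real server.

```python
import json
import urllib.parse


def build_query_url(server, promql):
    """Build the instant-query URL, URL-encoding the PromQL expression."""
    return f"http://{server}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_instant_vector(payload):
    """Map each result's `instance` label to its sample value as a float."""
    doc = json.loads(payload)
    if doc.get("status") != "success":
        raise ValueError(f"query failed: {doc.get('error', 'unknown error')}")
    return {
        result["metric"].get("instance", ""): float(result["value"][1])
        for result in doc["data"]["result"]
    }


url = build_query_url("localhost:9090", 'up{job="node"}')

# Hand-written example of a successful instant-vector response.
SAMPLE_RESPONSE = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"__name__": "up", "instance": "app:8000"},
             "value": [1700000000.0, "1"]},
        ],
    },
})
values = parse_instant_vector(SAMPLE_RESPONSE)
```

Tools like Grafana issue the same kind of PromQL queries against this API when rendering dashboards.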

What is the difficulty with scaling Prometheus?

- Prometheus is designed to stay reliable even when other systems have outages, so each Prometheus server is standalone and self-contained. The trade-off is that scaling is harder: aggregating metrics from multiple servers requires building out additional, potentially complex infrastructure.

How is Prometheus integrated with container environments like Docker and Kubernetes?

- Prometheus components are available as Docker images, making it easy to deploy in Kubernetes or other container environments. It integrates well with Kubernetes, providing cluster node resource monitoring out of the box.
