43 R CNNs, SSDs, and YOLO

Summary

TLDRThis video explores the evolution of object detection in computer vision, from traditional methods like HOG and SVM to deep learning approaches. It covers the breakthrough of R-CNNs, their evolution into Fast R-CNNs and Faster R-CNNs, and introduces Single Shot Detectors (SSDs) and the revolutionary YOLO algorithm. The video explains how these advanced detectors work, their speed and accuracy, and touches on metrics like Intersection over Union (IoU) and Mean Average Precision (mAP). It concludes with a look at the latest advancements in YOLO, setting the stage for implementing these detectors using Python and OpenCV.

Takeaways

- 🔎 **Object Detection Overview**: Object detection is a crucial part of computer vision that combines object classification and localization to identify and locate objects within images.

- 🐾 **Historical Context**: Early object detection methods like Haar cascade classifiers and Histogram of Gradients (HOG) with SVMs have been largely replaced by deep learning approaches due to their inefficiency with multiple object classes.

- 🚀 **Deep Learning Breakthrough**: In 2014, the introduction of Region-based Convolutional Neural Networks (R-CNNs) marked a significant advancement in object detection, achieving high performance in the PASCAL VOC challenge.

- 📈 **Selective Search Algorithm**: R-CNNs use selective search to propose regions of interest within an image, which are then classified by a CNN and refined using a linear regression for bounding boxes.

- 📏 **Intersection over Union (IoU)**: IoU is a metric used to evaluate the accuracy of object detection by measuring the overlap between the predicted bounding box and the ground truth.

- 🏎️ **Fast R-CNN**: An evolution of R-CNN, Fast R-CNN improved speed by reducing the need for multiple model training and execution, using region of interest (ROI) pooling.

- 🚀 **Faster R-CNN**: Building on Fast R-CNN, Faster R-CNN further increased speed by eliminating the need for selective search, thus speeding up the region proposal stage.

- 🏁 **Single Shot Detectors (SSDs)**: SSDs introduced multiscale features and default boxes to significantly increase detection speed, achieving near real-time performance with minimal accuracy loss.

- 🌐 **YOLO (You Only Look Once)**: YOLO revolutionized object detection by using a single neural network applied to the full image, allowing for fast and efficient detection by predicting bounding boxes and class probabilities directly.

- 🏆 **YOLOv3**: The latest version of YOLO, YOLOv3, includes improvements like multi-scale training and batch normalization, enhancing its ability to detect objects of various sizes and resolutions.

Q & A

What is object detection in the context of computer vision?

-Object detection is a crucial aspect of computer vision that combines object classification and localization. It not only identifies the presence of an object within an image but also determines the region or bounding box of the object, allowing for multi-class detection unlike earlier single-class detectors.

How do traditional non-learning methods for object detection compare to deep learning methods?

-Traditional methods such as Haar cascade classifiers and Histogram of Gradients (HOG) with Linear SVMs are considered outdated compared to deep learning methods. Deep learning object detectors significantly outperform these traditional methods, offering better accuracy and applicability in a wider range of scenarios.

What was the breakthrough in deep learning object detection in 2014?

-In 2014, the introduction of Regions with CNNs (R-CNNs) marked a significant breakthrough in deep learning object detection. R-CNNs achieved remarkably high performance in the PASCAL VOC challenge, a benchmark in computer vision for object detection.

How does the Selective Search algorithm contribute to object detection?

-The Selective Search algorithm contributes to object detection by segmenting the image into different regions based on similarities in color or texture. It proposes regions of interest that are then passed through a CNN for classification, thus streamlining the process of generating bounding box proposals.

What is Intersection over Union (IoU) and why is it important in object detection?

-Intersection over Union (IoU) is a metric used to measure the accuracy of an object detector by calculating the overlap between the predicted bounding box and the ground truth box. An IoU score above 0.5 is generally considered acceptable, indicating a good match between the predicted and actual object location.

How does the Mean Average Precision (mAP) metric help in evaluating object detectors?

-Mean Average Precision (mAP) is a metric used to evaluate the performance of object detectors, especially when dealing with multiple detections for the same object. It provides a measure of accuracy by considering both the precision and recall of the detector across various classes of objects.

What improvements did Fast R-CNN bring over the original R-CNN?

-Fast R-CNN improved upon the original R-CNN by reducing the computational overhead. It eliminated the need for separate models for feature extraction, classification, and bounding box regression by running the CNN only once over the entire image and then applying Region of Interest (ROI) pooling.

How do SSDs achieve near real-time performance in object detection?

-SSDs (Single Shot MultiBox Detectors) achieve near real-time performance by using multiscale features and default boxes, which allow for efficient processing without the need for region proposal networks. They also reduce the resolution of images fed into the classifier, which contributes to their speed.

What is the core concept behind YOLO (You Only Look Once) object detection?

-The core concept behind YOLO is to use a single neural network that processes the full image at once, allowing it to reason globally about the image content. It divides the image into a grid and predicts bounding boxes and class probabilities for each grid cell, which simplifies the detection process and enables fast performance.

How does YOLO's architecture differ from other object detection methods?

-YOLO's architecture differs by using a single, unified model that combines region proposal, object recognition, and classification into one full convolutional neural network. This design allows for fast and efficient object detection without the need for multiple stages or models, which is a departure from methods like R-CNNs and SSDs.

Outlines

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenMindmap

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenKeywords

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenHighlights

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenTranscripts

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenWeitere ähnliche Videos ansehen

On-device object detection: Introduction

Chapter 5 - Video 2 - Image Detection Machine Learning

Best Free resource I used to learn AI/ML | IIT DELHI

How computers learn to recognize objects instantly | Joseph Redmon

Object Detection Using OpenCV Python | Object Detection OpenCV Tutorial | Simplilearn

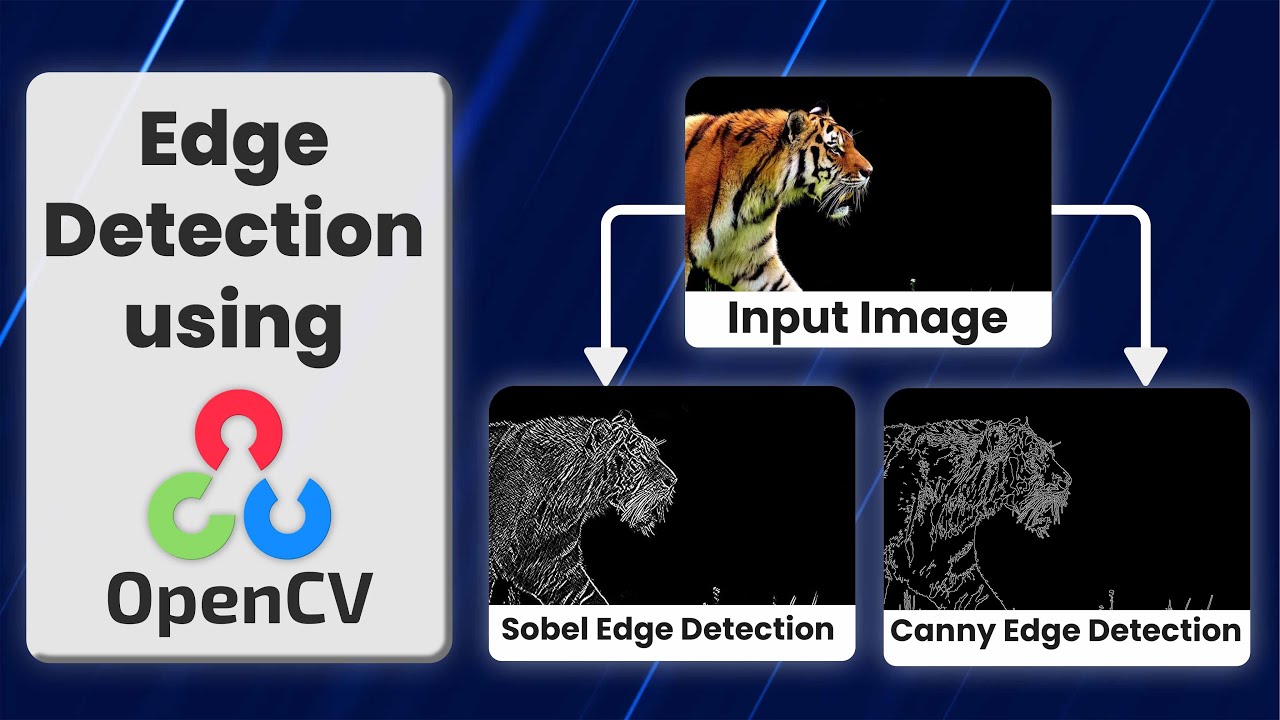

Edge Detection Using OpenCV Explained.

5.0 / 5 (0 votes)