On-device object detection: Introduction

Summary

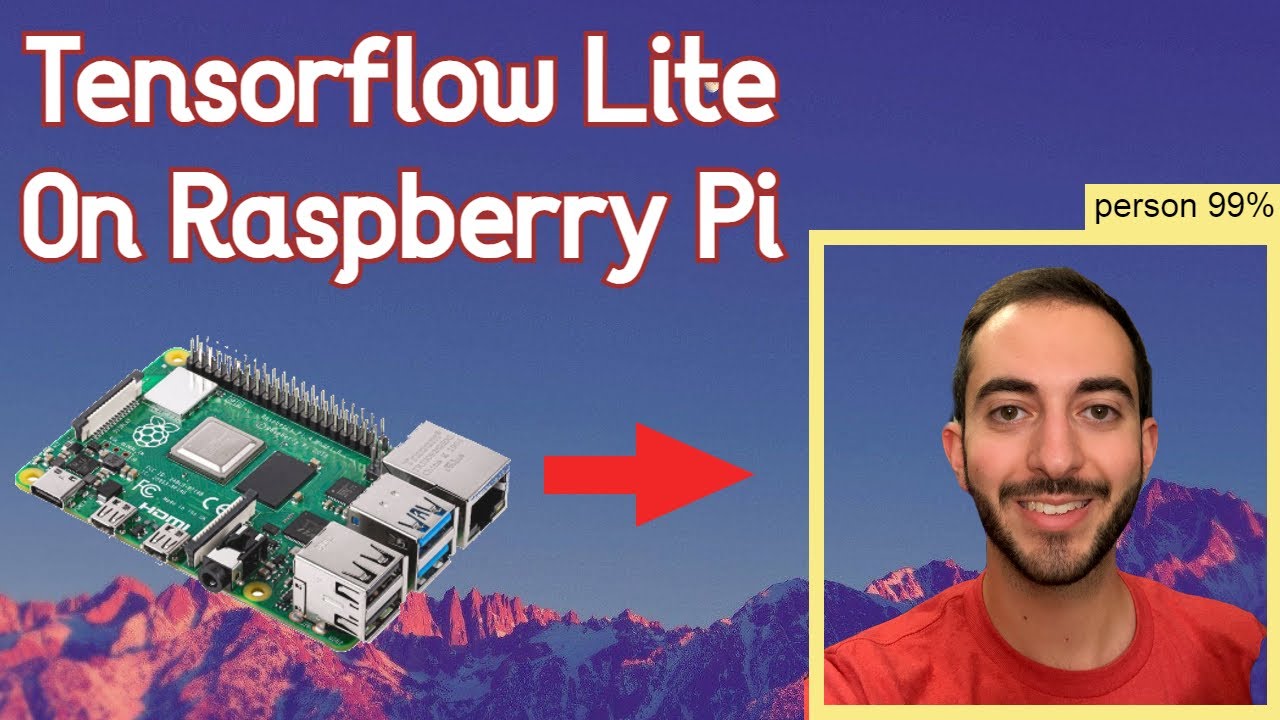

TLDR: In this video, Khanh, a developer advocate from the TensorFlow team, introduces object detection, a computer vision task that identifies and locates objects within images. Unlike image classification, which only labels objects, object detection provides the position of each object in the form of a bounding box. The video explains two approaches to implementing object detection in mobile apps: using pre-trained models or training a custom model. Khanh outlines the steps for both methods, including data collection, model training, and deployment using TensorFlow Lite. The video sets the stage for future tutorials on object detection.

Takeaways

- 😀 Object detection is a computer vision task that analyzes images to identify and locate objects using bounding boxes.

- 😀 The difference between object detection and image classification is that the former identifies object locations, while the latter only provides labels.

- 😀 Image segmentation is a related, finer-grained task: it also locates objects, but marks them with per-pixel masks instead of bounding boxes.

- 😀 Object detection can be used in various real-world applications, such as detecting empty parking spots or identifying road problems like potholes.

- 😀 There are two main ways to add object detection to mobile apps: using a pre-trained model or training and deploying your own model.

- 😀 Pre-trained models are recommended for general object detection tasks, such as recognizing home goods, food, or plants.

- 😀 Training your own model is necessary if you need to detect domain-specific objects, like different food ingredients or types of road damage.

- 😀 ML Kit’s Object Detection and Tracking API makes it easy to integrate a pre-trained object detection model into a mobile app with minimal code.

- 😀 TensorFlow Lite Model Maker can be used to train a custom object detection model if a pre-trained model doesn't meet your needs.

- 😀 Collecting and labeling a training dataset is one of the most time-consuming steps when training your own object detection model.

- 😀 The TensorFlow Lite Task Library simplifies deploying a custom model to a mobile app, so integration takes only a few lines of code.

Q & A

What is object detection?

-Object detection is a computer vision task that analyzes an input image and returns a list of known objects, along with their labels and locations in the image. The objects' locations are represented as bounding boxes, which are rectangles around the detected objects.
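As a rough illustration of that output, the sketch below models a detection result as a list of labeled bounding boxes. The class and field names (`BoundingBox`, `Detection`, `score`) are hypothetical and chosen for this example only; real libraries use their own result types, and the confidence score is an assumption not mentioned in the summary above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BoundingBox:
    """Axis-aligned rectangle in pixel coordinates (hypothetical layout)."""
    left: int
    top: int
    width: int
    height: int

    @property
    def right(self) -> int:
        return self.left + self.width

    @property
    def bottom(self) -> int:
        return self.top + self.height


@dataclass
class Detection:
    """One detected object: what it is and where it is."""
    label: str
    box: BoundingBox
    score: float  # assumed confidence in [0, 1]; not part of the summary


# An image classifier would return only labels for the whole image ...
classification_result: List[str] = ["cat", "dog"]

# ... while an object detector also says where each object is.
detection_result: List[Detection] = [
    Detection("cat", BoundingBox(left=10, top=20, width=100, height=50), 0.92),
    Detection("dog", BoundingBox(left=150, top=40, width=80, height=120), 0.81),
]

for det in detection_result:
    print(det.label, (det.box.left, det.box.top, det.box.right, det.box.bottom))
```

The only difference from the classification result is the extra `box` per object, which is what lets an app draw rectangles or count objects in a region.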

How is object detection different from image classification?

-In image classification, the model only returns labels identifying what is in an image; it does not say where those objects are. Object detection returns both the labels and the location of each object, expressed as a bounding box.

What is image segmentation, and how does it differ from object detection?

-Image segmentation is similar to object detection because it also identifies where objects are in an image. However, instead of using bounding boxes, it uses a mask that specifies which pixels belong to each object.
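To make the contrast concrete, here is a minimal sketch that takes a tiny binary mask for one object and computes the bounding box that encloses it. The function name `mask_to_bounding_box` and the `(left, top, width, height)` layout are assumptions made for this example, not something from the video.

```python
from typing import List, Optional, Tuple


def mask_to_bounding_box(mask: List[List[int]]) -> Optional[Tuple[int, int, int, int]]:
    """Return (left, top, width, height) of the tightest box around the
    non-zero pixels of a binary mask, or None if the mask is empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, value in enumerate(row) if value]
    if not rows:
        return None
    left, top = min(cols), min(rows)
    return (left, top, max(cols) - left + 1, max(rows) - top + 1)


# A 4x5 mask: 1 marks pixels that belong to the object.
mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
]

box = mask_to_bounding_box(mask)
object_pixels = sum(sum(row) for row in mask)
print(box)            # (1, 1, 3, 3)
print(object_pixels)  # 5 pixels in the mask, versus 9 covered by the box
```

The mask records the object's shape pixel by pixel, while the box only records its extent, which is why a box can always be derived from a mask but not the other way around.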

Why is object detection useful?

-Object detection is useful because knowing where objects are enables applications such as detecting empty parking spots, identifying road hazards like potholes, and recognizing products in stores.

What are the two ways to add object detection capabilities to a mobile app?

-The two ways to add object detection to a mobile app are: 1) Using a pre-trained model, and 2) Training your own custom model.

When should you use a pre-trained object detection model?

-You should use a pre-trained object detection model when you need to detect common objects like home goods, food, or plants. Pre-trained models can be quickly deployed without needing to train your own model.

When is it necessary to train your own object detection model?

-Training your own model is necessary when the existing pre-trained models do not cover your specific use case, such as detecting domain-specific objects like different types of food ingredients or road problems like potholes and cracks.

What is ML Kit’s Object Detection and Tracking API?

-ML Kit’s Object Detection and Tracking API is a tool that allows developers to integrate object detection functionality into mobile apps with minimal coding, using pre-trained models for general object detection.

What steps are involved in training and deploying a custom object detection model?

-The steps for training and deploying a custom object detection model are: 1) Collect and label the training data, 2) Train the model using TensorFlow Lite Model Maker, and 3) Deploy the trained model to a mobile app using the TensorFlow Lite Task Library.
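Steps 1 and 2 can be sketched with the `tflite_model_maker` Python package. This is a minimal sketch, not the video's exact code: it assumes the data was labeled in Pascal VOC format, and the function name, directory arguments, `efficientdet_lite0` model choice, and hyperparameters are all illustrative assumptions. The import sits inside the function so the file can be loaded on machines where the package is not installed.

```python
from typing import List


def train_and_export(images_dir: str,
                     annotations_dir: str,
                     labels: List[str],
                     export_dir: str = ".") -> None:
    """Train a custom detector and export it as a .tflite file (sketch)."""
    # Requires `pip install tflite-model-maker`.
    from tflite_model_maker import model_spec, object_detector

    # Step 1 (already done by hand): images plus Pascal VOC XML annotations.
    train_data = object_detector.DataLoader.from_pascal_voc(
        images_dir, annotations_dir, label_map=labels
    )

    # Step 2: pick a model architecture and train on the labeled data.
    spec = model_spec.get("efficientdet_lite0")  # assumed choice
    model = object_detector.create(
        train_data,
        model_spec=spec,
        epochs=20,          # illustrative value
        batch_size=8,       # illustrative value
        train_whole_model=True,
    )

    # Produces a TensorFlow Lite model file ready for step 3 (deployment).
    model.export(export_dir=export_dir)


# Example call with hypothetical paths and labels:
# train_and_export("data/images", "data/annotations", ["pothole", "crack"])
```

The exported file is what the mobile app loads in step 3.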

What tools can be used to train and deploy custom object detection models?

-TensorFlow Lite Model Maker (for training) and the TensorFlow Lite Task Library (for deployment) simplify the process for custom object detection models, requiring only a few lines of code for each step.
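The video targets mobile apps, where the Task Library is used from Android or iOS code. For a quick check of an exported model off-device, the same library also has Python bindings in the `tflite_support` package; the sketch below uses those instead. The function name, file paths, and threshold values are assumptions made for this example.

```python
def detect_objects(model_path: str, image_path: str, score_threshold: float = 0.3):
    """Run a .tflite detection model on one image (sketch).

    Returns a list of (label, score, (x, y, width, height)) tuples.
    """
    # Requires `pip install tflite-support`.
    from tflite_support.task import core, processor, vision

    options = vision.ObjectDetectorOptions(
        base_options=core.BaseOptions(file_name=model_path),
        detection_options=processor.DetectionOptions(
            max_results=5, score_threshold=score_threshold
        ),
    )
    detector = vision.ObjectDetector.create_from_options(options)

    image = vision.TensorImage.create_from_file(image_path)
    result = detector.detect(image)

    output = []
    for detection in result.detections:
        category = detection.categories[0]  # top label for this box
        box = detection.bounding_box
        output.append((
            category.category_name,
            category.score,
            (box.origin_x, box.origin_y, box.width, box.height),
        ))
    return output


# Example call with hypothetical file names:
# for label, score, box in detect_objects("model.tflite", "street.jpg"):
#     print(label, round(score, 2), box)
```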
