DP-203: 01 - Introduction

Summary

TLDR: In this engaging YouTube series, the host, Spirit, guides viewers on a journey to become an Azure Data Engineer and prepares them for the DP-203 exam. With over 18 years of experience in data engineering and multiple certifications, Spirit promises an in-depth and passionate approach to learning. The course is free, with no hidden costs, and assumes that learners have some hands-on experience and basic knowledge of Azure. The content is structured around the natural lifecycle of data, covering everything from data ingestion to transformation and modeling. Spirit emphasizes the importance of taking notes and provides resources such as GitHub links for diagrams. The series also touches on the challenges faced by data engineers, including data source connectivity, authentication, and transformation requirements. The host's real-life example of automating his wife's book sales data retrieval showcases the practical application of data engineering concepts. The series is designed to be informative, interactive, and enjoyable, with a commitment to answering viewer questions in future episodes.

Takeaways

- 🎓 The course is designed to prepare individuals to become Azure data engineers and to pass the related exams.

- 💼 The instructor has over 18 years of experience in data engineering and holds multiple certifications, ensuring a high-quality learning experience.

- 📈 The course is free of charge, with no hidden costs, making it accessible to a wide audience.

- 📚 Learners are expected to have hands-on experience and to practice the topics covered in the course.

- 🔑 For those without an Azure subscription, a free trial is recommended and a link is provided in the video description.

- 📈 The course aims to go beyond exam requirements, delving deeper into important topics for a comprehensive understanding.

- 📒 It is advised to take notes during the course, using tools like OneNote, Excel, or physical notes to retain information.

- 🖥️ The instructor will provide sketches and diagrams to explain concepts, which will be available on GitHub.

- 📅 New episodes will be released at least twice a month, with an option to subscribe for updates.

- 🤔 The course encourages questions and interaction, with the instructor committing to answering in future episodes.

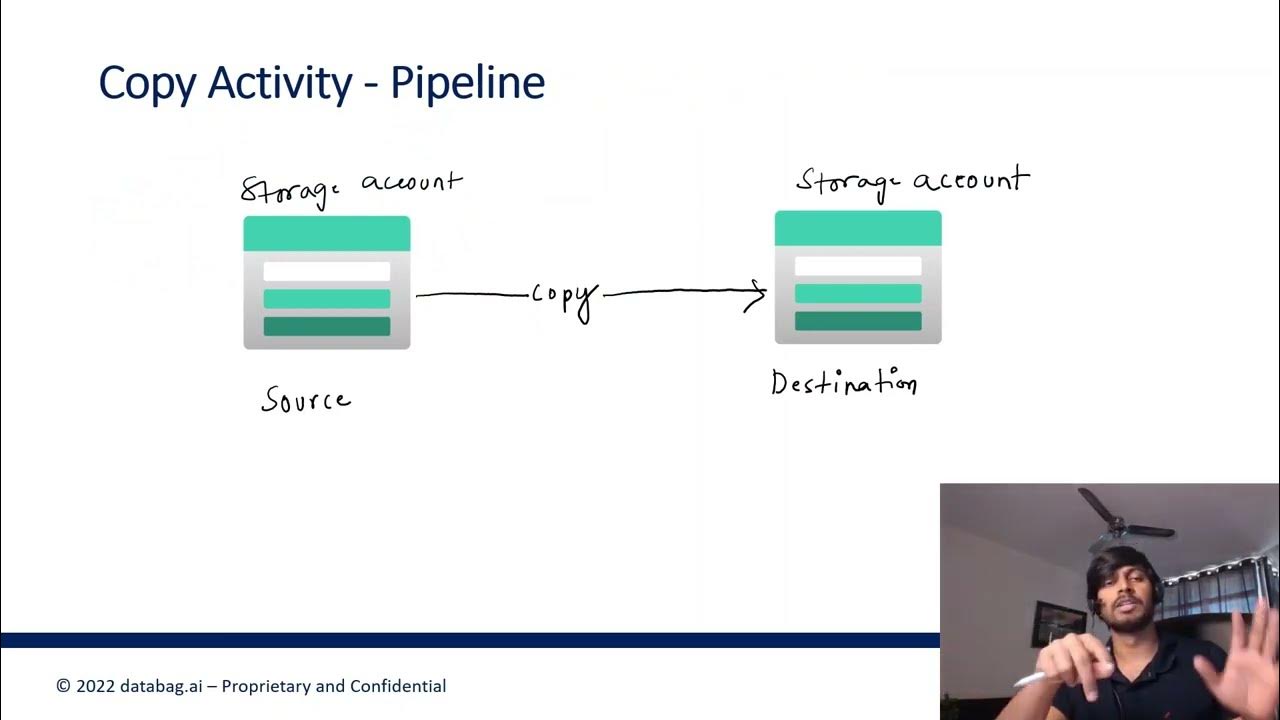

- 📈 Data engineering involves challenges such as data source identification, authentication, transformation, and analysis, which will be covered in the course.

Q & A

What is the primary goal of the YouTube series presented by Spirit?

- The primary goal of the series is to help viewers become Azure data engineers and prepare them to pass the DP-203 exam.

Why should one choose this course over other available courses?

- This course is special because it is taught by an experienced professional with over 18 years in data engineering, multiple certifications, and positive feedback from previous trainings. Additionally, it is completely free with no hidden costs.

What is the importance of having hands-on experience in Azure for this course?

- Hands-on experience is crucial because the course assumes that learners will practice the topics discussed, and that practice is essential for truly understanding and mastering the material.

What does the instructor recommend for those who do not have an Azure subscription?

- The instructor recommends using a free trial subscription, which should be sufficient for the training purposes of the course.

Why does the instructor suggest taking notes during the course?

- Taking notes is advised because it helps to reinforce learning, especially when dealing with similar-sounding services and features within Azure.

How often does the instructor plan to release new episodes of the series?

- The instructor plans to release new episodes at least twice a month, with the possibility of more frequent uploads.

What is the real-life example used to explain data engineering in the script?

- The example involves automating the process of checking book sales for the instructor's wife, who is a writer. It requires extracting, transforming, and analyzing data from several sources, including a publisher's website, an Excel file, and the Facebook marketing API.
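The extract, transform, and analyze steps in that example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the three lists stand in for the publisher's website, the Excel file, and the Facebook marketing API, and every field name (`date`, `copies`, `spend`) and helper function here is hypothetical, not something taken from the video.

```python
from collections import defaultdict

# Hypothetical stand-ins for the three sources; no real site, file, or API is called.
publisher_sales = [  # as if extracted from the publisher's website
    {"date": "2024-01-01", "copies": 4},
    {"date": "2024-01-02", "copies": 6},
]
excel_sales = [  # as if read from the Excel file
    {"date": "2024-01-01", "copies": 1},
]
ad_spend = [  # as if returned by the marketing API
    {"date": "2024-01-01", "spend": 10.0},
    {"date": "2024-01-02", "spend": 12.0},
]


def transform(sales_sources, spend_rows):
    """Merge all sources into one record per day."""
    daily = defaultdict(lambda: {"copies": 0, "spend": 0.0})
    for source in sales_sources:
        for row in source:
            daily[row["date"]]["copies"] += row["copies"]
    for row in spend_rows:
        daily[row["date"]]["spend"] += row["spend"]
    return dict(daily)


def analyze(daily):
    """Compute advertising spend per copy sold for each day (None if no sales)."""
    return {
        date: (values["spend"] / values["copies"] if values["copies"] else None)
        for date, values in sorted(daily.items())
    }


daily = transform([publisher_sales, excel_sales], ad_spend)
spend_per_copy = analyze(daily)
```

In a real pipeline each list would be replaced by a connector with its own authentication and format handling, which is where the connectivity and transformation challenges mentioned in the summary come in.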

What is the difference between batch processing and streaming in the context of data solutions?

- Batch processing involves processing data in chunks or batches, often during off-peak hours, while streaming involves the continuous processing of data as it is generated or received in real-time.
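A minimal Python sketch of the difference, assuming a hypothetical list of book-sale records (the field names `title` and `copies` are made up for illustration): the batch function receives the whole chunk and returns one result, while the streaming function updates its state and emits a result for every event as it arrives.

```python
from typing import Dict, Iterable, Iterator, List


def process_batch(records: List[dict]) -> Dict[str, int]:
    """Batch: the whole chunk is available up front and processed in one pass."""
    totals: Dict[str, int] = {}
    for record in records:
        totals[record["title"]] = totals.get(record["title"], 0) + record["copies"]
    return totals


def process_stream(events: Iterable[dict]) -> Iterator[Dict[str, int]]:
    """Streaming: update running totals per event and emit a snapshot each time."""
    totals: Dict[str, int] = {}
    for event in events:
        totals[event["title"]] = totals.get(event["title"], 0) + event["copies"]
        yield dict(totals)  # copy, so earlier snapshots are not mutated later


# Hypothetical sample data
sales = [
    {"title": "Book A", "copies": 3},
    {"title": "Book B", "copies": 1},
    {"title": "Book A", "copies": 2},
]

batch_result = process_batch(sales)
stream_results = list(process_stream(iter(sales)))
```

Both approaches reach the same final totals here; the difference is that the streaming version has a usable result after every event instead of only after the whole batch has been processed.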

Which part of the data lifecycle does a data engineer typically handle?

- A data engineer typically handles everything between data sources and data modeling/serving, which includes data ingestion, transformation, and storage.

What is the recommended approach for keeping track of the different services and features within Azure?

- The instructor recommends taking notes using a tool like OneNote, Excel, Word, a mind map, or even physical notes to keep track of the various services and features.

How can one access the detailed study guide for the DP-203 exam?

- One can access the detailed study guide by searching for 'DP-203' in a browser, which will lead to the Microsoft Learn page containing the study guide.

What is the current inclusion status of Microsoft Fabric in the DP-203 exam?

- At the time the video was recorded, Microsoft Fabric was not yet included in the DP-203 exam, but it was expected to be added in the future.
