Requirements, admin portal, gateways in Microsoft Fabric | DP-600 EXAM PREP (2 of 12)

Summary

TLDR: The first chapter of this DP-600 exam preparation course walks through planning a data analytics environment in Microsoft Fabric. It covers identifying solution requirements, including capacity planning, data ingestion methods, and storage options. The chapter also explains how to use the Fabric admin portal to manage settings, how to choose a data gateway type, and how to create custom Power BI report themes. Interactive scenarios and practice questions test understanding and reinforce key concepts for the exam.

Takeaways

- 📘 The course is designed to prepare for the DP-600 exam, focusing on planning a data analytics environment in Microsoft Fabric.

- 🔍 The first chapter covers crucial elements like identifying solution requirements, recommending settings in the Fabric admin portal, choosing data gateway types, and creating custom Power BI report themes.

- 🎯 The goal is to extract client requirements through a workshop to build a plan for a new Fabric environment and potentially secure a new contract.

- 🏢 Capacity planning involves determining the number and size of capacities needed, influenced by factors like data residency regulations, billing preferences, and workload types.

- 📊 Data ingestion methods are explored, with options including shortcut, database mirroring, ETL via data flows or pipelines, and event streams, depending on data source and team skills.

- 🚀 The importance of choosing the right data storage option (Lakehouse, data warehouse, or KQL database) based on data type, team skills, and real-time needs is emphasized.

- 🛠️ The Fabric admin portal is introduced as a key tool for managing tenant settings, capacities, and other organizational configurations.

- 🌐 Understanding data gateways, both on-premises and virtual network, is crucial for securely accessing and ingesting data into Fabric.

- 🎨 Customizing Power BI report themes for consistency in reporting is discussed, including updating existing themes or importing new ones via JSON files.

- 📝 The session concludes with practice questions to reinforce learning and a teaser for the next lesson, which will delve into setting up access control, sensitivity labeling, workspaces, and capacities in Fabric.

Q & A

What is the main focus of the first chapter in the DP-600 exam preparation course?

-The main focus of the first chapter is planning a data analytics environment in Microsoft Fabric. It covers four key elements: identifying solution requirements, recommending settings in the Fabric admin portal, choosing data gateway types, and creating custom Power BI report themes.

What are the three elements to focus on when identifying requirements for a Fabric solution?

-The three elements to focus on when identifying requirements for a Fabric solution are capacities, data ingestion methods, and data storage options.

How does data residency regulation impact the number of capacities required in a Fabric environment?

-Data residency regulations can increase the number of capacities required because they dictate where data must be stored. For example, if data must reside in the EU due to GDPR, a separate capacity might be needed for EU data.
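
As a rough illustration of the residency point, the Python sketch below groups workloads by the region their data must stay in; each distinct region then implies at least one capacity. The workload names and regions are invented for the example, and real planning would also weigh billing preferences and workload types.

```python
from collections import defaultdict


def capacities_by_region(workloads):
    """Group workload names by their required data-residency region.

    `workloads` is a list of (name, region) tuples. Both values are
    hypothetical inputs, e.g. notes gathered in a requirements workshop.
    """
    grouped = defaultdict(list)
    for name, region in workloads:
        grouped[region].append(name)
    return dict(grouped)


# Hypothetical workshop output: two EU workloads and one US workload.
workloads = [
    ("sales_reporting", "EU"),
    ("hr_analytics", "EU"),
    ("marketing_dashboards", "US"),
]

plan = capacities_by_region(workloads)
print(f"Minimum capacities needed: {len(plan)}")
for region, names in plan.items():
    print(region, names)
```

Here the count of distinct regions is only a lower bound: a client may still want extra capacities per department for billing reasons.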

What factors can influence the sizing of a capacity in Fabric?

-Capacity sizing in Fabric is influenced by the intensity of expected workloads, such as high volumes of data ingestion, heavy data transformation, and machine learning training. The client's budget and their willingness to wait for data processing can also dictate the capacity size.

What are the different data ingestion methods available in Fabric, and what are the deciding factors for choosing them?

-The data ingestion methods available in Fabric include shortcuts, database mirroring, ETL via dataflow, ETL via data pipeline, and eventstream. The deciding factors for choosing between them include the location of the external data, the skills that exist in the team, the volume of data, and the need for real-time data streaming.
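
The deciding factors above can be sketched as a small rule-based helper. This is a study aid under stated assumptions, not an official decision tree: the function name, the `source_kind` labels, and the ordering of the rules are all hypothetical simplifications, and team skills are not modeled at all.

```python
def suggest_ingestion_method(source_kind, needs_real_time=False, large_volume=False):
    """Suggest a candidate Fabric ingestion method for one data source.

    source_kind is a hypothetical label chosen for this sketch:
      "shortcut_supported"  - external storage that Fabric can shortcut to
      "mirroring_supported" - a database that Fabric can mirror
      anything else         - treated as a source that needs ETL
    """
    if needs_real_time:
        return "eventstream"
    if source_kind == "shortcut_supported":
        return "shortcut"
    if source_kind == "mirroring_supported":
        return "database mirroring"
    # Assumption: larger volumes lean toward pipelines, smaller ones toward dataflows.
    return "ETL via data pipeline" if large_volume else "ETL via dataflow"


print(suggest_ingestion_method("shortcut_supported"))
print(suggest_ingestion_method("on_premises_sql", large_volume=True))
print(suggest_ingestion_method("iot_devices", needs_real_time=True))
```

In practice the team's existing skills would also shift the choice between a dataflow and a pipeline, so treat the output as a starting point for discussion.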

Why might an organization choose to use the on-premises data gateway in Fabric?

-An organization might choose to use the on-premises data gateway to securely access and bring data into Fabric from on-premises SQL Server instances or other on-premises data sources.

What is the role of the virtual network data gateway in Fabric?

-The virtual network data gateway in Fabric provides a secure mechanism to access data that is stored in Azure behind a virtual network, such as in Blob Storage or ADLS Gen2.

What are the key settings that Camila, as a Fabric administrator, needs to understand in the Fabric admin portal?

-Camila needs to understand key settings such as allowing users to create Fabric items, enabling preview features, managing guest users, single sign-on options, blocking public internet access, enabling Azure Private Link, and allowing service principal access to Fabric APIs.

How can custom Power BI report themes be created and applied in Fabric?

-Custom Power BI report themes can be created by updating the current theme in Power BI Desktop, writing a JSON template, or using a third-party online tool. They can be applied by importing a JSON theme file in Power BI Desktop under the 'View' tab in the 'Themes' section.
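
For the "writing a JSON template" route, a minimal sketch in Python is shown below. It builds a small theme dictionary and serializes it to JSON. The theme name and colour values are made up for illustration, and only a handful of top-level theme properties (`name`, `dataColors`, `background`, `foreground`, `tableAccent`) are used; a real corporate theme would usually set many more.

```python
import json


def build_theme(name, data_colors, background="#FFFFFF",
                foreground="#252423", table_accent="#118DFF"):
    """Return a minimal Power BI report theme as a Python dict.

    All default colour values here are illustrative assumptions.
    """
    return {
        "name": name,
        "dataColors": list(data_colors),
        "background": background,
        "foreground": foreground,
        "tableAccent": table_accent,
    }


# Hypothetical company palette.
theme = build_theme("Contoso Corporate", ["#1F77B4", "#FF7F0E", "#2CA02C"])
theme_json = json.dumps(theme, indent=2)
print(theme_json)
```

Saving the printed text to a `.json` file gives a file that can then be imported from the 'Themes' section described above.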

What is the process of setting up a virtual network data gateway in Fabric?

-Setting up a virtual network data gateway in Fabric involves three steps: registering the Power Platform resource provider in Azure, creating a private endpoint and subnet in the Azure environment, and then creating a new virtual network data gateway connection in Fabric.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

"Azure Synapse Analytics Q&A", 50 Most Asked AZURE SYNAPSE ANALYTICS Interview Q&A for interviews !!

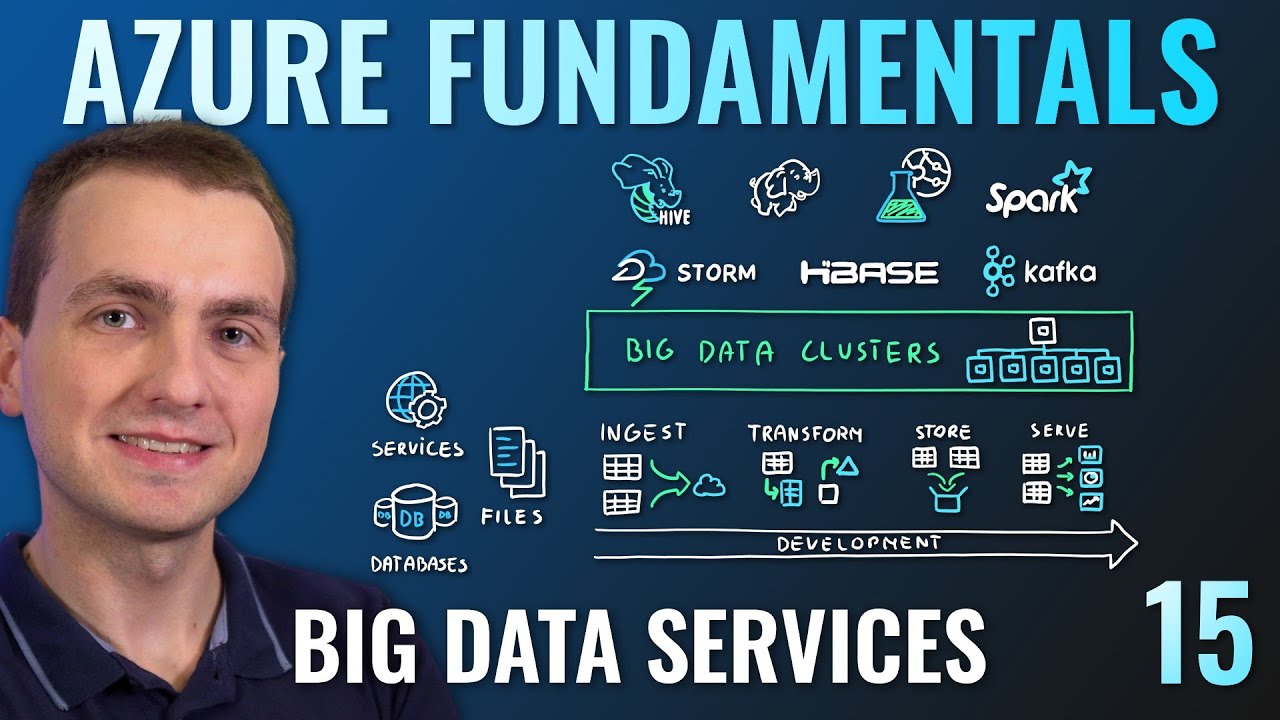

AZ-900 Episode 15 | Azure Big Data & Analytics Services | Synapse, HDInsight, Databricks

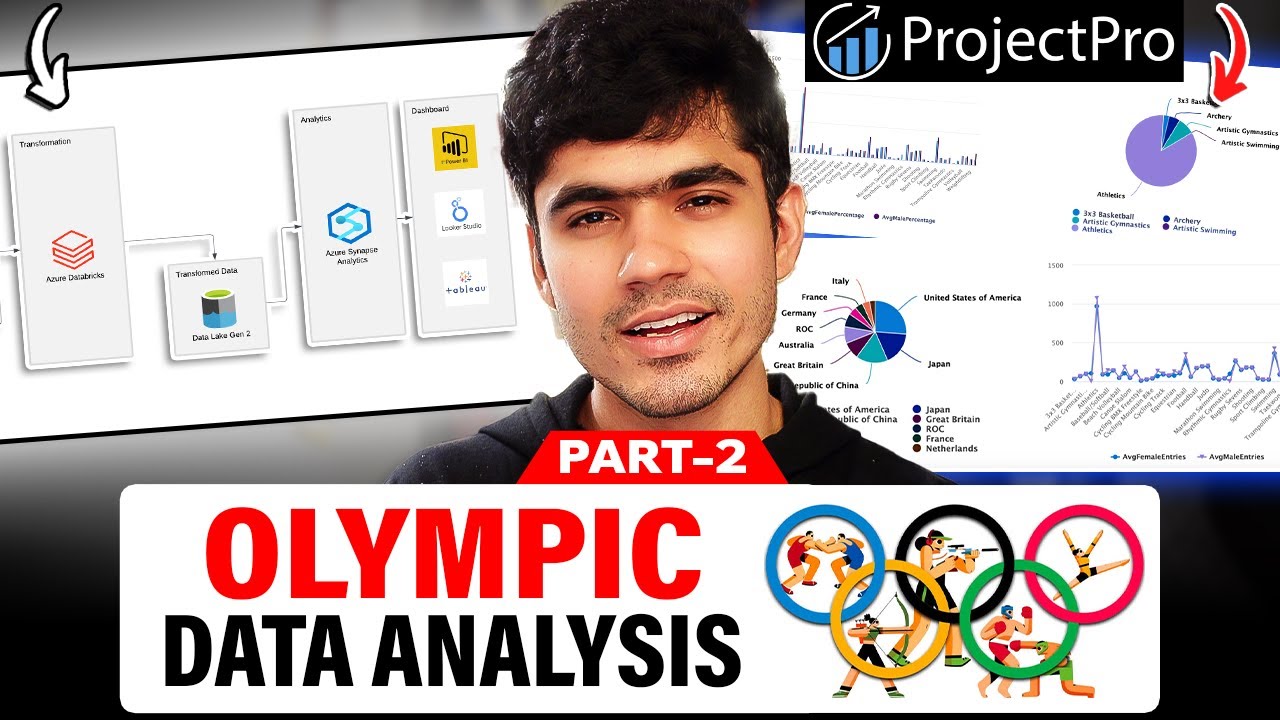

Olympic Data Analytics | Azure End-To-End Data Engineering Project | Part 2

🚀 Introduction to Microsoft Fabric | Microsoft Fabric Playlist

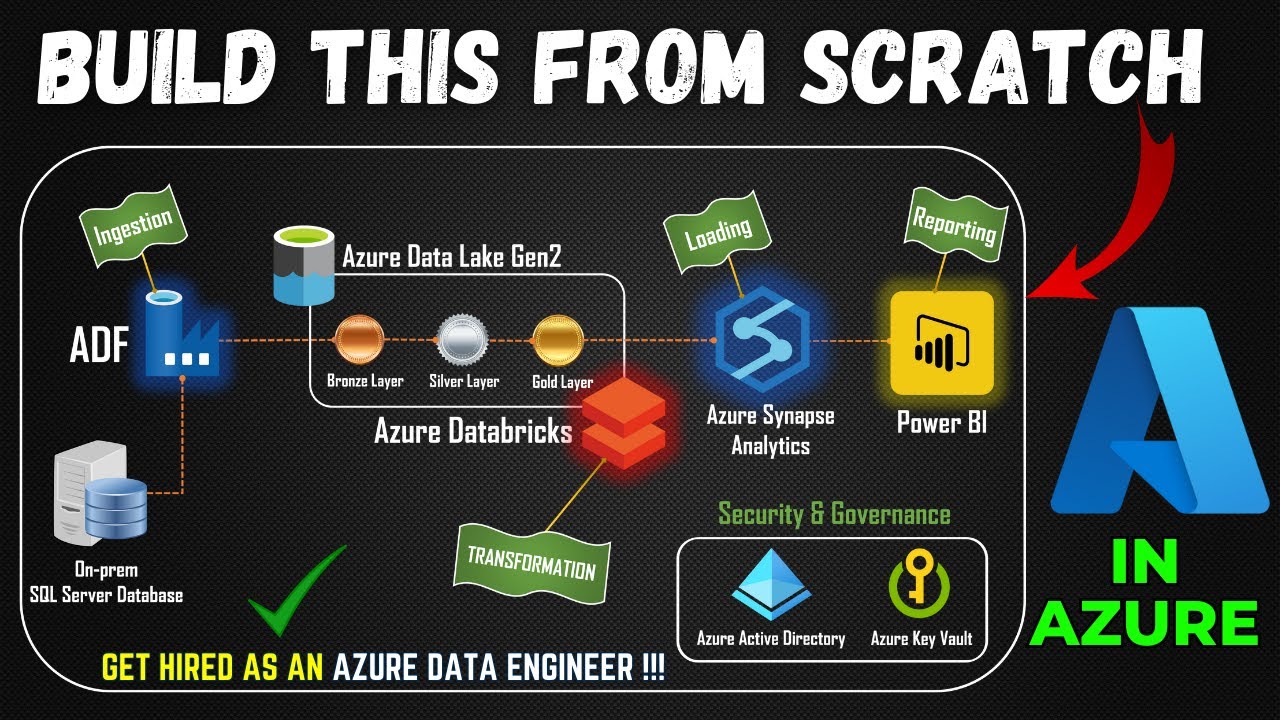

Part 1- End to End Azure Data Engineering Project | Project Overview

DP 203 Dumps | DP 203 Real Exam Questions | Part 2

5.0 / 5 (0 votes)