Image Processing on Zynq (FPGAs): Part 1 Introduction

Summary

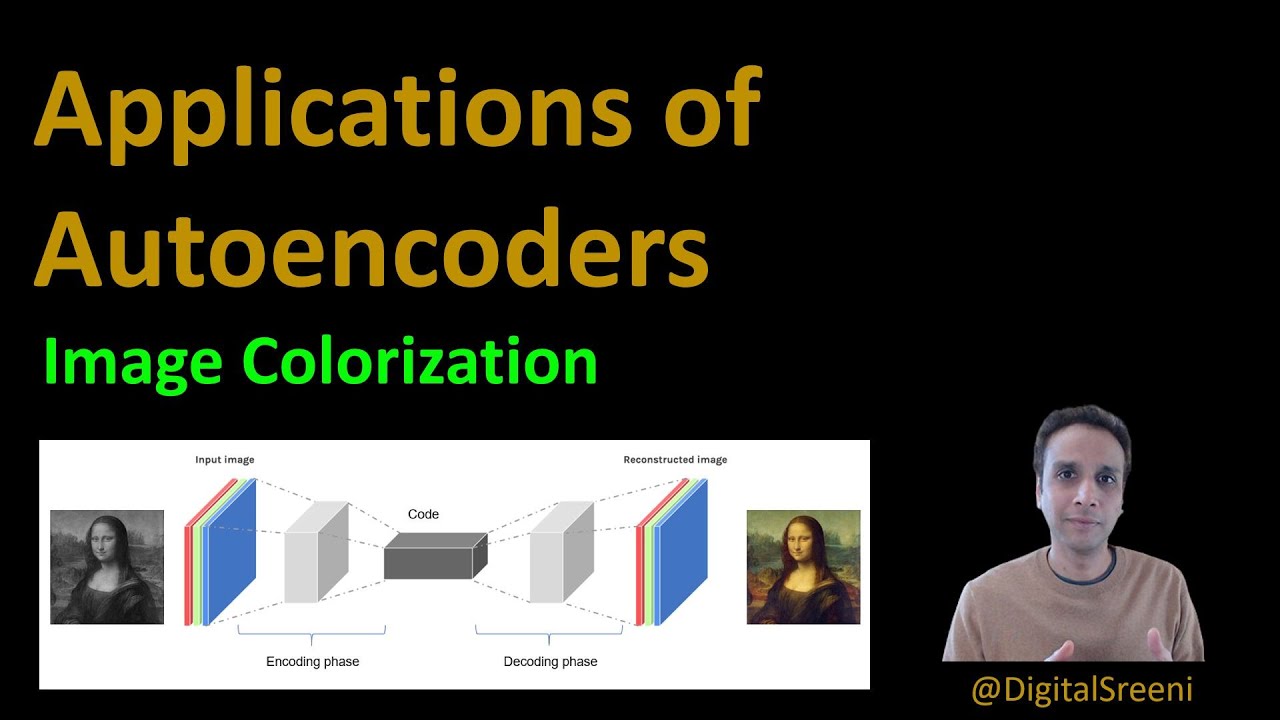

TLDR: This tutorial series introduces image processing on FPGAs and Zynq chips. It treats images as arrays of pixels: two-dimensional for grayscale and typically three-dimensional for color. It distinguishes point processing, where each output pixel depends solely on the corresponding input pixel, from neighborhood processing, where each output pixel also depends on the neighbors of the input pixel. In the architecture described, images are streamed from external DDR memory to an IP core by a DMA controller. The tutorial also covers convolution with a kernel and the difficulty of processing pixels at the image edges. It discusses system design considerations, including buffering strategies and the use of line buffers and multiplexers for efficient processing. Later parts of the series move on to coding and implementation on FPGAs.

Takeaways

- 📚 The tutorial series will cover image processing on FPGAs and Zynq chips, with a focus on practical application rather than theoretical details.

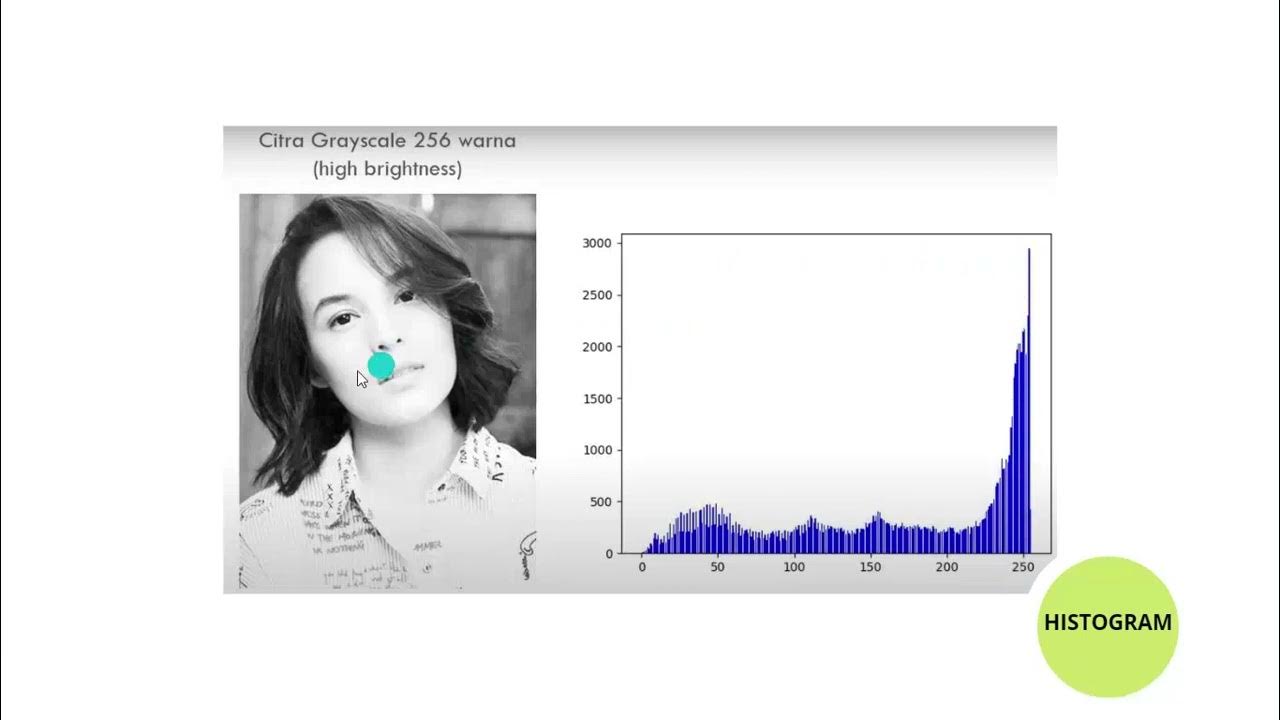

- 🖼️ Images are described as two-dimensional arrays, with grayscale images being 2D and color images typically 3D arrays, where each element represents a pixel.

- 🔄 Image processing is divided into point processing and neighborhood processing, with point processing involving transformations that depend solely on the value of a single pixel.

- 🔀 In point processing, operations like image inversion can be performed by simple transformations such as 255 minus the input pixel value.

- 🌐 For system design in image processing, images are initially stored in external DDR memory and then streamed to the processing IP via a DMA controller.

- 🔄 Neighborhood processing involves considering the pixel's neighbors for operations, which can include different types of neighbors and convolution with a kernel.

- 🛠️ Hardware implementation of neighborhood processing requires buffering parts of the image within the FPGA, because the pixels needed for each output do not arrive consecutively in the stream.

- 💾 Line buffers are used to store parts of the image within the FPGA, and multiplexers are employed to intelligently reuse lines for efficient processing.

- 🔗 The tutorial discusses the importance of parallelizing data transmission and processing to improve system performance, such as adding extra line buffers.

- 🛡️ Interrupt-based processing is mentioned as a method to handle data streaming between the IP and the PS, with an interrupt service routine to manage data flow efficiently.

Q & A

What is the main focus of the tutorial series mentioned in the transcript?

-The tutorial series focuses on image processing on FPGAs and specifically on Zynq chips.

Which textbook is recommended for detailed information on image processing?

-For detailed information on image processing, the transcript recommends 'Digital Image Processing' by Gonzalez.

How are images represented in terms of data structure?

-Images are represented as arrays or matrices of pixels. Grayscale images are two-dimensional arrays, while color images are typically three-dimensional arrays with RGB values for each pixel.

What are the two broad categories of image processing mentioned in the transcript?

-The two broad categories of image processing mentioned are point processing and neighborhood processing.

How does point processing differ from neighborhood processing?

-In point processing, the value of a pixel in the output image depends only on the corresponding pixel in the input image. In contrast, in neighborhood processing, the value of a pixel in the output image depends on the pixel and its neighboring pixels in the input image.

What is an example of a point processing operation discussed in the transcript?

-An example of a point processing operation is image inversion, where the pixel value in the output image is calculated as 255 minus the pixel value in the input image.
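The inversion described above can be sketched in a few lines of software. This is only a behavioral illustration in Python, not the hardware IP from the tutorial. The function name `invert` and the nested-list image layout are assumptions made for the example, and 8-bit pixels (maximum value 255) are assumed.

```python
def invert(image, max_value=255):
    """Point operation: each output pixel depends only on the same input pixel.

    `image` is assumed to be a list of rows, each row a list of 8-bit values.
    """
    return [[max_value - pixel for pixel in row] for row in image]


# A tiny 2x3 grayscale image used purely for illustration.
image = [
    [0, 64, 128],
    [192, 255, 10],
]
inverted = invert(image)
```

Because no pixel needs any other pixel, the same operation can be applied to a stream one pixel at a time, which is why point processing fits a pure streaming design.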

How is the image data transferred from external DDR memory to the image processing IP in the described architecture?

-The image data is transferred from external DDR memory to the image processing IP using a DMA controller, which is configured by a driver running on the processor.

What is the role of line buffers in the neighborhood processing architecture?

-Line buffers in the neighborhood processing architecture are used to store parts of the image necessary for processing. They allow for the buffering of image lines to facilitate the convolution operation with a kernel.

Why is pure streaming architecture not suitable for neighborhood processing?

-A pure streaming architecture is not suitable for neighborhood processing because the pixels needed for each output are not consecutive in the stream. A convolution window spans several image rows, so in raster order its pixels are separated by roughly a full line width and have to be buffered before they can be used together.
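To make the buffering requirement concrete, here is a minimal software model of a 3x3 neighborhood operation fed by a raster-order pixel stream. It is a sketch under stated assumptions, not the tutorial's HDL: the function name `stream_convolve_3x3` is hypothetical, border pixels are simply skipped rather than padded, and the kernel is applied without flipping (which equals true convolution only for symmetric kernels).

```python
from collections import deque


def stream_convolve_3x3(pixel_stream, width, kernel):
    """Consume pixels in raster order and yield output rows (interior pixels only)."""
    line_buffers = deque(maxlen=3)  # holds the three most recent complete rows
    current_row = []
    for pixel in pixel_stream:
        current_row.append(pixel)
        if len(current_row) == width:
            line_buffers.append(current_row)
            current_row = []
            # A 3x3 window is only available once three full rows are buffered.
            if len(line_buffers) == 3:
                out_row = []
                for col in range(1, width - 1):
                    acc = 0
                    for kr in range(3):
                        for kc in range(3):
                            acc += kernel[kr][kc] * line_buffers[kr][col - 1 + kc]
                    out_row.append(acc)
                yield out_row


# 4x4 test image with values 1..16, sent as a flat raster-order stream.
box_kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
result = list(stream_convolve_3x3(range(1, 17), width=4, kernel=box_kernel))
```

Note that no output can be produced until the third row has arrived, and that each output pixel reads from three different rows at once.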

What is the purpose of multiplexers in the line buffer architecture?

-Multiplexers in the line buffer architecture are used to intelligently select and reuse the correct line buffers for the convolution operation, ensuring that the same line is not unnecessarily fetched multiple times from external memory.
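The selection logic can be illustrated with a small behavioral model. This is an assumption-laden sketch rather than the tutorial's actual design: the class `LineBufferBank` and its methods are hypothetical names, and the "multiplexer" is modeled as an index calculation that always presents the buffered rows in top-to-bottom order, even though new rows overwrite the buffers in a circular fashion.

```python
class LineBufferBank:
    """Behavioral model of circularly reused line buffers with a read-side mux."""

    def __init__(self, num_buffers=3):
        self.buffers = [None] * num_buffers
        self.write_index = 0  # buffer that receives the next incoming row

    def write_row(self, row):
        # The incoming row overwrites the oldest buffer; nothing is re-fetched.
        self.buffers[self.write_index] = list(row)
        self.write_index = (self.write_index + 1) % len(self.buffers)

    def mux_select(self):
        """Return buffer indices ordered oldest row first (top, middle, bottom)."""
        n = len(self.buffers)
        return [(self.write_index + i) % n for i in range(n)]

    def window_rows(self):
        return [self.buffers[i] for i in self.mux_select()]


bank = LineBufferBank()
for row in ([0, 0], [1, 1], [2, 2]):
    bank.write_row(row)
order_after_three = bank.mux_select()

bank.write_row([3, 3])  # overwrites the buffer that held row 0
order_after_four = bank.mux_select()
```

Each image row is written into the bank exactly once. Only the selection order changes as the window slides down the image.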

How does adding a fourth line buffer improve the system performance in the architecture?

-Adding a fourth line buffer allows data transmission and processing to happen in parallel, reducing idle time and improving overall system performance by enabling continuous data flow and processing without waiting for one operation to complete before starting another.
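A rough back-of-the-envelope model shows why overlapping helps. The numbers and the function `total_cycles` below are illustrative assumptions, not measurements from the tutorial: loading one row is assumed to cost `load_cycles`, processing one 3x3 window row is assumed to cost `process_cycles`, and the image is assumed to have at least three rows.

```python
def total_cycles(num_rows, load_cycles, process_cycles, overlapped):
    """Rough cycle estimate for producing num_rows - 2 output rows."""
    output_rows = num_rows - 2
    fill = 3 * load_cycles  # the first three rows must arrive before any output
    if overlapped:
        # Extra buffer: the next row loads while the current window is processed,
        # so each step costs the slower of the two; the last window only processes.
        steady = (output_rows - 1) * max(load_cycles, process_cycles) + process_cycles
    else:
        # No spare buffer: processing and loading take turns.
        steady = output_rows * process_cycles + (output_rows - 1) * load_cycles
    return fill + steady


# Hypothetical 512-row image where loading and processing a row cost the same.
serial = total_cycles(512, 512, 512, overlapped=False)
parallel = total_cycles(512, 512, 512, overlapped=True)
```

Under these assumptions the overlapped design needs roughly half the cycles. The real gain depends on how the load and process times compare in the actual system.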

What is the significance of the interrupt-based processing mentioned in the transcript?

-Interrupt-based processing is significant as it allows for efficient communication between the IP and the processor, enabling the processor to send the next line of data to the IP as soon as a convolution operation is completed, thus maintaining a smooth and continuous processing workflow.
