Deep Learning Project Environment Setup | Installing TensorFlow, CUDA Toolkit, and NVIDIA Driver on Windows

Summary

TLDR: This video tutorial walks through setting up a machine learning environment for TensorFlow with GPU support. It covers installing libraries such as Pandas, Matplotlib, and Streamlit, and configuring CUDA and the NVIDIA GPU driver so that training can run on the GPU. The speaker shows how to activate a Conda environment and run model training, monitoring GPU utilization to confirm the speedup. It also offers tips for users without a GPU, explaining how to install TensorFlow for CPU-only use. The tutorial is aimed at helping users set up environments for machine learning projects such as genre classification and disease prediction.

Takeaways

- 😀 Make sure a TensorFlow-compatible Python version is available before installing TensorFlow, and create a separate Conda environment for the project.

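- 😀 To use TensorFlow with GPU support, install the NVIDIA GPU driver, the CUDA Toolkit, and the cuDNN library before installing TensorFlow. On native Windows, TensorFlow 2.10 is the last release with GPU support; later versions need WSL2 to use the GPU.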

- 😀 If you don't have a GPU, `pip install tensorflow` still works; TensorFlow simply runs on the CPU.

- 😀 Training on a GPU is usually much faster than training on the CPU alone, even when the GPU is not very powerful.

- 😀 Use `nvidia-smi` to monitor GPU utilization while training to check if the GPU is being fully utilized.

- 😀 TensorFlow's GPU support depends on CUDA being installed and detected, which in turn requires an NVIDIA GPU and a matching driver.

- 😀 To install required libraries for your project, use `pip install <library>` or create a `requirements.txt` file and run `pip install -r requirements.txt`.

- 😀 If you install TensorFlow without the CUDA Toolkit and GPU driver, it runs on the CPU only, and training is slower.

- 😀 The video stresses installing the dependencies, such as CUDA and the NVIDIA driver, before setting up TensorFlow with GPU support.

- 😀 Training time depends on your hardware, so the speedup you see will vary from one setup to another.

- 😀 The video walks through the whole TensorFlow environment setup, covering the dependencies for both the GPU and CPU versions.
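As a quick check that the libraries named in this tutorial ended up in the active environment, a short Python script can look each one up without importing it. This is a minimal sketch, not part of the video; the module list (`tensorflow`, `pandas`, `matplotlib`, `streamlit`) is an assumption based on the libraries mentioned above, and `check_importable` is a hypothetical helper name.

```python
from __future__ import annotations

import importlib.util

# Assumed list, based on the libraries mentioned in this tutorial; adjust per project.
PROJECT_MODULES = ["tensorflow", "pandas", "matplotlib", "streamlit"]


def check_importable(modules: list[str]) -> dict[str, bool]:
    """Map each top-level module name to True if Python can locate it."""
    # find_spec returns None when a top-level module cannot be found,
    # and it does not execute the module (so heavy imports stay cheap).
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    for name, ok in check_importable(PROJECT_MODULES).items():
        print(f"{name:12s} {'OK' if ok else 'MISSING'}")
```

Run it with the project environment active; any `MISSING` entry can then be installed with `pip install <library>`.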

Q & A

What is the first step in setting up TensorFlow for GPU usage?

-The first step is to install the NVIDIA GPU driver and the CUDA toolkit. This is essential for TensorFlow to detect and utilize the GPU for faster training.

What is the purpose of installing CUDA and the NVIDIA driver before TensorFlow?

-Installing CUDA and the NVIDIA driver is necessary because TensorFlow uses CUDA for GPU acceleration. If CUDA is not installed, TensorFlow will fall back to using the CPU instead of the GPU.

How can you check if the GPU is being utilized during training?

-Run the `nvidia-smi` command. It shows GPU memory usage and the GPU utilization percentage while the model trains.
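For a scripted version of the same check, `nvidia-smi` can print selected fields as CSV. The sketch below assumes `nvidia-smi` is on `PATH` (it is installed with the NVIDIA driver) and uses its `--query-gpu` and `--format=csv,noheader,nounits` options; the helper names are hypothetical. With `nounits`, memory values are plain numbers in MiB.

```python
from __future__ import annotations

import shutil
import subprocess

# Fields requested from nvidia-smi, one CSV line per GPU.
QUERY = "utilization.gpu,memory.used,memory.total"


def parse_gpu_stats(csv_text: str) -> list[dict]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` output for QUERY."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, used, total = (int(field.strip()) for field in line.split(","))
        stats.append({"util_pct": util, "mem_used_mib": used, "mem_total_mib": total})
    return stats


def read_gpu_stats() -> list[dict]:
    """Query every visible NVIDIA GPU; returns [] if nvidia-smi is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_stats(result.stdout)
```

Calling `read_gpu_stats()` every few seconds during training shows whether utilization rises; `nvidia-smi -l 2` gives a similar refreshing view from the command line. Some GPUs report a field as `[N/A]`, which this sketch does not handle.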

What happens if you don't have a GPU on your system?

-If you don't have a GPU, TensorFlow simply runs on the CPU. Installing it with `pip install tensorflow` is enough, and you can skip the driver and CUDA steps.

What is the role of the virtual environment in TensorFlow setup?

-The virtual environment helps isolate project dependencies to avoid conflicts with other libraries. You need to activate the environment before installing or running TensorFlow-related tasks.

What command is used to activate the virtual environment in the video?

-The command `conda activate tensorflow_environment` is used to activate the specific virtual environment created for the project.
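A training script can refuse to run outside the intended environment instead of failing later on a missing package. This sketch relies on the `CONDA_DEFAULT_ENV` variable that `conda activate` sets; the environment name `tensorflow_environment` comes from the video, while `active_conda_env` and `require_env` are hypothetical helpers.

```python
from __future__ import annotations

import os
import sys
from typing import Mapping, Optional

EXPECTED_ENV = "tensorflow_environment"  # environment name used in the video


def active_conda_env(environ: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the active Conda environment name, or None if none is active."""
    env = os.environ if environ is None else environ
    return env.get("CONDA_DEFAULT_ENV")


def require_env(expected: str = EXPECTED_ENV, environ: Optional[Mapping[str, str]] = None) -> None:
    """Exit with a hint if the expected Conda environment is not active."""
    current = active_conda_env(environ)
    if current != expected:
        # sys.exit with a string prints it to stderr and exits with status 1.
        sys.exit(f"Active Conda env is {current!r}; run `conda activate {expected}` first.")
```

Calling `require_env()` at the top of a training script stops it early with a clear message when the environment was not activated.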

How does TensorFlow decide whether to use the GPU or CPU?

-At runtime, TensorFlow checks whether the CUDA libraries are installed and an NVIDIA GPU driver is available. If both are found, it places operations on the GPU; otherwise, it falls back to the CPU.
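These checks can be inspected from Python. This sketch uses `tf.test.is_built_with_cuda()` and `tf.config.list_physical_devices("GPU")`, both available in TensorFlow 2.x; the wrapper `tensorflow_device_report` and its return format are hypothetical, and it reports TensorFlow as not installed instead of raising when the package is absent.

```python
from __future__ import annotations


def tensorflow_device_report() -> dict:
    """Summarize whether TensorFlow can see a GPU (hypothetical helper)."""
    try:
        import tensorflow as tf
    except ImportError:
        return {"installed": False, "built_with_cuda": False, "gpus": [], "device": "none"}

    # False for CPU-only builds of TensorFlow.
    built_with_cuda = tf.test.is_built_with_cuda()
    # Empty when no GPU is visible, e.g. missing driver or CUDA/cuDNN libraries.
    gpus = [device.name for device in tf.config.list_physical_devices("GPU")]
    return {
        "installed": True,
        "built_with_cuda": built_with_cuda,
        "gpus": gpus,
        "device": "GPU" if gpus else "CPU",
    }


print(tensorflow_device_report())
```

A report with `built_with_cuda` set to `True` but an empty `gpus` list usually points to a missing or mismatched driver, CUDA, or cuDNN install; TensorFlow's startup log typically names the library it failed to load.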

Why is the training time faster on the GPU compared to the CPU?

-A GPU executes many computations in parallel, which suits the large matrix operations at the heart of deep learning. As a result, training is usually much faster than on a CPU, especially with large datasets.
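A rough way to see this on your own machine is to time the same large matrix multiplication on each device. This is a micro-benchmark sketch under stated assumptions (TensorFlow 2.x, square float32 matrices, a hypothetical helper `time_matmul`); it measures one operation rather than full model training, so treat the numbers as indicative only.

```python
from __future__ import annotations

import time
from typing import Optional


def time_matmul(device: str, n: int = 2048, repeats: int = 10) -> Optional[float]:
    """Average seconds per n x n matmul on `device` (e.g. "/CPU:0", "/GPU:0").

    Returns None if TensorFlow is missing or no GPU is visible for a GPU request.
    """
    try:
        import tensorflow as tf
    except ImportError:
        return None
    if device.upper().startswith("/GPU") and not tf.config.list_physical_devices("GPU"):
        return None

    with tf.device(device):
        a = tf.random.normal((n, n))
        b = tf.random.normal((n, n))
        tf.matmul(a, b).numpy()  # warm-up run, excluded from the timing
        start = time.perf_counter()
        for _ in range(repeats):
            c = tf.matmul(a, b)
        c.numpy()  # copying to the host waits for queued GPU work to finish
        return (time.perf_counter() - start) / repeats


for dev in ("/CPU:0", "/GPU:0"):
    print(dev, time_matmul(dev))
```

A `None` for `/GPU:0` means TensorFlow did not detect a usable GPU, which is itself a useful signal when checking the setup.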

What is the model size mentioned in the video, and how does it affect the training time?

-The model is about 29 MB. Larger models generally need more computation and memory and therefore take longer to train. This model is relatively small, yet training still takes a noticeable amount of time.
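To put the 29 MB figure in perspective, a back-of-the-envelope conversion from file size to parameter count helps. This sketch assumes the file stores only float32 weights (4 bytes each), that "MB" means MiB, and that file-format overhead is negligible; none of this is stated in the video, and `estimate_float32_params` is a hypothetical helper.

```python
BYTES_PER_FLOAT32 = 4  # assumption: every weight is stored as float32


def estimate_float32_params(size_bytes: int) -> int:
    """Approximate parameter count for a file holding only float32 weights."""
    return size_bytes // BYTES_PER_FLOAT32


model_bytes = 29 * 1024 * 1024  # 29 MiB, the assumed reading of "29 MB"
print(f"{estimate_float32_params(model_bytes):,}")  # 7,602,176
```

Under these assumptions the model holds roughly 7.6 million parameters; a file that also stores optimizer state would correspond to fewer.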

What is the importance of using a `requirements.txt` file for installation?

-A `requirements.txt` file helps automate the installation of all necessary libraries and dependencies for the project. Running `pip install -r requirements.txt` installs all the libraries listed in the file, ensuring consistency across different environments.
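Before running `pip install -r requirements.txt`, it can help to see which listed packages are already installed. This sketch uses a deliberately simple parser (it keeps only the package name, skips comments and pip options such as `-r` or `-e`, and ignores version pins) and checks each name with `importlib.metadata` from the Python 3.8+ standard library; the helper names are hypothetical.

```python
from __future__ import annotations

import re
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path

# Leading distribution name; stops at version specifiers, extras, or markers.
_NAME = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)")


def parse_requirements(text: str) -> list[str]:
    """Extract package names from requirements.txt content."""
    names = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        match = _NAME.match(line)
        if match:
            names.append(match.group(1))
    return names


def missing_packages(names: list[str]) -> list[str]:
    """Return the names whose distributions are not installed."""
    missing = []
    for name in names:
        try:
            version(name)  # raises PackageNotFoundError if not installed
        except PackageNotFoundError:
            missing.append(name)
    return missing


def check_requirements_file(path: str = "requirements.txt") -> list[str]:
    text = Path(path).read_text(encoding="utf-8")
    return missing_packages(parse_requirements(text))
```

An empty list from `check_requirements_file()` means every listed name is installed, although installed versions are not compared against the pins.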

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

Aula 01: OpenGL Legacy - Janela GLFW

Cara Instalasi WordPress di Localhost | Buat Website Tanpa Coding!

Training Neural Networks on GPU vs CPU | Performance Test

Image Classification App | Teachable Machine + TensorFlow Lite

How to run FreeBSD in UTM/QEMU on an Apple M3

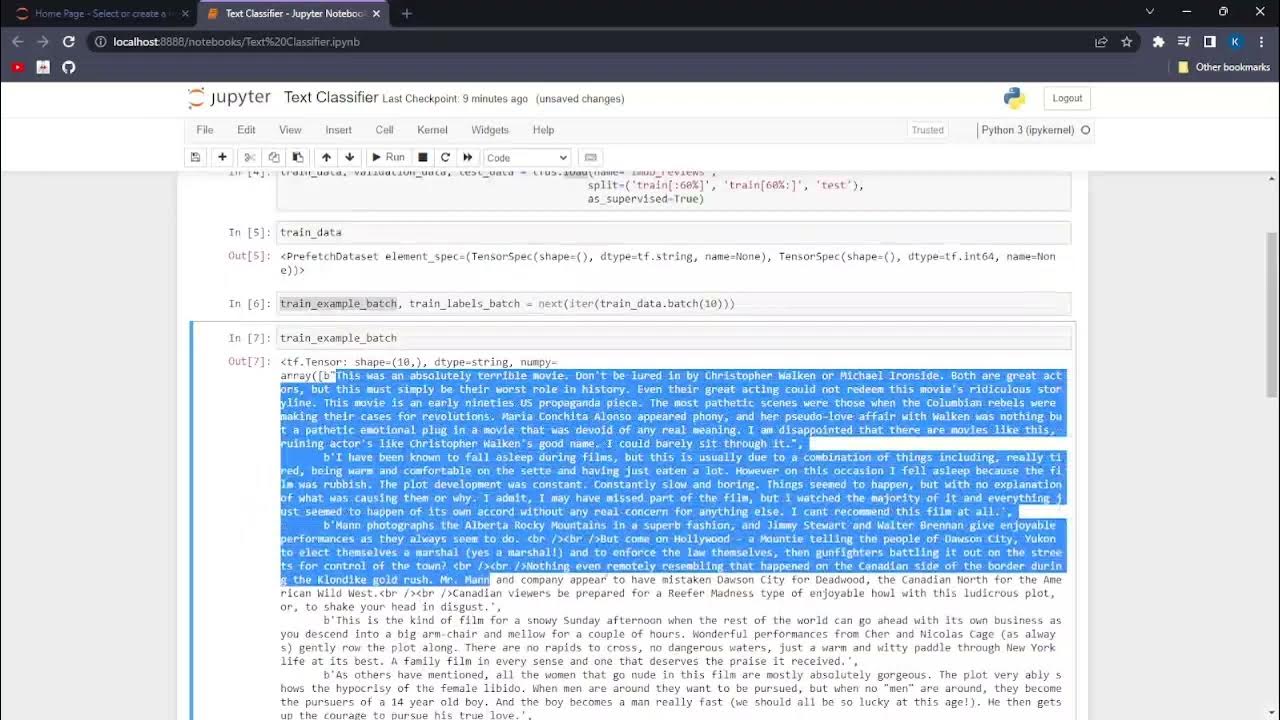

AIP.NP1.Text Classification with TensorFlow

5.0 / 5 (0 votes)