Introduction to OpenMP: 02 part 1 Module 1

Summary

TL;DR: In this talk, the speaker discusses the enduring relevance of Moore's Law and its impact on processor performance. Hardware has traditionally delivered performance improvements on its own, but power limits and the resulting shift to multi-core processors have changed the relationship between hardware and software. Because automatic parallelism has failed, the speaker argues that software developers must actively write parallel code to keep gaining performance. In the age of power-efficient, multi-core architectures, responsibility for performance now rests with software developers.

Takeaways

- 😀 Moore's Law, proposed by Gordon Moore in the 1960s, predicted that the number of transistors on a device would double approximately every two years, which has largely held true for over 40 years.

- 😀 Hardware performance has risen steadily over time, tracking the doubling of transistor counts.

- 😀 Software developers have traditionally relied on hardware improvements for performance, often neglecting optimization because each new hardware generation compensated for inefficient code.

- 😀 Programming languages such as Java were designed for ease of use rather than low-level performance, implicitly leaving performance to the hardware.

- 😀 Power consumption became critical as performance increased: power rose sharply with each performance gain, producing what is called the 'power wall.'

- 😀 To address the power problem, Intel re-optimized its architecture, shortening its pipelines (fewer stages) and simplifying the design to reduce power consumption while maintaining performance.

- 😀 Performance and power consumption are now intimately linked, and modern hardware is designed with power optimization as a priority, requiring new approaches in architecture.

- 😀 Chip power can be reduced by lowering frequency and voltage, which becomes possible when work is spread across multiple cores instead of being pushed through a single core at a higher clock speed.

- 😀 Parallel computing has become essential in modern architecture, as using multiple cores allows for the same output at significantly lower power consumption.

- 😀 The industry, including Intel, AMD, Nvidia, IBM, and ARM, is moving towards using more cores on a single chip to achieve performance improvements while managing power consumption.

- 😀 The shift in hardware architecture means that software developers will have to take more responsibility for performance, particularly by adopting parallel computing, as automatic parallelism has not been successful.

Q & A

What is Moore's Law, and how has it influenced the semiconductor industry?

-Moore's Law, formulated by Gordon Moore in the 1960s, predicted that the number of transistors on a semiconductor device would double approximately every two years. It has set the pace of innovation in the semiconductor industry, and because more transistors have historically translated into more processing power, hardware performance has improved steadily alongside it.

How does the number of transistors relate to performance in the context of Moore's Law?

-Historically, more transistors meant more processing power. As transistor counts doubled under Moore's Law, performance rose with them, enabling faster and more capable devices.

What sociological impact has Moore's Law had on software development?

-Moore's Law has led to a mindset in software development where developers often rely on hardware to deliver performance. Over generations, programmers have been trained to prioritize software functionality without focusing heavily on optimizing for performance, as hardware would compensate for inefficiencies.

What is the 'power wall,' and how did it emerge in the semiconductor industry?

-The 'power wall' refers to the unsustainable increase in power consumption as performance improved. As seen in the transition from the Pentium Pro to the Pentium 4, performance improvements were accompanied by a significant rise in power usage, which was not feasible to continue. This problem led to architectural changes aimed at improving power efficiency.

How have semiconductor architectures evolved to address power concerns?

-To manage power concerns, modern architectures have been designed with fewer pipeline stages and simplified features. For instance, the Pentium M architecture focused on lowering power consumption by reducing complexity, which has influenced current architectures aiming for more energy-efficient designs.

How does the equation involving capacitance, voltage, and frequency help explain power consumption?

-The equation gives the dynamic power a circuit consumes in terms of its capacitance, supply voltage, and clock frequency: Power = Capacitance × Voltage² × Frequency (P = CV²f). Power grows linearly with capacitance and frequency but with the square of voltage, and because running at a higher frequency generally requires a higher voltage, raising the clock speed drives power up much faster than performance. Managing these variables together is therefore central to energy-efficient design.

What are the benefits of using multiple cores in processors from a power efficiency standpoint?

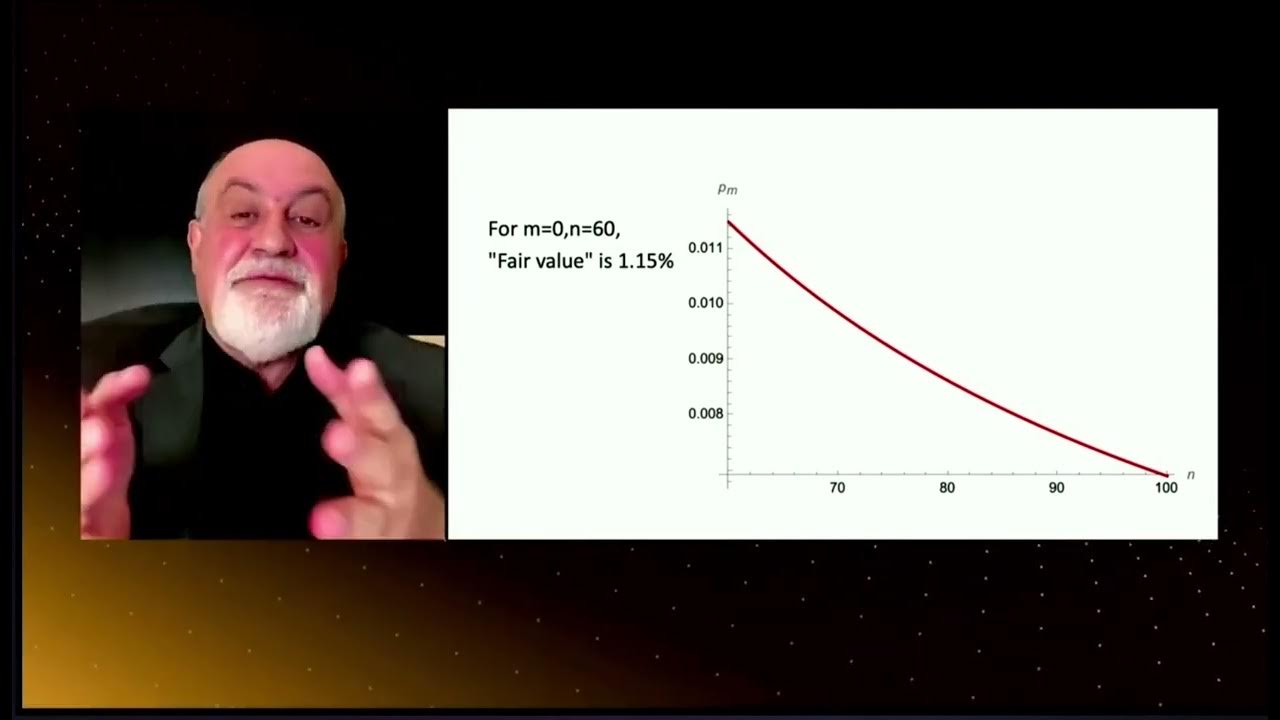

-Using multiple cores allows the same throughput to be achieved at a lower frequency, which in turn permits a lower supply voltage and therefore lower power. A study cited in the talk showed that splitting the work across two cores running at half the frequency delivers the same output at roughly 40% of the original power. This is a key reason why parallel computing has become essential in modern processors.

What is the role of parallel computing in today's hardware development?

-Parallel computing has become crucial because it allows for efficient use of multiple cores to handle tasks simultaneously, reducing power consumption while maintaining or improving performance. As processors with more cores are developed, software must adapt to make use of these cores effectively to achieve optimal performance.

Why is automatic parallelism unlikely to be a solution for software optimization?

-Automatic parallelism, in which compilers attempt to convert serial code into parallel code, has consistently failed to deliver despite several decades of research. Parallelizing code usually requires understanding which operations are independent and how best to divide the work, and compilers have generally been unable to work this out reliably from serial code on their own.

What does the phrase 'the free lunch is over' mean in the context of software and hardware?

-'The free lunch is over' refers to the idea that programmers can no longer rely solely on hardware advancements to improve performance. With the shift towards parallel computing and the power wall, software developers will now need to take active responsibility for optimizing their code to leverage hardware capabilities effectively.


Things every developer absolutely, positively needs to know about database indexing - Kai Sassnowski

Masa Lalu Adalah Pelajaran Yang Berharga - Eps. 6 #GuruTalks

Introduction

Why we love, why we cheat | Helen Fisher

MINI LECTURE 17: Maximum Ignorance Probability (a bit more technical)

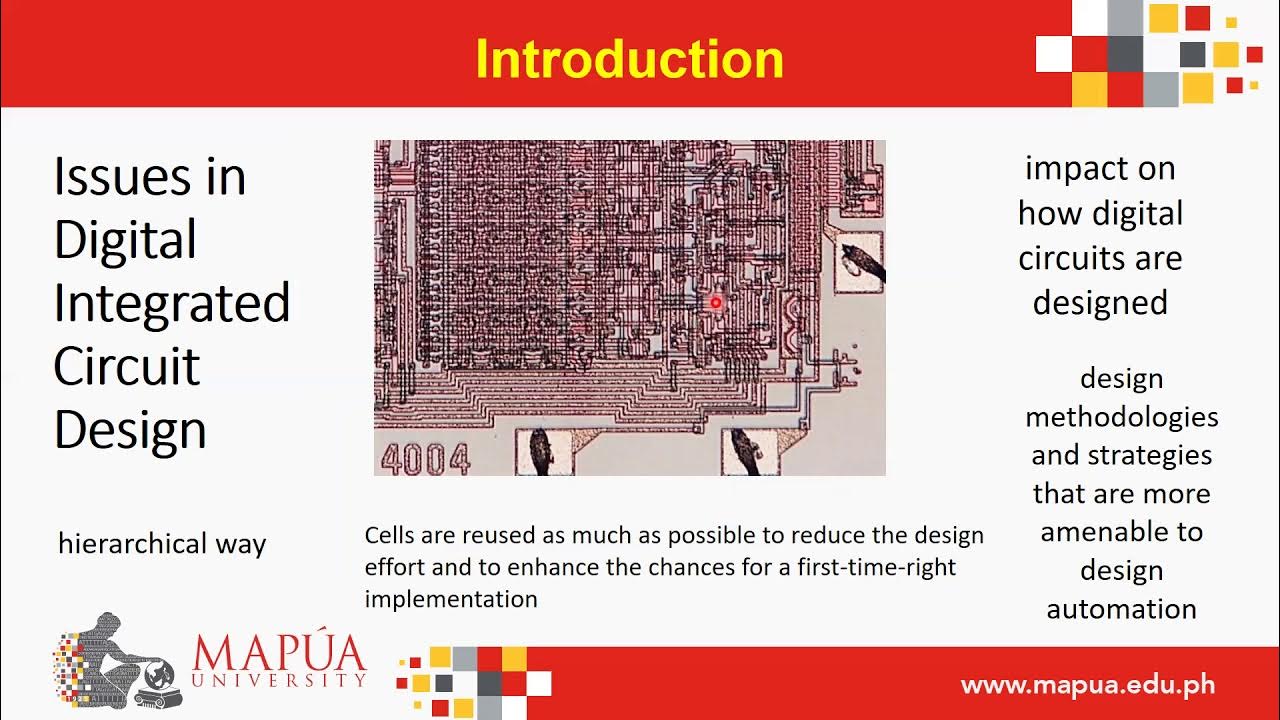

Introduction to Digital IC Design2

5.0 / 5 (0 votes)