Deep Learning(CS7015): Lec 1.3 The Deep Revival

Summary

TLDR

This transcript outlines the evolution of deep neural networks (DNNs) from 1989 to 2016. At first, practical training difficulties held DNNs back. Interest returned in 2006, when Hinton and Salakhutdinov showed how to train deep networks effectively. From 2009 to 2012, deep learning succeeded in areas such as handwriting and speech recognition, and the use of GPUs further accelerated progress. The pivotal moment came in 2012, when AlexNet, a convolutional neural network (CNN), won the ImageNet competition. By 2016, deep learning had transformed computer vision, natural language processing (NLP), and speech recognition, and it had been widely adopted.

Takeaways

- 😀 In 2006, Hinton and Salakhutdinov made a significant contribution to deep neural networks by introducing a technique for effectively training very deep networks, which revived interest in their potential for solving practical problems.

- 😀 The universal approximation theorem (1989) showed that neural networks can, in principle, approximate any continuous function. Even so, practical training difficulties limited their use from 1989 to 2006.

- 😀 From 2006 to 2009, research into unsupervised pre-training and improved network initialization, optimization, and regularization techniques contributed to overcoming deep network training issues.

- 😀 Between 2006 and 2009, deep neural networks gained popularity as solutions to challenges in training were found, rekindling interest in their practical applications.

- 😀 Starting in 2009, deep neural networks began to show notable successes in applications like handwriting recognition and speech recognition, outperforming existing systems.

- 😀 From around 2010, GPUs began to be used to train deep networks. They greatly reduced training and inference times, which made large-scale practical applications feasible.

- 😀 In 2010, deep neural networks set a new record on the MNIST handwritten digit recognition dataset, showing how effective they were becoming.

- 😀 Deep learning achieved a significant milestone in 2012 with AlexNet's success in the ImageNet competition, outperforming all other systems by a large margin.

- 😀 From 2012 to 2016, deep neural networks kept improving on ImageNet and in other fields, such as natural language processing and speech recognition. Over the same period, networks grew deeper and more accurate.

- 😀 By 2016, deep neural networks, particularly convolutional neural networks (CNNs), had begun to surpass human-level performance on image classification. Their error rates on the ImageNet classification task fell below the reported human error rate.

Q & A

What significant contribution did Hinton and Salakhutdinov make in 2006?

-Hinton and Salakhutdinov introduced an effective method for training very deep neural networks, which revived interest in deep learning and demonstrated its potential for solving practical problems.

Why were deep neural networks difficult to train prior to 2006?

-The training algorithm (backpropagation) already existed. However, practical difficulties such as limited computing power and large data requirements made it hard to train deep networks effectively.

How did unsupervised pre-training help in training deep neural networks?

-Unsupervised pre-training helped initialize deep neural networks more effectively, improving their ability to learn from data and making them more practical for real-world applications.

What role did GPUs play in the success of deep neural networks after 2010?

-GPUs allowed for significantly faster computation compared to CPUs, enabling more efficient training of deep neural networks and reducing the time required for both training and inference.

What was the impact of deep neural networks on handwriting recognition by 2009?

-In 2009, deep neural networks outperformed the other systems in a handwriting recognition competition on Arabic handwriting. This result demonstrated their strength on pattern recognition tasks.

What achievement did deep neural networks reach in speech recognition?

-Deep neural networks substantially reduced the error rates of existing speech recognition systems, which made those systems more accurate.

How did deep neural networks perform on the MNIST handwritten digit dataset in 2010?

-In 2010, deep neural networks set a new record for accuracy on the MNIST dataset, a popular dataset for handwritten digit recognition, further demonstrating their effectiveness in pattern recognition.

What was the significance of the ImageNet competition from 2012 onwards?

-The ImageNet competition became a pivotal event for deep learning, with the 2012 success of AlexNet, a convolutional neural network, outperforming all other systems by a wide margin and marking a turning point in the popularity of deep neural networks for image classification.

How did the depth of neural networks change from 2012 to 2016, and what were the results?

-From 2012 to 2016, network depth grew sharply, from 8 layers in AlexNet to 152 layers in later models such as ResNet. Error rates fell steadily and in some cases dropped below human-level error. These gains further established deep neural networks as central to computer vision.

What were the main fields that saw success from deep neural networks during the period from 2009 to 2016?

-From 2009 to 2016, deep neural networks achieved significant success in fields such as image classification, handwriting recognition, speech recognition, and natural language processing, with breakthroughs in each of these domains driving increased adoption of deep learning.


Neural Networks Explained: From 1943 Origins to Deep Learning Revolution 🚀 | AI History & Evolution

Deep Learning(CS7015): Lec 1.5 Faster, higher, stronger

Reaching Motion Planning with Vision-Based Deep Neural Networks for Dual Arm Robots

1. Pengantar Jaringan Saraf Tiruan

Tutorial 1- Introduction to Neural Network and Deep Learning

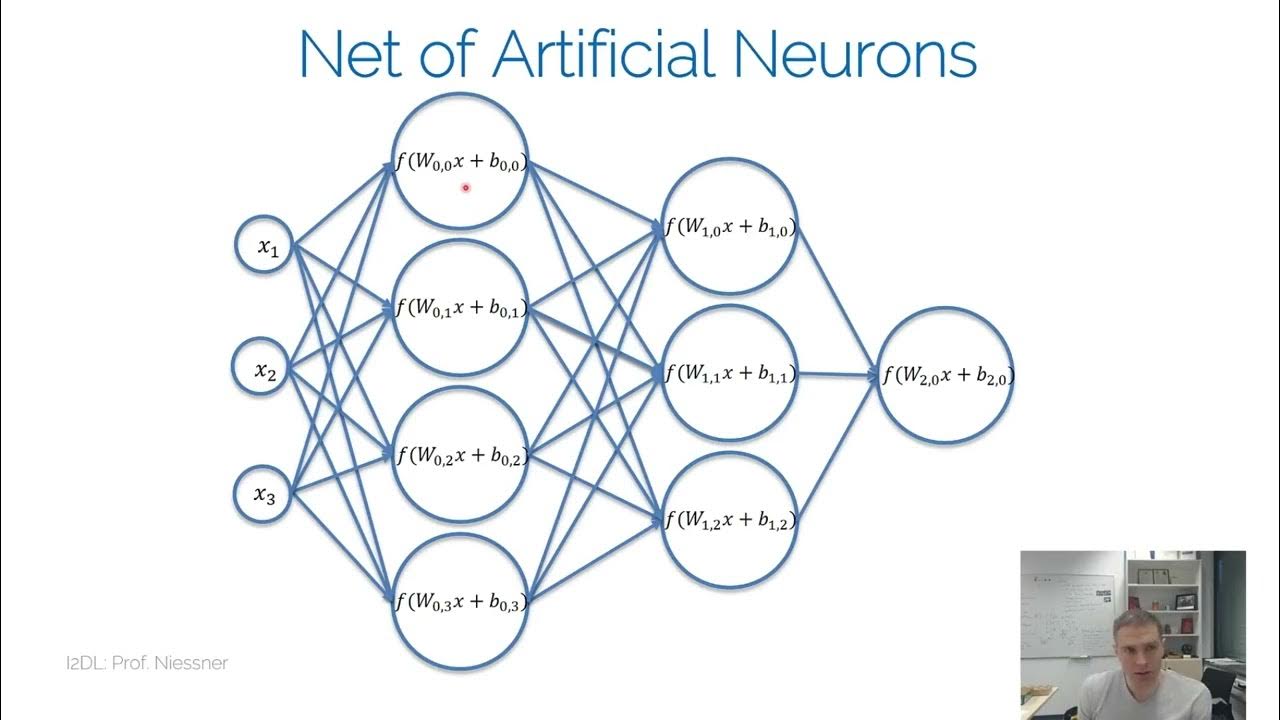

I2DL NN

5.0 / 5 (0 votes)