How to Prompt FLUX. The BEST ways for prompting FLUX.1 SCHNELL and DEV including T5 and CLIP.

Summary

TLDRThis video explores the differences between FLUX and Stable Diffusion's image generation processes, focusing on the two text encoders in FLUX: the standard clip encoder and the advanced T5 encoder, which uses natural language for more flexible prompts. It demonstrates how FLUX handles complex, multi-element prompts more effectively than SD 1.5, showing its ability to manage positioning, styles, and backgrounds. The video also covers practical tips for refining prompts, fixing errors, and enhancing creative control, offering a comparison between the SCHNELL and DEV models, and providing insights into using natural language prompts for optimal results.

Takeaways

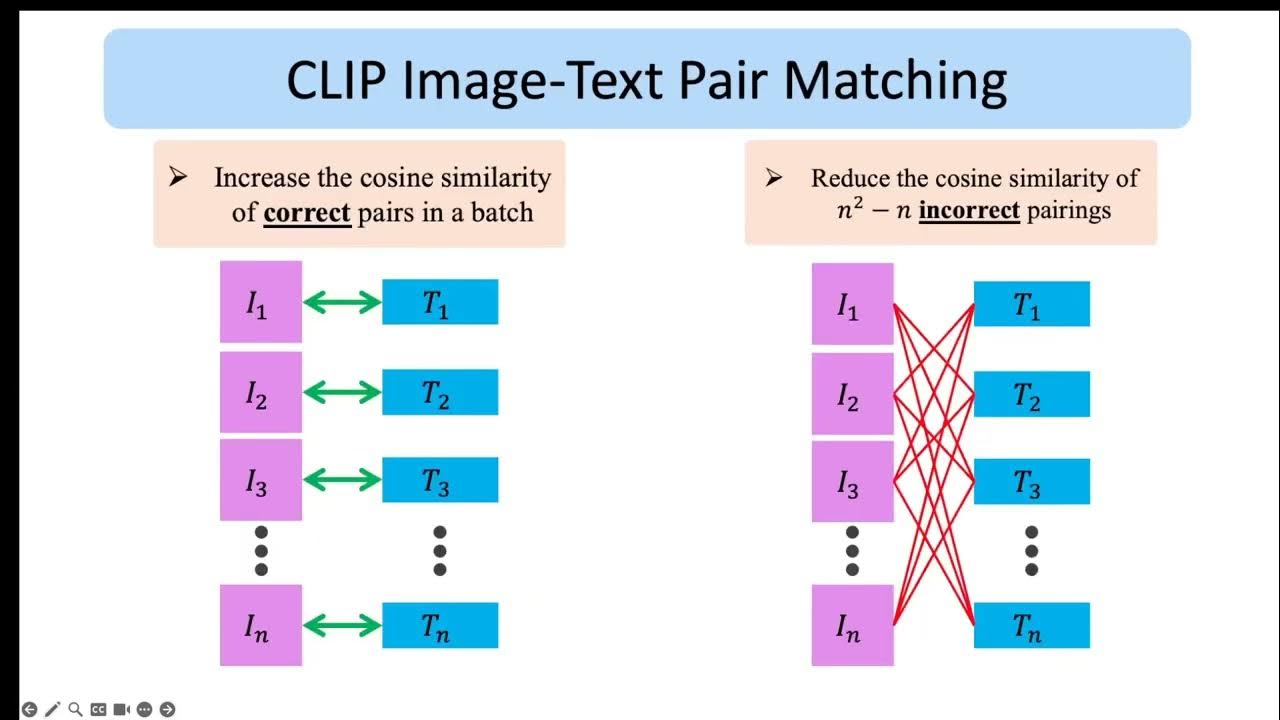

- 😀 FLUX utilizes a T5 text encoder (Text-to-Text Transformer) that interprets natural language, offering more flexibility than Stable Diffusion's CLIP encoder.

- 😀 FLUX supports token-based prompts like Stable Diffusion, but natural language-based prompts offer more control over the image generation process.

- 😀 The video demonstrates how FLUX can better adhere to specific creative directions, such as adjusting positions, lighting, and attributes compared to Stable Diffusion.

- 😀 Testing with a variety of subjects and backgrounds (e.g., a blonde woman in silver armor, dragons) shows FLUX's superior ability to handle complexity in images.

- 😀 Despite its strengths, FLUX’s T5 encoder still struggles with generating correct text in images, revealing some limitations in its prompt interpretation.

- 😀 SCHNELL, a FLUX model, performs particularly well for generating diverse creative styles, offering flexibility when experimenting with different artistic directions.

- 😀 DEV, another FLUX model, provides high-quality outputs but tends to favor specific styles (e.g., photorealism), limiting its adaptability to new styles.

- 😀 The video highlights the importance of adjusting prompts for error correction, such as repositioning objects or tweaking text elements for better accuracy.

- 😀 FLUX models do not support negative prompts directly, but you can rephrase negative instructions into positive prompts to work around this limitation.

- 😀 Using detailed prompts, including both subjects and their attributes/activities, is essential for generating accurate and controlled outputs in FLUX.

Q & A

What is the primary difference between FLUX's T5 encoder and Stable Diffusion's clip encoder?

-FLUX's T5 encoder uses natural language for generating prompts, offering more flexibility and control over the image output. In contrast, Stable Diffusion's clip encoder relies on token-based prompts, which may not provide the same level of creative control, especially with complex prompts.

How does FLUX perform when handling complex image generation prompts compared to Stable Diffusion?

-FLUX handles complex prompts, such as generating multiple characters and intricate scenes, more effectively than Stable Diffusion. For example, FLUX was able to generate a dragon pair without issues, while Stable Diffusion struggled with similar complexity.

What role does the SCHNELL model play in FLUX, and how does it compare to the DEV model?

-The SCHNELL model in FLUX is known for its versatility, handling a variety of creative styles well, and is recommended for users seeking flexibility in their image generation. The DEV model, while producing high-quality outputs, especially in photo-realistic styles, is less adaptable to different artistic styles compared to SCHNELL.

What is the impact of using natural language prompts in FLUX, and how does it compare to token-based prompts?

-Natural language prompts can yield good results in FLUX, but they require careful phrasing to avoid conflicting or underwhelming outcomes. While they can simplify the prompt creation process, token-based prompts tend to offer more precise control over the details and outcome, especially for complex compositions.

How does FLUX handle text in generated images, and what issues might arise?

-FLUX can generate text within images, but the text might not always be accurate or properly rendered, as seen in the example of the incorrect text on a shield. The prompt can be adjusted or placed in specific sections of the encoder to correct these issues.

What is the purpose of using brackets in prompts for FLUX, and do they affect the image output?

-Brackets are used in FLUX prompts primarily for readability and organization. Unlike in Stable Diffusion, where brackets might influence prompt weight, in FLUX, they don’t affect the output significantly but help structure and clarify complex prompts.

How does FLUX's T5 encoder perform with specific creative styles like black and white or anime art?

-FLUX's T5 encoder shows variable results with different creative styles. For example, when generating a black-and-white image with colored dragons, the result was desaturated instead of matching the intended style. However, it performed well with anime art, such as producing a drawing in the style of Shinji Aramaki.

Why does the video suggest using ChatGPT for extending prompts in FLUX?

-ChatGPT is recommended for extending prompts to add more detail and complexity. By asking ChatGPT to translate token-based prompts into more descriptive natural language, users can achieve longer and more detailed prompts, though results may vary depending on the accuracy of the language.

What is the recommended approach for fixing small errors in FLUX-generated images?

-Small errors, like incorrect text or missing details, can be fixed by rephrasing the prompt, adjusting the guidance parameters, or rearranging parts of the prompt. This can help refine the output without relying on more complex tools like control nets or inpainting.

What is the role of guidance values in FLUX, and how do they affect image generation?

-Guidance values in FLUX help control how closely the generated image follows the prompt. Higher values may lead to more accurate results, but they are more useful with models like DEV. For SCHNELL, however, guidance is not supported, meaning the image output is more dependent on the prompt's details and structure.

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

OpenAI CLIP model explained

ULTIMATE FREE UNCENSORED AI Model Workflow Is HERE! Start HERE!

Les codeurs optiques

Stable Diffusion 3.5 vs Flux 1: Free Access, Review, Comparison || ULTIMATE SHOWDOWN

What is an Encoder

Text to Image generation using Stable Diffusion || HuggingFace Tutorial Diffusers Library

5.0 / 5 (0 votes)