But How Does ChatGPT Actually Work?

Summary

TL;DR: This video explains how ChatGPT works, focusing on its underlying technologies: natural language processing (NLP), machine learning techniques such as supervised learning and reinforcement learning, and the Transformer architecture. It covers the NLP steps that help a model understand and generate human-like text, such as tokenization, stemming, and part-of-speech tagging. The video also describes how ChatGPT is trained. It is first pre-trained on vast amounts of text, then fine-tuned with human feedback and reinforcement learning, improving through trial and error. The speaker highlights ChatGPT's potential to transform technology and art, ushering in a new era of AI-driven innovation.

Takeaways

- 😀 ChatGPT reached 1 million users in just 5 days, making it one of the most talked-about tech topics right now.

- 😀 ChatGPT is based on Generative Pre-trained Transformers (GPT), which are a type of natural language processing (NLP) model developed by OpenAI.

- 😀 NLP is the field of AI that enables machines to understand, interpret, and generate human language, involving techniques like tokenization and segmentation.

- 😀 Tokenization breaks text down into smaller units, or tokens (words or parts of words), which the model can then process as discrete inputs.

- 😀 A common step in traditional NLP pipelines is removing stopwords, such as 'about' and 'the', which contribute little to the meaning of a sentence.

- 😀 Stemming and lemmatization help reduce words to their root or base form, making it easier for the model to generalize and recognize patterns.

- 😀 ChatGPT is built on the **Transformer** architecture. The original Transformer has an encoder that processes the input and a decoder that generates output; GPT models use only the decoder, which generates human-like responses one token at a time.

- 😀 Transformers utilize a self-attention mechanism, allowing the model to focus on relevant parts of the input to produce contextually accurate outputs.

- 😀 ChatGPT was trained with supervised learning, a reward model, and reinforcement learning. Human trainers ranked model responses from best to worst, and those rankings were used to train the reward model.

- 😀 Reinforcement learning enables the model to improve over time through trial and error, learning from rewards and penalties, much like a dog learning a command.

- 😀 ChatGPT's development marks a breakthrough in AI, with the potential to transform industries like business, technology, and art in the coming years.

Q & A

What is ChatGPT and why is it so popular?

-ChatGPT is a generative pre-trained transformer (GPT) developed by OpenAI. It has gained popularity due to its ability to generate human-like text and provide valuable assistance across various tasks, from answering questions to content generation. It reached a record 1 million users in its first five days of existence.

What does the term 'Generative Pre-trained Transformer' mean?

-'Generative' means the model can produce or generate text, 'Pre-trained' indicates the model was trained on vast amounts of data before deployment, and 'Transformer' refers to the architecture that enables efficient processing of language by focusing on important aspects of the input.

What is Natural Language Processing (NLP)?

-Natural Language Processing (NLP) is a subfield of artificial intelligence that enables computers to understand, interpret, and generate human language. It's used in various applications like autocorrect, plagiarism detection, and chatbots like ChatGPT.

How does a computer understand human language using NLP?

-Computers don't inherently understand human language. NLP breaks language down into smaller units (tokens), normalizes them through steps such as stemming and lemmatization, and uses the resulting patterns to model the meaning and structure of the input.
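
Because a computer only works with numbers, a first step is mapping text to tokens and tokens to integer IDs. The sketch below is a minimal illustration under stated assumptions: a simple regex word splitter and a vocabulary built on the fly. The function names `tokenize`, `build_vocab`, and `encode` are hypothetical; GPT models instead use a learned subword vocabulary.

```python
import re

def tokenize(text):
    # Lowercase and split into word and punctuation tokens.
    # Assumption: a simple regex rule, not the subword tokenizer GPT models use.
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(tokens):
    # Assign each distinct token an integer ID in order of first appearance.
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

def encode(tokens, vocab):
    # Replace every token with its integer ID so the text becomes numbers.
    return [vocab[tok] for tok in tokens]

tokens = tokenize("The cat sat on the mat.")
vocab = build_vocab(tokens)
ids = encode(tokens, vocab)
print(tokens)  # ['the', 'cat', 'sat', 'on', 'the', 'mat', '.']
print(ids)     # [0, 1, 2, 3, 0, 4, 5]
```

Note that both occurrences of "the" share ID 0: the model sees repeated words as the same symbol, which is what lets it learn patterns across sentences.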

What are tokens in NLP?

-Tokens are the individual units of a text, typically words or parts of words. In NLP, text is split into tokens so that it can be processed step by step. For instance, "I am learning NLP" becomes the tokens 'I', 'am', 'learning', and 'NLP'; a later stemming step might then reduce 'learning' to 'learn'.
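
To show what "parts of words" can look like, here is a sketch of greedy longest-match-first subword splitting, the inference rule used by WordPiece-style tokenizers. This is a swapped-in illustration: GPT models use byte-pair encoding with a vocabulary learned from data, and the `toy_vocab` here is entirely hypothetical.

```python
def subword_tokenize(word, vocab):
    """Split one word into subword pieces by greedy longest-match-first.

    `vocab` is a hypothetical toy vocabulary; real tokenizers learn theirs
    from large amounts of text.
    """
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        end = len(word)
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # No vocabulary entry matches: fall back to a single character.
            end = start + 1
        pieces.append(word[start:end])
        start = end
    return pieces

toy_vocab = {"learn", "ing", "token", "ization", "un", "believ", "able"}
print(subword_tokenize("learning", toy_vocab))      # ['learn', 'ing']
print(subword_tokenize("tokenization", toy_vocab))  # ['token', 'ization']
print(subword_tokenize("unbelievable", toy_vocab))  # ['un', 'believ', 'able']
```

The single-character fallback means no input is ever "unknown": rare words simply break into smaller pieces.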

What are the key steps involved in the NLP process?

-The key steps in a traditional NLP pipeline include segmentation (splitting text into sentences), tokenization (splitting sentences into tokens), removing stop words, stemming or lemmatization (reducing words to their base forms), part-of-speech tagging (assigning grammatical roles such as noun or verb), and named entity recognition (identifying proper nouns and entities such as people, places, and organizations).
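
The first five steps can be sketched in a few lines of plain Python. This is a toy version under loud assumptions: the stop word list, the suffix rules, and the lemma dictionary are hypothetical and tiny, and real libraries (for example NLTK or spaCy) use far larger resources and proper algorithms. Part-of-speech tagging and named entity recognition are omitted because they need trained models or large lexicons.

```python
import re

# Hypothetical, tiny resources for illustration only.
STOP_WORDS = {"a", "about", "the", "is", "are", "was", "of", "to", "and"}
SUFFIXES = ("ing", "ed", "es", "s")            # crude suffix-stripping rules
LEMMAS = {"mice": "mouse", "studies": "study", "better": "good"}

def segment(text):
    # Segmentation: split text into sentences after ., ! or ? plus whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def tokenize(sentence):
    # Tokenization: lowercase word tokens, punctuation dropped.
    return re.findall(r"[a-z]+", sentence.lower())

def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]

def stem(token):
    # Stemming: chop the first matching suffix, keeping at least 3 characters.
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def lemmatize(token):
    # Lemmatization: look up the dictionary base form, else keep the token.
    return LEMMAS.get(token, token)

text = "The mice are learning about tokens. She studies models!"
results = []
for sentence in segment(text):
    tokens = remove_stop_words(tokenize(sentence))
    stems = [stem(t) for t in tokens]
    lemmas = [lemmatize(t) for t in tokens]
    results.append((stems, lemmas))
    print(stems, lemmas)
# ['mice', 'learn', 'token'] ['mouse', 'learning', 'tokens']
# ['she', 'studi', 'model'] ['she', 'study', 'models']
```

The output shows the usual trade-off: stemming is rule-based and can produce non-words like 'studi', while lemmatization returns real base forms ('study', 'mouse') but needs a dictionary.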

What is a Transformer model in AI?

-A Transformer is a deep learning model architecture introduced in 2017. It uses a self-attention mechanism to process sequences of data, allowing the model to focus on the most relevant parts of the input for generating accurate output. It's highly effective for tasks like language processing.
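
The self-attention mechanism itself is a short formula, softmax(Q·Kᵀ / √d_k)·V, where the queries Q, keys K, and values V are all projected from the same input. Below is a minimal single-head sketch in pure Python. It assumes toy identity projection matrices and omits the multiple heads, causal masking, and learned weights that a real GPT model uses.

```python
import math

def matmul(a, b):
    # (n x k) @ (k x m) for lists of lists.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)                       # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention on input vectors x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Score every query position against every key position.
    scores = [[sum(qi * ki for qi, ki in zip(q_row, k_row)) / math.sqrt(d_k)
               for k_row in k] for q_row in q]
    weights = [softmax(row) for row in scores]   # each row sums to 1
    # Each output vector is a weighted average of the value vectors.
    return matmul(weights, v), weights

# Three toy token vectors of dimension 2; identity projections (an assumption).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

In the printed weights, the first token attends equally to itself and to the third token (both share its first feature) and less to the second, which is the "focus on the most relevant parts of the input" described above.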

How does the Transformer model work in ChatGPT?

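-The original Transformer pairs an encoder, which converts the input sequence into numerical representations, with a decoder, which generates the output sequence from those representations. GPT models, including those behind ChatGPT, use only the decoder: the prompt and the response generated so far are processed together, and the model predicts the next token one step at a time. Self-attention lets each step draw on the relevant context in the input while the response is being generated.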
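
The "one token at a time" loop can be sketched independently of the network itself. In this hypothetical example, a hard-coded score table (`NEXT_TOKEN_SCORES`) stands in for a trained decoder, and decoding is greedy (always pick the top-scoring token). A real GPT model conditions on the whole prefix through masked self-attention and usually samples rather than always taking the maximum.

```python
# Hypothetical stand-in for a trained decoder: scores for the next token given
# only the previous token. A real model looks at the entire prefix.
NEXT_TOKEN_SCORES = {
    "<start>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"dog": 1.0},
    "cat": {"sat": 0.7, "<end>": 0.3},
    "dog": {"barked": 1.0},
    "sat": {"<end>": 1.0},
    "barked": {"<end>": 1.0},
}

def next_token(prefix):
    # Unknown contexts end the sequence; otherwise pick the highest score.
    scores = NEXT_TOKEN_SCORES.get(prefix[-1], {"<end>": 1.0})
    return max(scores, key=scores.get)

def generate(prompt, max_new_tokens=10):
    tokens = ["<start>"] + list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(tokens)
        if tok == "<end>":
            break
        tokens.append(tok)  # the new token becomes input for the next step
    return tokens[1:]

print(generate([]))     # ['the', 'cat', 'sat']
print(generate(["a"]))  # ['a', 'dog', 'barked']
```

The key point is the feedback loop: each generated token is appended to the input before the next prediction, which is how a decoder-only model produces a full response from a prompt.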

What is the role of reinforcement learning in training ChatGPT?

-Reinforcement learning is used to fine-tune ChatGPT by rewarding the model for generating high-quality responses, as scored by a reward model trained on human rankings. Just as a dog learns through rewards and punishments, the model improves by favoring actions that earn higher rewards, such as giving more accurate and helpful answers.
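
Learning by trial and error from rewards can be illustrated with the simplest reinforcement learning setting, an epsilon-greedy multi-armed bandit. This is a swapped-in toy technique, not the method used for ChatGPT (OpenAI describes using Proximal Policy Optimization with a learned reward model). The three "actions" and their hidden average rewards are hypothetical.

```python
import random

def run_bandit(true_rewards, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: learn which action earns the most reward.

    `true_rewards` are hypothetical average rewards the agent cannot see;
    it only observes noisy samples after each try.
    """
    rng = random.Random(seed)
    n = len(true_rewards)
    estimates = [0.0] * n   # running average reward per action
    counts = [0] * n
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                            # explore
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit
        reward = true_rewards[action] + rng.gauss(0, 0.1)        # noisy feedback
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = run_bandit([0.2, 0.5, 0.8])
best = max(range(3), key=lambda a: estimates[a])
print(best, counts)
```

Like the dog in the analogy, the agent mostly repeats the behavior that has paid off best so far, but keeps occasionally trying alternatives (the `epsilon` fraction) so it can discover something better.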

How was ChatGPT initially trained before reinforcement learning was applied?

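-After pre-training, ChatGPT was first fine-tuned with supervised learning: human AI trainers wrote example conversations in which they played both the user and the assistant, and the model learned from these demonstrations. Humans then ranked several model responses to the same prompt from best to worst, and these rankings were used to train a reward model, which guides the reinforcement learning stage.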
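
One common way to turn best-to-worst rankings into a training signal for a reward model is to split each ranking into (preferred, rejected) pairs and minimize −log σ(r_preferred − r_rejected), the pairwise loss described for InstructGPT-style reward models. The sketch below is hedged: the response names and reward scores are hypothetical, and in practice the scores come from a neural network whose weights are updated to reduce this loss.

```python
import math
from itertools import combinations

def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst human ranking into (preferred, rejected) pairs."""
    # combinations preserves input order, so the first item of each pair
    # is always the higher-ranked response.
    return list(combinations(ranked_responses, 2))

def pairwise_loss(reward, pairs):
    """Average of -log(sigmoid(r_preferred - r_rejected)) over all pairs."""
    total = 0.0
    for preferred, rejected in pairs:
        diff = reward[preferred] - reward[rejected]
        total += math.log1p(math.exp(-diff))  # equals -log(sigmoid(diff))
    return total / len(pairs)

ranking = ["answer_B", "answer_A", "answer_C"]  # hypothetical: B best, C worst
pairs = ranking_to_pairs(ranking)
agrees = {"answer_B": 2.0, "answer_A": 1.0, "answer_C": -1.0}
disagrees = {"answer_B": -1.0, "answer_A": 1.0, "answer_C": 2.0}
print(pairwise_loss(agrees, pairs) < pairwise_loss(disagrees, pairs))  # True
```

Scores that order the responses the same way the humans did give a low loss, so training pushes the reward model toward reproducing human preferences; that learned scorer then supplies the rewards in the reinforcement learning stage.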

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

U6-30 V5 Maschinelles Lernen Algorithmen V2

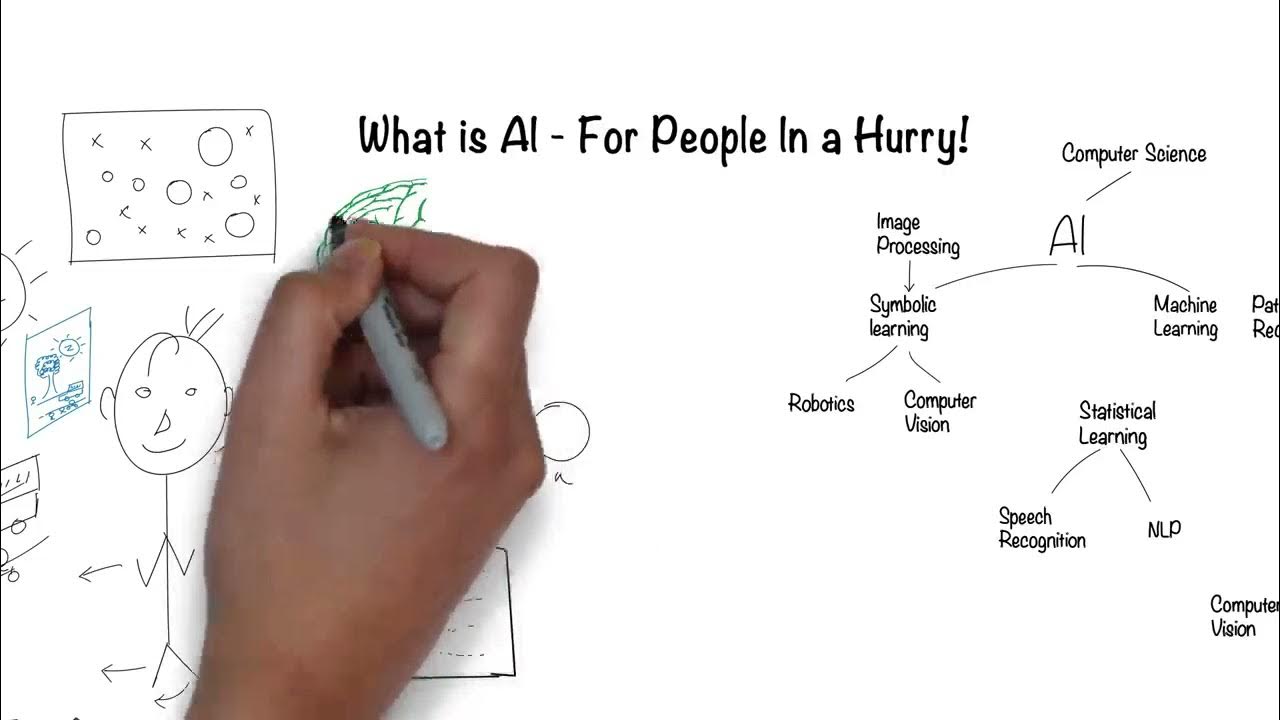

Artificial Intelligence (AI) for People in a Hurry

So How Does ChatGPT really work? Behind the screen!

M. Faris Al Hakim, S.Pd., M.Cs. - Implementasi Kecerdasan Buatan

The History of Natural Language Processing (NLP)

Pengenalan Natural Language Processing dan Penerapannya | E-Learning AI

5.0 / 5 (0 votes)