Neural Networks Part 5: ArgMax and SoftMax

Summary

TLDR: In this StatQuest episode, Josh Starmer explains the arg max and softmax functions in neural networks. He highlights how arg max selects the highest output for easy interpretation but cannot be used for backpropagation due to its constant outputs. In contrast, softmax converts raw outputs into probabilities that sum to 1, preserving their order and enabling effective training through usable derivatives. While softmax is used for training, arg max is utilized for classification. The video also sets the stage for a future discussion on cross-entropy as a fitting measure for models using softmax.

Takeaways

- 😀 Following this episode requires familiarity with backpropagation and with neural networks that have multiple output nodes.

- 🌱 Raw output values from a neural network can be greater than 1 or less than 0, making interpretation challenging.

- 🏴‍☠️ The Argmax function simplifies output interpretation by setting the highest value to 1 and others to 0.

- ❌ Argmax is not suitable for optimizing weights and biases during backpropagation because its derivative is either zero or undefined, so it provides no useful gradient.

- 🧸 The Softmax function transforms raw outputs into probabilities that sum to 1, ensuring all outputs are between 0 and 1.

- 🔄 Softmax retains the order of raw output values, making it easier to interpret predictions.

- 📈 Softmax allows for the calculation of useful derivatives for backpropagation, enabling effective training of neural networks.

- ⚖️ Predicted probabilities from Softmax should be treated with caution, as they depend on initial random weights and biases.

- 🔍 Cross-entropy is often used as a loss function when employing Softmax, replacing the sum of squared residuals.

- 🎓 The video encourages viewers to explore further content on neural networks, including detailed explanations of derivatives.

Q & A

What is the primary focus of Part 5 of StatQuest's Neural Networks series?

-Part 5 explains the arg max and softmax functions in neural networks, particularly their roles in classifying outputs and in training.

What is arg max, and how does it function in neural networks?

-Arg max identifies the highest output value from a neural network and assigns it a value of 1, while all other values are set to 0. This makes it easy to interpret the predicted class, but it cannot be used for optimizing weights and biases during training.
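
A minimal Python sketch of the arg max step, assuming the raw outputs arrive as a plain list of floats. The function name `argmax_one_hot` and the numbers are illustrative, not taken from the video.

```python
def argmax_one_hot(raw_outputs):
    """Return 1 for the largest raw output and 0 for every other output."""
    # Index of the largest value; on a tie, max() keeps the first one it sees.
    best = max(range(len(raw_outputs)), key=lambda i: raw_outputs[i])
    return [1 if i == best else 0 for i in range(len(raw_outputs))]


# Illustrative raw output values for three classes.
raw = [1.43, -0.40, 0.23]
print(argmax_one_hot(raw))  # [1, 0, 0]
```

The class with the 1 is the predicted class, which is why arg max is convenient for classification once a network has been trained.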

Why are the raw output values from a neural network sometimes hard to interpret?

-Raw output values can fall outside the range of 0 to 1, including negative values, so they cannot be read directly as probabilities. This is why functions like arg max and softmax are used to turn these outputs into something easier to interpret.

What are the limitations of using arg max for training neural networks?

-Arg max outputs only the constants 0 and 1, so its derivative is zero everywhere except at the points where the largest raw output changes, where it is undefined. Without a usable gradient, backpropagation cannot optimize the weights and biases.
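
To see the problem numerically, the sketch below nudges one raw output by a tiny amount and measures how much each function's output moves (a central finite-difference estimate of the derivative). The helper names and raw values are hypothetical; the point is only that arg max reports no change while softmax does.

```python
import math


def argmax_one_hot(z):
    # 1.0 for the largest raw output, 0.0 for the rest.
    best = max(range(len(z)), key=lambda i: z[i])
    return [1.0 if i == best else 0.0 for i in range(len(z))]


def softmax(z):
    # Subtracting the max avoids overflow and cancels out in the ratio.
    largest = max(z)
    exps = [math.exp(v - largest) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def numeric_partial(f, z, out_index, in_index, h=1e-6):
    """Central finite-difference estimate of d f(z)[out_index] / d z[in_index]."""
    up = list(z)
    down = list(z)
    up[in_index] += h
    down[in_index] -= h
    return (f(up)[out_index] - f(down)[out_index]) / (2 * h)


raw = [1.43, -0.40, 0.23]  # illustrative raw output values
print(numeric_partial(argmax_one_hot, raw, 0, 0))  # 0.0
print(numeric_partial(softmax, raw, 0, 0))         # about 0.216
```

Arg max returns exactly the same output before and after the nudge, so its estimate is 0; softmax responds smoothly, so gradient descent has something to follow.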

How does the softmax function differ from arg max?

-Softmax transforms raw output values into a probability distribution that sums to 1, ensuring all output values are between 0 and 1. Unlike arg max, softmax provides useful gradients for optimization during training.

What is the mathematical approach to calculating softmax outputs?

-Each softmax output is e raised to the corresponding raw output value, divided by the sum of e raised to every raw output value.
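
In symbols, for raw outputs z_1, ..., z_n, softmax_i = e^(z_i) / (e^(z_1) + ... + e^(z_n)). The Python sketch below implements this directly. Subtracting the largest raw value before exponentiating is a common numerical-stability trick added here, not something from the video; it cancels out in the ratio, so the results are unchanged. The raw values are illustrative.

```python
import math


def softmax(raw_outputs):
    """Convert raw output values into probabilities that sum to 1."""
    # Subtracting the largest value does not change the result (it cancels in
    # the ratio) but keeps math.exp from overflowing on large raw outputs.
    largest = max(raw_outputs)
    exps = [math.exp(v - largest) for v in raw_outputs]
    total = sum(exps)
    return [e / total for e in exps]


raw = [1.43, -0.40, 0.23]  # illustrative raw output values
print([round(p, 2) for p in softmax(raw)])  # [0.68, 0.11, 0.21]
```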

What are the three main properties of softmax output values?

-Softmax outputs preserve the original ranking of the raw outputs, all lie between 0 and 1, and sum to 1, which lets them be interpreted as predicted probabilities.
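
A quick check of all three properties on an arbitrary set of raw outputs, including a negative one. The `softmax` and `ranking` helpers are a sketch written for this example, not any library's API.

```python
import math


def softmax(raw_outputs):
    # e^(z_i) / sum_j e^(z_j), with the max subtracted for numerical safety.
    largest = max(raw_outputs)
    exps = [math.exp(v - largest) for v in raw_outputs]
    total = sum(exps)
    return [e / total for e in exps]


def ranking(values):
    """Indices of values ordered from largest to smallest."""
    return sorted(range(len(values)), key=lambda i: values[i], reverse=True)


raw = [-2.5, 3.1, 0.0, 1.7]  # illustrative raw outputs
probs = softmax(raw)

assert ranking(probs) == ranking(raw)     # 1. order is preserved
assert all(0.0 < p < 1.0 for p in probs)  # 2. every value lies between 0 and 1
assert math.isclose(sum(probs), 1.0)      # 3. values sum to 1
print([round(p, 3) for p in probs])
```

The ranking is preserved because e^x is strictly increasing, so a larger raw output always yields a larger numerator over the same denominator.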

Why should we be cautious about trusting predicted probabilities from softmax?

-Predicted probabilities should be treated cautiously because they depend not only on input values but also on the randomly selected initial weights and biases. Different initializations can yield different outputs and probabilities.
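
A simplified sketch of this point: the same input is passed through two untrained single-layer networks whose weights and biases come from different random seeds. The layer shape, the Gaussian initialization, and the names `random_untrained_layer` and `predict` are all hypothetical, and nothing is trained here; the sketch only shows that the softmax probabilities depend on the weights and biases, not just on the input.

```python
import math
import random


def softmax(raw_outputs):
    largest = max(raw_outputs)
    exps = [math.exp(v - largest) for v in raw_outputs]
    total = sum(exps)
    return [e / total for e in exps]


def random_untrained_layer(seed, n_inputs=2, n_outputs=3):
    """Hypothetical single-layer network with randomly initialized weights and biases."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(n_inputs)] for _ in range(n_outputs)]
    biases = [rng.gauss(0.0, 1.0) for _ in range(n_outputs)]
    return weights, biases


def predict(inputs, weights, biases):
    # Raw output for each class: weighted sum of the inputs plus a bias.
    raw = [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]
    return softmax(raw)


x = [0.5, 0.8]  # the same illustrative input for both networks
for seed in (0, 1):
    w, b = random_untrained_layer(seed)
    print(seed, [round(p, 2) for p in predict(x, w, b)])
```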

What role does the derivative of the softmax function play in training neural networks?

-The derivative of softmax is not always zero, so backpropagation can use it to adjust the weights and biases during training and move them toward better values.
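
The standard closed form for the softmax derivative, writing S for the softmax outputs and z for the raw outputs, is dS_i/dz_j = S_i * (1 - S_i) when i = j and -S_i * S_j otherwise. The Python sketch below builds this matrix and checks it against a finite-difference estimate; the helper names and raw values are illustrative.

```python
import math


def softmax(raw_outputs):
    largest = max(raw_outputs)
    exps = [math.exp(v - largest) for v in raw_outputs]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_jacobian(raw_outputs):
    """J[i][j] = dS_i/dz_j = S_i * (1 - S_i) if i == j else -S_i * S_j."""
    s = softmax(raw_outputs)
    n = len(s)
    return [[s[i] * ((1.0 if i == j else 0.0) - s[j]) for j in range(n)] for i in range(n)]


def numeric_jacobian(raw_outputs, h=1e-6):
    """Central finite-difference approximation of the same matrix."""
    n = len(raw_outputs)
    jac = [[0.0] * n for _ in range(n)]
    for j in range(n):
        up = list(raw_outputs)
        down = list(raw_outputs)
        up[j] += h
        down[j] -= h
        s_up, s_down = softmax(up), softmax(down)
        for i in range(n):
            jac[i][j] = (s_up[i] - s_down[i]) / (2 * h)
    return jac


raw = [1.43, -0.40, 0.23]  # illustrative raw output values
analytic = softmax_jacobian(raw)
numeric = numeric_jacobian(raw)
print([[round(v, 3) for v in row] for row in analytic])
```

Every entry is nonzero for finite raw outputs, which is exactly what backpropagation needs; each column sums to 0 because the outputs always sum to 1.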

What will be discussed in the next StatQuest segment following Part 5?

-The next segment will cover cross entropy, a metric used to evaluate how well a neural network fits the data, especially when softmax outputs are involved.
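
As a preview, and assuming the common single-sample form: cross-entropy with softmax is the negative log of the probability assigned to the observed class, so confident correct predictions give a small loss. The function `cross_entropy` and the values are illustrative.

```python
import math


def softmax(raw_outputs):
    largest = max(raw_outputs)
    exps = [math.exp(v - largest) for v in raw_outputs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(raw_outputs, observed_class):
    """Single-sample cross-entropy: -log of the softmax probability of the observed class."""
    return -math.log(softmax(raw_outputs)[observed_class])


raw = [1.43, -0.40, 0.23]  # illustrative raw output values
print(round(cross_entropy(raw, 0), 3))  # smaller loss: class 0 has the largest probability
print(round(cross_entropy(raw, 1), 3))  # larger loss: class 1 has a small probability
```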

Browse more related videos

Neural Networks Part 8: Image Classification with Convolutional Neural Networks (CNNs)

Recurrent Neural Networks (RNNs), Clearly Explained!!!

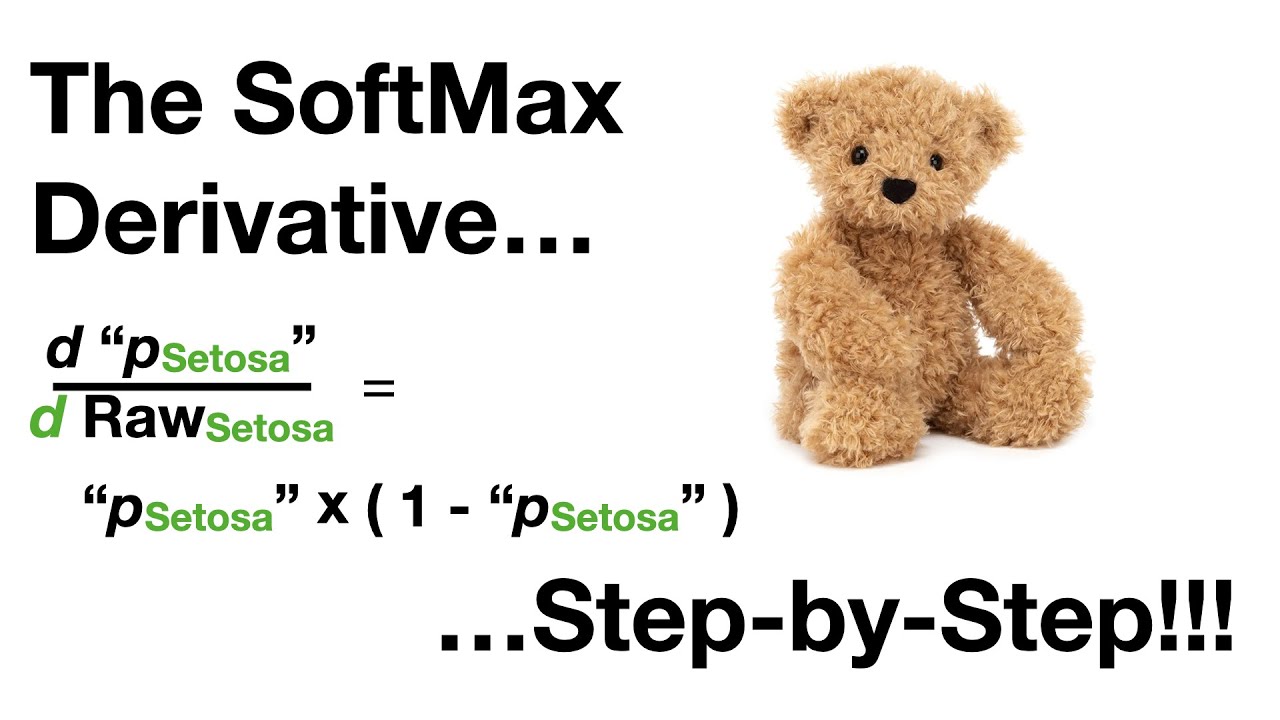

The SoftMax Derivative, Step-by-Step!!!

Neural Networks Pt. 2: Backpropagation Main Ideas

Long Short-Term Memory (LSTM), Clearly Explained

Attention for Neural Networks, Clearly Explained!!!
