ESPN.com discusses IBM WebSphere eXtreme Scale at Impact 2011

Summary

TLDR: This video explains how ESPN.com sustains more than 10,000 requests per second during major events, and why web page caching alone cannot serve personalized pages to millions of users. The personalization system is backed by a relational database, the 'Personalization DB', and fronted by an in-memory grid built on IBM WebSphere eXtreme Scale that responds in under a millisecond. The grid runs on 10 commodity servers with 32 GB of RAM each and holds data for more than 10 million users with redundancy. Servers can be added or removed while the system is running, so it keeps performing under heavy load. The whole system was built in about three months.

Takeaways

- 🚀 ESPN handles over 10,000 requests per second continuously during events.

- 💾 Caching is critical for scaling, but it doesn't work well for personalized content.

- 📊 ESPN has over 10 million users, with about 5KB of data stored per user on average.

- 🗃️ Storing that user data with redundancy takes around 200GB.

- 🌐 Personalization works across multiple platforms, including ESPN.com, mobile sites, and partner websites like Soccernet.com.

- 🧩 The personalization system is built on a relational database paired with an in-memory grid, using IBM WebSphere eXtreme Scale for fast responses.

- ⚡ The grid provides sub-millisecond response times, ensuring personalization happens almost instantly.

- 💻 The grid runs on 10 commodity servers, each with 32GB of RAM, using 20GB per server for a total of 200GB of memory.

- 🔄 ESPN can dynamically add or remove servers at runtime without disrupting service, ensuring scalability and fault tolerance.

- ⏱️ The system was built, tested, and deployed in just three months, with the legal process taking longer than the technical implementation.

Q & A

How many requests per second does ESPN.com handle during events?

-ESPN.com handles over 10,000 requests per second, and this rate is sustained for hours during major events.

What challenge does ESPN.com face with personalized content?

-Web page caching, which is critical for scaling, doesn't work as effectively for personalized content.
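A page cache works because many visitors receive identical HTML. When every visitor's page differs, the cache key would have to include the user, and the hit rate collapses. A common workaround is to cache the shared part of a page and assemble only a small per-user fragment on each request. The minimal Python sketch below shows this split. The function names and HTML are hypothetical, and the video does not say that ESPN splits its pages exactly this way.

```python
from typing import Dict, List

# Hypothetical page cache: one entry per URL, shared by every visitor.
page_cache: Dict[str, str] = {}


def render_shared(url: str) -> str:
    """Stand-in for the expensive page build that caching avoids."""
    return f"<main>headlines for {url}</main>"


def get_shared_page(url: str) -> str:
    # Build once per URL, then serve the same HTML to all users.
    if url not in page_cache:
        page_cache[url] = render_shared(url)
    return page_cache[url]


def render_personalized(url: str, favorites: List[str]) -> str:
    # The shared body comes from the cache; only the small per-user
    # fragment is assembled on every request.
    teams = ", ".join(favorites) or "none"
    return get_shared_page(url) + f"<aside>Your teams: {teams}</aside>"
```

Two users viewing the same URL share one cache entry, even though each of them sees different favorites.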

How much data does ESPN need to handle for personalized content?

-ESPN plans to store data for over 10 million users at an average of 5 kilobytes each. That comes to about 50 gigabytes of raw data, or roughly 200 gigabytes once redundancy is included.
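The storage estimate works out as shown in the arithmetic below. This worked example assumes decimal units (1 KB = 1,000 bytes). It uses the grid size quoted for the hardware: 10 servers with 20 GB of RAM each set aside for user data. The source does not say how the extra capacity divides between replicas and per-entry overhead, so that split is left open.

```python
USERS = 10_000_000             # "over 10 million users" (lower bound)
BYTES_PER_USER = 5 * 1000      # 5 KB average, decimal units assumed
GRID_BYTES = 10 * 20 * 10**9   # 10 servers x 20 GB each for user data

raw_bytes = USERS * BYTES_PER_USER
raw_gb = raw_bytes / 10**9
headroom = GRID_BYTES / raw_bytes  # capacity available per byte of raw data

print(f"raw profile data: {raw_gb:.0f} GB")                            # raw profile data: 50 GB
print(f"grid capacity: {GRID_BYTES / 10**9:.0f} GB ({headroom:.0f}x raw)")  # grid capacity: 200 GB (4x raw)
```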

Why can't the data for ESPN's users fit into a few servers?

-About 200 gigabytes, including redundant copies, must be held in memory. That is more RAM than a few servers provide, so the data is spread across a grid of servers.
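Spreading the data means deciding which server owns each user. The sketch below shows generic hash partitioning with one replica per partition, placed on a different server so that losing a single machine never loses both copies. It is not the WebSphere eXtreme Scale API. The partition count, server names, and round-robin placement are all assumptions for illustration.

```python
import hashlib
from typing import Dict, List, Tuple

NUM_PARTITIONS = 101  # hypothetical fixed partition count
SERVERS = [f"grid-{i:02d}" for i in range(10)]  # hypothetical names for the 10 servers


def partition_for(user_id: str) -> int:
    """Map a user to a partition using a hash that is stable across processes."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_PARTITIONS


def place_partitions(servers: List[str]) -> Dict[int, Tuple[str, str]]:
    """Assign each partition a (primary, replica) pair on different servers."""
    if len(servers) < 2:
        raise ValueError("need at least two servers to keep a replica apart")
    n = len(servers)
    # Round-robin primaries; the replica goes on the next server in the ring.
    return {p: (servers[p % n], servers[(p + 1) % n]) for p in range(NUM_PARTITIONS)}


def servers_for_user(user_id: str, placement: Dict[int, Tuple[str, str]]) -> Tuple[str, str]:
    """Return the (primary, replica) servers holding this user's data."""
    return placement[partition_for(user_id)]
```

The sketch uses `hashlib` instead of Python's built-in `hash()` because string hashing is randomized per process, and every front end must agree on the same user-to-partition mapping.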

What is the role of the personalization system across ESPN's platforms?

-The personalization system ensures that user preferences, such as favorite teams, are consistent across ESPN's websites and mobile apps.

What are the two key components that power ESPN's personalization system?

-The two key components are 'The Grid', an in-memory data layer built on IBM WebSphere eXtreme Scale, and 'The Composer', a facade that hides the complexity of interacting with ESPN's back-end services.

How does 'The Grid' improve performance in ESPN's system?

-'The Grid' provides sub-millisecond response times by storing user preferences in-memory, making it extremely fast for personalization.

What does 'The Composer' do in ESPN's architecture?

-'The Composer' manages communication with 'The Grid' and the back-end services, organizing requests and retrieving the necessary data for personalized content.
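A facade like The Composer can be sketched as a single entry point. It checks the in-memory grid first and falls back to the database on a miss, which is the cache-aside pattern. The source only says that The Composer hides back-end complexity and talks to The Grid. The fallback-and-warm behavior, the class shape, and the `load_from_db` callback are hypothetical.

```python
from typing import Callable, Dict, Optional

Prefs = Dict[str, list]


class Composer:
    """Hypothetical facade: callers ask for preferences by user ID and never
    touch the grid or the back-end database directly."""

    def __init__(self, grid: Dict[str, Prefs],
                 load_from_db: Callable[[str], Optional[Prefs]]) -> None:
        self._grid = grid                  # stand-in for the in-memory grid
        self._load_from_db = load_from_db  # stand-in for the Personalization DB

    def preferences(self, user_id: str) -> Prefs:
        prefs = self._grid.get(user_id)
        if prefs is not None:
            return prefs                   # fast path: served from memory
        prefs = self._load_from_db(user_id)
        if prefs is None:
            return {}                      # unknown user: no personalization
        self._grid[user_id] = prefs        # warm the grid for the next request
        return prefs
```

Because callers depend only on `preferences()`, the grid or the database behind it can change without touching the front ends.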

What hardware is used to power 'The Grid' at ESPN?

-'The Grid' runs on 10 commodity servers, each with 32 GB of RAM. 20 GB of each server's RAM is dedicated to storing user data, giving the system a total of 200 GB of memory.

How scalable is ESPN's system in terms of handling requests?

-ESPN's system was load-tested at 12,000 and 15,000 requests per second, and CPU usage on every server stayed below 5%. The system can also scale by adding or removing servers while it is running.
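Adding or removing servers at runtime means that some data has to move between machines. The video does not describe how WebSphere eXtreme Scale places data, so the following is a generic sketch that assumes a fixed set of partitions. When a server joins, partitions move one at a time from the fullest server to the emptiest until the loads differ by at most one. Partitions on a removed server are re-homed first. Every other partition stays where it was.

```python
from typing import Dict, List


def rebalance(assignment: Dict[int, str], servers: List[str]) -> Dict[int, str]:
    """Move partitions until every live server's load is within one of the others."""
    new = dict(assignment)
    owned: Dict[str, List[int]] = {s: [] for s in servers}
    orphans: List[int] = []
    for partition, server in sorted(new.items()):
        if server in owned:
            owned[server].append(partition)
        else:
            orphans.append(partition)  # its server was removed

    # Re-home partitions from removed servers on the least-loaded live server.
    for partition in orphans:
        target = min(owned, key=lambda s: len(owned[s]))
        owned[target].append(partition)
        new[partition] = target

    # Shift one partition at a time from the fullest to the emptiest server.
    while True:
        fullest = max(owned, key=lambda s: len(owned[s]))
        emptiest = min(owned, key=lambda s: len(owned[s]))
        if len(owned[fullest]) - len(owned[emptiest]) <= 1:
            return new
        partition = owned[fullest].pop()
        owned[emptiest].append(partition)
        new[partition] = emptiest
```

For example, with 100 partitions spread evenly over 10 servers, adding an eleventh server moves 9 partitions and leaves the other 91 in place.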

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

Load Testing Web dengan Python Locust: Perkenalan

19# Всё о кешированиии в битриксе | Видеокурс: Создание сайта на 1С Битрикс

How does Caching on the Backend work? (System Design Fundamentals)

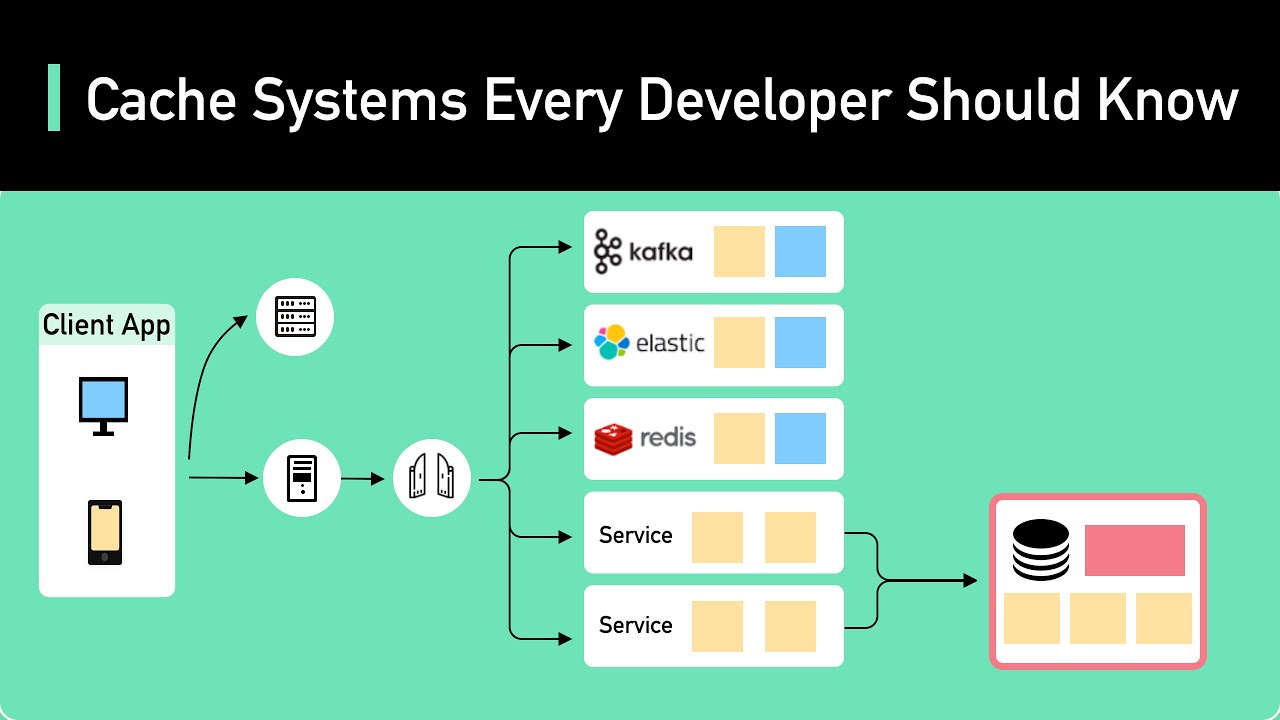

Cache Systems Every Developer Should Know

ASP.NET Web APIs Explained in 9 Minutes

2021 Cloudflare: Basic Pages Rules and Cache Levels, Standard and "Cache Everything"

5.0 / 5 (0 votes)