Practical Intro to NLP 26: Theory - Data Visualization and Dimensionality Reduction

Summary

TLDR: This video script discusses the importance of data visualization for identifying outliers and understanding datasets, using movie plots as the running example. It explains dimensionality reduction techniques such as PCA, t-SNE, and UMAP, which convert high-dimensional data into two or three dimensions while trying to preserve its global structure, its local structure, or both. The script presents UMAP as the current state of the art for visualization and clustering because it balances local and global structure preservation. The benefits of visualization include quick data overviews, outlier detection, identifying clusters and similarities, and exploring relationships between different data entities.

Takeaways

- 📊 **Data Visualization Importance**: Visualization is crucial for identifying outliers and getting a high-level overview of data.

- 🎬 **Movie Plots Visualization**: Movie plots are visualized to spot patterns, clusters, and outliers among different movies.

- 🔢 **Dimensionality**: Movies are represented as high-dimensional vectors (e.g., 768 dimensions), which need to be reduced for visualization.

- 🌐 **Preserving Structure**: Dimensionality reduction aims to preserve both local and global structures of the data.

- 📉 **PCA Limitations**: Principal Component Analysis (PCA) is a linear technique that preserves global structure but may not be suitable for local structure preservation or nonlinear data.

- 🔄 **t-SNE for Nonlinear Data**: t-Distributed Stochastic Neighbor Embedding (t-SNE) is a nonlinear technique that focuses on preserving local structure and is effective for nonlinear data separation.

- 🚀 **UMAP Advantages**: Uniform Manifold Approximation and Projection (UMAP) is a state-of-the-art technique that balances local and global structure preservation and is faster than t-SNE.

- 🔍 **Outlier Detection**: Visualization helps spot outliers, which can then be removed, manually or automatically, to improve the quality of algorithmic results.

- 👥 **Cluster Identification**: It's possible to identify clusters and similarities in data, such as grouping similar movies or movie series.

- 🔗 **Multi-Entity Visualization**: Visualizing different entities together, like movies and directors, can reveal interesting relationships and potential collaborations.

Q & A

What is the importance of data visualization in analyzing movie plots?

-Data visualization is crucial for identifying outliers and getting a high-level overview of the data. It allows for the immediate detection of patterns, clusters, and anomalies within the dataset of movie plots.

How are movies represented in data visualization?

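-Movies are represented as high-dimensional vectors that encode their plots, such as 768-dimensional vectors. Each dimension holds one numeric feature, although in learned embeddings like these, individual dimensions rarely have a clear human-readable meaning.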
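A minimal sketch of how such vectors are compared, assuming tiny made-up 4-dimensional vectors in place of real 768-dimensional plot embeddings (the movie names and numbers are hypothetical): cosine similarity, a common choice for embeddings, scores how closely two vectors point in the same direction.

```python
import math

# Hypothetical 4-dimensional "plot embeddings". Real embeddings (e.g. 768-d)
# come from a text encoder; these numbers are made up for illustration.
movies = {
    "space_opera_1": [0.9, 0.1, 0.0, 0.2],
    "space_opera_2": [0.8, 0.2, 0.1, 0.1],
    "romantic_comedy": [0.0, 0.9, 0.8, 0.1],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

sim_same = cosine_similarity(movies["space_opera_1"], movies["space_opera_2"])
sim_diff = cosine_similarity(movies["space_opera_1"], movies["romantic_comedy"])
print(f"similar plots:   {sim_same:.3f}")
print(f"different plots: {sim_diff:.3f}")
```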

Why is dimensionality reduction necessary for visualizing movie plots?

-Dimensionality reduction is necessary because humans can only visualize data in two or three dimensions. It converts high-dimensional data into a lower-dimensional form that can be plotted on a 2D or 3D graph.

What does it mean to preserve local and global structure in dimensionality reduction?

-Preserving local structure means that items which are neighbors in the original high-dimensional space remain neighbors in the reduced space. Preserving global structure means that the relative distances between far-apart points and groups, such as separate clusters, are also maintained. Together, these ensure that similar items stay close together and dissimilar items stay far apart.
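One simple way to check local-structure preservation is to compare each point's k nearest neighbors before and after the reduction. The sketch below is an illustrative metric, not one the video specifies: it uses made-up 3-D points and a hypothetical 2-D "reduction" that just drops the third coordinate, and reports the average fraction of neighbors that survive (1.0 means every neighborhood was kept).

```python
import math

def knn_indices(points, i, k):
    """Indices of the k nearest neighbors of points[i] (Euclidean), excluding i."""
    dists = sorted((math.dist(points[i], p), j) for j, p in enumerate(points) if j != i)
    return {j for _, j in dists[:k]}

def neighbor_preservation(high, low, k):
    """Average fraction of each point's k nearest neighbors kept after reduction."""
    total = 0.0
    for i in range(len(high)):
        kept = knn_indices(high, i, k) & knn_indices(low, i, k)
        total += len(kept) / k
    return total / len(high)

# Made-up 3-D data: two small groups that differ mainly in the first two axes.
high = [(0, 0, 0.1), (0.1, 0, 0.0), (0, 0.1, 0.2),
        (5, 5, 0.0), (5.1, 5, 0.1), (5, 5.1, 0.2)]
# Hypothetical 2-D reduction that simply drops the third coordinate.
low = [(x, y) for x, y, _ in high]

score = neighbor_preservation(high, low, k=2)
print(f"neighbor preservation (k=2): {score:.2f}")
```

Keeping only the third coordinate instead would mix the two groups and lower the score, which is the kind of local-structure loss the metric is meant to expose.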

How are the weights for the dimensions calculated in dimensionality reduction?

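-The weights are calculated by the dimensionality reduction algorithm itself, often through matrix factorization. In PCA, for example, the weights defining each new dimension form an eigenvector of the data's covariance matrix (equivalently, a right singular vector from a singular value decomposition of the centered data), chosen so that the new dimensions capture as much of the data's variance as possible.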
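As a minimal sketch of where PCA's weights come from, assuming a small made-up 2-D dataset, the code below centers the data, builds the covariance matrix, and uses power iteration (a standard way to find the dominant eigenvector) to obtain the first principal component. Projecting a point is then just a weighted sum. Production code would typically call an SVD routine from a numerical library instead.

```python
import math

def covariance_matrix(data):
    """Sample covariance matrix of row-vector data, plus the column means."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    return cov, means

def first_principal_component(cov, iterations=200):
    """Dominant eigenvector of a symmetric matrix via power iteration."""
    d = len(cov)
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

# Made-up 2-D data stretched roughly along the diagonal y = x.
data = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.9], [5.0, 5.1]]
cov, means = covariance_matrix(data)
weights = first_principal_component(cov)

# Projection onto the first component: a weighted sum of the centered inputs.
projected = [sum(w * (x - m) for w, x, m in zip(weights, row, means)) for row in data]
print("weights:", [round(w, 3) for w in weights])
print("1-D coordinates:", [round(p, 3) for p in projected])
```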

What is the difference between linear and nonlinear dimensionality reduction?

-Linear dimensionality reduction computes each new dimension as a weighted sum of the original dimensions. Nonlinear dimensionality reduction allows more complex transformations, such as logarithmic or polynomial functions, so it can capture curved or clustered structure that a weighted sum cannot represent.
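To make the distinction concrete, here is a small sketch with made-up data: points on two concentric circles. A linear 1-D projection (a weighted sum of x and y) mixes the inner and outer rings, while a single nonlinear feature, the distance from the origin, separates them cleanly. This only illustrates the idea; it is not how t-SNE or UMAP compute their embeddings.

```python
import math

# Made-up data: 8 points on an inner circle (radius 1) and 8 on an outer one (radius 3).
angles = [2 * math.pi * k / 8 for k in range(8)]
inner = [(math.cos(a), math.sin(a)) for a in angles]
outer = [(3 * math.cos(a), 3 * math.sin(a)) for a in angles]

def linear_projection(p, w=(1.0, 0.0)):
    """Linear reduction to 1-D: a weighted sum of the original coordinates."""
    return w[0] * p[0] + w[1] * p[1]

def radius(p):
    """Nonlinear reduction to 1-D: distance from the origin."""
    return math.hypot(p[0], p[1])

def separated(values_a, values_b):
    """True if every value of one group lies strictly below every value of the other."""
    return max(values_a) < min(values_b) or max(values_b) < min(values_a)

lin_in = [linear_projection(p) for p in inner]
lin_out = [linear_projection(p) for p in outer]
rad_in = [radius(p) for p in inner]
rad_out = [radius(p) for p in outer]

print("linear projection separates rings:", separated(lin_in, lin_out))
print("radius feature separates rings:  ", separated(rad_in, rad_out))
```

Any choice of weights gives the same result for the linear projection here, because the outer ring always projects onto a wider interval that contains the inner ring's values.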

Why is PCA considered a linear dimensionality reduction technique?

-PCA is considered linear because it uses a weighted sum of the original dimensions to create new dimensions, preserving the global structure but not necessarily the local structure.

What are the advantages of t-SNE over PCA in dimensionality reduction?

-t-SNE is a nonlinear technique that better preserves local structure and can separate data that cannot be linearly separated. However, it is slower and may require tuning hyperparameters, most notably perplexity, for good results.
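t-SNE's perplexity hyperparameter controls roughly how many neighbors each point effectively considers. As a minimal sketch of t-SNE's first step only, assuming small made-up input vectors, the code below computes the high-dimensional conditional probabilities p(j|i) with a Gaussian kernel and binary-searches each point's bandwidth sigma so that the distribution's perplexity (2 raised to its entropy in bits) matches a target. The full algorithm then symmetrizes these into joint probabilities and optimizes 2-D positions with a Student-t kernel; those steps are omitted.

```python
import math

def conditional_probabilities(points, i, sigma):
    """Gaussian p(j|i) over all points j, with p(i|i) = 0 and bandwidth sigma."""
    sq = [None if j == i else sum((a - b) ** 2 for a, b in zip(points[i], p))
          for j, p in enumerate(points)]
    nearest = min(d for d in sq if d is not None)
    # Shifting by the smallest squared distance does not change the normalized
    # probabilities, but keeps the largest weight at exp(0) = 1 (no underflow).
    weights = [0.0 if d is None else math.exp(-(d - nearest) / (2 * sigma ** 2))
               for d in sq]
    total = sum(weights)
    return [w / total for w in weights]

def perplexity(probs):
    """Perplexity 2**H(P), where H is the Shannon entropy in bits."""
    entropy = -sum(p * math.log2(p) for p in probs if p > 0)
    return 2 ** entropy

def calibrate_row(points, i, target, tol=1e-5, steps=100):
    """Binary-search sigma_i so the perplexity of p(.|i) matches the target."""
    lo, hi = 1e-10, 1e10
    for _ in range(steps):
        sigma = (lo + hi) / 2
        probs = conditional_probabilities(points, i, sigma)
        perp = perplexity(probs)
        if abs(perp - target) < tol:
            break
        if perp > target:
            hi = sigma  # too many effective neighbors: narrow the Gaussian
        else:
            lo = sigma  # too few effective neighbors: widen the Gaussian
    return sigma, probs

# Made-up 2-D inputs: four nearby points and one distant point.
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (3.0, 3.0)]
sigma, probs = calibrate_row(points, 0, target=3.0)
print(f"sigma = {sigma:.4f}, perplexity = {perplexity(probs):.4f}")
print("p(j|0):", [round(p, 4) for p in probs])
```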

How does UMAP differ from t-SNE and PCA?

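-UMAP is a state-of-the-art, nonlinear dimensionality reduction technique that builds a weighted nearest-neighbor graph in the high-dimensional space and then optimizes a low-dimensional layout whose graph matches it as closely as possible. It is faster than t-SNE, and its neighborhood-size parameter (n_neighbors in the umap-learn library) controls the balance between local and global structure: smaller values emphasize local detail, larger values emphasize the global layout.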
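As a rough sketch of UMAP's graph-construction step only, assuming small made-up inputs and a brute-force neighbor search, the code below follows the weighting scheme described in the UMAP paper. For each point, rho is the distance to its nearest neighbor, and sigma is binary-searched so that the neighbor weights exp(-(d - rho) / sigma) sum to log2(k). The directed weights are then combined with the fuzzy union a + b - a*b. Real implementations such as umap-learn use approximate nearest neighbors and then optimize the 2-D layout, which is not shown here.

```python
import math

def knn(points, i, k):
    """Brute-force k nearest neighbors of points[i] as (distance, index) pairs."""
    dists = sorted((math.dist(points[i], p), j) for j, p in enumerate(points) if j != i)
    return dists[:k]

def directed_weights(points, k, steps=64):
    """UMAP-style membership strengths w(i -> j) for each point's k neighbors."""
    target = math.log2(k)
    graph = {}
    for i in range(len(points)):
        neighbors = knn(points, i, k)
        rho = neighbors[0][0]  # distance to the nearest neighbor
        lo, hi = 0.0, 1e6
        for _ in range(steps):  # binary search for sigma_i
            sigma = (lo + hi) / 2
            total = sum(math.exp(-max(0.0, d - rho) / sigma) for d, _ in neighbors)
            if total > target:
                hi = sigma  # weights sum too high: shrink sigma
            else:
                lo = sigma  # weights sum too low: grow sigma
        for d, j in neighbors:
            graph[(i, j)] = math.exp(-max(0.0, d - rho) / sigma)
    return graph

def symmetrize(graph):
    """Combine w(i -> j) and w(j -> i) with the fuzzy union a + b - a*b."""
    sym = {}
    for (i, j), a in graph.items():
        b = graph.get((j, i), 0.0)
        sym[(min(i, j), max(i, j))] = a + b - a * b
    return sym

# Made-up 2-D inputs: a group of three points and a pair far away.
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (5.0, 5.0), (5.0, 6.5)]
graph = symmetrize(directed_weights(points, k=3))
for (i, j), w in sorted(graph.items()):
    print(f"{i} -- {j}: {w:.3f}")
```

Because rho is subtracted before weighting, every point is fully connected (weight 1.0) to its nearest neighbor, which keeps sparse regions of the data from becoming disconnected.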

What are some other use cases for dimensionality reduction besides visualization?

-Dimensionality reduction can also be used for fast clustering, feature extraction, and improving the performance of machine learning algorithms by reducing the complexity of the data.
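As an example of clustering on reduced data, here is a minimal k-means (Lloyd's algorithm) sketch that runs on made-up 2-D coordinates of the kind a reduction step might produce. Working in two dimensions makes each distance computation much cheaper than in the original 768. The deterministic initialization (first point, then repeatedly the farthest point) is a simplification chosen for reproducibility, not a recommendation.

```python
import math

def kmeans(points, k, iterations=20):
    """Plain k-means (Lloyd's algorithm) with farthest-point initialization."""
    centers = [points[0]]
    while len(centers) < k:
        # Next center: the point farthest from its closest existing center.
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centers))
        centers.append(far)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest center.
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: each center moves to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return labels, centers

# Made-up 2-D coordinates, e.g. the output of a dimensionality reduction step.
coords = [(0.1, 0.2), (0.3, 0.1), (0.2, 0.4),
          (5.0, 5.1), (5.2, 4.9), (4.9, 5.3),
          (9.8, 0.2), (10.1, 0.0)]
labels, centers = kmeans(coords, k=3)
print("labels:", labels)
```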

How can data visualization help in identifying outliers and clusters?

-Data visualization allows for the quick detection of outliers and clusters by visually inspecting the plot for points that deviate from the norm or group together, which can be crucial for cleaning data or identifying trends.
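Visual inspection can be complemented by a simple automatic rule. The sketch below, assuming made-up 2-D coordinates and an arbitrary threshold of twice the median, flags points whose distance to their k-th nearest neighbor is unusually large. This k-nearest-neighbor distance heuristic is one common choice among many and is not necessarily what the video uses.

```python
import math
import statistics

def kth_neighbor_distance(points, i, k):
    """Distance from points[i] to its k-th nearest other point."""
    dists = sorted(math.dist(points[i], p) for j, p in enumerate(points) if j != i)
    return dists[k - 1]

def find_outliers(points, k=2, factor=2.0):
    """Indices whose k-th-neighbor distance exceeds factor * the median such distance."""
    scores = [kth_neighbor_distance(points, i, k) for i in range(len(points))]
    threshold = factor * statistics.median(scores)
    return [i for i, s in enumerate(scores) if s > threshold]

# Made-up 2-D coordinates: two tight groups plus one isolated point.
coords = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (0.3, 0.2),
          (2.0, 2.1), (2.2, 1.9), (2.1, 2.2),
          (8.0, -6.0)]  # the last point sits far from everything else
outliers = find_outliers(coords, k=2)
print("outlier indices:", outliers)
```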

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

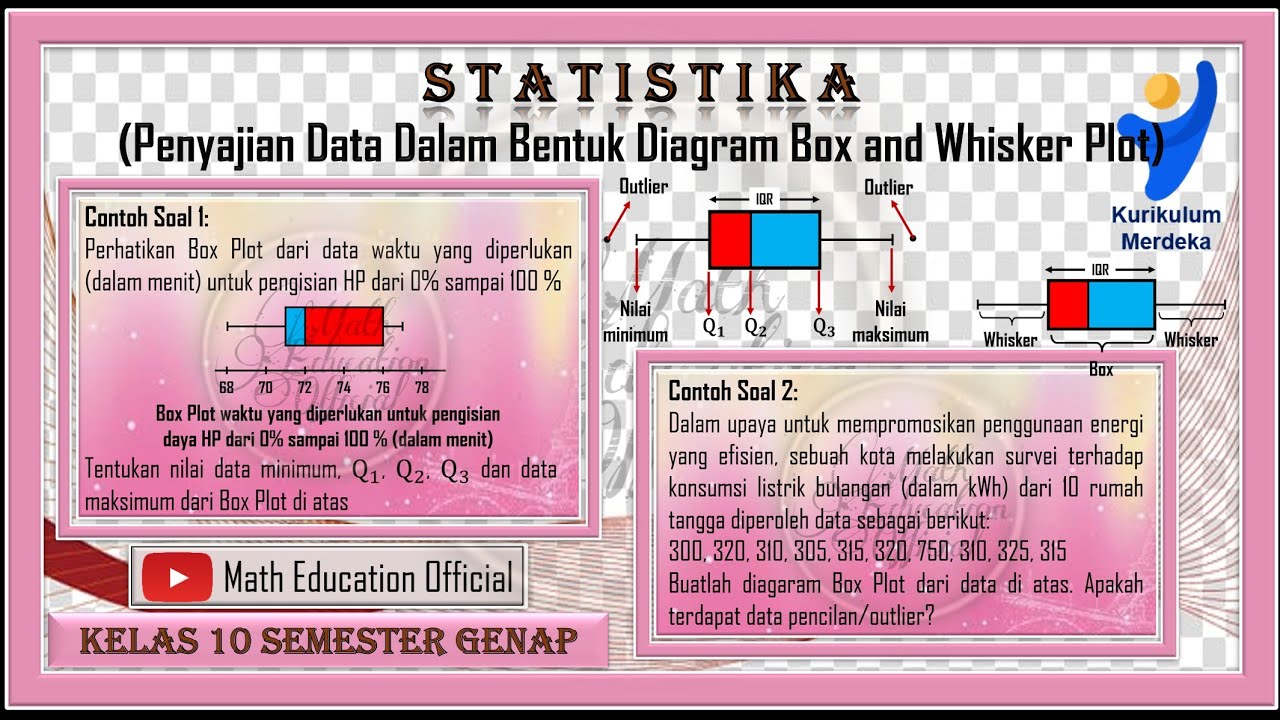

How To Make Box and Whisker Plots

Stats 1 Week 1-8 in One Shot For Quiz 2 |All Concepts & Formulas Revision IIT Madras BS Data Science

Estatística Descritiva Univariada Quantitativa - Boxplot

SEM Series (2016) 2. Data Screening

Penyajian Data Dalam Bentuk Box and Whisker Plot Box Plot

AP Biology Practice 5 - Analyze Data and Evaluate Evidence

5.0 / 5 (0 votes)