Floating Point Numbers: IEEE 754 Standard | Single Precision and Double Precision Format

Summary

TLDR: This video from ALL ABOUT ELECTRONICS dives into the IEEE 754 standard for floating-point numbers. It explains the 32-bit single precision and 64-bit double precision formats, detailing how numbers are stored with one bit for the sign, a format-dependent number of bits for the exponent (8 in single precision, 11 in double precision), and the rest for the mantissa. The video covers biased representation for exponent storage, the trade-off between range and precision, and the significance of all zeros or all ones in the exponent field. It also touches on the conversion between decimal and IEEE format, emphasizing the importance of understanding floating-point representation in electronics and computing.

Takeaways

- 😀 IEEE 754 is a standard for representing floating-point numbers in computer memory.

- 🔑 In IEEE 754, the floating-point number is divided into three parts: sign bit, exponent, and mantissa.

- 💡 The standard includes five different formats ranging from 16 bits (half precision) to 256 bits for storing floating-point numbers.

- 🎯 Single precision format uses 32 bits, with 1 bit for the sign, 8 bits for the exponent, and 23 bits for the mantissa.

- 🌟 The exponent is stored using a biased representation, where a bias value is added to the actual exponent value to handle negative exponents.

- 📉 The bias for single precision is 127, so normalized numbers have an actual exponent range from -126 to +127 after the bias is subtracted (stored values 1 to 254).

- 🔢 The mantissa stores the fractional part of the normalized binary number, excluding the leading '1' that is implicit in normalized numbers.

- ⚖️ The largest number representable in single precision is approximately 3.4 x 10^38, and the smallest positive normalized number is about 1.18 x 10^-38.

- 🔄 Floating-point numbers offer a wider range compared to fixed-point numbers but at the cost of precision, with single precision offering about 7 decimal digits of precision.

- 📚 The video also covers the conversion process between decimal numbers and their IEEE 754 single precision binary representation.
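The 1 + 8 + 23 bit layout described in the takeaways can be inspected directly. The following is a minimal sketch, assuming Python's standard `struct` module is used to obtain the 32-bit pattern; the helper name `decode_float32` is hypothetical and not taken from the video.

```python
import struct

def decode_float32(x: float) -> dict:
    """Split a value into its IEEE 754 single precision fields (illustrative helper)."""
    # Pack as a big-endian 32-bit float, then reinterpret the bytes as an unsigned int.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    stored_exponent = (bits >> 23) & 0xFF      # 8-bit exponent field
    return {
        "bits": f"{bits:032b}",
        "sign": bits >> 31,                    # 1-bit sign field
        "stored_exponent": stored_exponent,
        # Subtracting the bias is only meaningful for normalized numbers.
        "actual_exponent": stored_exponent - 127,
        "fraction": bits & 0x7FFFFF,           # 23-bit mantissa field
    }

# -6.75 is -110.11 in binary, i.e. -1.1011 x 2^2 after normalization.
fields = decode_float32(-6.75)
print(fields)
```

For -6.75 the sign bit is 1, the stored exponent is 2 + 127 = 129, and the fraction field holds 1011 followed by zeros, because the leading 1 is implicit.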

Q & A

What is the IEEE standard used for?

-The IEEE standard, specifically IEEE 754, is used for the storage of floating-point numbers in computer memory.

How many bits are reserved for the sign bit in a floating-point number stored in single precision format?

-In the single precision format, 1 bit is reserved for the sign bit.

What is the purpose of the exponent in floating-point representation?

-The exponent in floating-point representation stores the power to which the base is raised (the base is 2 in the binary formats discussed here), allowing both very large and very small numbers to be represented efficiently.

How many bits are used to store the mantissa in single precision floating-point format?

-In the single precision floating-point format, 23 bits are used to store the mantissa.

What is the biased representation used for in the IEEE 754 standard?

-The biased representation is used to store the exponent in the IEEE 754 standard. Adding a fixed bias maps both negative and positive exponents onto a contiguous range of unsigned values, so no separate sign bit is needed for the exponent.

What is the value of the bias used for the 8-bit exponent in single precision format?

-The value of the bias used for the 8-bit exponent in single precision format is 127.
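The bias arithmetic can be sketched in a few lines. This is an illustrative example only; the function names `to_stored` and `to_actual` are hypothetical, and the range checks assume normalized single precision numbers (actual exponents -126 to +127).

```python
BIAS = 127  # bias for the 8-bit exponent field in single precision

def to_stored(actual_exponent: int) -> int:
    """Actual exponent -> value written into the 8-bit exponent field."""
    if not -126 <= actual_exponent <= 127:
        raise ValueError("outside the normalized single precision range")
    return actual_exponent + BIAS

def to_actual(stored_exponent: int) -> int:
    """Value read from the exponent field -> actual exponent."""
    if not 1 <= stored_exponent <= 254:
        raise ValueError("stored values 0 and 255 are reserved")
    return stored_exponent - BIAS

print(to_stored(-126), to_stored(0), to_stored(127))
print(to_actual(129))
```

The smallest normalized exponent is stored as 1 and the largest as 254, which leaves the stored values 0 and 255 free for special cases.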

How is the mantissa stored in the IEEE 754 standard?

-In the IEEE 754 standard, the mantissa is stored as the fractional part of the normalized binary number, excluding the leading 1 that is implicit in the normalized form.
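To make the implicit leading 1 concrete, here is a rough sketch of encoding a value by hand. It assumes a nonzero value that is normalized and exactly representable in single precision; it truncates rather than rounds, and it does not handle zero, subnormals, infinity, or NaN. The name `encode_float32` is hypothetical.

```python
import math
import struct

def encode_float32(x: float) -> int:
    """Hand-rolled encoder for nonzero, normalized, exactly representable values."""
    sign = 1 if x < 0 else 0
    m, e = math.frexp(abs(x))      # abs(x) == m * 2**e with 0.5 <= m < 1
    significand = m * 2            # shift into the 1.f form
    exponent = e - 1
    # Drop the implicit leading 1 and keep 23 fraction bits (truncating).
    fraction = int((significand - 1) * (1 << 23))
    return (sign << 31) | ((exponent + 127) << 23) | fraction

def reference_bits(x: float) -> int:
    """Bit pattern produced by the struct module, used as a cross-check."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return bits

# 0.15625 is 0.00101 in binary, i.e. 1.01 x 2^-3 after normalization.
print(hex(encode_float32(0.15625)), hex(reference_bits(0.15625)))
```

Only the bits after the binary point (01 followed by zeros for 0.15625) end up in the mantissa field; the leading 1 is never stored.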

What is the significance of all zeroes and all ones in the exponent field in IEEE 754?

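-In IEEE 754, exponent fields of all zeroes and all ones are reserved for special purposes. An all-zero exponent represents zero (when the mantissa is also zero) or a subnormal number, and an all-ones exponent represents infinity (when the mantissa is zero) or NaN (Not a Number).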
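The reserved exponent patterns can be sketched as a small classifier over a 32-bit pattern. The function name `classify_float32` and the category labels are illustrative assumptions; the zero and subnormal cases for an all-zero exponent are standard IEEE 754 behavior even where the summary does not name them.

```python
import struct

def classify_float32(bits: int) -> str:
    """Classify a 32-bit pattern by its exponent and mantissa fields."""
    exponent = (bits >> 23) & 0xFF
    fraction = bits & 0x7FFFFF
    if exponent == 0xFF:                      # exponent field all ones
        return "infinity" if fraction == 0 else "NaN"
    if exponent == 0:                         # exponent field all zeroes
        return "zero" if fraction == 0 else "subnormal"
    return "normalized"

def float32_bits(x: float) -> int:
    """Bit pattern of x when stored in single precision."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return bits

for value in (1.0, 0.0, float("inf"), float("nan")):
    print(value, classify_float32(float32_bits(value)))
```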

What is the range of the exponent in the single precision format after subtracting the bias?

-After subtracting the bias, the range of the exponent for normalized numbers in the single precision format is from -126 to +127.

How does the IEEE 754 standard handle the representation of negative zero?

-The IEEE 754 standard represents negative zero as a special case, where the sign bit is set to 1 and the exponent and mantissa fields are all zeroes.
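As a quick check of the negative zero pattern, the sketch below packs -0.0 and +0.0 into single precision using Python's `struct` module. The variable names are illustrative.

```python
import math
import struct

# Bit patterns of negative and positive zero in single precision.
(neg_zero_bits,) = struct.unpack(">I", struct.pack(">f", -0.0))
(pos_zero_bits,) = struct.unpack(">I", struct.pack(">f", 0.0))

print(f"{neg_zero_bits:032b}")   # only the sign bit is set
print(f"{pos_zero_bits:032b}")   # all bits clear

# The two zeros compare equal, but the sign is still recoverable.
zeros_compare_equal = (-0.0 == 0.0)
sign_of_neg_zero = math.copysign(1.0, -0.0)
```

Although the two zeros have different bit patterns, they compare as equal; `math.copysign` is one way to observe the stored sign.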

What is the largest number that can be represented in the single precision format?

-The largest number that can be represented in the single precision format is approximately 3.4 x 10^38.

What is the smallest positive normalized number that can be represented in the single precision format?

-The smallest positive normalized number that can be represented in the single precision format is approximately 1.18 x 10^-38 (exactly 2^-126).
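The two limits quoted in the last answers follow from the field values: the largest finite number has a stored exponent of 254 with the mantissa all ones, and the smallest positive normalized number has a stored exponent of 1 with the mantissa all zeroes. A minimal sketch, with the helper name `from_bits` being hypothetical:

```python
import struct

def from_bits(bits: int) -> float:
    """Interpret a 32-bit pattern as a single precision value."""
    (value,) = struct.unpack(">f", struct.pack(">I", bits))
    return value

# Stored exponent 254 (actual +127), mantissa all ones: (2 - 2^-23) x 2^127
largest = from_bits(0x7F7FFFFF)

# Stored exponent 1 (actual -126), mantissa all zeroes: 1.0 x 2^-126
smallest_normal = from_bits(0x00800000)

print(largest)
print(smallest_normal)
```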


Floating Point Numbers | Fixed Point Number vs Floating Point Numbers

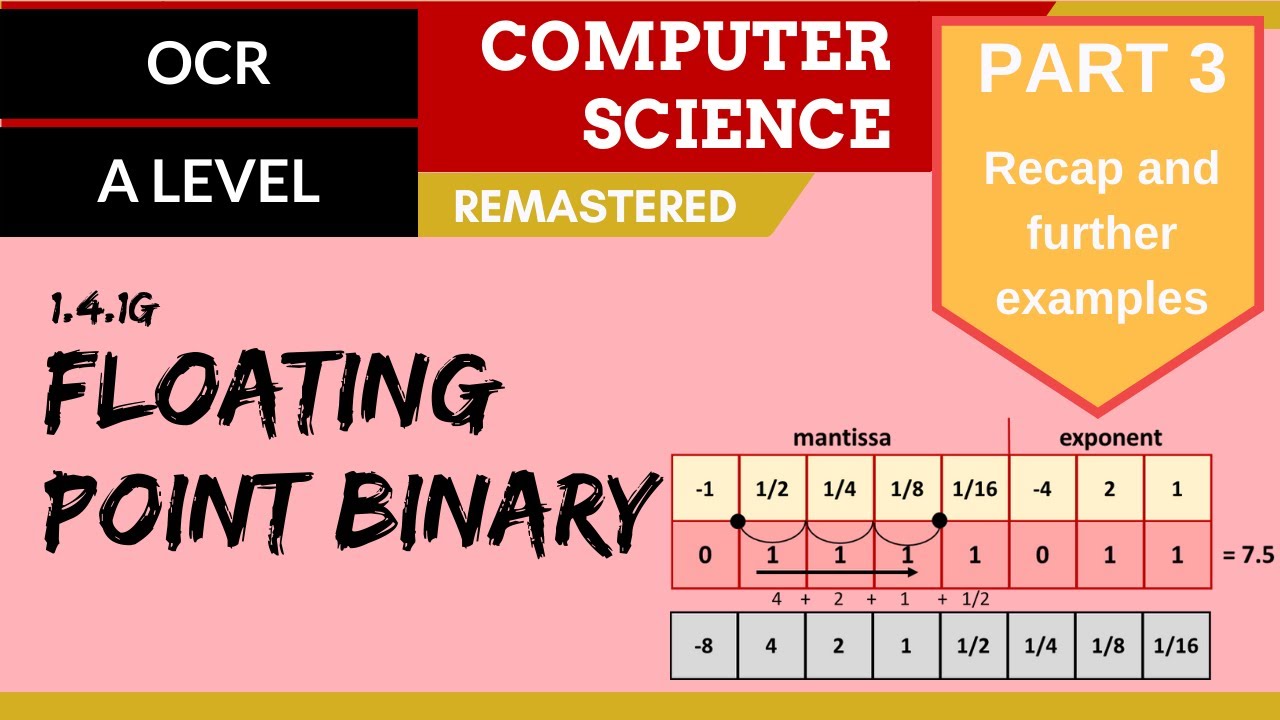

81. OCR A Level (H446) SLR13 - 1.4 Floating point binary part 3 - Recap and further examples

L25 Floating Point

HOW TO: Convert Decimal to IEEE-754 Single-Precision Binary

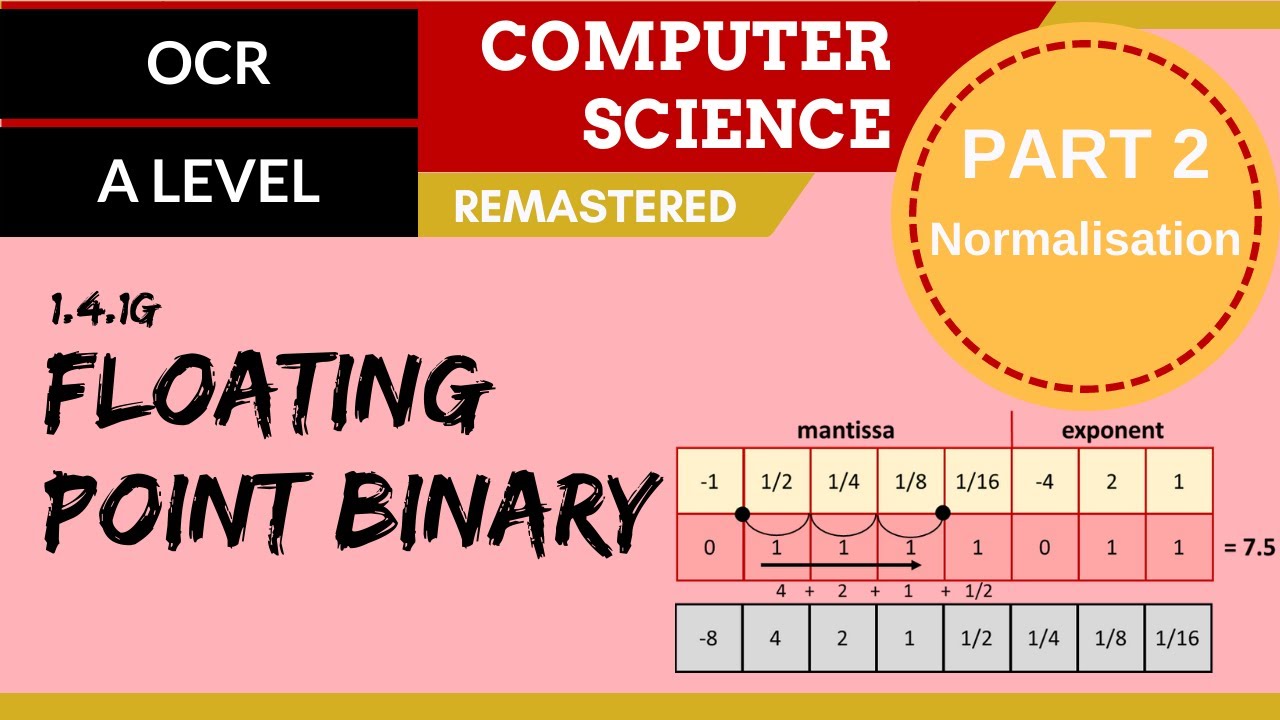

80. OCR A Level (H046-H446) SLR13 - 1.4 Floating point binary part 2 - Normalisation

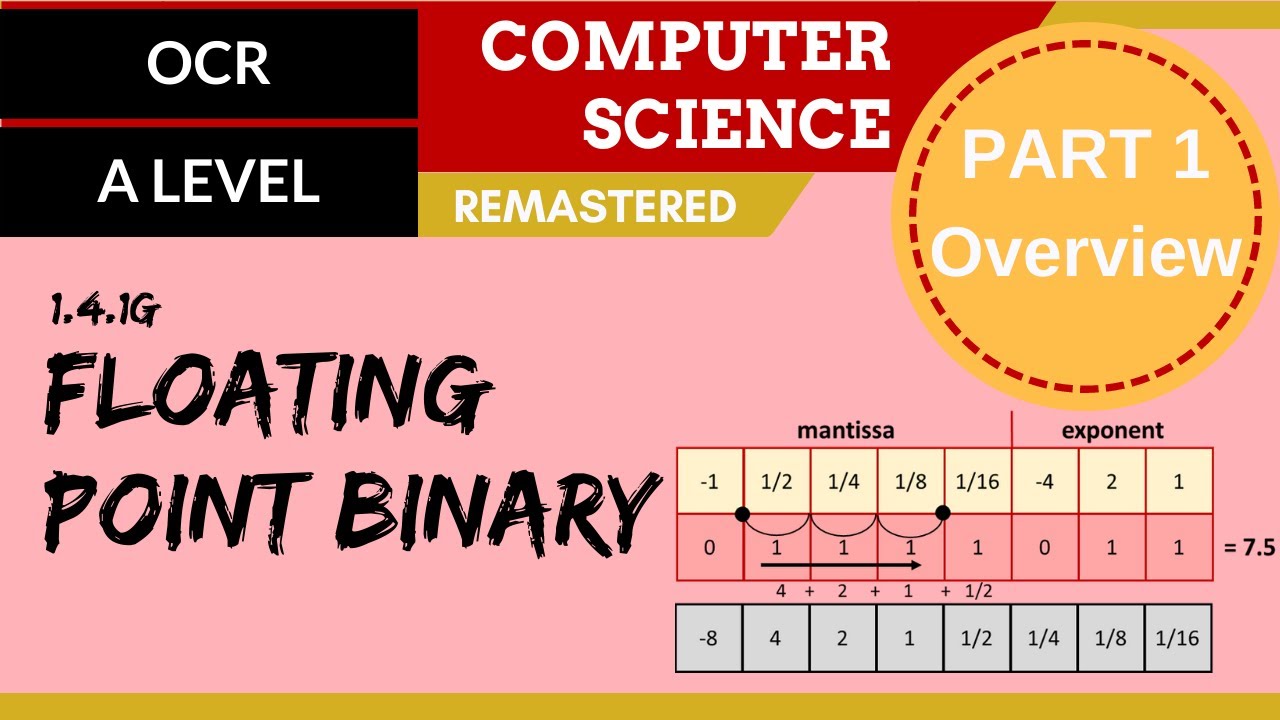

79. OCR A Level (H046-H446) SLR13 - 1.4 Floating point binary part 1 - Overview

5.0 / 5 (0 votes)