L25 Floating Point

Summary

TL;DR: This tutorial examines floating-point arithmetic, focusing on the IEEE 754 32-bit single-precision format. It explains how floating-point numbers are structured, common pitfalls such as round-off error, and their real-world consequences, including the 1991 failure involving the Patriot missile system. It argues against using floating-point arithmetic in critical applications such as timekeeping and recommends integers or fixed-point representations instead. It closes with best practices for handling special values and writing robust computations, stressing that careful design is needed to prevent these errors.

Takeaways

- 😀 Floating point math approximates real numbers, often leading to round-off errors due to limited precision.

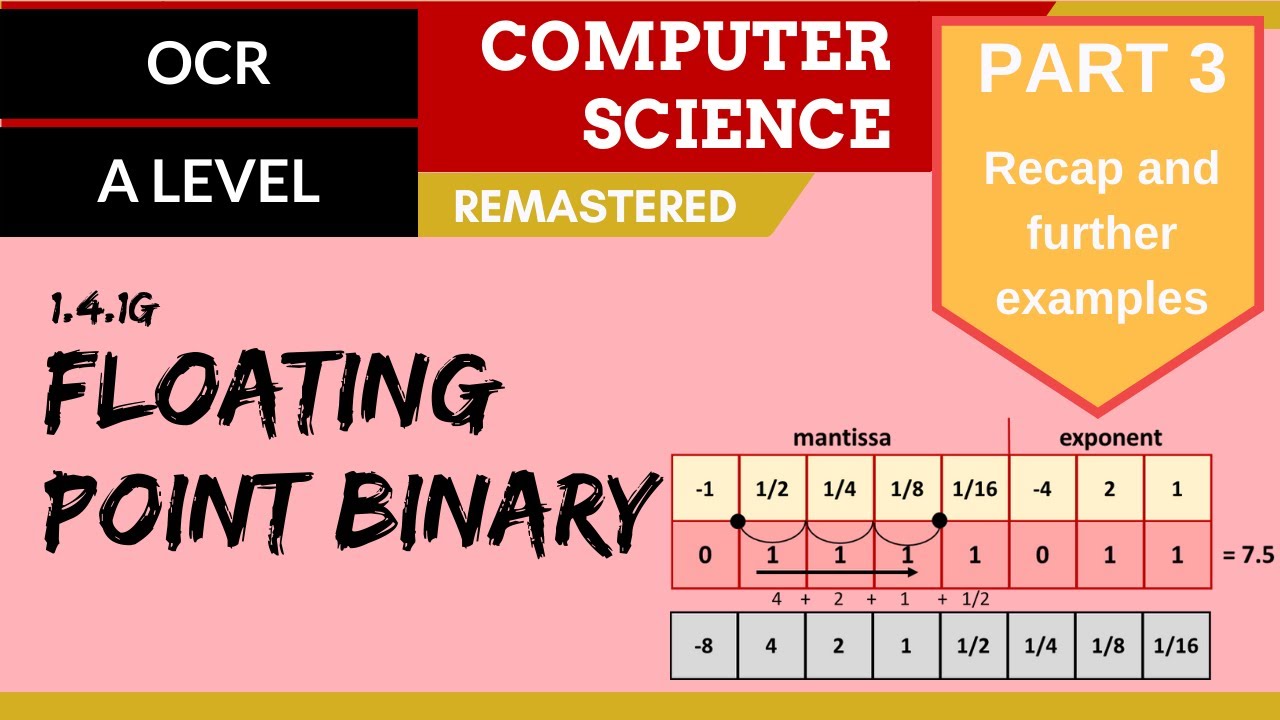

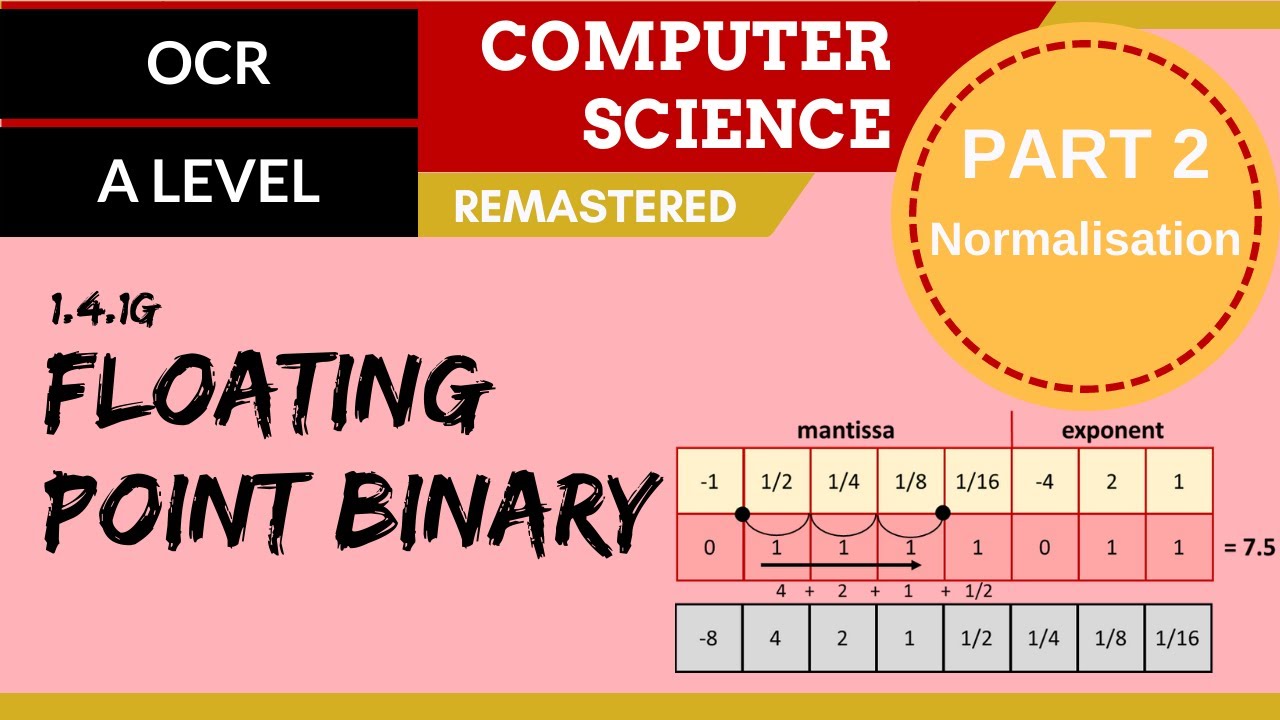

- 😀 The IEEE 32-bit single precision format consists of a sign bit, an 8-bit exponent, and a 23-bit mantissa.

- 😀 Special values in floating point representation include zero, infinity, NaN (not a number), and denormalized numbers.

- 😀 Round-off errors can accumulate over calculations, leading to significant discrepancies in results.

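- 😀 Converting an integer to single-precision floating point can lose precision: the 24-bit significand (23 stored mantissa bits plus an implicit leading 1) cannot represent every integer above 2^24, as the lecture's example with hexadecimal values shows.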

- 😀 Comparing floating point numbers for exact equality is unreliable, because two computations of the same mathematical value can accumulate different round-off errors.

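- 😀 A historical example, the 1991 Patriot missile failure, showed the danger of representing time with an inexact binary approximation: a tiny error on every clock tick grew with uptime until the system failed to intercept an incoming missile.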

- 😀 Alternative methods to avoid floating point errors include using scaled integers, binary-coded decimal (BCD), or fixed point representation.

- 😀 It is crucial to handle special values correctly in floating point computations to avoid unexpected behavior.

- 😀 Best practices for using floating point include minimizing its use, especially in iterative processes, and being cautious with comparisons.
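The summary does not reproduce the lecture's hexadecimal example, so the sketch below is a hypothetical stand-in, not the lecture's own. It assumes Python, whose floats are double precision, and emulates single precision by round-tripping values through the standard `struct` module's 4-byte `'f'` format; the helper name `to_float32` is illustrative.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (IEEE 754 double) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# A float32 significand holds 24 bits (23 stored plus the implicit leading 1),
# so every integer with magnitude up to 2**24 = 0x01000000 converts exactly.
for n in (0x00FFFFFF, 0x01000000, 0x01000001, 0x7FFFFFFF):
    f = to_float32(float(n))
    print(f"{n:#010x} -> {f:.1f}  exact: {int(f) == n}")
```

The first two values survive the conversion; 0x01000001 comes back as 16777216.0 and 0x7FFFFFFF as 2147483648.0.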

Q & A

What is floating point math?

-Floating point math is a way of representing real numbers, including fractions rather than just integers, in a fixed number of bits by storing a significand (mantissa) scaled by a power-of-two exponent.

What does the IEEE 32-bit single precision format consist of?

-It consists of a 1-bit sign, an 8-bit exponent, and a 23-bit mantissa.
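A minimal sketch of pulling the three fields out of a single-precision value, assuming Python's standard `struct` module to reinterpret the 4-byte encoding as an unsigned 32-bit integer; `float32_fields` is a hypothetical helper name.

```python
import struct


def float32_fields(x: float) -> tuple[int, int, int]:
    """Split the single-precision encoding of x into (sign, exponent, mantissa) fields."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))  # reinterpret the 4 bytes as uint32
    sign = bits >> 31                # bit 31
    exponent = (bits >> 23) & 0xFF   # bits 30..23, stored with a bias of 127
    mantissa = bits & 0x7FFFFF       # bits 22..0, the fraction after the implicit 1
    return sign, exponent, mantissa


print(float32_fields(-6.5))
```

Since -6.5 is -1.625 × 2^2, this prints `(1, 129, 5242880)`: sign 1, biased exponent 2 + 127, and 0x500000 for the binary fraction .101.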

How is the value of a floating point number calculated?

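-For a normalized number, the value is (-1)^sign × (1.mantissa)₂ × 2^(exponent - 127), where sign is the sign bit (0 or 1), exponent is the stored 8-bit field (1 to 254), and (1.mantissa)₂ is the binary fraction formed by an implicit leading 1 followed by the 23 mantissa bits.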
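The formula can be checked numerically. This sketch assumes Python; `decode_normalized` is a hypothetical name. It evaluates the formula from raw field values and compares the result with what the hardware encoding produces, using the standard `struct` module to reinterpret the assembled bit pattern. It rejects exponent fields of 0 and 255 because the formula does not cover them.

```python
import struct


def decode_normalized(sign: int, exponent: int, mantissa: int) -> float:
    """Evaluate (-1)**sign * (1.mantissa in binary) * 2**(exponent - 127).

    Valid only for normalized numbers: an exponent field of 0 or 255 marks
    zero/denormals or infinity/NaN, which this formula does not describe.
    """
    if not 1 <= exponent <= 254:
        raise ValueError("exponent field 0 or 255 encodes a special value")
    significand = 1 + mantissa / 2**23    # implicit leading 1 plus the 23-bit fraction
    return (-1) ** sign * significand * 2.0 ** (exponent - 127)


# Cross-check: assemble the same fields into a 32-bit pattern and let struct decode it.
sign, exponent, mantissa = 1, 129, 0x500000
bits = (sign << 31) | (exponent << 23) | mantissa
(hardware,) = struct.unpack("<f", struct.pack("<I", bits))
print(decode_normalized(sign, exponent, mantissa), hardware)   # -6.5 -6.5
```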

What are some common special values in floating point representation?

-Common special values include positive and negative zero, infinity, NaN (not a number), and denormalized numbers.
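A sketch that names each special encoding by its exponent and mantissa fields and decodes a few sample bit patterns, assuming Python with the standard `struct` and `math` modules. The names `from_bits` and `classify` are illustrative, and 0x7FC00000 is used as one typical quiet-NaN pattern (any nonzero mantissa with an all-ones exponent is a NaN).

```python
import math
import struct


def from_bits(bits: int) -> float:
    """Reinterpret a 32-bit pattern as an IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def classify(bits: int) -> str:
    """Name the category selected by the exponent and mantissa fields."""
    exponent = (bits >> 23) & 0xFF
    mantissa = bits & 0x7FFFFF
    if exponent == 0:
        return "zero" if mantissa == 0 else "denormalized"
    if exponent == 0xFF:
        return "infinity" if mantissa == 0 else "NaN"
    return "normalized"


for bits in (0x00000000, 0x80000000, 0x00000001, 0x3F800000,
             0x7F800000, 0xFF800000, 0x7FC00000):
    print(f"{bits:#010x}  {classify(bits):<12}  {from_bits(bits)!r}")

# +0.0 and -0.0 compare equal; only the sign bit distinguishes them.
print(from_bits(0x80000000) == 0.0, math.copysign(1.0, from_bits(0x80000000)))
```

Because negative zero compares equal to positive zero, a sign-bit test such as `math.copysign` is needed to tell them apart. The smallest denormal, 0x00000001, decodes to 2^-149.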

What is a significant problem associated with floating point math?

-Round-off errors can occur due to limited precision, leading to inaccuracies in calculations over time.
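Round-off accumulation is easy to reproduce. This sketch assumes Python and emulates single-precision addition by rounding each double-precision sum back to float32 with the standard `struct` module. For the additions used here the double sum is exact, so one rounding to float32 matches a native single-precision add. `f32` and `sum_float32` are hypothetical helper names.

```python
import struct


def f32(x: float) -> float:
    """Round a double to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def sum_float32(value: float, count: int) -> float:
    """Add `value` to a float32 accumulator `count` times, rounding after each addition."""
    step = f32(value)                 # 0.1 is already inexact once stored as float32
    total = 0.0
    for _ in range(count):
        total = f32(total + step)     # exact double sum, then one rounding to float32
    return total


print(sum_float32(0.1, 1_000_000))    # mathematically 100000.0
print(f32(2.0**24 + 1.0))             # 16777217 is not representable: prints 16777216.0
```

The million-term sum lands well away from 100000, because once the total is large each addition is rounded onto a coarse grid of representable values. The second line is the extreme case: adding 1.0 to 2^24 has no effect at all.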

Why can comparing floating point numbers be problematic?

-Exact comparisons can be misleading because accumulated round-off errors can make two values that are mathematically equal differ in their lowest bits.
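Approximate equality is usually written as a relative tolerance plus an absolute floor for values near zero. Python's standard `math.isclose` implements exactly that test, so this sketch uses it. Note that Python floats are double precision, and the tolerances shown are illustrative assumptions that must be chosen per application.

```python
import math

a = 0.1 + 0.2
b = 0.3
print(a == b)                                   # False: the two sides carry different round-off
print(math.isclose(a, b, rel_tol=1e-9))         # True: equal within a relative tolerance
print(math.isclose(1e-12, 0.0))                 # False: a relative tolerance alone fails near zero
print(math.isclose(1e-12, 0.0, abs_tol=1e-9))   # True: an absolute floor handles values near zero
```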

What example demonstrates the dangers of round-off errors?

-The 1991 incident with the Patriot missile system illustrates how round-off errors in time calculation led to a failure to intercept an incoming missile.
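The arithmetic behind the Patriot failure fits in a few lines. This sketch follows the commonly cited account of the incident rather than this summary: the clock counted integer tenths of a second, and 1/10 was stored in a 24-bit register chopped to 23 bits after the binary point. Strictly, that register was fixed point rather than IEEE 754, but the failure mode is the same: 0.1 has no exact binary representation, and the error grows with the tick count. Python and the names below are illustrative.

```python
import math

# Assumptions from the commonly cited account of the failure (not from this summary):
# the clock counted integer tenths of a second, and 1/10 was held in a 24-bit
# register chopped (truncated, not rounded) to 23 bits after the binary point.
FRACTION_BITS = 23
TICKS_PER_SECOND = 10

stored_tenth = math.floor(0.1 * 2**FRACTION_BITS) / 2**FRACTION_BITS
error_per_tick = 0.1 - stored_tenth            # seconds lost on every tick


def clock_drift(hours: float) -> float:
    """Timing error in seconds when time = ticks * stored_tenth after `hours` of uptime."""
    ticks = hours * 3600 * TICKS_PER_SECOND
    return ticks * error_per_tick


print(f"error per tick: {error_per_tick:.2e} s")      # 9.54e-08 s
print(f"drift after 100 h: {clock_drift(100):.2f} s")  # 0.34 s
```

An error below a tenth of a microsecond per tick becomes about a third of a second after 100 hours (3.6 million ticks) of continuous operation.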

What are some best practices for using floating point numbers?

-Best practices include avoiding floating point when possible, using scaled integers, implementing special value checks, and utilizing approximate equality for comparisons.
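Two of these practices, scaled integers and special-value checks, can be sketched together. This assumes Python; the 100 ms tick, the hundredths scale, and the names `format_uptime` and `to_centi` are hypothetical choices for illustration.

```python
import math

TICKS_PER_SECOND = 10   # hypothetical 100 ms timer tick


def format_uptime(ticks: int) -> str:
    """Format an integer tick count as h:mm:ss.t using exact integer arithmetic only."""
    seconds, tenths = divmod(ticks, TICKS_PER_SECOND)
    minutes, seconds = divmod(seconds, 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}.{tenths}"


def to_centi(reading: float) -> int:
    """Scale a floating-point reading to integer hundredths, rejecting special values."""
    if not math.isfinite(reading):           # NaN, +inf, -inf
        raise ValueError(f"cannot scale special value {reading!r}")
    # round() rather than int(): the product can land just below the intended integer.
    return round(reading * 100)


print(format_uptime(100 * 3600 * TICKS_PER_SECOND))   # 100:00:00.0
print(to_centi(19.99))                                # 1999
```

Because uptime is kept as an integer count of ticks, 100 hours formats exactly with no drift; floating point appears only where an external reading is scaled, which is where NaN and infinity are rejected.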

What alternative methods can be used instead of floating point arithmetic?

-Alternatives include Binary Coded Decimal (BCD), fixed-point arithmetic, and using integers for precise calculations.
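A minimal sketch of binary fixed-point arithmetic in Q16.16 format (16 integer bits, 16 fraction bits), assuming Python; the format and the function names are hypothetical choices. Python integers never overflow, so a C version would also need a 64-bit intermediate for the product.

```python
FRAC_BITS = 16              # hypothetical Q16.16 layout: 16 integer bits, 16 fraction bits
ONE = 1 << FRAC_BITS        # the fixed-point representation of 1.0


def to_fixed(x: float) -> int:
    """Convert to Q16.16 by scaling and rounding to the nearest representable step."""
    return round(x * ONE)


def to_float(a: int) -> float:
    """Convert a Q16.16 value back to a float for display."""
    return a / ONE


def fixed_mul(a: int, b: int) -> int:
    """Multiply two Q16.16 values: the raw product has 32 fraction bits, so drop 16."""
    return (a * b) >> FRAC_BITS     # arithmetic shift, i.e. rounds toward -infinity


def fixed_add(a: int, b: int) -> int:
    """Add two Q16.16 values: same scale, so this is plain integer addition."""
    return a + b


x, y = to_fixed(1.5), to_fixed(2.25)
print(to_float(fixed_mul(x, y)), to_float(fixed_add(x, y)))   # 3.375 3.75
```

Fixed point gives uniform, predictable resolution (2^-16 here), but a binary fraction still cannot hold 0.1 exactly; when decimal values must be exact, a decimal scale such as integer cents, or BCD, fits better.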

What should developers be aware of regarding NaN values?

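-NaN values propagate through calculations, so a single invalid operation can silently corrupt every result derived from it. Comparisons involving NaN also behave unexpectedly: ==, <, <=, > and >= all return false, even when a NaN is compared with itself, while != returns true.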
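A short sketch of NaN propagation and NaN comparisons, assuming Python, whose floats follow IEEE 754 semantics for these operations. The `max` line shows a practical consequence: the builtin returns a different answer depending on argument order when one argument is NaN.

```python
import math

nan = float("nan")

x = 0.0 * float("inf")        # an invalid operation yields NaN instead of raising
y = (x + 1.0) * 2.0           # NaN propagates through every later operation
print(math.isnan(x), math.isnan(y))          # True True

print(nan == nan, nan < 1.0, nan >= 1.0)     # False False False
print(nan != nan)                            # True: != is the one comparison that holds

# Order-dependent result: max() keeps its first argument unless a later one compares greater.
print(max(nan, 1.0), max(1.0, nan))          # nan 1.0

# Check for special values before trusting a result.
if math.isnan(y):
    print("result is NaN; rejecting it")
```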
