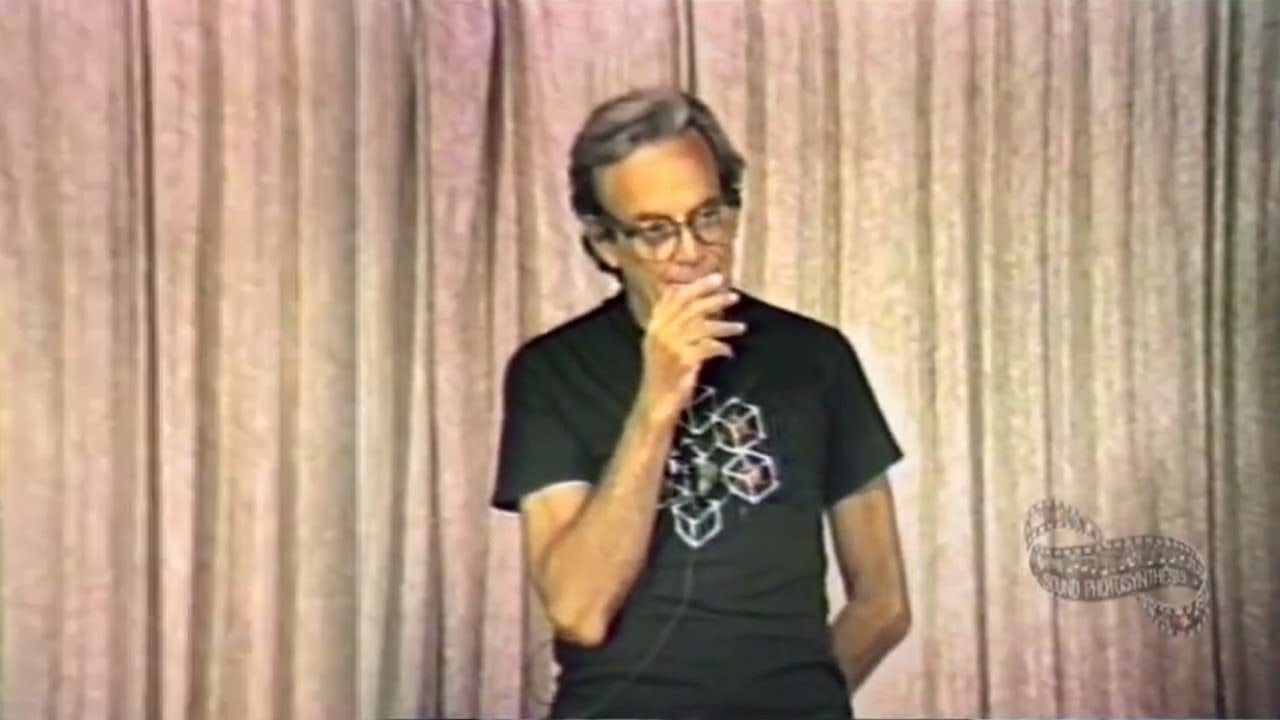

How we teach computers to understand pictures | Fei-Fei Li

Summary

TL;DR: Fei-Fei Li discusses advancements in computer vision and artificial intelligence, highlighting the challenges of teaching machines to interpret visual information like humans. Through her work with Stanford's Vision Lab and the ImageNet project, Li illustrates how vast data sets help train computers to recognize objects, generate sentences, and understand complex visual scenes. Despite progress, machines still struggle with deeper comprehension. Li envisions a future where computers assist in healthcare, safety, and exploration, emphasizing the potential of AI to improve human life by augmenting our ability to see and understand the world.

Takeaways

- 👶 A three-year-old child can easily describe what they see in photos, demonstrating how natural it is for humans to interpret visual information.

- 🧠 Despite technological advancements, computers still struggle to interpret visual data in the way humans do because they lack true understanding.

- 🚗 Computer vision is essential for applications like self-driving cars, which need to differentiate between various objects to function safely.

- 👁️ Vision is not just about the eyes but involves complex brain processing, which has evolved over millions of years.

- 🔬 Fei-Fei Li's research at Stanford's Vision Lab focuses on teaching computers to see and understand like humans through computer vision and machine learning.

- 🐱 Even simple object recognition is challenging for computers because objects like cats vary almost endlessly in appearance, positioning, and context.

- 📊 The ImageNet project, launched in 2007, created a massive dataset of labeled images to help train computer vision algorithms, drawing on millions of images sourced from the internet.

- 💡 The combination of big data (ImageNet) and convolutional neural networks (a type of machine learning algorithm) has led to significant progress in object recognition.

- 🧩 Computer vision algorithms have evolved from recognizing individual objects to generating human-like sentences that describe entire scenes.

- 🤖 Although there have been advancements, current AI still struggles with more nuanced understanding, like context, emotions, or cultural significance in images.

Q & A

What is the main task that a three-year-old child is an expert at, according to Fei-Fei Li?

- A three-year-old child is an expert at making sense of what they see, describing the world based on visual perception.

What is the current limitation of advanced machines and computers, despite technological progress?

- Despite technological progress, advanced machines and computers still struggle with understanding and interpreting visual information like humans do.

Why is it difficult for computers to interpret visual information, such as distinguishing a crumpled paper bag from a rock on the road?

- It's difficult because computers do not naturally understand the meaning behind visual data. Cameras capture pixels, but those pixels lack the semantic meaning needed to interpret complex situations accurately.
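To make the point about pixels concrete, here is a minimal sketch in plain Python. The tiny grayscale grids and the helper `pixel_difference` are hypothetical illustrations, not anything shown in the talk. The sketch shifts the same small shape one pixel to the right and counts how many raw pixel values change, which suggests why comparing pixels directly says little about what an image contains.

```python
def pixel_difference(a, b):
    """Count positions where two equally sized grayscale images differ."""
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))

# A tiny 3x5 grayscale "image": a bright 2x2 shape on a dark background.
# (Illustrative values only; real photos have millions of pixels.)
shape = [
    [0, 9, 9, 0, 0],
    [0, 9, 9, 0, 0],
    [0, 0, 0, 0, 0],
]

# The same shape shifted one pixel to the right.
shifted = [[0] + row[:-1] for row in shape]

# To a person both grids show the same object; numerically they differ.
diff = pixel_difference(shape, shifted)
print(f"{diff} of {len(shape) * len(shape[0])} pixels changed")
```

The object is identical in both grids, yet a pixel-by-pixel comparison reports a difference. Recognizing "the same thing" requires something beyond the raw numbers.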

How does Fei-Fei Li's research aim to improve computer vision?

- Fei-Fei Li's research aims to teach computers to see and understand visual information by leveraging large datasets and machine learning algorithms, similar to how a child learns from real-world experiences.

What was the significance of the ImageNet project in advancing computer vision?

- The ImageNet project provided an extensive dataset of 15 million labeled images, enabling computers to learn from a vast range of visual examples and significantly improving the accuracy of object recognition algorithms.

Why did Fei-Fei Li emphasize the importance of providing computers with 'training data' similar to what a child experiences?

- She emphasized that instead of focusing solely on improving algorithms, it's crucial to expose computers to large quantities of real-world examples, just like a child who learns by seeing millions of images throughout early development.

What role did convolutional neural networks play in advancing computer vision?

- Convolutional neural networks, which are loosely inspired by the brain and built from layers of interconnected neuron-like nodes, became a breakthrough architecture in computer vision, enabling much better object recognition when trained on the massive data from ImageNet.
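As a rough illustration of the operation that gives convolutional neural networks their name, the sketch below slides a small kernel over a tiny grayscale image in plain Python. The functions `conv2d` and `relu`, the 4x4 image, and the hand-picked edge kernel are all assumptions made for this example. A trained network learns its kernel values from data such as ImageNet and stacks many such layers, which this sketch does not attempt.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and return
    the weighted sum at each position. Deep learning libraries call this
    'convolution', although strictly it is cross-correlation."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out


def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hypothetical 4x4 image: dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
# In a real CNN these weights are learned, not chosen by hand.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(conv2d(image, kernel))
for row in feature_map:
    print(row)
```

The resulting feature map is nonzero only in the middle column, where the dark region meets the bright one. That is the sense in which one layer detects a simple local pattern for later layers to combine.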

What limitations still exist in current computer vision systems, as demonstrated in the TED talk?

- Current computer vision systems still make mistakes, such as mistaking a toothbrush for a baseball bat or misinterpreting artistic images. These limitations show that computers are far from understanding the world with the nuance and depth of human perception.

How does Fei-Fei Li envision the future of visual intelligence in machines?

- She envisions a future where machines collaborate with humans, assisting in tasks like diagnosing patients, navigating disaster zones, and discovering new materials. Machines with visual intelligence will enhance human capabilities in ways previously unimaginable.

What example does Fei-Fei Li give to illustrate the deeper understanding that computers currently lack in visual perception?

- She gives the example of a picture of her son Leo with a cake. While a computer can identify objects like 'a person and a cake,' it lacks the deeper context, such as knowing the cake is an Italian Easter cake or understanding the boy's emotional connection to his shirt, which was a gift.

Browse More Related Videos

Introduction to Computer Vision: Image and Convolution

Capire l'intelligenza artificiale con la filosofia: conversazione con Cosimo Accoto

Computer Vision Explained in 5 Minutes | AI Explained

Richard Feynman: Can Machines Think?

Introduction to Artificial Intelligence

How Computer Vision Applications Work
