M1. L3. Measuring Computer Power

Summary

TLDR: This script delves into the fundamental components of computer systems, highlighting the roles of the CPU, input and output devices, and storage. It explains the CPU's internal workings, including the ALU, cache, registers, and buses, and the significance of the clock in timing operations. The Von Neumann architecture and its impact on programmable computers are discussed, along with the CISC and RISC instruction sets. The binary system's role in digital computing is explored, as well as the evolution of data storage and addressing. The script also touches on the challenges of artificial intelligence, such as emotion recognition and independent decision-making, and the computer's reliance on human input for complex tasks.

Takeaways

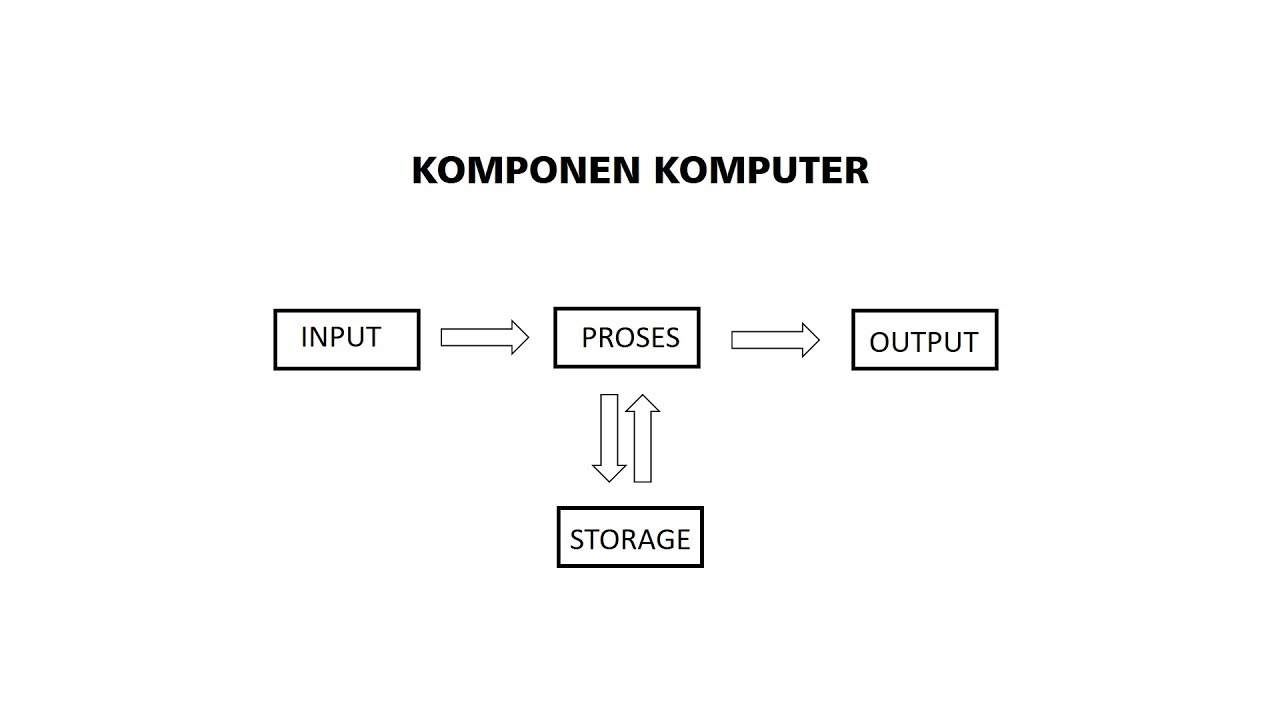

- 🏠 Computers are built with a plan, similar to a house, and consist of basic components like input, storage, output devices, and the CPU.

- 🔢 The CPU, despite being a small chip, contains vital components such as the ALU, cache, registers, buses, and clock, which are crucial for system control.

- 📈 The ALU in the CPU performs all arithmetic and logical operations, while the cache is high-speed RAM that stores frequently used data and instructions.

- 🗂️ Registers within the CPU are high-speed memory sections, including general and special purpose registers, that are close to the processor for quick data access.

- 🚦 Buses within the CPU act as internal connections, with address buses for memory addresses, data buses for data, and control buses for control signals.

- ⏱️ The CPU's clock regulates the timing of all processes, with its speed measured in hertz (cycles per second), indicating how many processing cycles the CPU completes each second.

- 🛠️ The Von Neumann architecture, proposed by John von Neumann, is the foundation of modern computing: program instructions are stored in the same memory as data.

- 📚 Special registers in the Von Neumann architecture, like the PC, CIR, MAR, MDR, and ACC, play specific roles in instruction fetching, decoding, and execution.

- 🛠️ Instruction sets define the commands a processor can execute, with CISC and RISC being two categories, where CISC focuses on complex instructions and RISC on simpler, more efficient ones.

- 🔢 Digital computing is based on binary, with bits representing on/off states, forming the basis of all modern computing, including images, music, and movements.

- 📊 A byte, traditionally 8 bits, is the standard unit for data representation, with larger units like kilobytes, megabytes, and gigabytes used for larger data sets.

Q & A

What are the basic components of a computer system?

-The basic components of a computer system include input devices, storage devices, output devices, and the CPU.

What is the role of the CPU in a computer system?

-The CPU, or Central Processing Unit, is a small chip that performs most of the processing in a computer. It contains important components like the control unit, arithmetic logic unit, registers, cache, buses, and clock, which together provide system control.

What is the function of the Arithmetic Logic Unit (ALU) in the CPU?

-The ALU performs all the mathematical and logical operations within the CPU, including decision-making tasks.
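
As a rough illustration of the kinds of operations described above, here is a minimal sketch of a toy ALU in Python. The operation names (`ADD`, `SUB`, `AND`, `OR`, `XOR`, `LT`) and the 8-bit width are assumptions chosen for the example, not something the video specifies.

```python
def alu(op: str, a: int, b: int, width: int = 8) -> int:
    """Toy ALU: apply one arithmetic or logical operation to two operands.

    Results are truncated to `width` bits, the way a fixed-size register
    would hold them (hypothetical 8-bit default).
    """
    mask = (1 << width) - 1
    results = {
        "ADD": a + b,        # arithmetic
        "SUB": a - b,
        "AND": a & b,        # bitwise logic
        "OR": a | b,
        "XOR": a ^ b,
        "LT": int(a < b),    # comparison, the basis of decision-making
    }
    if op not in results:
        raise ValueError(f"unknown operation: {op}")
    return results[op] & mask
```

For instance, `alu("ADD", 200, 100)` wraps around to 44 because 300 does not fit in 8 bits, while `alu("LT", 3, 5)` returns 1 to signal "true".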

What is cache and why is it important in a CPU?

-Cache is a small, high-speed RAM built directly into the processor. It holds data and instructions that the processor is likely to use, reducing the time needed to access frequently used data.
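
The benefit of keeping frequently used data close to the processor can be sketched with a tiny simulated cache. This is only an illustration: the class name `TinyCache`, the capacity of 4 entries, and the least-recently-used eviction rule are all assumptions, since the video does not describe a replacement policy.

```python
from collections import OrderedDict


class TinyCache:
    """Toy cache in front of a slower 'main memory' list (illustrative only)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.lines = OrderedDict()  # address -> cached value
        self.hits = 0
        self.misses = 0

    def read(self, address: int, memory: list) -> int:
        if address in self.lines:
            # Hit: the value is already held close to the processor.
            self.hits += 1
            self.lines.move_to_end(address)
            return self.lines[address]
        # Miss: fetch from main memory and keep a copy for next time.
        self.misses += 1
        value = memory[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used entry
        return value


memory = list(range(100, 116))      # pretend main memory with 16 locations
cache = TinyCache(capacity=4)
for address in [0, 1, 2, 0, 1, 2, 0, 1, 2]:
    cache.read(address, memory)
```

With this access pattern, only the first read of each address goes to main memory; the six repeat reads are served from the cache.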

What are registers in the context of the CPU, and what is their purpose?

-Registers are small sections of high-speed memory found inside the CPU. They store data and instructions close to the processor, facilitating faster access and processing.

How do buses function within a CPU?

-Buses are internal high-speed connections that transfer data, memory addresses, and control signals between the CPU and other components, similar to roads for cars.

What is the significance of the clock in a CPU and how is it measured?

-The clock in a CPU keeps time for all processes, ensuring they are precisely timed. It is measured in hertz, which are cycles per second, and indicates how many processing cycles the CPU can complete in a second.
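
Because hertz means cycles per second, the length of a single cycle is simply the reciprocal of the clock frequency. A small worked sketch follows; the function names and the 3.2 GHz figure are only examples, not values from the video.

```python
def clock_period_ns(frequency_hz: float) -> float:
    """Duration of one clock cycle in nanoseconds (period = 1 / frequency)."""
    return 1e9 / frequency_hz


def cycles_in(frequency_hz: float, seconds: float) -> float:
    """Number of clock cycles completed in the given time."""
    return frequency_hz * seconds


period = clock_period_ns(3.2e9)      # a 3.2 GHz clock ticks every 0.3125 ns
cycles = cycles_in(3.2e9, 0.001)     # 3.2 million cycles in one millisecond
```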

What is the Von Neumann architecture and its significance in computing?

-The Von Neumann architecture is a design where program instructions and data are stored together in memory. It allows computers to be programmable and to perform a wide range of tasks, limited only by the programmer's imagination.

What are the five special registers in the Von Neumann architecture?

-The five special registers are the Program Counter (PC), Current Instruction Register (CIR), Memory Address Register (MAR), Memory Data Register (MDR), and the Accumulator (ACC).

What is the difference between CISC and RISC in terms of instruction sets?

-CISC (Complex Instruction Set Computer) aims to complete tasks in fewer lines of assembly code, often using microcode to implement complex instructions. RISC (Reduced Instruction Set Computer) uses simpler instructions, each designed to execute in roughly one clock cycle. RISC programs need more lines of code, but the processor design is often simpler and more efficient.
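
The trade-off can be sketched by multiplying two values held in memory in both styles. The instruction names used here (`MULT`, `LOAD`, `PROD`, `STORE`) and the two tiny interpreters are hypothetical, made up for illustration rather than taken from any real instruction set.

```python
def run_cisc(memory: list) -> int:
    """CISC style: one complex instruction reads memory, multiplies, writes back."""
    program = [("MULT", 0, 1)]
    for _op, dst, src in program:
        memory[dst] = memory[dst] * memory[src]
    return len(program)  # number of instructions executed


def run_risc(memory: list) -> int:
    """RISC style: the same work split into simple register-based steps."""
    program = [
        ("LOAD", "A", 0),     # register A <- memory[0]
        ("LOAD", "B", 1),     # register B <- memory[1]
        ("PROD", "A", "B"),   # A <- A * B
        ("STORE", "A", 0),    # memory[0] <- A
    ]
    regs = {}
    for op, x, y in program:
        if op == "LOAD":
            regs[x] = memory[y]
        elif op == "PROD":
            regs[x] = regs[x] * regs[y]
        elif op == "STORE":
            memory[y] = regs[x]
    return len(program)


cisc_memory = [6, 7]
risc_memory = [6, 7]
cisc_count = run_cisc(cisc_memory)   # 1 instruction
risc_count = run_risc(risc_memory)   # 4 instructions
```

Both versions leave the product in memory location 0; the CISC-style program is shorter, while each RISC-style step is simple enough to be handled by much simpler hardware.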

How does the binary system form the basis of all modern computing?

-Modern computing is based on the binary system, which uses only two states, represented by 0 and 1. These states are used to represent all data in a computer, from images and music to text and commands.
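
To make this concrete, the sketch below turns a short piece of text into the 0s and 1s a computer would store, and back again. The helper names `to_bits` and `from_bits` are made up for this example, and UTF-8 is assumed as the text encoding.

```python
def to_bits(text: str) -> str:
    """Encode text as UTF-8 and show each byte as eight binary digits."""
    return " ".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def from_bits(bits: str) -> str:
    """Reverse of to_bits: parse space-separated 8-bit groups back into text."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")


encoded = to_bits("Hi")        # "01001000 01101001"
decoded = from_bits(encoded)   # "Hi"
```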

What is a byte and how did it become the standard unit of data representation?

-A byte is a standard unit of data representation consisting of eight bits. It became the standard as computers started using eight bits to represent characters. The spelling 'byte' was deliberately chosen over 'bite' so that it would not be accidentally mistaken for 'bit'.
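
A short sketch of what eight bits buy you, and of how byte counts scale up to the larger units. It assumes the binary convention in which each unit is 1024 times the previous one; some contexts use 1000 instead. The function names are illustrative.

```python
BITS_PER_BYTE = 8
UNITS = ["B", "KB", "MB", "GB"]


def distinct_values(bits: int) -> int:
    """How many different patterns a group of bits can represent."""
    return 2 ** bits


def format_size(num_bytes: int) -> str:
    """Express a byte count in the largest sensible unit (1024-based, an assumption)."""
    size = float(num_bytes)
    for unit in UNITS:
        if size < 1024 or unit == UNITS[-1]:
            return f"{size:g} {unit}"
        size /= 1024


values_per_byte = distinct_values(BITS_PER_BYTE)   # 256 patterns, 0 to 255
```

For example, `format_size(1536)` gives `"1.5 KB"` and `format_size(1024 ** 3)` gives `"1 GB"`.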

Why are powers of two important in memory addressing and storage devices?

-Powers of two are important in memory addressing and storage devices because each additional address bit doubles the number of possible storage locations: n address bits can select 2^n locations. Sizing memory in powers of two uses every available address and avoids address patterns that point to locations that do not exist.
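
The doubling can be checked with a few lines of arithmetic. This is a minimal sketch with illustrative function names.

```python
def addressable_locations(address_bits: int) -> int:
    """Number of distinct locations that n address bits can select (2 ** n)."""
    return 2 ** address_bits


def address_bits_needed(locations: int) -> int:
    """Smallest number of address bits able to reach every location."""
    return max(1, (locations - 1).bit_length())


# Each extra address bit doubles the number of reachable locations.
table = {bits: addressable_locations(bits) for bits in (8, 9, 10, 16)}

# A memory of 1000 locations still needs 10 address bits,
# leaving 24 of the 1024 possible addresses pointing at nothing.
unused = addressable_locations(address_bits_needed(1000)) - 1000
```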

What is the challenge for computers in terms of emotion and decision-making?

-Computers struggle with showing emotion and making independent decisions because they rely on binary logic and are programmed based on specific instructions. They lack the intuitive understanding and emotional context that humans possess.

What is the fetch-decode-execute cycle in computing?

-The fetch-decode-execute cycle is the process where instructions are fetched from RAM, decoded by the CPU to understand them, and then executed. This cycle is fundamental to how computers process instructions.
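
The cycle can be sketched as a toy simulator that uses the five special registers named earlier (PC, CIR, MAR, MDR, ACC). The four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout are assumptions made for this illustration, not a description of a real processor.

```python
def run(memory: list) -> int:
    """Run a toy program stored in the same memory as its data.

    Instructions are (opcode, operand) tuples; data cells are plain integers.
    Returns the final accumulator value.
    """
    pc = 0    # Program Counter: address of the next instruction
    acc = 0   # Accumulator: holds intermediate results
    while True:
        # Fetch: copy the instruction at address PC into the CIR.
        mar = pc              # Memory Address Register
        mdr = memory[mar]     # Memory Data Register
        cir = mdr             # Current Instruction Register
        pc += 1
        # Decode: split the instruction into opcode and operand.
        opcode, operand = cir
        # Execute: carry out the operation.
        if opcode == "HALT":
            return acc
        mar = operand
        if opcode == "LOAD":
            mdr = memory[mar]
            acc = mdr
        elif opcode == "ADD":
            mdr = memory[mar]
            acc += mdr
        elif opcode == "STORE":
            mdr = acc
            memory[mar] = mdr
        else:
            raise ValueError(f"unknown opcode: {opcode}")


# Addresses 0-3 hold the program; addresses 4-6 hold data.
memory = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 7, 35, 0]
result = run(memory)   # 42, which is also written to memory[6]
```

Storing the program in the same list as its data mirrors the stored-program idea behind the Von Neumann architecture.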

How is a computer's speed measured and what factors influence it?

-A computer's clock speed is the number of cycles it can complete in a second, measured in hertz. Overall performance depends on clock speed together with other factors, including cache size and the number of cores in the CPU.
