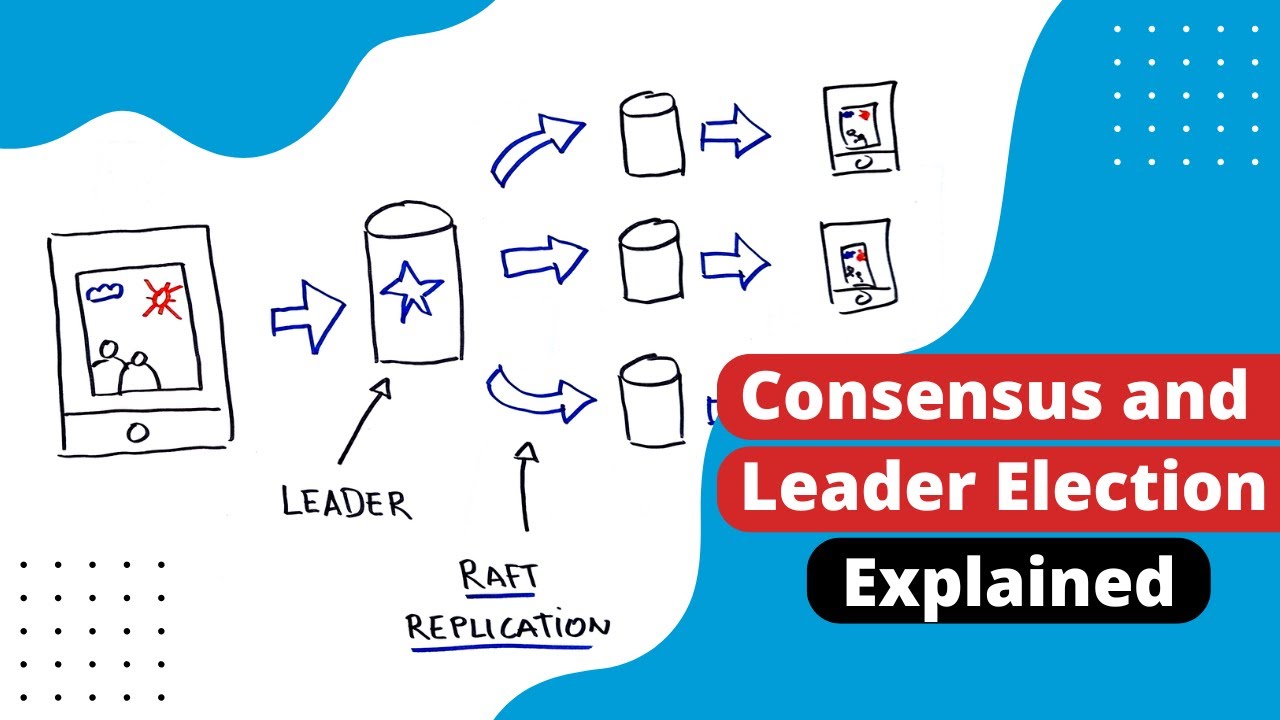

Mastering the Raft Consensus Algorithm: A Comprehensive Tutorial in Distributed Systems

Summary

TLDRThis engaging video introduces the Raft consensus algorithm, crucial for ensuring reliability and consistency in distributed systems, akin to a team of servers working in unison. Through an analogy of overworked interns, it underscores the inevitability of server failures and the catastrophic impact on businesses, proposing the deployment of multiple service instances as a remedy. Highlighting the challenge of keeping data synchronized across replicas, it distinguishes Raft for its simplicity and efficiency over other consensus algorithms like Paxos and Proof of Work. The video delves into Raft's mechanisms, including leader election and log replication, illustrating how it achieves consensus and maintains a strong consistency model, making it a favored choice for distributed systems and databases.

Takeaways

- 📊 Servers, like overworked interns, are prone to failure, which can be devastating for businesses, leading to lost customers and revenue.

- 🔧 Running multiple instances of a service across different regions can mitigate the impact of failures, ensuring zero interruption.

- 🛠️ Consensus algorithms are essential for maintaining sync and consistency across replicas in a distributed system, preventing data discrepancies.

- 💻 Raft is an understandable consensus algorithm that simplifies the coordination of nodes to achieve consensus, making it easier to implement than Paxos.

- 📖 Raft was introduced as a more approachable alternative to Paxos, aiming to solve consensus through single-leader election and log replication.

- 📚 State machine replication across nodes ensures that if they start with the same state and perform the same operations, they will maintain consistency.

- 📬 Raft employs two types of RPCs for in-cluster communication: RequestVotes for leader election and AppendEntries for log replication and as a heartbeat mechanism.

- ⏰ Raft's leader election process involves randomized election timeouts and requires a majority vote from followers, promoting fairness and reducing the risk of split votes.

- 📈 Log replication in Raft ensures all nodes agree on a sequence of values, with the leader committing entries only after a majority of followers have appended them.

- ⚡️ Raft supports linearizability, providing a strong consistency model, but scalability can be a challenge due to the single-leader bottleneck.

Q & A

What is a distributed system and what problem does it introduce related to consensus?

-A distributed system is one where there are multiple nodes or replicas working together to provide a service. The problem this introduces related to consensus is making sure all the nodes stay in sync with the latest data and agree on the system state.

What is the paxos algorithm and what are some of its drawbacks?

-The paxos algorithm is a consensus algorithm commonly used in distributed systems. It is used by systems like Apache Zookeeper and Google's Chubby lock service. Some drawbacks are that it has a reputation for being difficult to understand and implement.

How does Raft tackle the consensus problem differently than Paxos?

-Raft uses leader election and log replication. A single leader node receives requests from clients and replicates log entries to follower nodes. Once a majority of followers have written the log entry, it can be committed.

What are the different states a node can take on in a Raft cluster?

-The different states are: follower, candidate, and leader. All nodes start as followers. If a follower times out without hearing from a leader, it becomes a candidate and attempts to get elected leader.

What types of RPC messages does Raft use for communication?

-Raft uses two types of RPC messages: RequestVote for electing a new leader and AppendEntries for the leader to replicate log entries and send heartbeats.

How does a Raft leader commit a new log entry?

-The leader replicates the new log entry to followers using AppendEntries RPCs. Once a majority of followers have appended the entry, the leader commits it and applies it to its state machine.

What are some benefits of the Raft consensus algorithm?

-Benefits include understandability, relative simplicity in implementation, and linearizability guarantees from having a single leader.

What are some drawbacks of Raft consensus?

-A drawback is potential scalability issues from having all requests go through a single leader node. The leader also requires acknowledgement from a majority of followers for every operation.

What are some examples of systems that use Raft consensus?

-Examples include CockroachDB, MongoDB, Consul, Nomad, and Vault by HashiCorp.

Where can I find open-source Raft implementations to use?

-New Raft by eBay (C++) and Hashicorp's Raft (Go) are two open-source options linked in the video description.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

5.0 / 5 (0 votes)